Our new preprint is out! We present the Heart Rate Discrimination (HRD) Task, a novel psychophysical Bayesian method for estimating the accuracy, bias, and precision of interoceptive beliefs! https://www.biorxiv.org/content/10.1101/2021.02.18.431871v1 Preview thread below, plus all experiment code.

First, the TL;DR: we developed and tested a novel Bayesian approach to estimating cardiac beliefs in ~200 participants. Compared to exteroception, we find that cardiac beliefs are negatively biased, less precise, and show poorer metacognition. All data + code:

https://github.com/embodied-computation-group/CardioceptionPaper#readme

Current methods for measuring cardiac interoception are mired in controversy. In particular, for nearly a decade the role of subjective beliefs about the heart rate in these measures has been a subject of intense debate.

However, we think this framing is a bit misguided: top-down beliefs about whether your heart is beating fast or slow are a core part of interoceptive phenomenology. To measure these beliefs, we devised a novel two-interval forced-choice (2IFC) task.

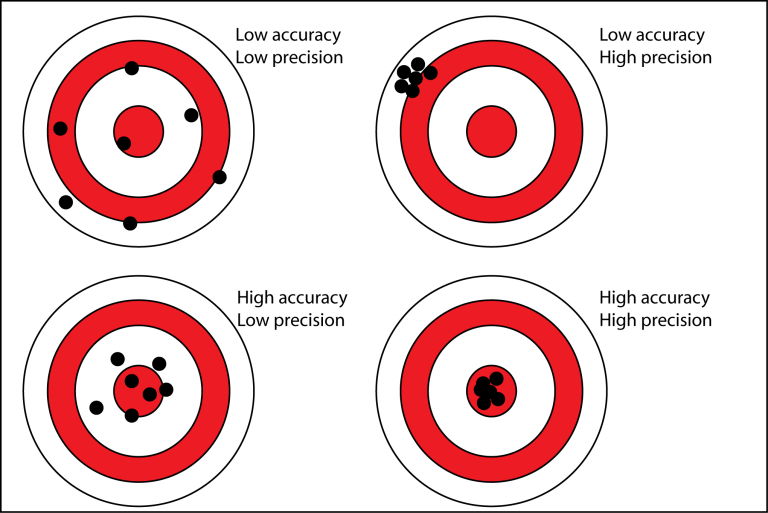

In our task, participants listen quietly to their cardiac sensations and then decide whether an auditory feedback tone is faster or slower than their heartbeat.
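The sketch below makes the trial structure concrete. It turns a recorded heart rate and a stimulus intensity (in delta-BPM) into the onset times of an evenly spaced feedback tone sequence. The function names and the fixed-duration, isochronous tone sequence are illustrative assumptions. The actual task code is in the repository linked above.

```python
from __future__ import annotations


def feedback_tone_bpm(recorded_hr_bpm: float, delta_bpm: float) -> float:
    """Feedback tone rate: the recorded heart rate shifted by the stimulus intensity."""
    return recorded_hr_bpm + delta_bpm


def tone_onsets(tone_bpm: float, duration_s: float) -> list[float]:
    """Onset times (in seconds) of evenly spaced tones at `tone_bpm` within `duration_s`."""
    if tone_bpm <= 0:
        raise ValueError("tone_bpm must be positive")
    interval_s = 60.0 / tone_bpm  # seconds between consecutive tones
    n_tones = int(duration_s / interval_s)
    return [i * interval_s for i in range(n_tones)]


# Hypothetical trial: heart rate 70 BPM, tone presented 10 BPM slower (delta = -10).
rate = feedback_tone_bpm(70.0, -10.0)
print(rate, tone_onsets(rate, 5.0))  # 60.0 [0.0, 1.0, 2.0, 3.0, 4.0]
```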

Behind the scenes, we use an adaptive Bayesian procedure ('psi') to determine the "point of subjective equality" between the true HR and the feedback BPM. This yields threshold and slope measures in units of "delta-BPM".
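For readers unfamiliar with Psi, here is a minimal sketch of the general idea (Kontsevich & Tyler, 1999). The procedure keeps a posterior over a grid of threshold and slope values. On each trial it presents the intensity that minimises the expected entropy of the updated posterior. This is not the cardioception implementation, and several choices below are assumptions made for illustration:

- a cumulative-normal psychometric function with a fixed 2% lapse rate;
- the particular grid ranges;
- intensity defined as feedback BPM minus heart rate, with a "faster" response as the modelled outcome.

```python
import math
import random


def phi(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_faster(x: float, alpha: float, sigma: float, lapse: float = 0.02) -> float:
    """P('faster' response) at intensity x (delta-BPM), cumulative-normal psychometric function."""
    return lapse + (1.0 - 2.0 * lapse) * phi((x - alpha) / sigma)


def entropy(p: list) -> float:
    """Shannon entropy (nats) of a discrete distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


class GridPsi:
    """Grid-based Psi method: posterior over (threshold alpha, spread sigma)."""

    def __init__(self, alphas, sigmas, intensities, lapse=0.02):
        self.params = [(a, s) for a in alphas for s in sigmas]
        self.intensities = list(intensities)
        self.posterior = [1.0 / len(self.params)] * len(self.params)  # flat prior
        # Precompute P('faster') for every (intensity, parameter) pair.
        self.lik = [[p_faster(x, a, s, lapse) for a, s in self.params]
                    for x in self.intensities]

    def next_intensity(self) -> float:
        """Intensity that minimises the expected entropy of the updated posterior."""
        best_x, best_h = None, float("inf")
        for x, lik in zip(self.intensities, self.lik):
            p_yes = sum(w * l for w, l in zip(self.posterior, lik))
            expected_h = 0.0
            for p_resp, resp_lik in ((p_yes, lik), (1.0 - p_yes, [1.0 - l for l in lik])):
                post = [w * l / p_resp for w, l in zip(self.posterior, resp_lik)]
                expected_h += p_resp * entropy(post)
            if expected_h < best_h:
                best_x, best_h = x, expected_h
        return best_x

    def update(self, x: float, said_faster: bool) -> None:
        """Bayesian update of the posterior after observing a response at intensity x."""
        lik = self.lik[self.intensities.index(x)]
        unnorm = [w * (l if said_faster else 1.0 - l) for w, l in zip(self.posterior, lik)]
        total = sum(unnorm)
        self.posterior = [u / total for u in unnorm]

    def threshold_estimate(self) -> float:
        """Posterior mean of the threshold (point of subjective equality, delta-BPM)."""
        return sum(w * a for w, (a, _) in zip(self.posterior, self.params))


# Simulated observer with an illustrative true threshold of -7 and sigma of 5.
random.seed(1)
psi = GridPsi(alphas=range(-30, 31, 2), sigmas=[1, 2, 4, 6, 8, 12],
              intensities=range(-40, 41, 2))
for _ in range(60):
    x = psi.next_intensity()
    psi.update(x, random.random() < p_faster(x, alpha=-7.0, sigma=5.0))
print(round(psi.threshold_estimate(), 1))
```

Precomputing the likelihood table keeps each trial cheap: only weighted sums over the grid are needed when choosing the next intensity.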

This threshold measure tells us about the accuracy and bias of HR beliefs. The closer it is to zero, the more accurate the belief. Its sign gives the direction of bias (positive for over-estimation, negative for under-estimation), and its magnitude gives the degree. The slope of this function tells us about the precision, or uncertainty, of those beliefs.
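The sketch below shows a cumulative-normal psychometric function in delta-BPM units, plus the mapping from threshold to the heart rate a participant implicitly treats as "equal" to their heartbeat. It rests on three assumptions:

- Intensity is feedback BPM minus recorded HR.
- A negative threshold therefore means the tone must be slower than the heart to feel equal (underestimation).
- Precision is expressed as a spread parameter sigma, where larger values mean a flatter, less precise function. The paper's exact parameterisation may differ.

```python
import math


def p_faster(delta_bpm: float, threshold: float, sigma: float) -> float:
    """P('tone faster than heart') under a cumulative-normal psychometric function."""
    return 0.5 * (1.0 + math.erf((delta_bpm - threshold) / (sigma * math.sqrt(2.0))))


def believed_hr(recorded_hr: float, threshold: float) -> float:
    """Tone rate judged equal to the heartbeat, i.e. recorded HR + threshold (PSE)."""
    return recorded_hr + threshold


def bias_label(threshold: float) -> str:
    """The sign of the threshold gives the direction of bias; its magnitude, the size."""
    if threshold < 0:
        return "underestimation"
    if threshold > 0:
        return "overestimation"
    return "unbiased"


# Hypothetical participant: HR of 70 BPM, threshold of -7 delta-BPM.
print(believed_hr(70.0, -7.0), bias_label(-7.0))  # 63.0 underestimation
# A flatter function (larger sigma) stays closer to chance 5 BPM above threshold.
print(round(p_faster(-2.0, -7.0, 5.0), 3), round(p_faster(-2.0, -7.0, 10.0), 3))  # 0.841 0.691
```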

A nice feature of this design is that it enables us to implement a well-matched exteroceptive control condition, where participants listen to two tone sequences and decide if the second 'target' is faster/slower than the first 'reference'.
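Here is one way to see why the two conditions are directly comparable. In both, the judged sequence runs at a reference rate plus the same delta-BPM intensity. Only the reference differs: the heartbeat in one case, a reference tone sequence in the other. The `Trial` class and its fields are hypothetical and written only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    modality: str         # "intero" (reference = heartbeat) or "extero" (reference = tones)
    reference_bpm: float  # recorded heart rate or reference tone rate
    delta_bpm: float      # stimulus intensity, in the same units for both modalities

    @property
    def target_bpm(self) -> float:
        """Rate of the sequence the participant compares against the reference."""
        return self.reference_bpm + self.delta_bpm

    def correct_response(self) -> str:
        """Ground truth used to score accuracy (and, later, confidence)."""
        if self.delta_bpm == 0:
            raise ValueError("delta_bpm == 0 has no correct answer")
        return "faster" if self.delta_bpm > 0 else "slower"


intero = Trial("intero", reference_bpm=72.0, delta_bpm=-8.0)
extero = Trial("extero", reference_bpm=72.0, delta_bpm=-8.0)
print(intero.target_bpm, extero.target_bpm, intero.correct_response())  # 64.0 64.0 slower
```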

We can then use the same adaptive procedure to estimate exteroceptive accuracy, bias, and precision in the same units! This lets us compare how different groups or experimental manipulations influence specific interoceptive, exteroceptive, or general temporal parameters.

That all sounds great, but to test and validate the task, we conducted a test-retest experiment in 218 healthy participants, measuring HRD performance in two sessions separated by a 6-week interval. What did we find?

Examining the estimated psychometric functions, we find that interoceptive beliefs are negatively biased, meaning participants underestimate their resting HR by about 7 BPM on average. We also found substantially more inter-individual variance in interoceptive than in exteroceptive thresholds.
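Group-level bias and spread like this can be summarised from per-participant thresholds. The sketch below computes three things: the mean threshold, a one-sample t statistic against zero bias, and the ratio of interoceptive to exteroceptive variance. The numbers are made up purely for illustration and are not study data. The paper's actual statistical models are more involved.

```python
import math
import statistics


def summarize(thresholds: list) -> dict:
    """Mean, SD, and one-sample t statistic against zero bias."""
    n = len(thresholds)
    mean = statistics.mean(thresholds)
    sd = statistics.stdev(thresholds)
    return {"mean": mean, "sd": sd, "t_vs_zero": mean / (sd / math.sqrt(n))}


def variance_ratio(intero: list, extero: list) -> float:
    """Values > 1 mean more inter-individual variance in interoceptive thresholds."""
    return statistics.variance(intero) / statistics.variance(extero)


# Made-up thresholds (delta-BPM) for five hypothetical participants.
intero = [-10.0, -4.0, -7.0, -12.0, -2.0]
extero = [-1.0, 1.0, 0.0, -2.0, 2.0]
print(summarize(intero)["mean"], variance_ratio(intero, extero))  # -7.0 6.8
```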

We also find that interoceptive beliefs are substantially less precise than exteroceptive ones: participants exhibit consistently flatter slopes. Both effects replicate robustly 6 weeks later, at Session 2.

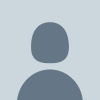

What about metacognitive insight? A strength of our task is that it collects many (~100) near-threshold trials in just 20 minutes, and our 2IFC design makes it well suited to estimating and comparing metacognition between modalities.

By fitting a repeated measures hierarchical model to confidence ratings and ground-truth accuracy, we see that participants are overall less confident for interoception, and show reduced metacognitive insight compared to exteroception. We again replicate this effect at Session 2.
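The hierarchical model used in the paper is not reproduced here. As a simpler, swapped-in illustration of what "metacognitive insight" quantifies, the sketch below computes the type-2 area under the ROC curve. This is the probability that a randomly chosen correct trial received higher confidence than a randomly chosen error, with ties counting half. A value of 0.5 means confidence carries no information about accuracy, and 1.0 means perfect separation.

```python
def type2_auroc(confidence: list, correct: list) -> float:
    """Nonparametric type-2 AUROC from trial-wise confidence and accuracy."""
    hits = [c for c, ok in zip(confidence, correct) if ok]
    errors = [c for c, ok in zip(confidence, correct) if not ok]
    if not hits or not errors:
        raise ValueError("need at least one correct and one incorrect trial")
    score = 0.0
    for h in hits:
        for e in errors:
            # Each (correct, error) pair scores 1 if ordered correctly, 0.5 if tied.
            score += 1.0 if h > e else 0.5 if h == e else 0.0
    return score / (len(hits) * len(errors))


# Hypothetical confidence ratings (0-100) and accuracy for six trials.
conf = [90, 75, 60, 55, 40, 30]
acc = [True, True, False, True, False, False]
print(type2_auroc(conf, acc))  # 8 of 9 pairs ordered correctly
```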

To sum up so far: compared to a matched exteroceptive control, our task finds that interoceptive HR beliefs are more negatively biased, less precise, and show reduced metacognitive sensitivity. Exciting!

But what about validity and reliability? One of our major aims with this task is that it "measures what it says on the tin", i.e. that it measures what we actually set out to measure. One worry could be that the task really just measures a general temporal estimation bias.

In that case, you would expect strong correlations between the interoceptive and exteroceptive conditions' perceptual and/or metacognitive parameters, in particular threshold. We tested this via robust cross-correlation analyses. As you can see, we found a very high degree of independence between modalities.
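The thread doesn't specify which robust correlation was used. As a stand-in, here is Spearman's rank correlation. It is insensitive to monotone transformations and less affected by outliers than Pearson's r. It could be applied to, for example, each participant's interoceptive and exteroceptive thresholds. Tied values receive average ranks.

```python
import math


def average_ranks(values: list) -> list:
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x: list, y: list) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: list, y: list) -> float:
    """Spearman's rho: the Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


print(spearman([1, 2, 3, 4], [1, 4, 9, 100]))  # 1.0 (monotone, not linear)
```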

Next, we wondered about "face validity". If our task measures beliefs about the HR, we would expect it to correlate with heartbeat counting accuracy ("iACC") scores. And indeed, we find a modest correlation, such that underestimation of the heart rate correlates with lower iACC!
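Heartbeat counting accuracy is commonly scored per trial as 1 - |recorded - counted| / recorded, then averaged across trials. The sketch below assumes that Schandry-style formulation, which may not match the paper's exact scoring. Under it, counting fewer beats than actually occurred (i.e. underestimating) lowers the score.

```python
def iacc(recorded: list, counted: list) -> float:
    """Mean heartbeat counting accuracy: 1 - |recorded - counted| / recorded per trial."""
    if len(recorded) != len(counted) or not recorded:
        raise ValueError("need matching, non-empty trial lists")
    scores = [1.0 - abs(r - c) / r for r, c in zip(recorded, counted)]
    return sum(scores) / len(scores)


# Hypothetical counting trials: recorded vs reported heartbeats.
print(iacc([25, 40], [20, 40]))
```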

Finally, we wanted to establish test-retest reliability. Are interoceptive thresholds stable across time points? This matters in particular for clinical and intervention research, where poor reliability can obscure treatment effects.

And indeed, we find that interoceptive reliability is "pretty good" [my own effect size yardstick there ;)] across the 6-week test-retest interval. Further, all effects reported in the paper are replicated across both sessions. There are some caveats with respect to slope; see the paper for details.
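The thread doesn't say which reliability coefficient was computed. As an illustrative assumption, the sketch below implements the Shrout & Fleiss ICC(2,1) from a participants × sessions table: two-way random effects, absolute agreement, single measurement. This coefficient penalises both noisy rank orderings and systematic shifts between sessions.

```python
def icc_2_1(scores: list) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    `scores` holds one row per participant and one column per session.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical thresholds at session 1 and session 2 for three participants.
print(icc_2_1([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]))  # 1.0 (perfect agreement)
print(icc_2_1([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]))  # a constant shift lowers it
```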

In all, we find these results extremely encouraging. The HRD can tease apart the unique sources of input that drive cardiac belief updating, enabling researchers to test specific hypotheses about specific interoceptive versus general inputs and abilities.

But this is just the beginning! Our road map will focus on: 1) causal manipulations of both HR and prior belief to disentangle how cardiac beliefs are formed, 2) extensive clinical studies to map and identify psychiatric markers, and 3) computational modelling of cardiac beliefs.

As always, you can engage interactively with ALL of our code and data, including fully interactive notebooks that go from basic trial-level preprocessing all the way to group-level model fitting and data visualization: https://github.com/embodied-computation-group/CardioceptionPaper#readme

Last but not least, a massive thanks to our team @visceral_mind, in particular our brilliant postdoc @legrandni who worked tirelessly over the past two years to bring this task to life, and our funders @lundbeckfonden and @AIAS_dk who make this research possible!

And there is more - in the coming weeks, we will be releasing the "python cardioception package", an open-source @psychopy repository that will allow you to install our task + other mainstream interoception tasks in a single "pip install cardioception" command!

Small disclaimer: some of the open code notebooks may not be fully online yet, so please be patient while we get everything ready. But these notebooks are cool: you can inspect each and every subject using beautiful HTML reports if you like!

Thanks also to our collaborators @cjcascio_phd @PaulPcf22 @HZiauddeen @neurodelia @Katlab_UCL @manos_tsakiris @KiraVibe and @AskDrJeg who are helping us to test a variety of clinical populations and drug manipulations using the task!

![image 3](https://pbs.twimg.com/media/Euk0gahXcAEfr-j.jpg)