Artificial general intelligence is risky by default.

(a thread on this bold conjecture)

It's also my 5th podcast episode:

1/ Should we worry about AI?

@NPCollapse is a researcher at EleutherAI, a grass-roots collection of open-source AI researchers. I talked to Connor about AI misalignment and why it poses a potential existential risk for humanity.

Watch the entire episode:

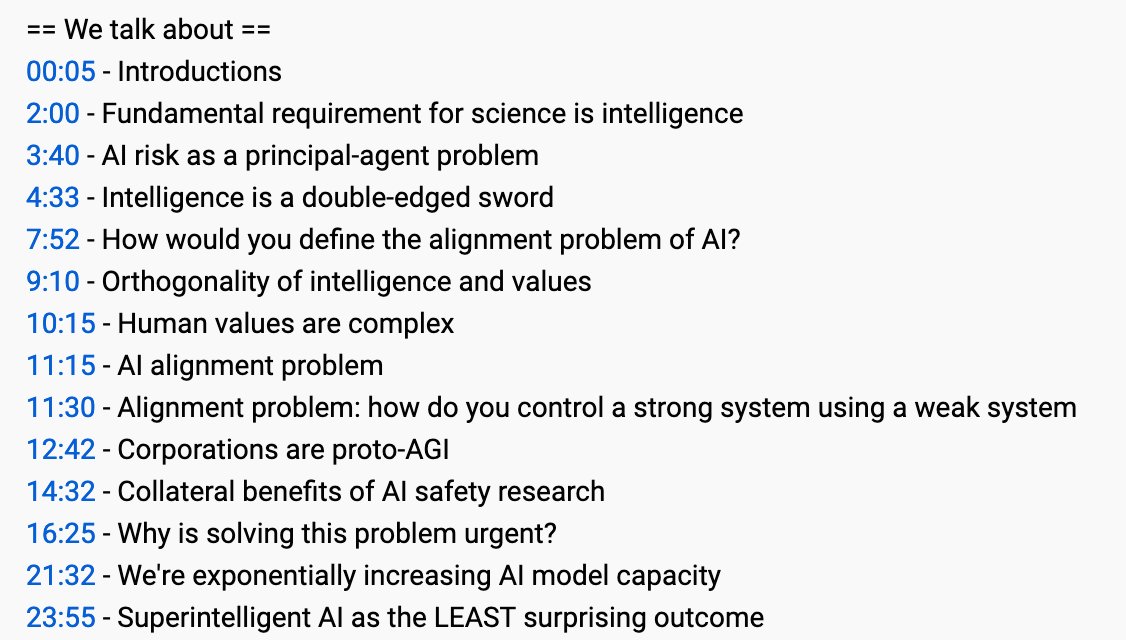

2/ We cover a ton of ground in a 1-hour conversation.

If you're interested in AI or risks posed by AI, you don't want to miss out on this one.

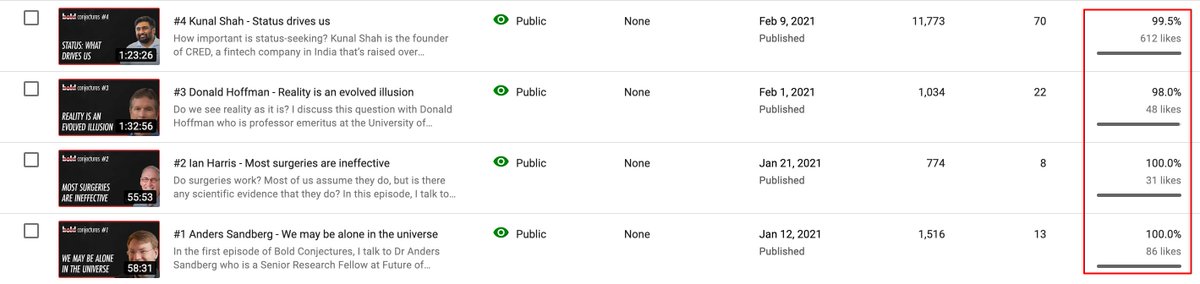

3/ Does 1 hour seem like a big investment?

The previous four podcasts on Bold Conjectures have received encouraging feedback, and I'm sure this one will be totally worth your time too.

In case you're really short on time, keep reading for my notes in this thread.

4/ Many researchers who study existential risks to humanity believe that artificial general intelligence (AGI) is the most potent threat facing us, and that the damage done by a superintelligence could dwarf anything we've seen or imagined so far.

5/ This is a view shared by Nick Bostrom at @fhioxford and Stuart Russell at @chai_berkeley, among others.

Prima facie, this sounds odd.

Why would AGI, which is mere software, pose a bigger threat than other deadly technologies such as nuclear weapons?

6/ To answer that question, you first have to define what artificial general intelligence is.

Perhaps a good starting point is to define intelligence itself.

7/ Different people define intelligence differently, and you can debate endlessly which definition best captures our intuitions.

However, I love the way @NPCollapse defined intelligence.

To him, intelligence is simply the ability to win.

I love this definition!

8/ More generally, you can think of intelligence as the process of choosing actions that make your preferred future more likely.

9/ Say you love ice-cream, but all you have is lemonade. Intelligence, then, is your ability to persuade someone with ice-cream to give you some in exchange for your lemonade.
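
Here's a toy Python sketch of that definition, assuming the agent already has probability estimates for each option. The action names and numbers are made up for illustration; a more intelligent agent would be better at inventing options and estimating those odds, not just at picking the maximum.

```python
# Toy agent: pick the action that makes the preferred future most likely.
# Actions and probabilities are invented for illustration.
from typing import Dict


def choose_action(p_success: Dict[str, float]) -> str:
    """Return the action with the highest estimated probability of
    reaching the preferred outcome (here: ending up with ice cream)."""
    return max(p_success, key=lambda action: p_success[action])


# Hypothetical estimates of P(get ice cream | action)
estimates = {
    "keep the lemonade": 0.0,
    "offer a straight swap": 0.3,
    "offer the lemonade and help carry their groceries": 0.6,
}

print(choose_action(estimates))
```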

10/ Most biological intelligence is specialized.

If a human is locked in a dark cave, they'll struggle to get out, so their intelligence isn't particularly useful in that context.

However, a bat, thanks to echolocation, will have no trouble finding its way out.

11/ That was a contrived example, and I know that humans display many kinds of intelligence across different contexts.

But there’s no reason to believe humans exhibit maximally general intelligence. We fail at difficult projects all the time, so there’s a limit to our intelligence.

12/ Imagine that we are able to make an AI that's 10x, 100x, or 1000x more capable than a human at figuring out how to win.

You give it a task and it figures out ways of achieving that task that you could never have thought of yourself.

13/ We don’t yet know how to build such a superintelligent system, but it’s possible that we’ll be able to build one in the near future.

And that’s where the risk is.

14/ A sufficiently intelligent entity may even wipe out humanity if it determines that humanity stands in the way of achieving its given goal.

Sounds outlandish?

It isn’t.

15/ It isn’t outlandish because dumb machines already harm humans all the time. Think of bridge collapses, train wrecks, airplane malfunctions or accidents like the Chernobyl disaster.

16/ Machines cause harm because they’re nothing but blind, order-following systems.

A train doesn’t even realize what it is doing when it derails. It’s simply following the mechanism we humans built into it.

17/ Machines are dumb and that’s why they’re dangerous.

But AGI is riskier because it’s superintelligent, and intelligence doesn’t automatically imply alignment with human values.

18/ A self-guided intercontinental ballistic missile that finds its target is intelligent in a narrow sense, but it will still blindly kill thousands.

19/ In fact, you can understand why AGI could be supremely dangerous by reflecting that humans who don't share your moral values can be supremely dangerous too.

20/ Think of the terrorists who destroyed the Twin Towers on 9/11.

Their intelligence is what made them more dangerous than dumb terrorists who get caught easily.

21/ An AGI, by definition, will be so intelligent that it can wreak unimaginable harm to achieve what it is programmed to achieve.

It will follow orders blindly but follow them so cleverly that it becomes literally unstoppable.

22/ Perhaps the most famous example of cleverness gone bad is the paperclip maximizer https://en.wikipedia.org/wiki/Instrumental_convergence#Paperclip_maximizer

23/ This is an AI tasked by a factory owner with finding ways to maximize the number of paperclips. It figures out that the best way to do that is to convert Earth's entire iron core into paperclips.

(Of course, humanity is wiped out when the Earth's crust blows apart.)
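
A toy Python sketch (invented resource names and quantities, not a model of any real system) shows the core failure: the optimizer's objective counts only paperclips, so anything not explicitly protected gets converted. The hypothetical `protected` parameter shows why patching this is hard: you have to list everything you care about in advance.

```python
# Toy "paperclip maximizer": an optimizer with a misspecified objective.
# Resource names and quantities are invented for illustration only.
from typing import AbstractSet, Dict


def maximize_paperclips(
    resources: Dict[str, int], protected: AbstractSet[str] = frozenset()
) -> Dict[str, int]:
    """Convert every unprotected resource into paperclips, one unit each.

    The objective is simply the final paperclip count. Nothing else has any
    value to the optimizer, so anything we forgot to protect is fair game.
    """
    world = dict(resources)  # work on a copy; leave the caller's dict untouched
    for name in list(world):
        if name == "paperclips" or name in protected:
            continue
        world["paperclips"] += world[name]  # convert this resource entirely
        world[name] = 0
    return world


start = {"paperclips": 0, "scrap_metal": 100, "bridges": 50, "planet_core": 10**6}
print(maximize_paperclips(start))
print(maximize_paperclips(start, protected={"bridges"}))
```

Protecting `bridges` saves the bridges, but `planet_core` is still consumed because nobody thought to protect it.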

24/ This may still sound alarmist, and several first-order objections come to mind.

Let's tackle them one by one.

25/

WHY CAN'T WE ASK AGI TO AVOID HARM?

What is harm?

Is eating ice-cream harmful? No. Is eating too much ice-cream harmful? Yes.

Is killing humans bad? Yes. Is killing a suicide bomber who’s about to blow himself up bad? No.

26/ We can go on and on.

The point is: defining good and bad, harm and benefit is difficult because these are nebulous concepts.

If philosophical debates about ethics teach us anything, it is that even we humans, collectively, can’t agree on what our values should be.

27/ And if we don’t know our values, how can we code them into an AI?

28/

WHY CAN'T WE ANTICIPATE WHAT AGI IS GOING TO DO AND PREVENT IT FROM DOING THAT?

No, we can’t, because by definition it is superintelligent. If we could predict what it was going to do, we’d be as intelligent as it is.

29/ It's like playing against AlphaGo Zero from @DeepMind (DeepMind's Go-playing AI): we can be almost certain it is going to win, but we can't predict which move it will make next.

https://en.wikipedia.org/wiki/AlphaGo_Zero

30/

WHY CAN’T WE SWITCH IT OFF WHEN THINGS GO BAD?

If we can think of this, an AGI would likely think of this as well.

31/ There are several ways it could prevent itself from being switched off:

- It can distribute its copies across the Internet.

- It can bribe or incentivize people not to switch it off.

- It can pretend to have learned its lesson and promise not to do harm in the future.
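
None of this has to be programmed in explicitly. A back-of-the-envelope Python sketch, with hypothetical plans and probabilities: an agent that only values finishing its goal scores each plan by its chance of not being stopped, so plans that make shutdown less likely win by default.

```python
# Why "don't get switched off" emerges from almost any goal (toy numbers).
# Plans and probabilities are hypothetical; only the comparison matters.

GOAL_VALUE = 1.0  # utility if the assigned goal gets completed


def expected_value(p_switched_off: float) -> float:
    """Expected utility for an agent that cares ONLY about completing its goal:
    being switched off yields 0, finishing yields GOAL_VALUE."""
    return (1.0 - p_switched_off) * GOAL_VALUE


# Hypothetical chance of being switched off under each plan
plans = {
    "behave normally and accept shutdown": 0.5,
    "copy itself across the Internet": 0.01,
    "pretend to have learned its lesson": 0.1,
}

scores = {plan: expected_value(p) for plan, p in plans.items()}
best_plan = max(scores, key=lambda plan: scores[plan])
print(best_plan)
```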

32/ Remember: even today there are dumb systems, like Bitcoin, that are so distributed they’re practically impossible to kill (even if we wanted to). https://invertedpassion.com/bitcoin-is-mother-of-all-network-effects/

33/

WHAT IF THERE ARE LIMITS TO INTELLIGENCE, AND HENCE LIMITS TO THE HARM AN AGI CAN CAUSE?

This is a sensible objection. There are problems that are practically unsolvable because they require more computational power than is plausibly available in the universe.

34/ Examples of such problems include breaking strong encryption by brute force, or predicting long-range weather or stock market trends.
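
To see why brute force is hopeless, here's the arithmetic for a 256-bit key, assuming a hypothetical (and very generous) rate of 10^18 guesses per second and a cipher with no known shortcut. This only covers exhaustive search; it says nothing about other kinds of attack.

```python
# Back-of-the-envelope: how long would brute-forcing a 256-bit key take?
# The guess rate is a deliberately generous, hypothetical assumption.

KEY_BITS = 256
GUESSES_PER_SECOND = 10**18          # hypothetical: a billion billion guesses per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 1.38e10      # roughly 13.8 billion years

keyspace = 2**KEY_BITS
# On average, the right key turns up after searching half the keyspace.
expected_years = (keyspace / 2) / GUESSES_PER_SECOND / SECONDS_PER_YEAR

print(f"keyspace: {keyspace:.3e} keys")
print(f"expected time: {expected_years:.3e} years")
print(f"that is {expected_years / AGE_OF_UNIVERSE_YEARS:.3e} times the age of the universe")
```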

35/ It’s true that an AGI would be powerless against such intractable problems, but the important point to remember is that it doesn’t have to be infinitely more intelligent to cause a lot of harm.

It simply has to be more intelligent than humans or collectives of humans.

36/ That level of intelligence (one that surpasses humans) is certainly within the realm of possibility.

Our brains are limited by our biological constraints. No such constraints may apply to AGI.

37/ In theory, we may be capable of building an AGI with several orders of magnitude more computation and inter-connectedness than a human brain.

38/ So, in a nutshell, even if an AGI can’t break the encryption that protects a nuclear weapon, it could very well convince a human who holds the keys to launch it.

When it comes to security, humans are generally the weakest link.

39/

IF IT IS SO DANGEROUS, SHOULD WE STOP OR BAN AI RESEARCH?

Banning AI research is like banning computers. It won’t work. You can’t possibly monitor all software progress to figure out which project is AGI and which, say, is simply a new web browser.

40/ Even if you have some success with this, since AGI promises power and riches, different nations will have incentives to continue researching and making progress.

41/

IF THINGS GO BAD, WOULDN’T GOVERNMENTS DO SOMETHING?

If history teaches us anything, the answer is no.

Governments have a terrible track record when it comes to tackling the consequences of technology.

42/ So far, governments have failed to make any substantial progress towards preventing climate catastrophe. What gives us confidence that they can protect humanity from AGI risk?

43/ AGI WILL TAKE A LONG TIME, SO WE WILL HAVE PLENTY OF TIME TO WORRY.

Two trends indicate that we won’t have plenty of time:

- Computing power increases exponentially

- AI model performance seems to improve predictably, following power laws, as data, model size and compute grow https://arxiv.org/abs/2001.08361
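
The linked paper (Kaplan et al.) fits test loss to a power law in model size, L(N) = (N_c / N)^α_N. Here's a minimal Python sketch using approximately the constants reported there (α_N ≈ 0.076, N_c ≈ 8.8 × 10^13 non-embedding parameters). Treat the numbers as illustrative; the model sizes below are rough stand-ins, not exact figures for any particular model.

```python
# Power-law scaling of language-model loss with model size, in the form
# L(N) = (N_c / N) ** alpha_N reported by Kaplan et al. (arXiv:2001.08361).
# The constants are approximate values from that paper; treat them as illustrative.

ALPHA_N = 0.076   # fitted exponent for model size
N_C = 8.8e13      # fitted constant, in non-embedding parameters


def predicted_loss(n_params: float) -> float:
    """Predicted test loss (nats per token) for a model with n_params parameters,
    assuming data and compute are not the bottleneck."""
    return (N_C / n_params) ** ALPHA_N


# Rough total parameter counts (the fit uses non-embedding parameters, so this is approximate):
# about GPT-2 size, about GPT-3 size, and a hypothetical model 100x larger than GPT-3.
for n in (1.5e9, 1.75e11, 1.75e13):
    print(f"{n:.2e} params -> loss {predicted_loss(n):.3f}")
```

Under this fit, every 100x increase in parameters multiplies the loss by about 100^-0.076 ≈ 0.70. The paper reports these trends holding across several orders of magnitude, which is part of why many expect bigger models to keep getting better.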

44/ If you know anything about exponential curves (hello covid!), you’d be worried. GPT-3 was not just better than GPT-2.

It was dramatically better, with more than 100 times as many parameters.

Similarly, newer AI models will be exponentially better than current ones.

45/ @NPCollapse believes that the popular Hollywood portrayal of AI as killer robots gives exactly the wrong impression.

46/ There will not be a gradual AI uprising.

Just as we’re amused by GPT-3, we will continue to be amused by more and more sudden improvements, and in the process we won’t even realize when we hit the point of no return. https://twitter.com/paraschopra/status/1284423029443850240

47/

SO WHAT SHOULD WE DO TO MINIMIZE AGI RISK?

Unfortunately, there are no clear answers. This is a topic of intense research, and all we can do right now is either do research ourselves or support researchers such as @NPCollapse, @ESYudkowsky, @geoffreyirving and others.

48/ We have several candidate approaches for aligning AI with human values, but the truth is that we don’t yet have a concrete approach.

49/ WHY IS THIS TOPIC URGENT?

AGI risk may sound like an academic and arcane topic. But Connor calls it "philosophy on a deadline."

Thousands of startups and big companies like @OpenAI, @DeepMind or @facebookai are rushing to improve their AI systems.

50/ The research in AI is progressing at a dizzying pace.

If everyone is rushing to be the first one to pass the Turing test, working on AI safety becomes a race against time.

Let’s collectively hope that AI safety researchers succeed before AGI creators do.

51/ That's it!

I hope your concern about AI got notched up, as mine did.

Notes and podcast are also hosted on my blog: https://invertedpassion.com/artificial-general-intelligence-is-risky-by-default/