***** New Working Paper - Artificial Intelligence, Teacher Tasks and Individualized Pedagogy *****

@EconTwitter

Joint work with @bruno_ferman and @Lycia_Lima. We hope you find it as interesting as we did!

Link to full paper:

https://drive.google.com/file/d/1xcY4uSU2rWzGx5R60gU7VcveXZ4RRael/view?usp=sharing

Recent progress in artificial intelligence (AI) has changed the terms of comparative advantage between technology and humans, shifting the limits of what can be automated and reviving a longstanding debate on what should be.

In education, applications of AI in linguistics have prompted controversy over automated writing evaluation (AWE) systems.

AWE uses natural language processing (NLP) to extract essay features and machine learning (ML) to predict scores and allocate feedback.

Check out the “Human Readers” petition: http://humanreaders.org/

Central to this controversy is the ability of systems that are blind to meaning to emulate human parsing, grading, and individualized feedback behavior.

Our paper finds empirical support for a fact that seems to have been overlooked in this debate: new technologies have first-order effects on the composition of job tasks.

In schools, even if AWE is an imperfect substitute for some of teachers' most complex tasks, it may allow teachers to reallocate time away from tasks requiring lower-level skills, ultimately fostering the student writing abilities that AI gauges only imperfectly.

We conducted a field experiment with approximately 19,000 students in 178 public schools in Espírito Santo, Brazil, to study two ed techs that allow teachers to outsource grading and feedback tasks on the *writing* practice of high school seniors.

The 110 treated schools incorporated one of two ed techs designed to improve scores in the *argumentative essay* of the National Secondary Education Exam (ENEM) by alleviating Language teachers' time and human capital constraints.

ENEM is a HUGE deal! It is the second-largest college admission exam in the world, just behind the Chinese gaokao.

In 2019, the year of our study, roughly 5 million people, and 70% of all high school seniors in the country, took the exam!

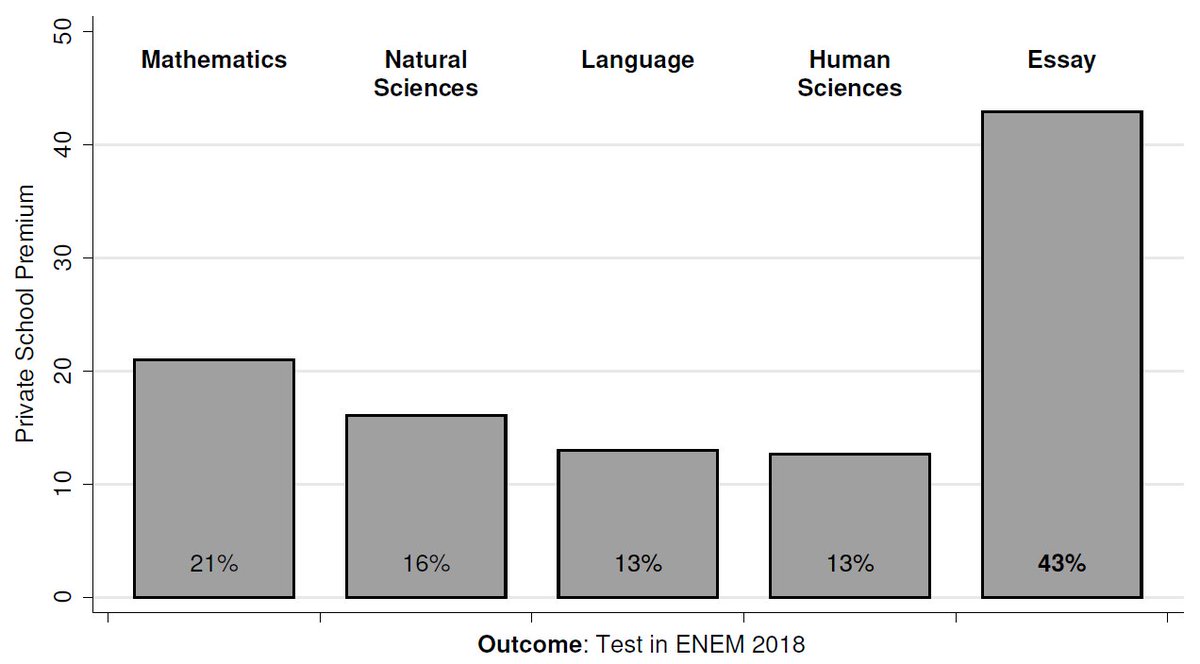

The large gap between public and private schools' quality in Brazil is salient in all ENEM tests and, in particular, in the written essay.

In the essay, the gap is at least twice as large as in the multiple-choice tests.

The first ed tech is a fully automated system that provides instantaneous scores and feedback using *only* an AWE system.

In the paper, we refer to this ed tech as “pure AWE”.

In the second ed tech, the AWE system withholds the automated score and, about three days after submitting their essays, students receive a final, enhanced grading prepared by *human graders*.

In the paper, we refer to this ed tech as “enhanced AWE”.

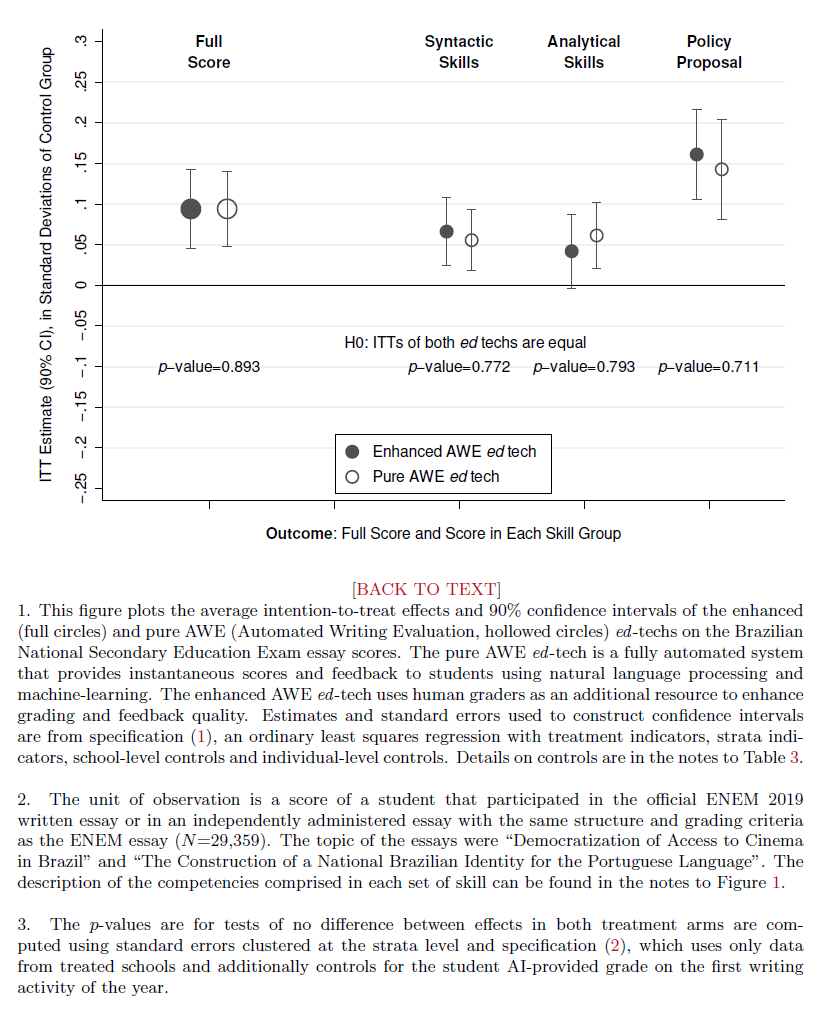

To our surprise, we found that the two ed techs had VERY similar reduced-form effects on ENEM essay scores, not only on the total score but on each of a broad set of skills, from syntactic to analytical to a skill capturing global consistency, creativity, and problem-solving.

We were able to collect primary data to try to understand better how the ed techs were incorporated. Here is a summary of the channels of impact we found:

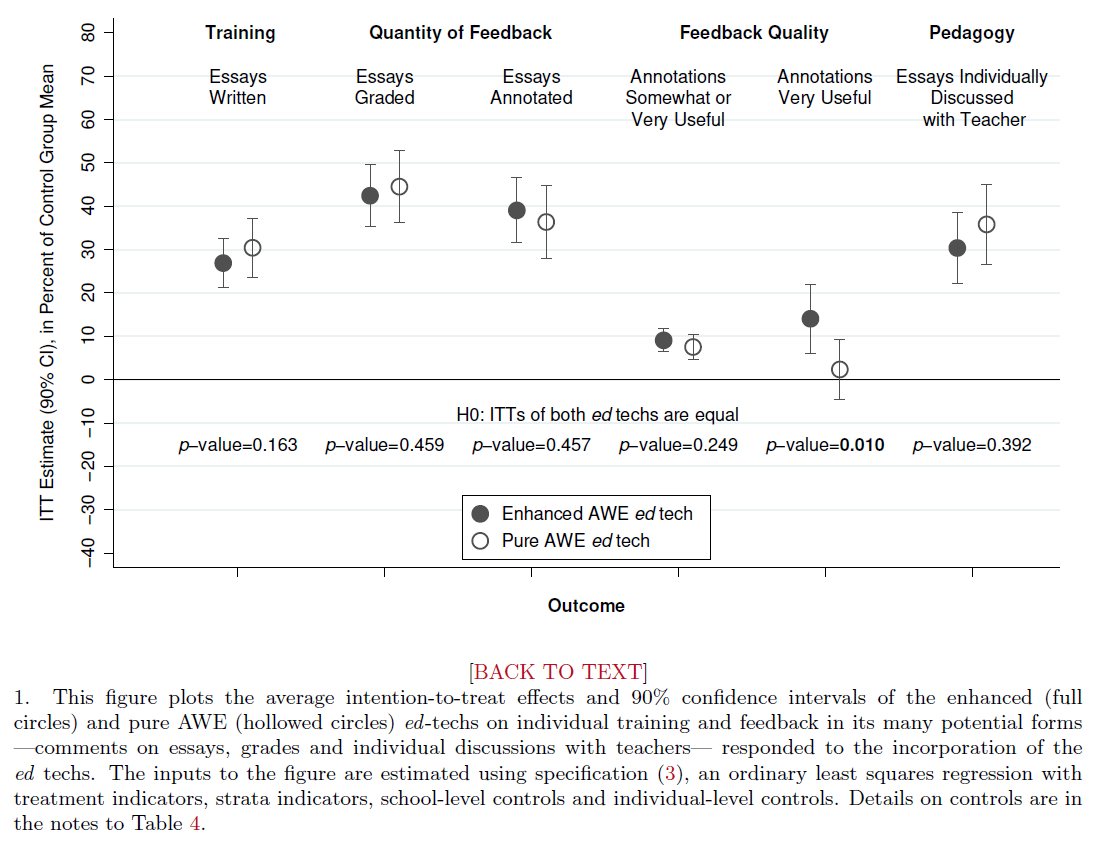

Both ed techs increased the number of practice essays students wrote.

Also, both ed techs similarly increased the quantity of feedback students ended up with.

Finally, even though both treatments increased the quality of feedback, the effects were more pronounced for the enhanced AWE.

Importantly, we show that both technologies similarly increased the number of ENEM training essays that were *personally discussed* with teachers after grading.

Thus, both ed techs enabled teachers to engage more in tasks that lead to the individualization of pedagogy.

Such shifts toward “nonroutine” tasks can help explain the positive effects of the pure AWE on skills that AI alone may fall short of capturing...

... and also the lack of differential effects of using human graders as an additional resource to improve feedback.

Using a teacher survey, we document that teachers using the enhanced AWE ed tech adjusted hours worked from home downwards and perceived themselves as less time-constrained.

In contrast, teachers in the pure AWE ed tech arm were not affected on any of these margins.

At face value, this suggests that teachers using pure AWE were able to keep pace by taking over some of the tasks of human graders, without increasing their usual workload.

This is important for policy: scaling up enhanced AWE would entail much larger costs!

Overall, we believe that the most interesting questions on AI are whether and how it affects production functions in spite of being unable to emulate intrinsic aspects of human intelligence.
