~300 papers over the past 10 years, concentrated in subfields of medicine, archaeology, and animal biology, use (and in some cases rest entirely on) a method called "between-groups PCA" (bgPCA), which is *guaranteed to give false-positive results in typical use cases*.

Short thread:

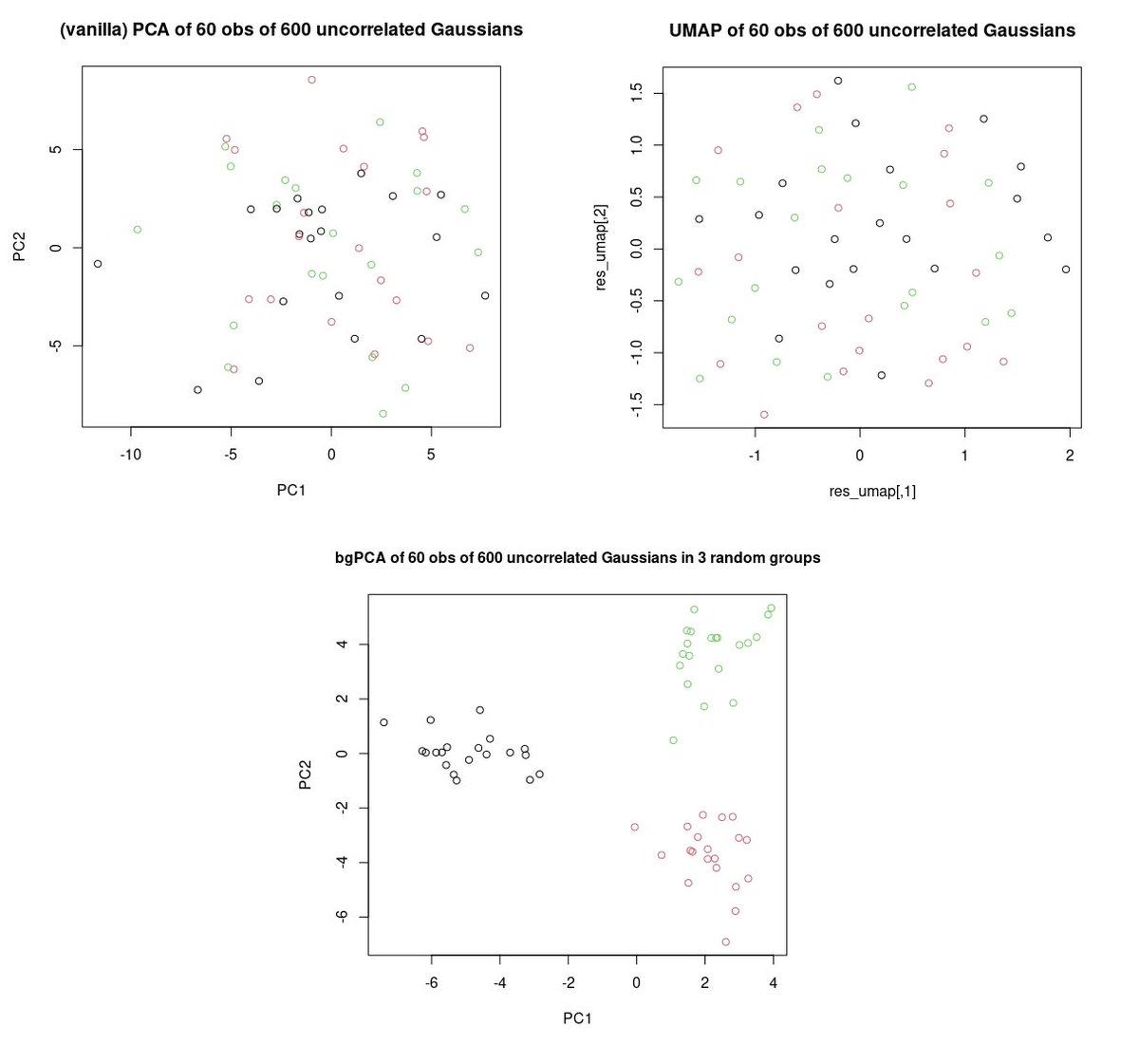

In bgPCA, you:

1) find mean coords of pre-defined "groups"

2) run PCA on the means

3) project all data points onto resulting PCs

4) wow, groups are really separated now!
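A minimal NumPy sketch of steps 1 to 3, to make the circularity concrete. The function name `bgpca` and the choice to centre on the mean of the group means are illustrative assumptions, not taken from any of the papers in question:

```python
import numpy as np

def bgpca(X, groups):
    """Between-groups PCA sketch. X: (n_samples, n_vars); groups: (n_samples,) labels."""
    labels = np.unique(groups)
    # 1) mean coordinates of each pre-defined group
    means = np.array([X[groups == k].mean(axis=0) for k in labels])
    # 2) PCA on the group means only (centre them, then SVD)
    centre = means.mean(axis=0)
    _, _, Vt = np.linalg.svd(means - centre, full_matrices=False)
    # g centred means span at most g-1 dimensions, so keep g-1 axes
    axes = Vt[: len(labels) - 1]
    # 3) project every individual data point onto those axes
    scores = (X - centre) @ axes.T
    return scores, axes

# Usage on made-up data: 30 samples, 100 variables, 3 arbitrary groups
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 100))
groups = np.repeat([0, 1, 2], 10)
scores, axes = bgpca(X, groups)
print(scores.shape)  # (30, 2)
```

Note that the group labels decide the axes before any individual point is plotted, which is exactly what makes step 4 look so convincing.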

The circularity should raise alarm bells! The "real" failure reason is more complicated, but simply:

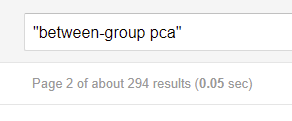

g group means span at most g−1 dimensions, so bgPCA gives at most g−1 PCs. With few groups (g) and many variables (i.e. the typical PCA use case), reducing to g−1 PCs *has to* discard a huge % of *within-group* variance.
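A quick numerical check of that claim, under a hypothetical null set-up of my own choosing (n=30, 100 variables, 3 arbitrary groups, pure Gaussian noise). For noise like this the retained fraction of within-group variance should land somewhere near (g−1)/p, i.e. a few percent:

```python
import numpy as np

# Hypothetical null data: no real group differences at all
rng = np.random.default_rng(0)
n, p, g = 30, 100, 3
X = rng.normal(size=(n, p))
groups = np.repeat(np.arange(g), n // g)

# bgPCA axes: PCA on the g group means, keeping g-1 axes
means = np.array([X[groups == k].mean(axis=0) for k in range(g)])
_, _, Vt = np.linalg.svd(means - means.mean(axis=0), full_matrices=False)
axes = Vt[: g - 1]

# Within-group residuals: each point minus its own group mean
resid = X - means[groups]
within_total = (resid ** 2).sum()
within_kept = ((resid @ axes.T) ** 2).sum()
frac_kept = within_kept / within_total
print(f"within-group variance kept on {g - 1} bgPCs: {frac_kept:.1%}")
```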

Even when the real between-group variance is tiny (or just noise), in bgPCA it can swamp whatever within-group variance is left: spurious differentiation!
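A sketch of that spurious differentiation on pure noise with arbitrary labels. It compares the between-group/within-group sum-of-squares ratio on the first g−1 axes of ordinary PCA against the g−1 bgPCA axes; the data sizes and the helper `between_within_ratio` are illustrative assumptions:

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, g = 30, 100, 3
X = rng.normal(size=(n, p))               # pure noise: no real group structure
groups = np.repeat(np.arange(g), n // g)  # arbitrary labels

def between_within_ratio(scores, groups):
    """Between-group sum of squares over within-group sum of squares."""
    grand = scores.mean(axis=0)
    between = within = 0.0
    for k in np.unique(groups):
        s = scores[groups == k]
        between += len(s) * ((s.mean(axis=0) - grand) ** 2).sum()
        within += ((s - s.mean(axis=0)) ** 2).sum()
    return between / within

Xc = X - X.mean(axis=0)

# Ordinary PCA: top g-1 axes of the full data
_, _, Vt_pca = np.linalg.svd(Xc, full_matrices=False)
pca_scores = Xc @ Vt_pca[: g - 1].T

# bgPCA: top g-1 axes of the group means, then project all points
means = np.array([Xc[groups == k].mean(axis=0) for k in range(g)])
_, _, Vt_bg = np.linalg.svd(means - means.mean(axis=0), full_matrices=False)
bg_scores = Xc @ Vt_bg[: g - 1].T

print("between/within, PCA:  ", round(between_within_ratio(pca_scores, groups), 2))
print("between/within, bgPCA:", round(between_within_ratio(bg_scores, groups), 2))
```

With no real signal, the bgPCA ratio should come out far larger than the PCA one: all of the (purely random) between-group variation is concentrated onto the two plotted axes, while almost all within-group spread is thrown away.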

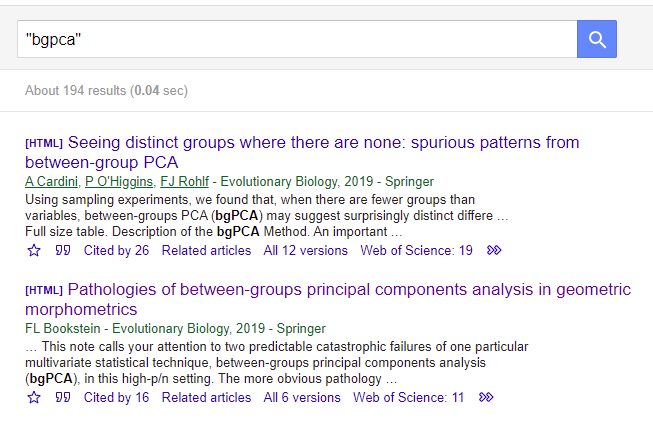

The biometricians eventually figured this out, hence the top Google Scholar results for bgPCA.

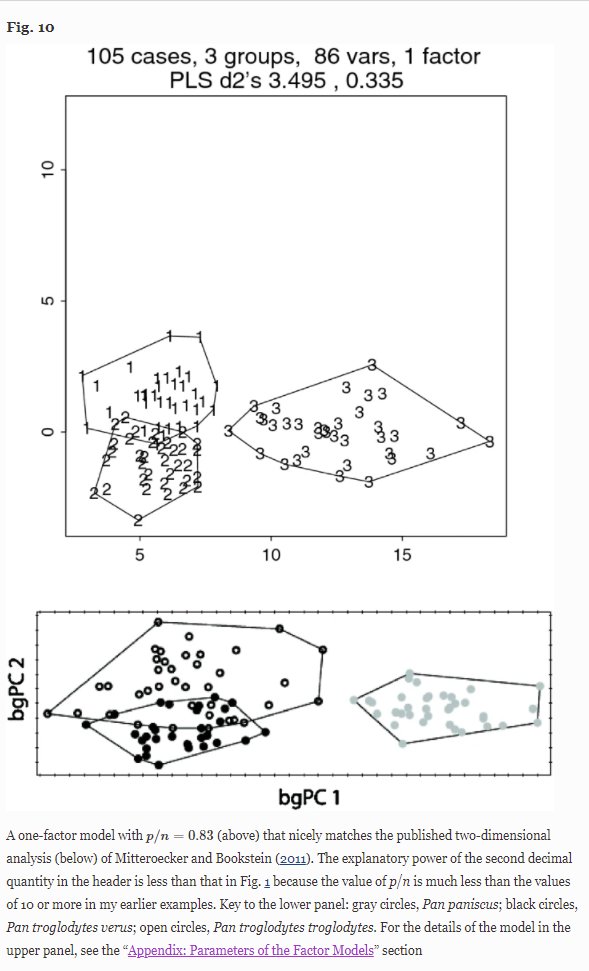

Special kudos to Fred Bookstein – he'd previously published a figure showing differentiation on 2 PCs, and admits he can replicate it using simulated data with *only one real factor*!

… But despite those critiques, bgPCA papers just keep coming! Including the one I read today, which rests entirely on it…

(I hope this is mostly because those papers were already in review when the 2019 critiques/demolitions were published. At least one of them is itself another critique.)

Big picture: most of these papers are from fields where collecting data is hard.

Sometimes all you have is n=30. You're desperate for a +ve result, but there's none to be found. It sucks.

Always be sceptical, especially if a simple scatterplot doesn't show your proposed result.
