Do you really need deeeep networks for *all inputs*?

Can't you do better?

A small thread to summarize a number of interesting works I have been reading on how to adaptively and independently select a proper complexity for each input.

Continued below 1/n

2: Quick recap -> Gumbel-Softmax (GS) is a neat trick that lets you make a categorical (discrete) decision in a differentiable fashion.

It is the building block for the techniques below.

Blog explanation by @FabianFuchsML : https://fabianfuchsml.github.io/gumbel/

Paper: https://arxiv.org/abs/1611.01144

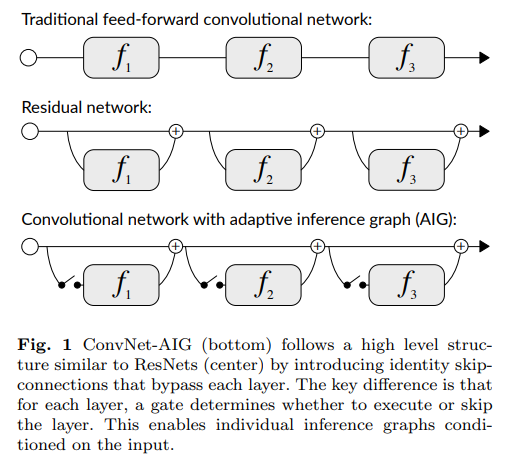
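
To make the recap concrete, here is a minimal forward-pass sketch of GS sampling in plain Python. The function names (`gumbel_softmax`, `hard_choice`) and the example logits are made up for illustration. In a real setup you would use an autodiff framework so that gradients flow through the soft sample.

```python
import math
import random

def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Return a relaxed (soft) one-hot sample for the categorical given by `logits`."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise: g = -log(-log(u)), with u ~ Uniform(0, 1)
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed logits
    top = max(noisy)
    exps = [math.exp(v - top) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]

def hard_choice(soft_sample):
    """Discrete decision: index of the largest entry of the soft sample."""
    return max(range(len(soft_sample)), key=soft_sample.__getitem__)

rng = random.Random(0)
sample = gumbel_softmax([2.0, 0.5, -1.0], temperature=0.5, rng=rng)
print(sample, hard_choice(sample))
```

Lower temperatures push the soft sample closer to one-hot. The argmax of the sample follows the softmax of the logits regardless of temperature.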

3: *Convolutional Networks with Adaptive Inference Graphs*

Let's start with this #IJCV paper by @SergeBelongie & Veit

Adding a gate with GS sampling before a layer allows you to adaptively skip/keep the layer for every input!

Link: https://arxiv.org/abs/1711.11503

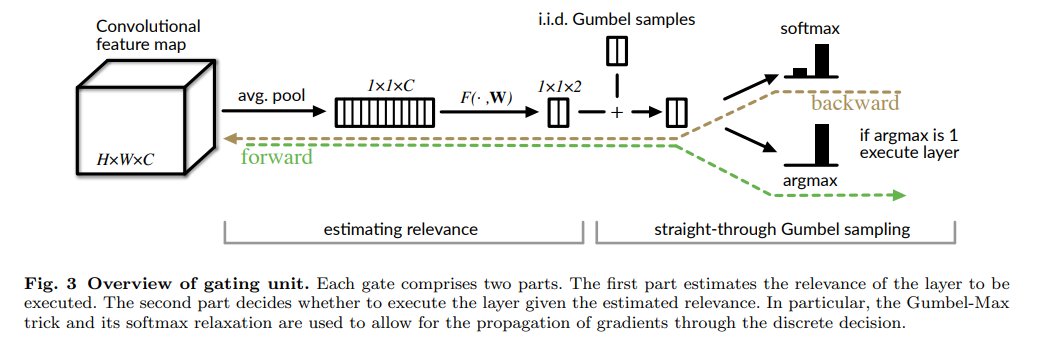
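
A toy sketch of the skip/keep idea on plain vectors, not the paper's implementation. `layer` is a hypothetical stand-in for an expensive residual branch, and the two gate logits are passed in by hand. The gate is drawn with the Gumbel-max trick (the hard version of GS). During training you would use the soft or straight-through variant instead, so gradients can reach the gate.

```python
import math
import random

def sample_gate(logit_skip, logit_keep, rng):
    """Gumbel-max draw over {skip, keep}: returns 1 to run the layer, 0 to skip it."""
    def gumbel():
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        return -math.log(-math.log(u))
    return 1 if logit_keep + gumbel() > logit_skip + gumbel() else 0

def layer(x):
    # Hypothetical stand-in for an expensive branch (e.g. conv + ReLU)
    return [max(0.0, 0.5 * v + 0.25) for v in x]

def gated_residual(x, logit_skip, logit_keep, rng):
    """Residual block x + gate * layer(x), skipping the layer when gate == 0."""
    gate = sample_gate(logit_skip, logit_keep, rng)
    if gate == 0:
        return list(x), gate  # identity path: layer(x) is never evaluated
    fx = layer(x)
    return [a + b for a, b in zip(x, fx)], gate

rng = random.Random(0)
print(gated_residual([1.0, -2.0], logit_skip=0.0, logit_keep=1.0, rng=rng))
```

The compute saving comes from the `gate == 0` branch: the layer is not evaluated at all for that input.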

4: To make this viable, the gate is computed from the avg. pooling of the previous activation map.

Interestingly, the sum of the gating values gives you a simple measure of how "non-linear" the network is for a certain input!

(And it can be regularized)

Continued below

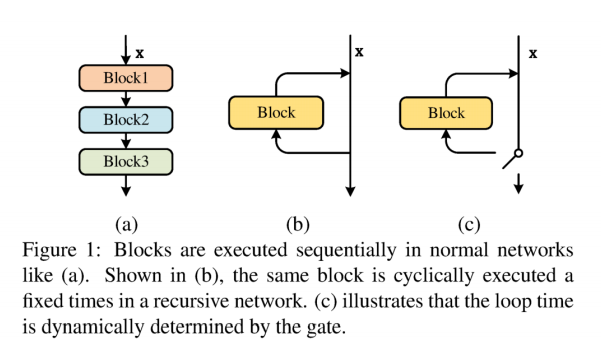
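
A small sketch of these two points, under assumptions: the gate here is a single hypothetical linear map on the pooled features, and `usage_penalty` is just one possible regularizer (squared gap between the mean fraction of executed layers and a target rate). All names and numbers are illustrative.

```python
def global_avg_pool(feature_map):
    """feature_map: list of channels, each an H x W nested list. One mean per channel."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

def gate_logits(pooled, weights, bias):
    """Tiny linear gate: pooled channel statistics -> [skip, keep] logits."""
    return [sum(w * p for w, p in zip(row, pooled)) + b for row, b in zip(weights, bias)]

def layers_used(gates):
    """Sum of the 0/1 gating values: how many layers one input actually executed."""
    return sum(gates)

def usage_penalty(gates_per_input, target_rate):
    """Squared gap between the mean execution rate over a batch and a target rate."""
    rates = [sum(g) / len(g) for g in gates_per_input]
    mean_rate = sum(rates) / len(rates)
    return (mean_rate - target_rate) ** 2

fmap = [[[1, 3], [5, 7]], [[0, 0], [0, 4]]]          # 2 channels, 2 x 2 each
pooled = global_avg_pool(fmap)
logits = gate_logits(pooled, [[0.5, -1.0], [-0.5, 1.0]], [0.0, 0.0])
batch_gates = [[1, 0, 1, 1], [0, 0, 1, 0]]            # hypothetical gate decisions
print(pooled, logits, [layers_used(g) for g in batch_gates])
print(usage_penalty(batch_gates, target_rate=0.5))
```

Because the gate only sees one number per channel, it stays cheap compared to the layer it controls.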

5: *Dynamic Recursive Neural Network*

Nice extension in this #CVPR paper: instead of choosing whether to skip a layer, you can choose to *repeat* the layer itself.

Similar to the adaptive computation time for RNNs ( https://arxiv.org/abs/1603.08983 ).

Paper: https://ieeexplore.ieee.org/abstract/document/8954249
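
A toy sketch of "repeat instead of skip", assuming a weight-shared block and a gate that decides after each pass whether to loop again. Both `shared_block` and `continue_gate` are hypothetical: the gate below is a hand-written rule standing in for a learned (GS-sampled) decision.

```python
def shared_block(x):
    # Hypothetical weight-shared block: the same function is applied at every repeat
    return [v + 1.0 for v in x]

def continue_gate(x, threshold=3.0):
    """Stand-in for a learned gate: keep repeating while the mean feature is below threshold."""
    return sum(x) / len(x) < threshold

def dynamic_recursive(x, max_repeats=8):
    """Apply the shared block at least once, then repeat while the gate says so."""
    steps = 0
    for _ in range(max_repeats):
        x = shared_block(x)
        steps += 1
        if not continue_gate(x):
            break
    return x, steps

print(dynamic_recursive([0.0, 0.0]))   # needs several passes
print(dynamic_recursive([2.5, 2.5]))   # stops after one pass
```

The `max_repeats` cap plays the role of a compute budget: depth varies per input but stays bounded.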

5b: Not in thread, but in the limit of repeating a layer ∞ times, we get a deep equilibrium model as in the paper by @shaojieb @zicokolter & Koltun

Many "continuous-time" networks also have adaptive trade-offs in memory/accuracy required for each input.

https://arxiv.org/pdf/1909.01377.pdf

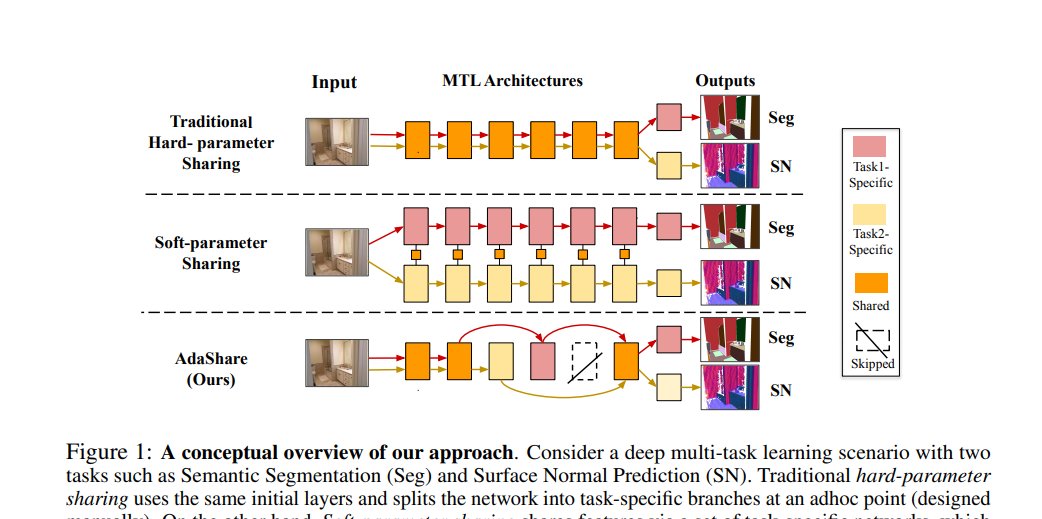
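
To illustrate the "repeat ∞ times" limit, here is a minimal sketch that iterates a layer until its output stops changing. The layer `f` is a made-up contraction, and plain fixed-point iteration is a simplification chosen for readability: it is not necessarily the solver used in the paper.

```python
import math

def f(z, x):
    # Hypothetical layer z -> tanh(0.5 * z + x); a contraction in z, so iteration converges
    return [math.tanh(0.5 * zi + xi) for zi, xi in zip(z, x)]

def equilibrium(x, tol=1e-6, max_iters=200):
    """Iterate z <- f(z, x) from z = 0 until the update is smaller than `tol`."""
    z = [0.0] * len(x)
    for it in range(1, max_iters + 1):
        z_next = f(z, x)
        if max(abs(a - b) for a, b in zip(z, z_next)) < tol:
            return z_next, it
        z = z_next
    return z, max_iters

for x in ([0.0], [1.0]):
    z_star, iters = equilibrium(x)
    print(x, z_star, iters)
```

The number of iterations depends on the input and on `tol`, which is one way to see the per-input accuracy/compute trade-off.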

6: *AdaShare: Learning What To Share For Multi-Task Learning*

What can we do apart from speeding up inference?

This #NeurIPS paper by @kate_saenko_ et al. uses this idea to perform adaptive sharing of the network in multi-task learning.

Paper: https://arxiv.org/abs/1911.12423
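
A toy sketch of adaptive sharing, assuming each task holds a binary select-or-skip decision per shared block. The task names, block definitions, and policy values below are all made up. In practice the decisions would be learned (e.g. sampled with GS) rather than hard-coded.

```python
def make_block(scale):
    # Hypothetical residual block: x + scale * x
    return lambda x: [v + scale * v for v in x]

# One set of blocks shared by every task
shared_blocks = [make_block(0.5), make_block(0.25), make_block(0.125)]

# 1 = this task runs the shared block, 0 = this task skips it (illustrative values)
task_policy = {
    "segmentation": [1, 1, 0],
    "depth": [1, 0, 1],
}

def forward(x, task):
    """Run only the shared blocks selected by the task's policy."""
    for block, use in zip(shared_blocks, task_policy[task]):
        if use:
            x = block(x)
    return x

def shared_between(task_a, task_b):
    """Indices of blocks that both tasks execute, i.e. where parameters are truly shared."""
    pairs = zip(task_policy[task_a], task_policy[task_b])
    return [i for i, (a, b) in enumerate(pairs) if a and b]

print(forward([1.0], "segmentation"), forward([1.0], "depth"))
print(shared_between("segmentation", "depth"))
```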

7: *Learning to Branch for Multi-Task Learning*

But there's more! In this #ICML paper, they use an almost identical idea to grow a *tree* of computations instead of a sequential set of blocks!

https://arxiv.org/abs/2006.01895
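
A toy sketch of the tree idea, assuming each node picks a single parent in the previous layer. The `parents` table and `node_op` are hypothetical and fixed by hand here; the point of the learned version is that the parent choice is a categorical decision of the kind GS can relax.

```python
# parents[l][j] = index of the node in the previous layer that feeds node j.
# The first entry reads from the single root input.
parents = [
    [0, 0],      # layer 1: two nodes, both read the root
    [0, 0, 1],   # layer 2 (task heads): tasks 0 and 1 share node 0, task 2 uses node 1
]

def node_op(layer, index, x):
    # Hypothetical per-node computation, different for every (layer, index)
    return x * (layer + 1) + index

def forward_tree(x, parents):
    """Evaluate the tree layer by layer; each node reads only its chosen parent."""
    activations = [x]  # layer 0: the root input
    for layer, layer_parents in enumerate(parents, start=1):
        activations = [
            node_op(layer, j, activations[p]) for j, p in enumerate(layer_parents)
        ]
    return activations

print(forward_tree(1, parents))
```

Tasks 0 and 1 branch apart only at the last layer, while task 2 splits off one layer earlier.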

8: TL;DR These are super interesting because they provide a way to

(i) adapt the complexity on-the-fly for each input/task;

(ii) find tricks to regularize for complexity;

(iii) move away from the classical "sequential" model.

Concluded below with a bit of self-promotion

9: My interest stems from works we have been doing on "multi-exit" networks.

The idea is to "exit" the model as soon as possible, which is a simpler version of "adaptive complexity".

If you are interested, we recently published a survey on the topic: https://arxiv.org/abs/2004.12814
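
A minimal sketch of early exiting, assuming one auxiliary classifier per stage and a simple confidence threshold as the exit rule. This is only one possible rule, and the toy `stages` and `classifiers` are hypothetical.

```python
import math

def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def early_exit_predict(x, stages, classifiers, threshold=0.9):
    """Return (predicted class, index of the exit used)."""
    last = len(stages) - 1
    for i, (stage, classifier) in enumerate(zip(stages, classifiers)):
        x = stage(x)
        probs = softmax(classifier(x))
        # Exit as soon as the auxiliary classifier is confident, or at the final stage
        if max(probs) >= threshold or i == last:
            return probs.index(max(probs)), i

# Toy model: every stage doubles a scalar feature, every classifier scores [x, -x]
stages = [lambda x: 2.0 * x] * 3
classifiers = [lambda x: [x, -x]] * 3

print(early_exit_predict(2.0, stages, classifiers))   # "easy" input
print(early_exit_predict(0.1, stages, classifiers))   # "hard" input
```

Inputs with a large feature magnitude leave at the first exit, while ambiguous ones travel through all stages.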
