At the #AAAI2021 workshop on RL in Games:

@beckypeng6 will present “Detecting and Adapting to Novelty in Games” at poster session #2

10:50am PT / 1:50pm ET

What do you do if the rules of a game suddenly change and the RL policy no longer works?

Paper: http://aaai-rlg.mlanctot.info/papers/AAAI21-RLG_paper_50.pdf

This paper looks at the problem in which an agent has learned a policy to play a game, but then the rules of the game change.

This happens with “house rules,” where people play a familiar game, such as Monopoly, under different rules.

If an agent plays a game with a fixed policy learned under a different set of rules, it can fail spectacularly.

Humans have much less trouble adapting quickly to house rules.

How do we (a) detect rule changes, and (b) adapt the policy to the new rules?

By the way, this has relevance outside of games. Agents and robots can only deal with what is represented or representable. The real world is not only more complex than the world we might choose to make representable; it can also change in ways that aren’t representable.

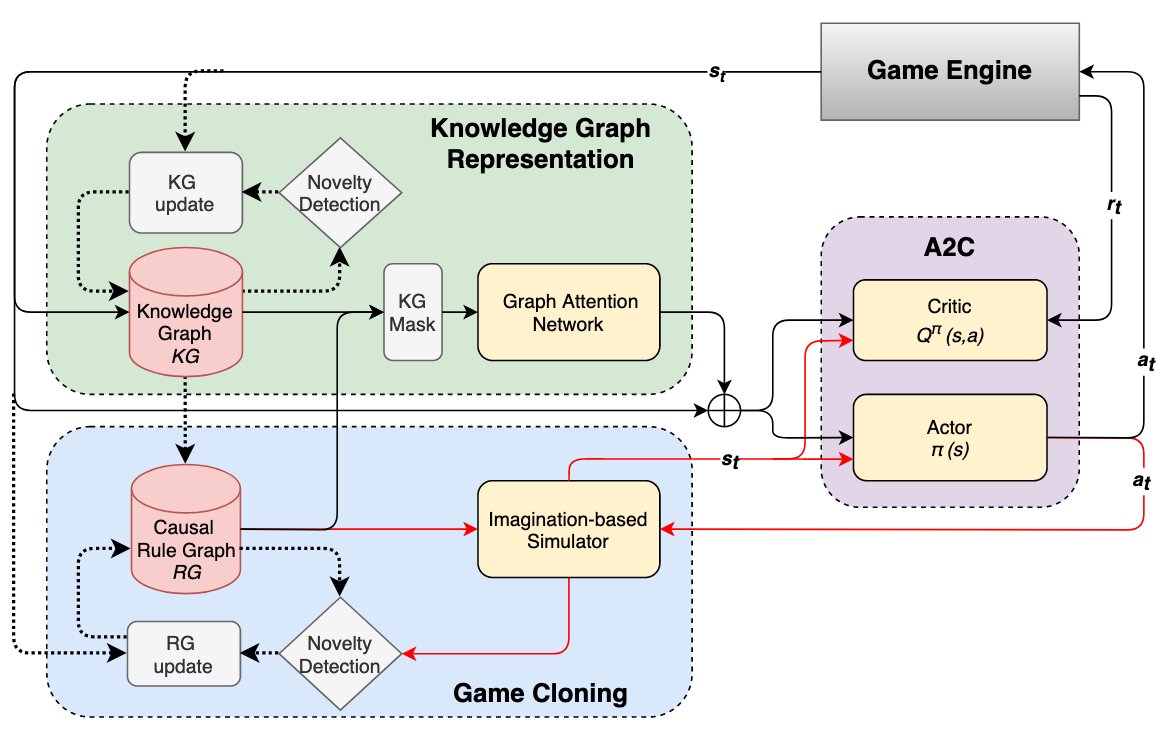

We propose an agent that learns the rules of the game as a symbolic knowledge structure alongside a policy.

This knowledge graph can tell the agent when an observed state-action transition is inconsistent with its expectations about what is possible under the rules it knows.
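The thread doesn’t spell out the knowledge graph’s schema, so what follows is only a toy sketch of the consistency check, not the paper’s implementation. It assumes transitions can be written as symbolic (state, action, effect) triples. `RuleGraph`, `observe`, `detect_novelty`, and the Monopoly-style symbols are all hypothetical names chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set, Tuple

# Hypothetical symbolic transition: (state feature, action, observed effect).
Transition = Tuple[Hashable, Hashable, Hashable]


class RuleGraph:
    """Toy rule store: edges from (state, action) nodes to the effects seen so far.

    Illustrative sketch only; not the paper's knowledge-graph implementation.
    """

    def __init__(self) -> None:
        self.edges: Dict[Tuple[Hashable, Hashable], Set[Hashable]] = defaultdict(set)

    def observe(self, transitions: List[Transition]) -> None:
        """Record transitions seen while playing under the known rules."""
        for state, action, effect in transitions:
            self.edges[(state, action)].add(effect)

    def is_consistent(self, transition: Transition) -> bool:
        """True if this effect was already recorded for the (state, action) pair."""
        state, action, effect = transition
        # .get() avoids inserting an empty entry into the defaultdict.
        return effect in self.edges.get((state, action), set())

    def detect_novelty(self, transitions: List[Transition]) -> List[Transition]:
        """Return every observed transition the learned rules do not predict."""
        return [t for t in transitions if not self.is_consistent(t)]


# Learn from play under the standard rules (hypothetical symbols).
graph = RuleGraph()
graph.observe([
    ("go", "pass", "collect_200"),
    ("free_parking", "land_on", "no_effect"),
])

# Later, a house rule pays out a pot of fines on Free Parking.
new_game = [
    ("go", "pass", "collect_200"),
    ("free_parking", "land_on", "collect_fines_pot"),
]
print(graph.detect_novelty(new_game))
# [('free_parking', 'land_on', 'collect_fines_pot')]
```

This check has a limitation. A (state, action) pair the agent never explored is flagged the same way as a genuine rule change. A fuller system would need to tell “unfamiliar” apart from “contradicts what I know” before deciding to adapt the policy.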