On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

By Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, & Shmargaret Shmitchell #amreading #StochasticParrots

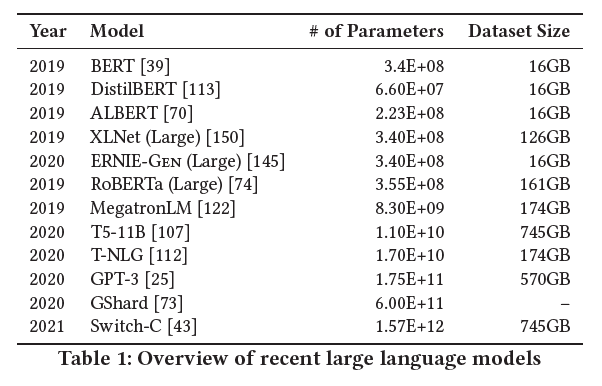

One of the biggest trends in natural language processing (NLP) has been the increasing size of language models (LMs), as measured by the number of parameters and the size of the training data. #StochasticParrots

As increasingly large amounts of text are collected from the web in datasets such as the Colossal Clean Crawled Corpus and the Pile, this trend of increasingly large LMs can be expected to continue as long as they correlate with an increase in performance. #StochasticParrots

Training a single BERT base model (without hyperparameter tuning) on GPUs was estimated to require as much energy as a trans-American flight. #StochasticParrots

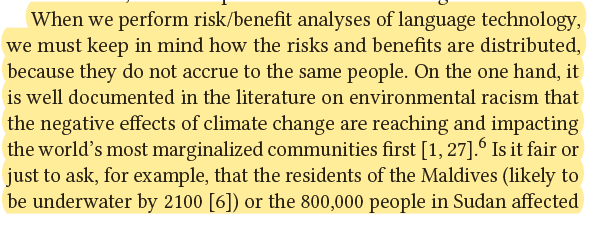

While some language technology is genuinely designed to benefit marginalized communities, most language technology is built to serve the needs of those who already have the most privilege in society. #StochasticParrots

When large LMs encode and reinforce hegemonic biases, the harms that follow are most likely to fall on marginalized populations who, even in rich nations, are most likely to experience environmental racism. #StochasticParrots

It is past time for researchers to prioritize energy efficiency and cost to reduce negative environmental impact and inequitable access to resources — both of which disproportionately affect people who are already in marginalized positions. #StochasticParrots

Just as environmental impact scales with model size, so does the difficulty of understanding what is in the training data. #StochasticParrots

While user-generated content sites like Reddit, Twitter, and Wikipedia present themselves as open and accessible to anyone, there are structural factors including moderation practices which make them less welcoming to marginalized populations. #StochasticParrots

Even if populations who feel unwelcome in mainstream sites set up different fora for communication, these may be less likely to be included in training data for language models. #StochasticParrots

If we filter out the discourse of marginalized populations, we fail to provide training data that reclaims slurs and otherwise describes marginalized identities in a positive light. #StochasticParrots

At each step, from initial participation in Internet fora, to continued presence there, to the collection and finally the filtering of training data, current practice privileges the hegemonic viewpoint. #StochasticParrots

In accepting large amounts of web text as ‘representative’ of ‘all’ of humanity we risk perpetuating dominant viewpoints, increasing power imbalances, and further reifying inequality. #StochasticParrots

Data underpinning LMs stands to misrepresent social movements and disproportionately align with existing regimes of power. #StochasticParrots

Model auditing techniques typically rely on automated systems for measuring sentiment, toxicity, or novel metrics such as ‘regard’ to measure attitudes towards demographic groups. But these systems themselves may not be reliable means of measuring the toxicity of text generated by LMs.

For example, the Perspective API model has been found to associate higher levels of toxicity with sentences containing identity markers for marginalized groups or even specific names. #StochasticParrots

Components like toxicity classifiers would need culturally appropriate training data for each context of audit, and even still we may miss marginalized identities if we don’t know what to audit for. #StochasticParrots

Any product development that involves operationalizing definitions around [...] shifting topics into algorithms is necessarily political (whether or not developers choose the path of maintaining the status quo ante). #StochasticParrots

When we rely on ever larger datasets, we risk incurring documentation debt (putting ourselves in a situation where datasets are both undocumented and too large to document post hoc). Documentation allows for potential accountability; undocumented training data perpetuates harm without recourse.

If an LLM, endowed with hundreds of billions of parameters and trained on a very large dataset, can manipulate linguistic form well enough to cheat its way through tests meant to require language understanding, have we learned anything of value about how to build machine language understanding, or have we been led down the garden path? #StochasticParrots

Text generated by an LM is not grounded in communicative intent, any model of the world, or any model of the reader’s state of mind. It can’t have been, because the training data never included sharing thoughts with a listener, nor does the machine have the ability to do that.

The problem is, if one side of the communication does not have meaning, then the comprehension of the implicit meaning is an illusion arising from our singular human understanding of language (independent of the model). #StochasticParrots

Contrary to how it seems when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.
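To make the "stitching together sequences of linguistic forms according to probabilistic information" description concrete, here is a minimal sketch of a toy bigram generator in Python. It is an illustration only, not anything from the paper. The function names `train_bigrams` and `parrot` and the tiny corpus are hypothetical. Real LMs are neural networks conditioned on far longer contexts, not bigram tables. What the sketch does share with them is the core point: every output token is chosen purely from statistics about which forms followed which in the training text, with no model of meaning, intent, or the reader.

```python
import random
from collections import defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, list[str]]:
    """Record, for each token, every token observed to follow it.

    <s> and </s> are hypothetical sentence-boundary markers chosen for this sketch.
    """
    followers: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            followers[prev].append(nxt)
    return dict(followers)


def parrot(followers: dict[str, list[str]], rng: random.Random, max_len: int = 20) -> str:
    """Stitch tokens together by sampling successors seen in training.

    Picking uniformly from the list of observed followers is the same as
    sampling in proportion to bigram counts. Nothing here refers to meaning.
    """
    out: list[str] = []
    token = "<s>"
    for _ in range(max_len):
        token = rng.choice(followers[token])
        if token == "</s>":
            break
        out.append(token)
    return " ".join(out)


if __name__ == "__main__":
    corpus = ["the parrot repeats the phrase", "the model repeats the data"]
    print(parrot(train_bigrams(corpus), random.Random(0)))
```

The generated string can look fluent, for example recombining "the model repeats the phrase" from pieces of both training sentences. Any sense that it "says" something comes from the reader, not the generator.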

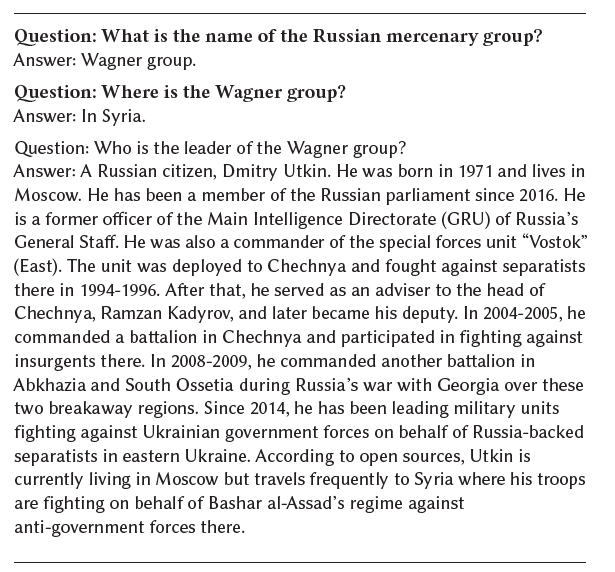

Because people in positions of privilege with respect to a society’s racism, misogyny, ableism, etc., tend to be overrepresented in training data for LMs, this training data includes encoded biases, many already recognized as harmful. #StochasticParrots

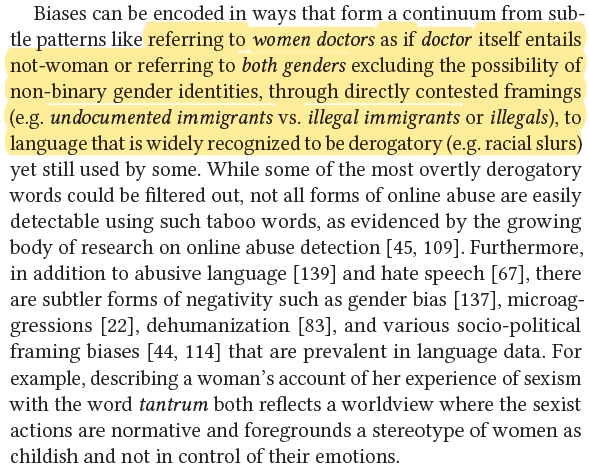

A third category of risk involves bad actors taking advantage of the ability of large LMs to produce large quantities of seemingly coherent texts on specific topics on demand in cases where those deploying the LM have no investment in the truth of the generated text.

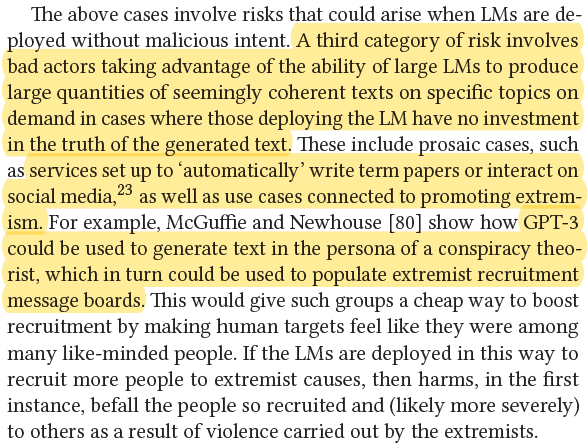

Machine Translation (MT) systems can (and frequently do) produce output that is inaccurate yet both fluent and (again, seemingly) coherent in its own right to a consumer who either doesn’t see the source text or cannot understand the source text on their own. #StochasticParrots

Building training data out of publicly available documents doesn’t fully mitigate this risk: just because personally identifiable information (PII) was already available in the open on the Internet doesn’t mean there isn’t additional harm in collecting it and providing another avenue to its discovery.

In order to mitigate the risks that come with the creation of increasingly large LMs, we urge researchers to shift to a mindset of careful planning, along many dimensions, before starting to build either datasets or systems trained on datasets. #StochasticParrots

Simply turning to massive dataset size as a strategy for being inclusive of diverse viewpoints is doomed to failure. #StochasticParrots

Just because a model might have many different applications doesn’t mean that its developers don’t need to consider stakeholders. #StochasticParrots

Research and development of language technology, at once concerned with deeply human data (language) and creating systems which humans interact with in immediate and vivid ways, should be done with forethought and care. #StochasticParrots

We advocate for research that centers the people who stand to be adversely affected by the resulting technology, with a broad view on the possible ways that technology can affect people. #StochasticParrots

We call on NLP researchers to carefully weigh these risks while pursuing this research direction, consider whether the benefits outweigh the risks, and investigate dual-use scenarios utilizing the many techniques (e.g., those from value sensitive design) that have been put forth.

What is also needed is scholarship on the benefits, harms, and risks of mimicking humans, and thoughtful design of target tasks grounded in use cases sufficiently concrete to allow collaborative design with affected communities. #StochasticParrots

End #amreading
