The three hard problems with GPT-3 — a thread

Anyone who’s ever tried @OpenAI’s #GPT3 model is guaranteed to have their mind blown. From writing fiction to generating code, its capabilities seem to come straight out of sci-fi movies.

But this is not a thread of praise.

There are three very real issues with #GPT3. Worst of all, they are intrinsic to the model, so they’re not something you can easily fix. What you can do, though, is be aware of them and mitigate their negative consequences.

So here we go:

1. Memories. #GPT3’s latest “memories” are from mid-2018. It might not be a problem if you are using it for “timeless” stuff (such as pondering over the meaning of life or coming up with business advice), but if your use case is dependent on recent events, you might get screwed.

Most notably, #GPT3 knows nothing about the #COVID19 pandemic, an event that has brought drastic changes to economies around the world. If you ask GPT-3, “what is a good business to invest in?”, it might well suggest $BKNG or $DAL, both travel stocks that the pandemic hit hard.

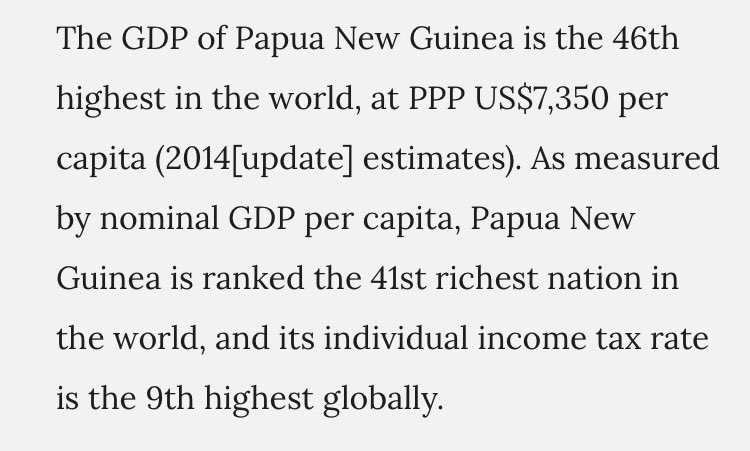

2. Facts & figures. #GPT3 knows some facts pretty well. It knows others not too well. But what sucks most is that it has no idea what it knows and what it doesn’t. If you ask it what the GDP of Papua New Guinea is, it may confidently give you a number that has nothing to do with reality.

So using #GPT3 as an expert tool to give geopolitical (or any other fact/number-based) advice might not be a good idea. What’s a good one? I’ll come to this at the end of the thread.

3. Specialist topics. As #GPT3 was trained on “broad” internets [citation needed], its knowledge of highly specialist topics is, to put it mildly, limited. For example, I work in the #localization industry, and the model knows next to nothing about it.

Here, again, #GPT3 does not realize how bad it is at any specific topic. It just autocompletes the hell out of anything, so if you research a specialist topic you’re not knowledgeable about, you may end up with logical-sounding gibberish and more trouble down the road.

# Takeaways

So what can you do to avoid or mitigate the effect of these three flaws? Three broad suggestions:

1. Provide context. If your use case is dependent on recent events, at least make sure to prime the model with information about the #COVID19 pandemic and its effects.

2. Fact-check ALL factual information, or, even better, avoid it altogether. #GPT3 is not an expert tool, and using it as such is just like “hammering nails with a microscope,” as a Russian saying goes.

3. If working on a specialist use case, make sure to either BE a specialist or HIRE one: someone who will do a sanity check on #GPT3’s output. Otherwise you may fall victim to “false fluency,” an effect where logical-sounding output is actually nonsensical.
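For the code-minded, here is a rough sketch of what suggestions 1 and 2 could look like in practice. It is only an illustration, not an official recipe: the function names `build_primed_prompt` and `flag_numeric_claims` are made up for this example, the prompt layout is just one plausible format, and nothing here actually calls GPT-3. The first helper puts recent facts in front of your question; the second pulls every number out of the model's answer so a human can check each one.

```python
import re
from typing import List


def build_primed_prompt(context_facts: List[str], question: str) -> str:
    """Prepend facts the model cannot know to the actual question.

    The layout (a bulleted background section, then Q:/A:) is an
    assumption for illustration, not a required prompt format.
    """
    lines = ["Background the answer must take into account:"]
    lines += [f"- {fact}" for fact in context_facts]
    lines += ["", f"Q: {question}", "A:"]
    return "\n".join(lines)


# Matches integers and decimals with optional thousands separators,
# an optional leading "$" and an optional trailing "%".
_NUMBER = re.compile(r"\$?\d+(?:,\d{3})*(?:\.\d+)?%?")


def flag_numeric_claims(model_output: str) -> List[str]:
    """Return every number-like token so a human can fact-check it.

    This does not verify anything; it only builds the checklist.
    """
    return _NUMBER.findall(model_output)


if __name__ == "__main__":
    prompt = build_primed_prompt(
        ["A global COVID-19 pandemic began in early 2020 and sharply reduced travel."],
        "What is a good business to invest in?",
    )
    print(prompt)

    # Placeholder text standing in for a model answer, not real output.
    print(flag_numeric_claims("It grew 4% to $23.6 billion in 2019."))
```

The point of the second helper is to be deliberately dumb: it does not try to judge which figures are right, it just makes sure none of them slips past a human reviewer.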

All in all, and ironically, #GPT3 works best for creative tasks: @copy_ai and @ShortlyAI are good proof of this. It can do wonders for brainstorming too. Just avoid relying on it as some all-knowing Oracle, and it’ll serve you well.

That’s all, folks

Oh, and if you liked it pls engage in any way that’s comfortable for you. Thx!

