Since there was interest in how I addressed this question, here's a thread on what I said in class. TL;DR: if you're operating under certain narrow assumptions about what data analysis is, maybe you should worry about looking at data too much. But usually it's better not to worry. 1/ https://twitter.com/JessicaHullman/status/1355711415420661765

We had already talked a lot about Tukey's ideas on exposing data to display the unanticipated, & about how starting with simple, direct plots and then gradually adding complexity to test expectations is a natural way to do visual analysis. 2/

So I started by talking about how there is sometimes a desire to treat EDA as something that should be sharply distinguished from whatever confirmatory analysis follows, where EDA is model-free and CDA is exploration-free, and we don't want the two mixing. 3/

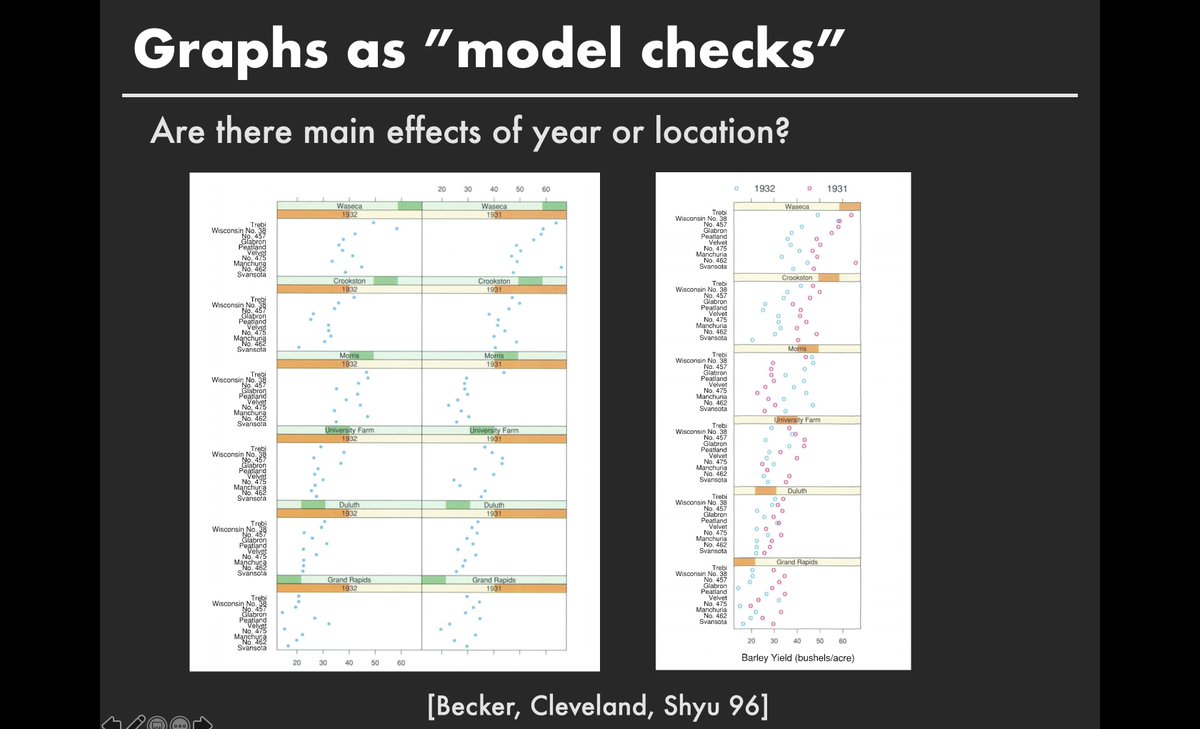

But this can be an unhelpful distinction. First, it ignores the natural overlap between modeling and exploration. A good plot created during visual exploration can be a model in itself. E.g., a trellis plot can help one examine main effects. 4/

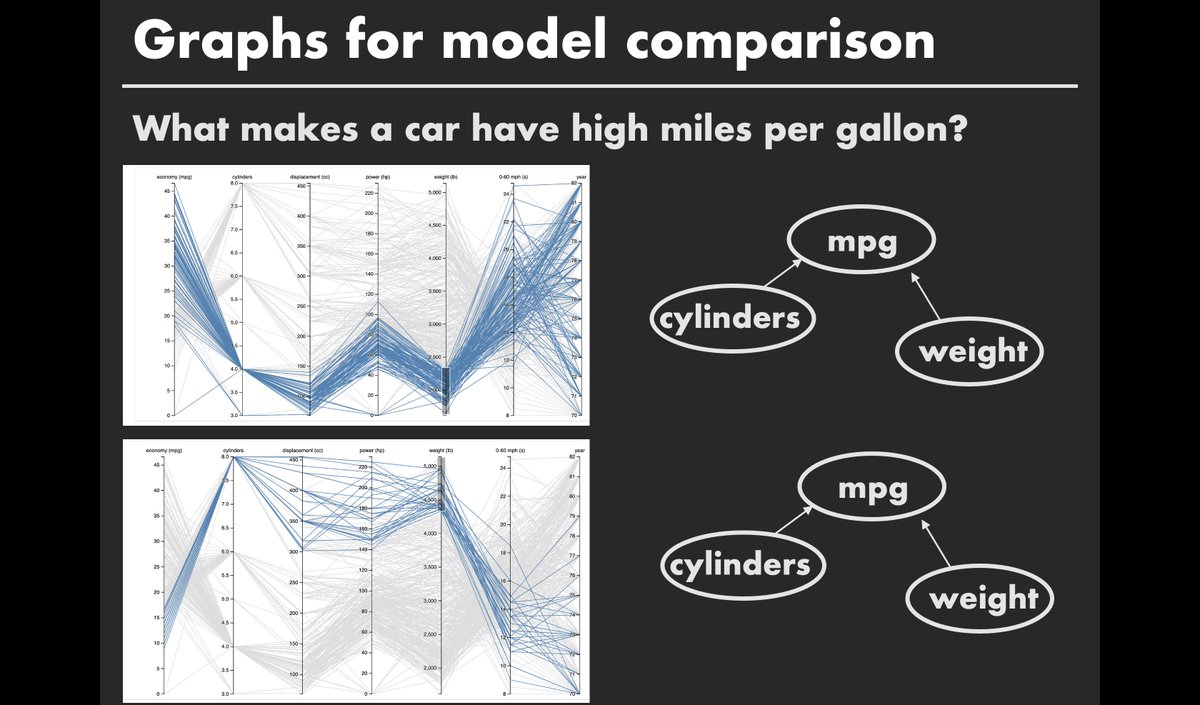
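A minimal sketch of why a trellis plot can act like a model, assuming made-up data and hypothetical function names: a plot of an outcome by condition, faceted into panels by a grouping variable, displays the cell means computed below. If the within-panel difference is the same in every panel, the panels differ only by a shift, which is what a main-effects-only model would say.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical data: (panel, condition, outcome). Values are made up for illustration.
ROWS = [
    ("north", "control", 10.0), ("north", "control", 12.0),
    ("north", "treated", 15.0), ("north", "treated", 17.0),
    ("south", "control", 20.0), ("south", "control", 22.0),
    ("south", "treated", 25.0), ("south", "treated", 27.0),
]

def cell_means(rows):
    """Mean outcome for each (panel, condition) cell, i.e. what each trellis panel shows."""
    cells = defaultdict(list)
    for panel, condition, y in rows:
        cells[(panel, condition)].append(y)
    return {key: mean(values) for key, values in cells.items()}

def within_panel_effects(means, baseline="control", other="treated"):
    """Difference between two conditions inside each panel (hypothetical helper)."""
    panels = sorted({panel for panel, _ in means})
    return {p: means[(p, other)] - means[(p, baseline)] for p in panels}

if __name__ == "__main__":
    means = cell_means(ROWS)
    print(within_panel_effects(means))  # {'north': 5.0, 'south': 5.0}
```

Equal within-panel effects (5.0 in both panels) plus a constant 10.0 shift between panels is the pattern a reader would see as parallel panels, without fitting anything formally.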

Another example I gave is when we use interactive visualizations to do "casual" causal inference (a topic @AlexKale17 is currently thinking about w/ me and @yifanwu, & which some visual analytics research has addressed). 5/

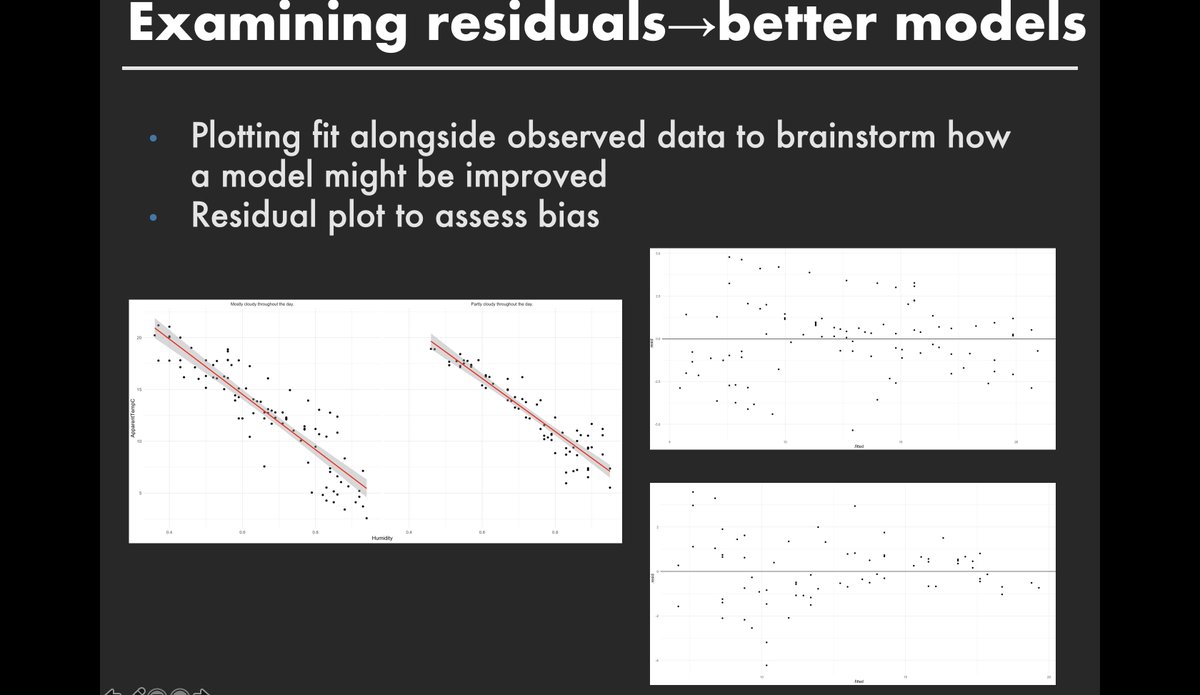

The typical EDA-then-CDA view also treats CDA as the end of the pipeline, but sometimes, such as when looking at plots of a model fit, it's better thought of as a "new beginning", e.g., useful for brainstorming a revised model that will fit the data better. 6/

So ideally there IS overlap & iteration between stages in the analysis pipeline, from data diagnostics to exploratory visual analysis to formal or informal modeling. Adapting models to data is often a good idea. Tukey himself spoke of the iterative nature of exposing & summarizing. 7/

But can overlap between exploratory visual analysis and confirmatory analysis procedures sometimes manifest as fishing for results? E.g., if we visually analyze data to find significant-seeming differences, then confirm/report those, do we have a multiple comparisons problem? 8/
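To make the multiple comparisons worry concrete, here is a small simulation sketch using only the Python standard library. It assumes a large-sample z-test as a stand-in for whatever test an analyst would actually run, and the function names are hypothetical. Every comparison is between two groups drawn from the same distribution, so each "significant" result is a false positive, and the chance of getting at least one grows quickly with the number of comparisons.

```python
import random
import statistics
from statistics import NormalDist

def two_sample_z_pvalue(a, b):
    """Two-sided p-value for a difference in means (large-sample z approximation)."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    z = (statistics.mean(a) - statistics.mean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def fish_for_differences(n_comparisons, n_per_group=30, alpha=0.05, seed=0):
    """Run many comparisons where the null is TRUE; count how many look 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_comparisons):
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sample_z_pvalue(a, b) < alpha:
            hits += 1
    return hits

def familywise_error(n_comparisons, alpha=0.05):
    """P(at least one false positive) across independent tests of true nulls."""
    return 1 - (1 - alpha) ** n_comparisons
```

With 1,000 comparisons at alpha = 0.05, `fish_for_differences(1000)` should return something near 50 (a little more, since the z approximation is slightly liberal at 30 per group), and `familywise_error(20)` is about 0.64: twenty looks at pure noise will usually turn up at least one "finding".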

Too much overlap is one proposed reason for the replication crisis in psych, where researchers' ability to make lots of comparisons and then report only the significant ones has been discussed. Should we be careful about overexamining data whenever we want to report on it? 9/

This can be a problem, but it generally occurs under a relatively narrow set of scenarios: you don't know much before analysis, so you have to discover & confirm on the same data (i.e., it's hard to get more); you worry about Type 1 error; & you don't want to adjust point estimates. 10/
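One way to see why discovering & confirming on the same data is the risky case: a sketch assuming every group has the same true mean of zero and that the analyst picks whichever group looks largest. Estimated on the data used to pick it, the "winner" looks like a real effect; re-estimated on a held-out half, it falls back toward zero. The function name and parameters are hypothetical.

```python
import random
from statistics import mean

def winner_estimates(n_groups=10, n_per_group=40, n_reps=500, seed=1):
    """Average estimate for the 'most interesting' group, on explore vs. held-out data.

    Every group is pure noise (true mean 0), so an honest estimate should be near 0.
    """
    rng = random.Random(seed)
    half = n_per_group // 2
    explore_estimates, confirm_estimates = [], []
    for _ in range(n_reps):
        groups = [[rng.gauss(0, 1) for _ in range(n_per_group)] for _ in range(n_groups)]
        # Split each group's observations into an exploration half and a confirmation half.
        explore_means = [mean(g[:half]) for g in groups]
        confirm_means = [mean(g[half:]) for g in groups]
        # "Look at the data": pick the group with the largest exploration mean.
        winner = max(range(n_groups), key=explore_means.__getitem__)
        explore_estimates.append(explore_means[winner])
        confirm_estimates.append(confirm_means[winner])
    return mean(explore_estimates), mean(confirm_estimates)

if __name__ == "__main__":
    on_same_data, on_held_out = winner_estimates()
    print(f"winner, same data: {on_same_data:.2f}; winner, held-out: {on_held_out:.2f}")
```

With these settings the first number should sit well above zero (roughly a third of a standard deviation, the expected maximum of ten noisy means), while the second hovers near zero. The inflation only bites when the selection and the estimate use the same observations.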

Rather than simply not looking too much at data, there are better ways to address the need to condition your tests on prior activity, like multilevel modeling to shrink estimates away from extremes, splitting data into train/test sets, & vis-specific regularization. 11/
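A rough sketch of the shrinkage idea. This is not a full multilevel model; it is a simple empirical Bayes approximation (a normal-normal model with a method-of-moments estimate of the between-group variance), with a hypothetical function name, assuming each group's estimate comes with a known, positive standard error. Extreme, noisy estimates get pulled toward the grand mean, so a difference found by browsing many groups is discounted automatically.

```python
from statistics import mean, variance

def partial_pool(estimates, std_errors):
    """Shrink group estimates toward their grand mean (normal-normal empirical Bayes).

    Assumes at least two groups and strictly positive standard errors.
    """
    grand_mean = mean(estimates)
    sampling_var = mean(se ** 2 for se in std_errors)
    # Method-of-moments between-group variance (exact in expectation for equal SEs,
    # approximate otherwise); floored at 0 when noise alone explains the spread.
    tau2 = max(0.0, variance(estimates) - sampling_var)
    shrunk = []
    for y, se in zip(estimates, std_errors):
        weight = tau2 / (tau2 + se ** 2)  # 1 = keep the raw estimate, 0 = use the grand mean
        shrunk.append(grand_mean + weight * (y - grand_mean))
    return shrunk
```

For example, `partial_pool([2.0, 0.0, -2.0, 4.0, -4.0], [1.0] * 5)` keeps 90% of each deviation from the grand mean, while `partial_pool([1.0, -1.0], [5.0, 5.0])` pools both groups all the way to 0.0, because noise of that size could easily produce the observed spread on its own.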

Slides & class time were rushed, so I know this could be improved! I plan to create an interactive analysis class eventually. Also, for more polished thoughts on why blanket warnings about looking at data too much are silly, see @zerdeve @djnavarro et al. (claim 2): https://www.biorxiv.org/content/10.1101/2020.04.26.048306v2.full
