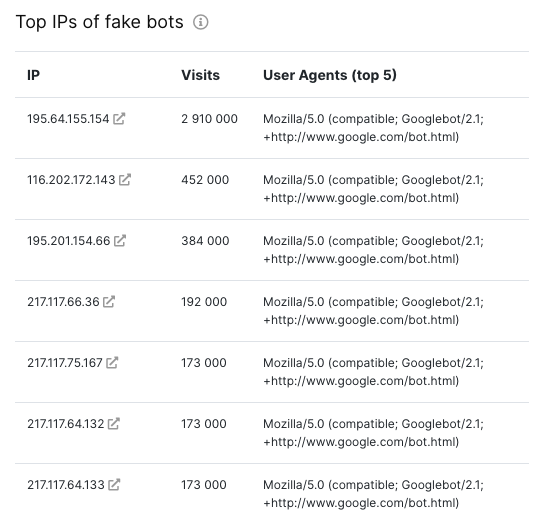

Fake bots are bots that use the user agent of Googlebot or other search bots but fail the reverse DNS check described in Google's documentation: https://developers.google.com/search/docs/advanced/crawling/verifying-googlebot
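The check can be sketched in a few lines of Python. This is a minimal illustration, not a production tool: the function name `is_verified_googlebot` and the injectable `reverse_lookup` / `forward_lookup` parameters are our own assumptions, added so the logic can be tried without network access. The suffix list follows the domains named in Google's documentation and should be checked against the current version of that page.

```python
import socket

# Reverse DNS suffixes that Google's documentation lists for its crawlers
# (verify against the current documentation before relying on this list).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Return True if `ip` passes the reverse DNS + forward DNS check.

    `reverse_lookup` and `forward_lookup` are hypothetical hooks for testing;
    by default the standard-library resolvers are used (IPv4 only for the
    forward lookup, since socket.gethostbyname_ex does not support IPv6).
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        # Step 1: reverse DNS, the IP must resolve to a Google-owned hostname.
        host = reverse_lookup(ip).rstrip(".").lower()
        if not host.endswith(GOOGLE_SUFFIXES):
            return False
        # Step 2: forward DNS, the hostname must resolve back to the same IP.
        return ip in forward_lookup(host)
    except OSError:
        # socket.herror and socket.gaierror are subclasses of OSError:
        # treat any failed lookup as "not verified".
        return False
```

A request whose user agent claims to be Googlebot but for which `is_verified_googlebot(request_ip)` returns `False` would fall into the "fake bot" group described above.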

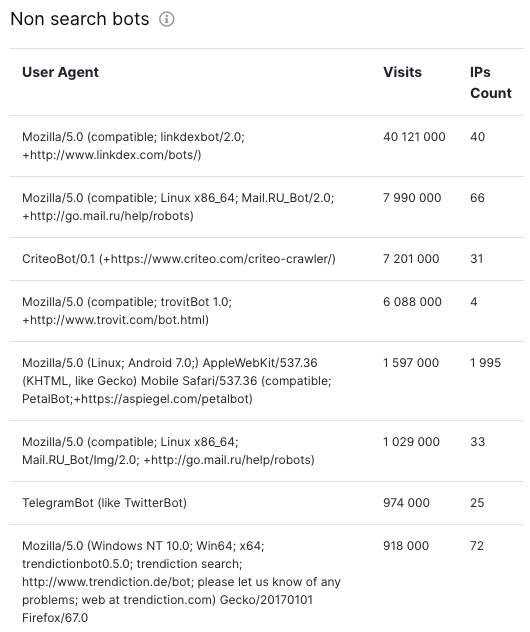

Scrapers are bots with their own user agent that crawl your site for purposes such as page analysis, price analysis, or content theft.

So what should you do with scrapers? It depends on your situation: if such bots make few requests, just ignore them and don't block anything.
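To judge how many requests such bots actually make, you can count requests per user agent in your access logs. The sketch below assumes the common "combined" log format, where the user agent is the last quoted field on each line; the function name `user_agent_shares` is hypothetical, and other log formats would need a different pattern.

```python
import re
from collections import Counter

# Assumption: combined log format, user agent is the last quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def user_agent_shares(log_lines):
    """Return {user_agent: share of parsed requests}, largest share first."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if match:  # skip lines that do not match the assumed format
            counts[match.group(1)] += 1
    total = sum(counts.values())
    if not total:
        return {}
    return {ua: n / total for ua, n in counts.most_common()}
```

Passing an open log file (for example `user_agent_shares(open("access.log"))`, with a path of your own) gives a quick view of which user agents dominate the traffic.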

Often, though, such bots put a significant load on the server. For example, in the e-commerce niche, we have seen cases where scrapers accounted for 50% of traffic.

In this case, you can block them by IP or subnet. In our experience, you need to do this very carefully and check the Whois record for each IP.

We have also seen cases where blocking a hosting provider's subnet cut off access to the site for a large part of a city.
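Before blocking a subnet, it helps to see how much address space it covers and whether it would catch visitors you know to be legitimate. The following is a rough sketch using Python's standard `ipaddress` module; the function name `describe_block` and the `known_good_ips` list are hypothetical, and the list would have to come from your own logs or analytics.

```python
import ipaddress


def describe_block(cidr, known_good_ips=()):
    """Summarize what blocking `cidr` would cover before applying it."""
    # strict=False accepts an address with host bits set, e.g. 203.0.113.77/24.
    network = ipaddress.ip_network(cidr, strict=False)
    # Known-good visitor IPs that the block would also cut off.
    caught = [ip for ip in known_good_ips if ipaddress.ip_address(ip) in network]
    return {
        "network": str(network),
        "addresses": network.num_addresses,
        "known_good_caught": caught,
    }
```

A large `addresses` count or a non-empty `known_good_caught` list is a signal to narrow the range or block individual IPs instead. This does not replace the Whois check; it only shows the size of what you are about to block.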

Recently, a client also told us that he had blocked IPs that, according to Whois, were not related to Google, yet he still received messages from GSC saying that the site's pages were unavailable. We will analyze this case and report the results.

Read on Twitter