A short thread:

It amazes me how many crucial ideas underlying now-popular semiparametrics (aka doubly robust parameter/functional estimation / TMLE / double/debiased/orthogonal ML etc etc) were first proposed many decades ago.

I think this is widely under-appreciated!

Here’s a (now 35 yr old) book review where Bickel gives some history: https://projecteuclid.org/euclid.aos/1176346530

A more detailed discussion of the early days is in the comprehensive semiparametrics text by Bickel, Klaassen, Ritov & Wellner (BKRW):

https://www.springer.com/gp/book/9780387984735

Would love to hear if others know good historical resources!

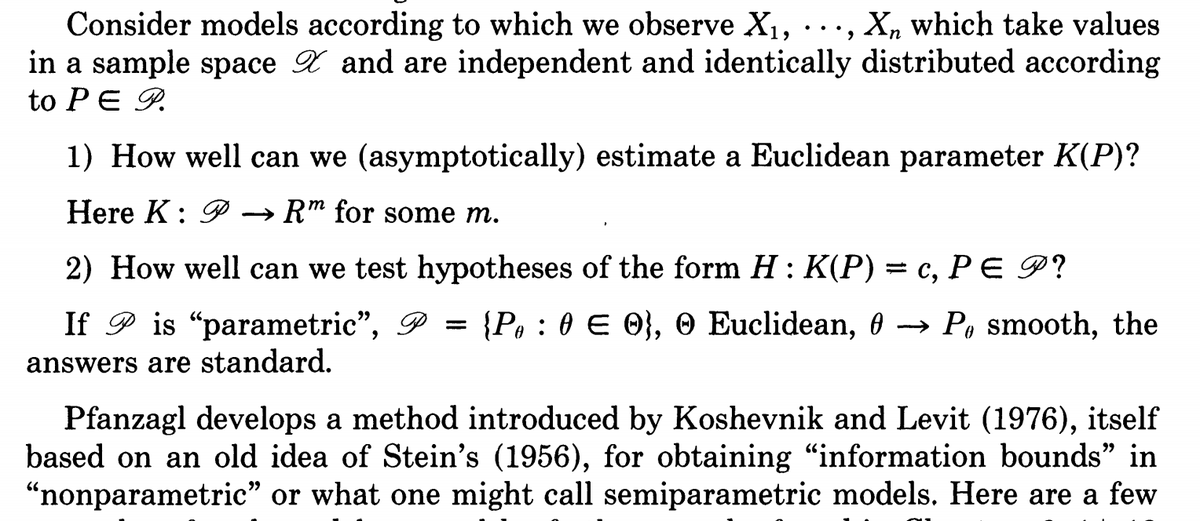

For lower bounds & optimality:

65 yrs ago Stein laid the groundwork for adapting classic Cramér-Rao bounds to the messy nonparametric world:

https://twitter.com/edwardhkennedy/status/1121269311027535873?s=20

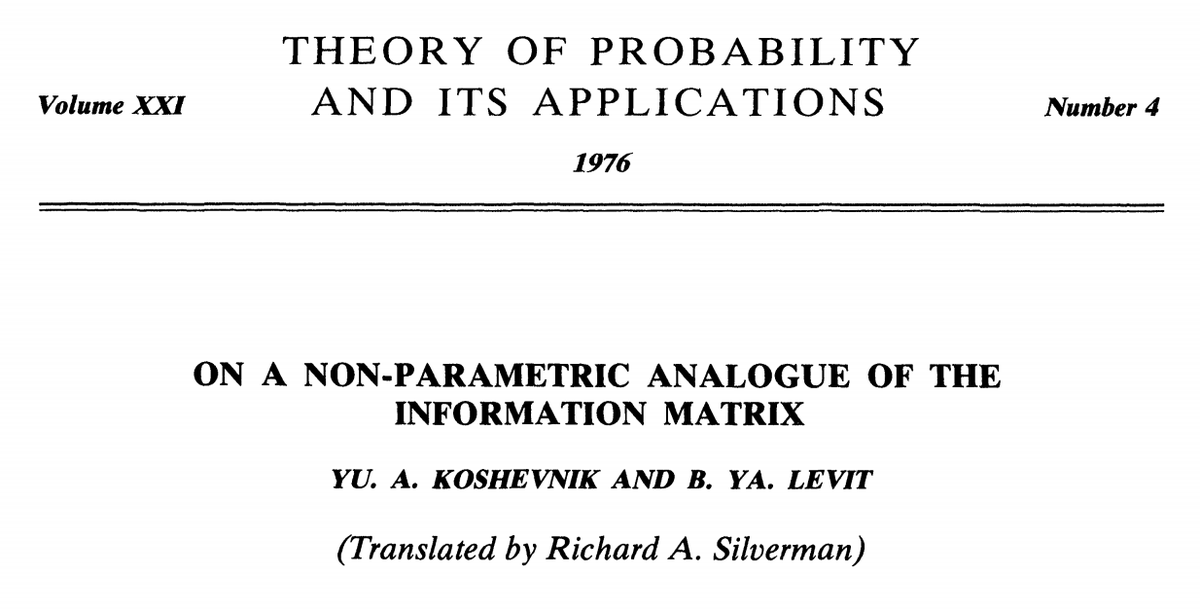

This was extensively developed & generalized in the 70s/80s by Hasminskii, Ibragimov, Koshevnik, Levit, Pfanzagl, etc
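For intuition, here's the idea in modern notation (mine, not Stein's): a nonparametric problem is at least as hard as any smooth one-dimensional parametric submodel {P_t} through P, so the bound is the worst-case Cramér-Rao bound over such submodels. Writing s for a submodel's score at t = 0 and φ for the influence function of a pathwise differentiable ψ, a sketch of the calculation under standard regularity conditions:

```latex
\sup_{\{P_t\}} \frac{\big(\tfrac{d}{dt}\psi(P_t)\big|_{t=0}\big)^2}{\mathbb{E}_P[s(Z)^2]}
  = \sup_{s} \frac{\big(\mathbb{E}_P[\varphi(Z)\,s(Z)]\big)^2}{\mathbb{E}_P[s(Z)^2]}
  = \mathbb{E}_P[\varphi(Z)^2]
  = \operatorname{Var}_P\{\varphi(Z)\}
```

The first step is the definition of pathwise differentiability, the second is Cauchy-Schwarz (attained at s ∝ φ, which is an allowed score in a nonparametric model), and the last uses E_P[φ(Z)] = 0.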

Next I'll show parts of a 1982 text by Johann Pfanzagl, which will look very familiar to people in double ML, TMLE, etc

https://www.springer.com/gp/book/9780387907765

(Pfanzagl's typesetting is horrible, but let's push thru)

(Also at times I'll contrast a modern treatment in

https://projecteuclid.org/euclid.ss/1421330553)

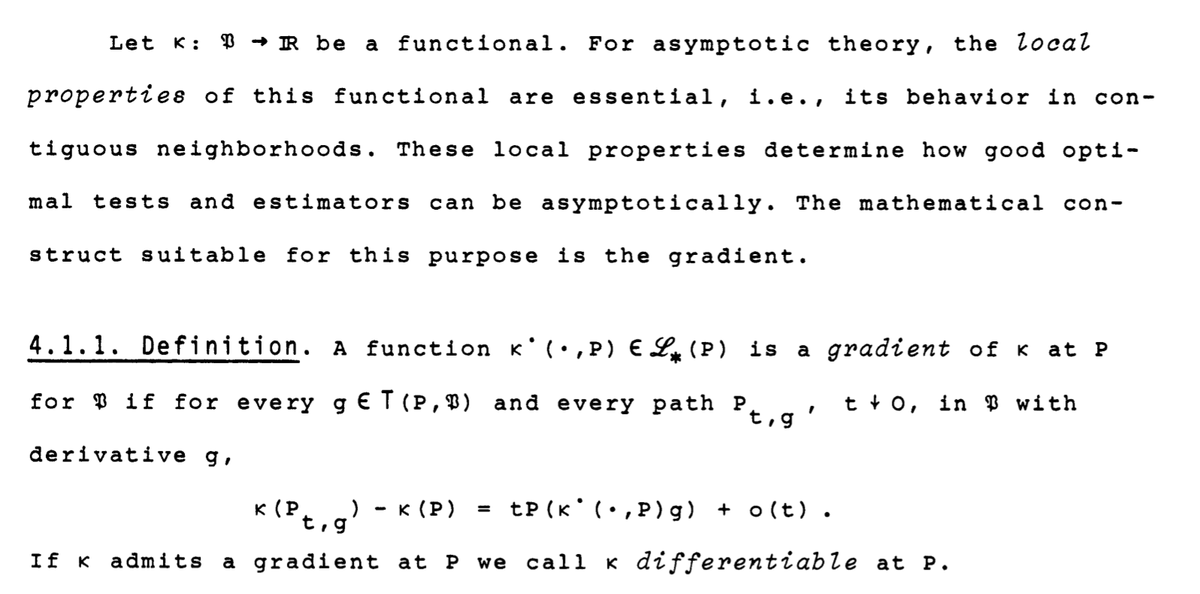

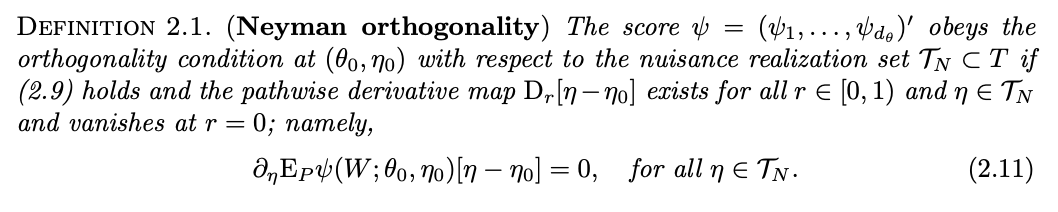

Here’s Pfanzagl on the gradient of a functional/parameter, aka derivative term in a von Mises expansion, aka influence function, aka Neyman-orthogonal score

Richard von Mises first characterized smoothness this way for stats in the 30s/40s! eg:

https://projecteuclid.org/euclid.aoms/1177730385

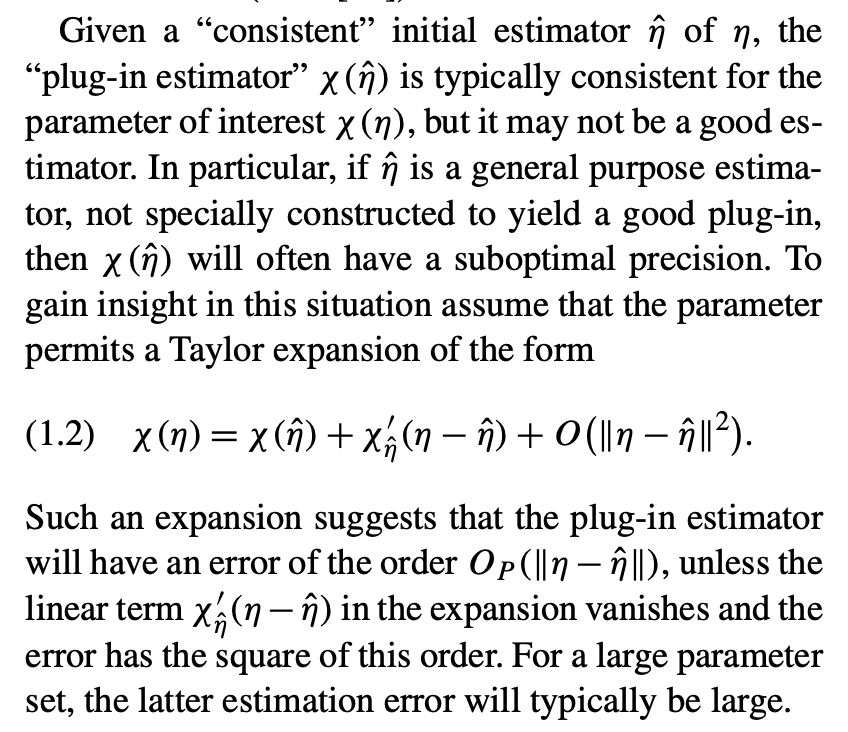

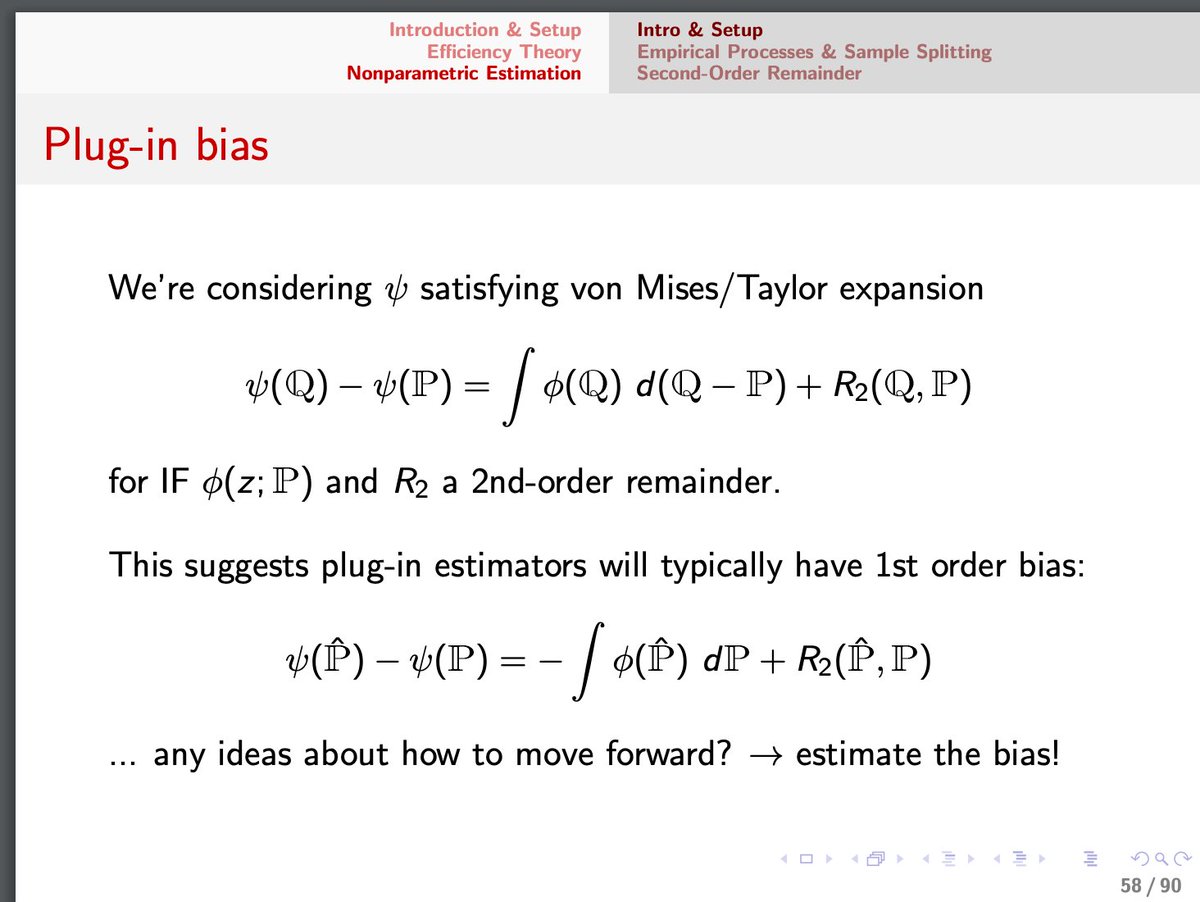
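A quick numerical way to see the "derivative" in influence function: perturb P toward a point mass at z and differentiate t -> ψ((1 - t)P + tδ_z) at t = 0 (the Gateaux derivative, i.e. the von Mises / Hampel influence-curve view). Below is a minimal numpy sketch, assuming ψ is the variance functional (my choice of example, not Pfanzagl's) and P is an empirical distribution; the function names are made up for illustration. The finite difference should match the known influence function (z - μ)² - σ².

```python
import numpy as np

def variance_functional(values, weights):
    """psi(P) = Var_P(Y) for a discrete distribution with atoms `values` and masses `weights`."""
    mean = np.sum(weights * values)
    return np.sum(weights * (values - mean) ** 2)

def gateaux_derivative(values, weights, z, t=1e-6):
    """Difference quotient of t -> psi((1 - t) P + t * delta_z) at t = 0."""
    # Move mass t from P onto the point z, then compare psi before and after.
    bumped = variance_functional(np.append(values, z), np.append((1 - t) * weights, t))
    return (bumped - variance_functional(values, weights)) / t

rng = np.random.default_rng(0)
y = rng.normal(loc=1.0, scale=2.0, size=500)
w = np.full(y.size, 1.0 / y.size)  # empirical distribution P_n

mu, sigma2 = y.mean(), y.var()  # np.var uses ddof=0, i.e. the variance under P_n
for z in [-3.0, 0.5, 4.0]:
    numeric = gateaux_derivative(y, w, z)
    analytic = (z - mu) ** 2 - sigma2  # influence function of the variance at P_n
    print(f"z = {z:+.1f}   numeric = {numeric:.4f}   analytic = {analytic:.4f}")
```

For this ψ the difference quotient works out to exactly (1 - t)(z - μ)² - σ², so with t = 1e-6 the two columns agree to several decimal places.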

Pfanzagl uses pathwise differentiability above, but w/ regularity conditions this is just a distributional Taylor expansion, which is easier to think about

I note this in my tutorial here:

https://twitter.com/edwardhkennedy/status/1045652861605097476?s=20

Also v related to @VC31415 orthogonality - worth a separate thread

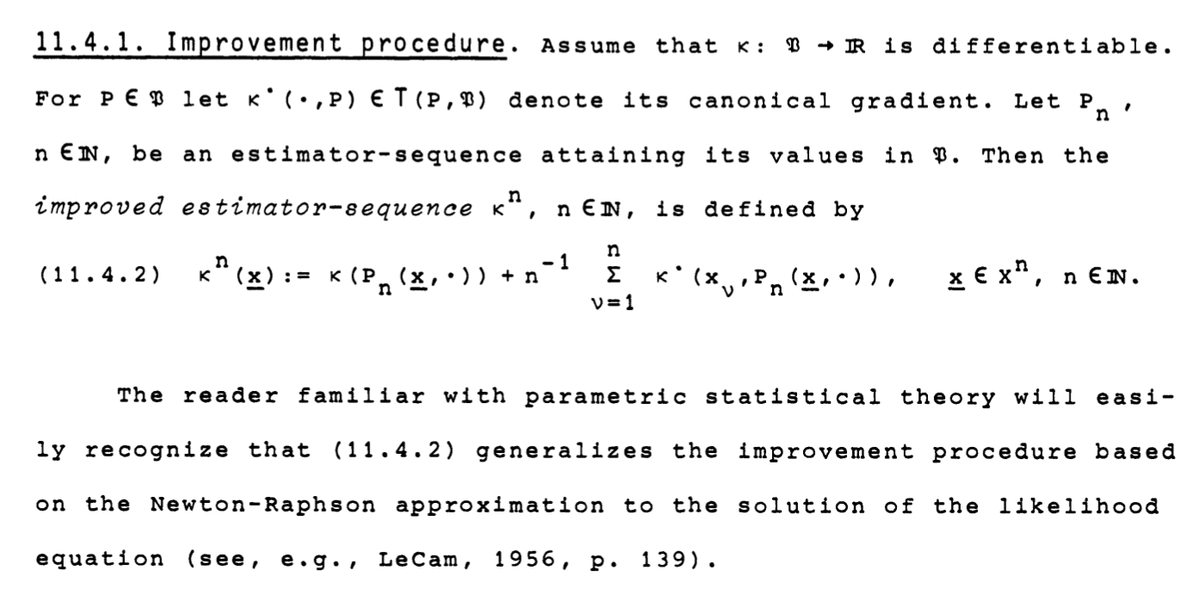

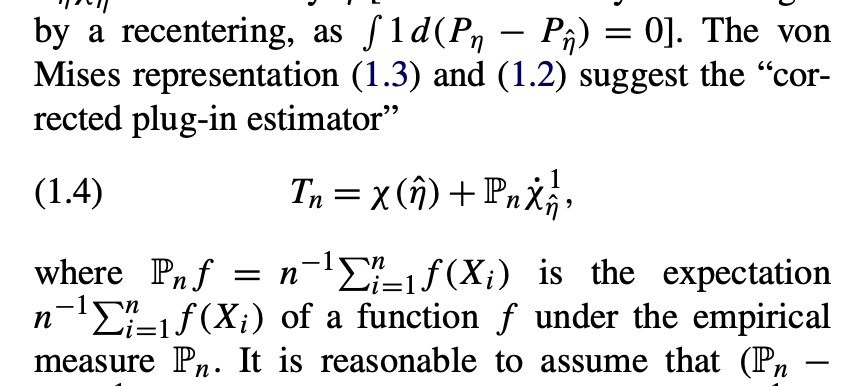

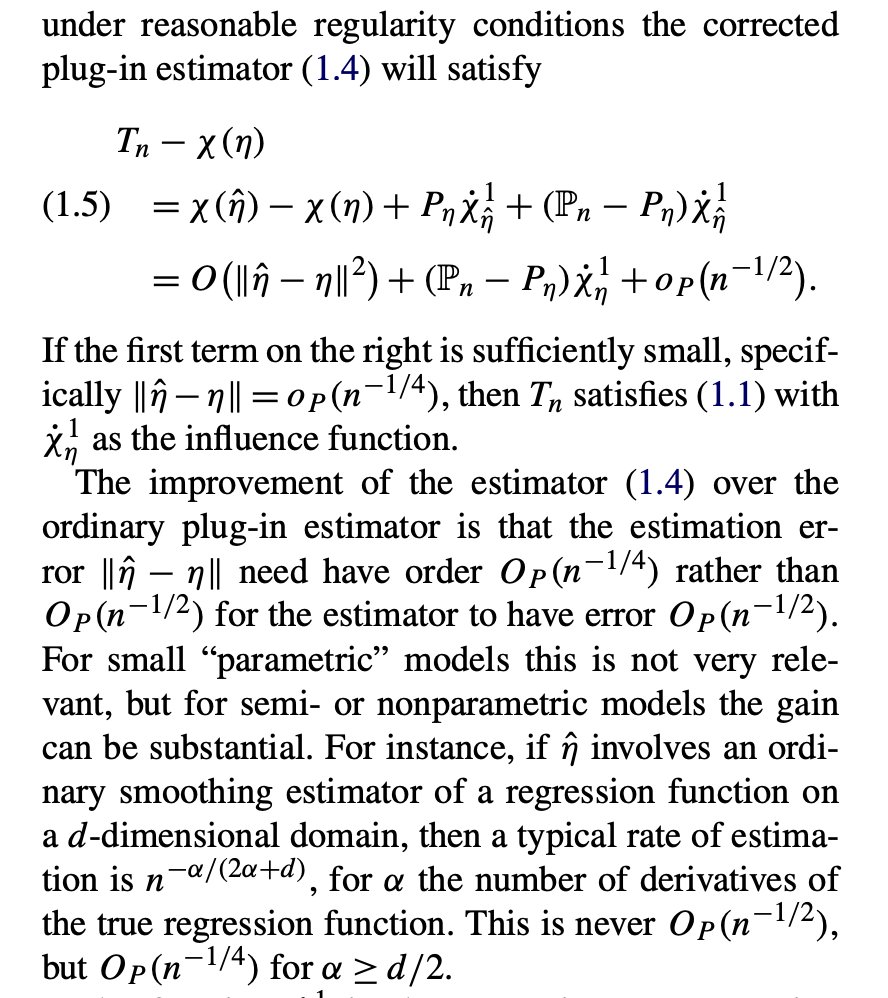
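Spelled out, one common way to write this distributional Taylor (von Mises) expansion, with φ(z; P̄) the influence function at P̄ and R_2 a second-order remainder, is:

```latex
\psi(\bar{P}) - \psi(P)
  = \int \varphi(z; \bar{P})\, d(\bar{P} - P)(z) + R_2(\bar{P}, P)
  = -\int \varphi(z; \bar{P})\, dP(z) + R_2(\bar{P}, P)
```

The second line uses that the influence function has mean zero under its own distribution, ∫ φ(z; P̄) dP̄(z) = 0. Plugging in an estimate P̂ for P̄, the first-order bias of the plug-in ψ(P̂) is -∫ φ(z; P̂) dP(z), which is what a one-step correction estimates.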

Once you have a pathwise differentiable parameter, a natural estimator is a debiased plug-in, which adds the sample avg of the estimated influence fn to the plug-in (i.e. subtracts off an estimate of the plug-in's first-order bias)

Pfanzagl gives this 1-step estimator here - in causal inference this is exactly the doubly robust / DML estimator you know & love!
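To make the one-step idea concrete for the average treatment effect ψ = E[μ1(X) - μ0(X)], here's a minimal numpy sketch (my own, not from Pfanzagl). Assumptions: binary treatment A, covariates X, outcome Y, no unmeasured confounding and positivity; the nuisances μa(x) = E[Y | X = x, A = a] and π(x) = P(A = 1 | X = x) are fit with plain linear/logistic regressions only to keep it dependency-free (in practice you'd plug in flexible ML); all function names and the simulated data are hypothetical.

```python
import numpy as np

def _design(X):
    """Prepend an intercept column."""
    return np.column_stack([np.ones(len(X)), X])

def fit_linear(X, y):
    """OLS fit; returns a function x -> predicted E[Y | X = x]."""
    coef, *_ = np.linalg.lstsq(_design(X), y, rcond=None)
    return lambda X_new: _design(X_new) @ coef

def fit_logistic(X, a, n_iter=25):
    """Logistic regression by Newton-Raphson; returns a function x -> P(A = 1 | X = x)."""
    D = _design(X)
    beta = np.zeros(D.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-D @ beta))
        grad = D.T @ (a - p)                          # score of the log-likelihood
        hess = D.T @ (D * (p * (1.0 - p))[:, None])   # negative Hessian
        beta += np.linalg.solve(hess, grad)
    return lambda X_new: 1.0 / (1.0 + np.exp(-_design(X_new) @ beta))

def one_step_ate(X, a, y):
    """One-step (AIPW / doubly robust) estimate of E[Y(1) - Y(0)] with a standard error."""
    mu1 = fit_linear(X[a == 1], y[a == 1])(X)   # estimate of E[Y | X, A = 1]
    mu0 = fit_linear(X[a == 0], y[a == 0])(X)   # estimate of E[Y | X, A = 0]
    pi = fit_logistic(X, a)(X)                  # estimate of P(A = 1 | X)
    plug_in = np.mean(mu1 - mu0)
    # Sample average of the estimated influence function; its (mu1 - mu0 - plug_in)
    # part averages to exactly zero, so only the weighted-residual term remains.
    correction = np.mean(a / pi * (y - mu1) - (1 - a) / (1 - pi) * (y - mu0))
    estimate = plug_in + correction
    phi = mu1 - mu0 + a / pi * (y - mu1) - (1 - a) / (1 - pi) * (y - mu0) - estimate
    return estimate, np.std(phi) / np.sqrt(len(y))

# Hypothetical simulated data with a true ATE of 2.
rng = np.random.default_rng(1)
n = 5000
X = rng.normal(size=(n, 2))
a = rng.binomial(1, 1.0 / (1.0 + np.exp(-(0.5 * X[:, 0] - 0.25 * X[:, 1])))).astype(float)
y = 1.0 + 2.0 * a + X[:, 0] + 0.5 * X[:, 1] + rng.normal(size=n)
est, se = one_step_ate(X, a, y)
print(f"one-step ATE: {est:.3f} (SE {se:.3f})")
```

Because the averaged μ̂1 - μ̂0 - ψ(P̂) part of the estimated influence function is zero by construction, the one-step estimator collapses to plug-in + inverse-weighted residuals, i.e. the familiar AIPW formula.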

Pfanzagl also points out our prized n^(1/4) nuisance rate conditions, which allow fast rates & inference for the parameter of interest, even w/ flexible np smoothing / lasso etc

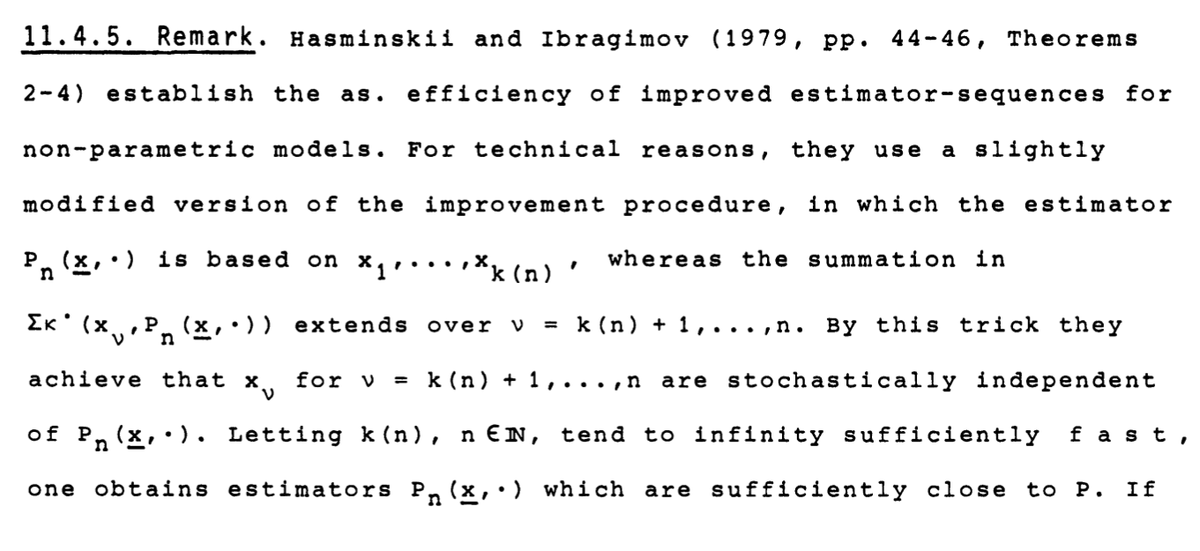
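Why n^(1/4)? A sketch for the simpler functional ψ = E[μ1(X)], whose influence function is φ(z; P) = μ1(x) + (a / π(x)){y - μ1(x)} - ψ(P). Defining R_2 through ψ(P̂) - ψ(P) = -∫ φ(z; P̂) dP(z) + R_2(P̂, P), and assuming the propensity estimate satisfies π̂ ≥ ε > 0, the remainder is a product of nuisance errors:

```latex
R_2(\hat{P}, P) = \int \frac{\pi(x) - \hat{\pi}(x)}{\hat{\pi}(x)}\,\{\mu_1(x) - \hat{\mu}_1(x)\}\, dP(x),
\qquad
|R_2(\hat{P}, P)| \le \frac{1}{\epsilon}\, \|\hat{\pi} - \pi\|_2 \, \|\hat{\mu}_1 - \mu_1\|_2
```

(the bound is Cauchy-Schwarz). So if both nuisance errors are o_P(n^(-1/4)), the remainder is o_P(n^(-1/2)) and negligible for root-n inference, even though each nuisance converges slower than root-n.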

Even throws in a mention of sample-splitting / cross-fitting! (from Hasminskii & Ibragimov 1979)
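A minimal sketch of K-fold cross-fitting (the modern version of the sample-splitting idea, not the construction in Pfanzagl or Hasminskii & Ibragimov verbatim) for the one-step estimator of E[Y(1)]. Each unit's influence-function value uses nuisances fit only on the other folds, so the same data are never used both to fit and to evaluate them. Assumptions: binary A, k-NN regressions as a stand-in for a flexible learner, propensities clipped to [0.05, 0.95], and a made-up data-generating process whose true E[Y(1)] is 1 + 1/3; all names are hypothetical.

```python
import numpy as np

def knn_predict(X_train, target, X_test, k=50):
    """k-nearest-neighbour regression: mean of `target` over the k closest training points."""
    d2 = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)  # (n_test, n_train)
    nearest = np.argpartition(d2, k, axis=1)[:, :k]  # indices of the k smallest distances
    return target[nearest].mean(axis=1)

def cross_fit_mean_y1(X, a, y, n_folds=5, k=50, seed=0):
    """Cross-fitted one-step estimate of E[Y(1)] and its standard error."""
    rng = np.random.default_rng(seed)
    folds = rng.permutation(len(y)) % n_folds  # balanced random fold labels
    uncentered_phi = np.empty(len(y))  # phi(Z) + psi, evaluated out-of-fold
    for fold in range(n_folds):
        test, train = folds == fold, folds != fold
        treated = train & (a == 1)
        # Nuisances are fit on the other folds only, then evaluated on the held-out fold.
        mu1 = knn_predict(X[treated], y[treated], X[test], k)
        pi = np.clip(knn_predict(X[train], a[train], X[test], k), 0.05, 0.95)
        uncentered_phi[test] = mu1 + a[test] / pi * (y[test] - mu1)
    estimate = uncentered_phi.mean()
    se = uncentered_phi.std() / np.sqrt(len(y))
    return estimate, se

# Hypothetical simulation with nonlinear nuisances; true E[Y(1)] = 1 + 1/3.
rng = np.random.default_rng(2)
n = 2000
X = rng.uniform(-1, 1, size=(n, 2))
a = rng.binomial(1, 1.0 / (1.0 + np.exp(-X[:, 0])))
y1 = 1.0 + np.sin(2 * X[:, 0]) + X[:, 1] ** 2 + rng.normal(scale=0.5, size=n)
y0 = X[:, 0] + rng.normal(scale=0.5, size=n)
y = np.where(a == 1, y1, y0)
est, se = cross_fit_mean_y1(X, a, y)
print(f"cross-fit estimate of E[Y(1)]: {est:.3f} (SE {se:.3f}), truth {4 / 3:.3f}")
```

Swapping k-NN for any other learner only changes the two `knn_predict` calls; the fold loop is what implements the cross-fitting.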

Ok I think I'll stop now :) I'm always amazed at how ahead of its time this work was.

It's too bad it's not as widely known among us causal+ML people

(I also didn't know about it for a while - I think it was @mcaronebstat who introduced me to the wonderful world of Pfanzagl)

Also: none of this is meant to diminish ground-breaking recent work in stats/biostat/econ, which has pushed flexible causal inference & param estimation forward in huge huge ways

I just think these connections are often missed & are super interesting, so wanted to point them out
