Now we can move on to talking about microscopes proper. If you don't work with them for a living, you probably haven't seen one since school, and you likely remember them looking like this:

The strange thing is, that's not hugely different from what microscopes looked like for a long time.

Early microscope designs from the 1600s looked like this: a single ball of glass in a metal holder.

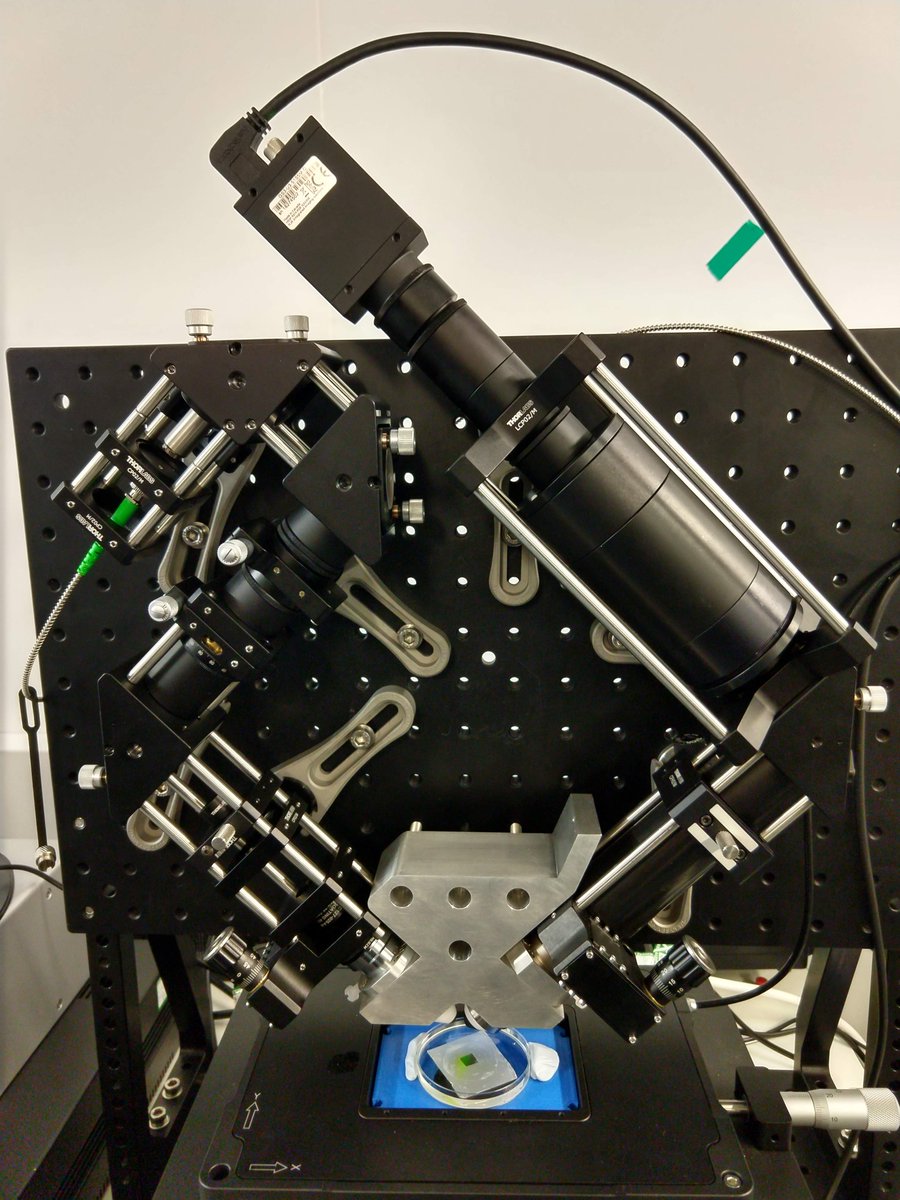

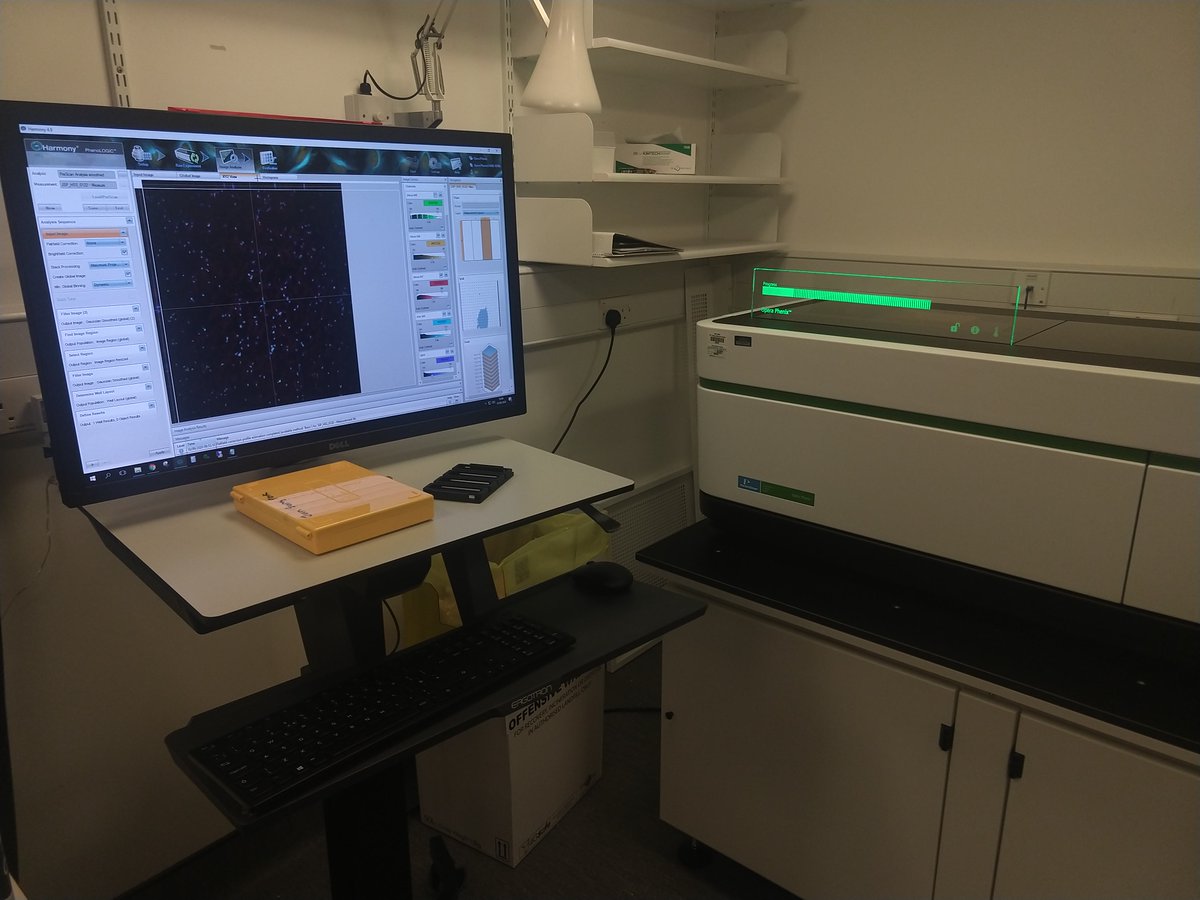

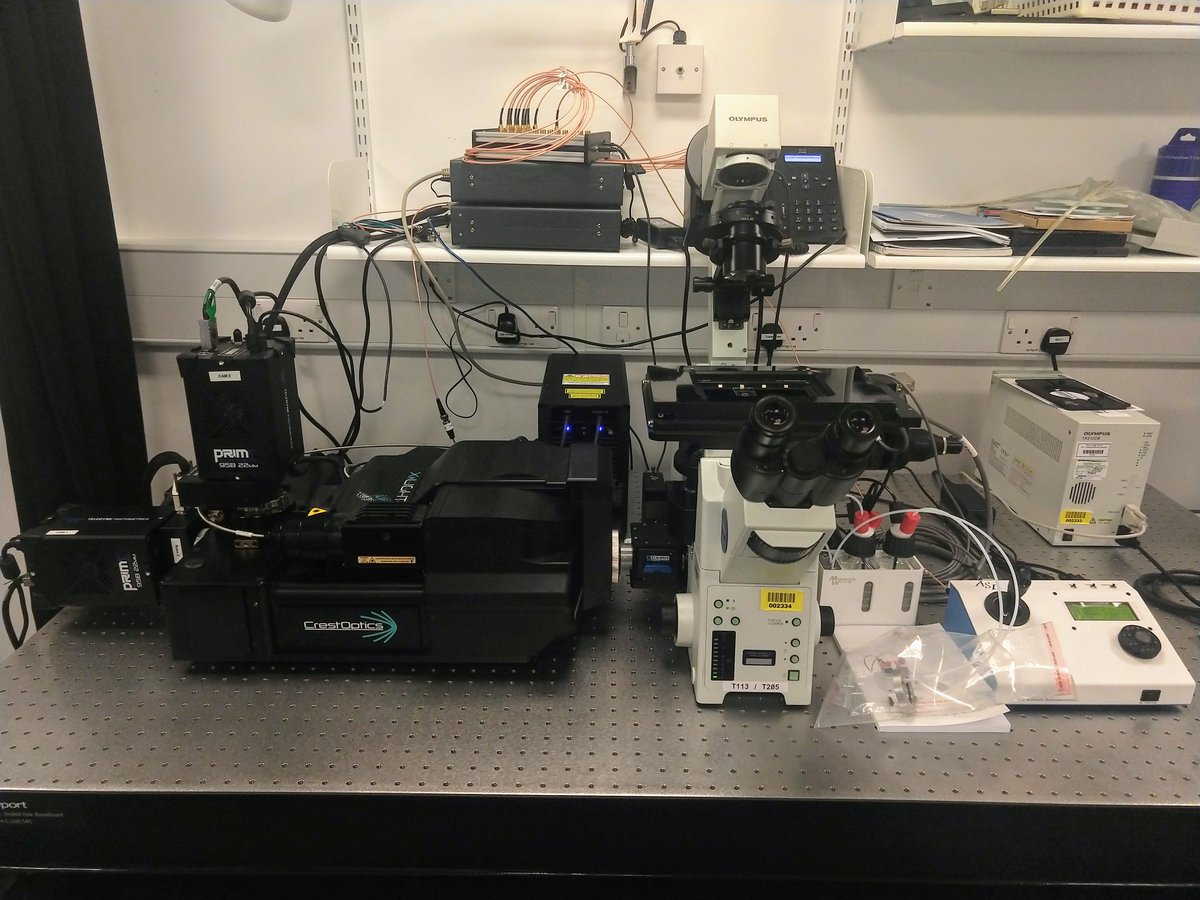

Within a century, though, they looked like this. And that fundamental design didn't change hugely for a long time. Lens-making got better, and people figured out how to use bright lamps as light sources and focus them onto samples, but the basic layout stayed much the same.

If those don't look like what you expect, it's because the last three decades or so have massively changed what microscopes look like and how they are used, and that change is accelerating.

The cause of those changes? Also physics.

(I may be a tad biased)

Basically, optics got a little better, but what has really driven innovation has been three kinda connected things: computing power, lasers, and image sensors.

I say connected because all three exist thanks to quantum physics, which was worked out in the early 20th century and then, by the mid 20th century, applied to understanding how materials behave. Hence solid-state physics, hence lasers and transistors, hence the field that makes me a living.

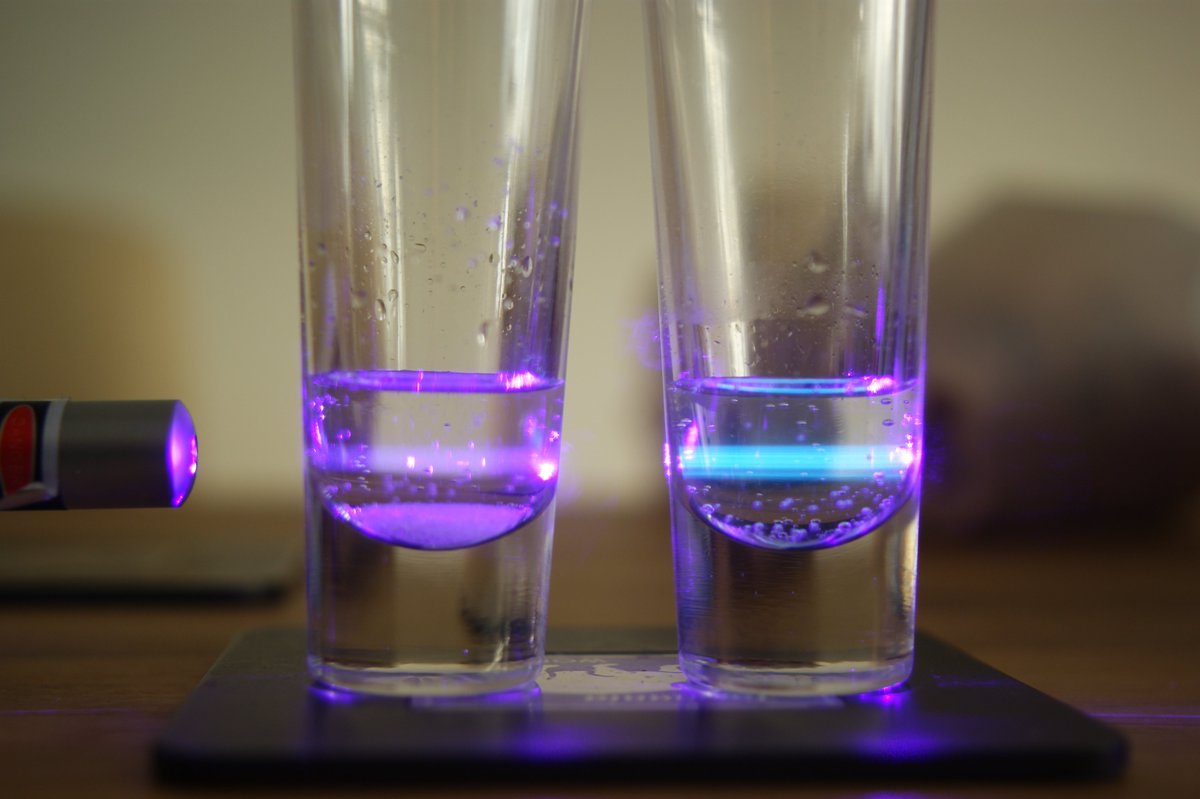

Why lasers? Because most modern microscopy methods use a principle called fluorescence. Basically, you shine light of a specific wavelength/colour on a material, it absorbs that light, and it emits light back at a different colour (usually a longer wavelength).

(purple laser, blue fluorescence on the right)
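Why does the colour change? A photon's energy is set by its wavelength, E = hc/λ, and the fluorescent molecule loses a bit of the absorbed energy before it re-emits, so the outgoing photon carries less energy and has a longer wavelength. Here's a quick sketch of that arithmetic. The 405 nm and 450 nm values are just illustrative picks for "purple" and "blue", not the actual wavelengths in the picture.

```python
# Planck's constant times the speed of light, in eV*nm, so that
# photon energy [eV] = HC_EV_NM / wavelength [nm].
HC_EV_NM = 1239.84

def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon of the given wavelength, in electronvolts."""
    return HC_EV_NM / wavelength_nm

# Illustrative wavelengths (assumptions): a violet laser line and a blue emission.
excitation_nm = 405.0
emission_nm = 450.0

e_in = photon_energy_ev(excitation_nm)
e_out = photon_energy_ev(emission_nm)

print(f"absorbed photon: {e_in:.2f} eV at {excitation_nm:.0f} nm")
print(f"emitted photon:  {e_out:.2f} eV at {emission_nm:.0f} nm")
print(f"energy lost inside the molecule: {e_in - e_out:.2f} eV")
```

The longer the wavelength, the smaller the energy, which is why the emitted light is shifted away from the laser colour and can be separated from it with filters.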

Lasers let us deliver very bright light to a specific point in space, which is great for lighting up samples to be imaged. We use some fancy biochemistry to stick fluorescent molecules to the things we want to see, we hit them with bright light, and we take a picture.

To go one level down, why fluorescence?

Because a cell is basically a sack made of fatty molecules (lipids) in which a whole bunch of chemistry happens. Most of the stuff biologists care about is transparent, so we have to label it to see it.

Next, image sensors, also known as the piece of silicon in your digital camera that actually collects the light and converts it to a digital signal.

Quick bit of history: early microscopists looked through the glass and drew what they saw by hand. Then film came along, and people started mounting film cameras on microscopes, taking pictures of their samples, and developing them.

Then we got point detectors, which were sort of like reverse CRT screens: they could convert a spot of light focused on them into an electrical signal, so you could scan a point of light across a sample and record the light coming back one point at a time.

These existed in parallel with film for a while, because for some kinds of microscopy, point detectors didn't make much practical sense
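To make "one point at a time" concrete, here's a minimal sketch of how a point-scanning setup builds an image: park the spot on a pixel, read one number off the detector, move on. The `detector(x, y)` callback and the fake sample are hypothetical stand-ins for the real optics and electronics.

```python
from typing import Callable, List

Image = List[List[float]]

def raster_scan(detector: Callable[[int, int], float], width: int, height: int) -> Image:
    """Build an image one pixel at a time, as a point-scanning microscope does.

    `detector(x, y)` is a hypothetical stand-in for "park the focused spot at
    (x, y) and read one number off the point detector".
    """
    image: Image = []
    for y in range(height):        # step down one scan line at a time...
        row = []
        for x in range(width):     # ...sweeping the spot along each line
            row.append(detector(x, y))
        image.append(row)
    return image

# Hypothetical sample: one fluorescent spot at (x=2, y=1) on a dim background.
def fake_detector(x: int, y: int) -> float:
    return 100.0 if (x, y) == (2, 1) else 1.0

img = raster_scan(fake_detector, width=4, height=3)
for row in img:
    print(row)
```

The detector gets read width × height times per image, which is exactly why scanning is slow compared with grabbing every pixel at once.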

Then came image sensors, and all of a sudden biological images could go straight from a microscope to a PC without a few hours of messing around with chemicals.

Image sensor technology also kept advancing, and sensors got so sensitive that they could detect single photons of light, so microscopes could "see" things that had previously been invisible.

Nowadays the image sensors that end up in scientific cameras are almost as sensitive as the most sensitive point detectors, and almost as fast, but they capture a whole image at once instead of one point at a time, so they are more useful for most kinds of microscopy.
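A back-of-the-envelope way to see why "all at once" wins: with a single point detector, frame time grows with pixel count, while every camera pixel collects light at the same time. The numbers below are made-up assumptions for illustration, not specs of any real instrument (real scanners and cameras vary a lot).

```python
def point_scan_frame_time_s(width: int, height: int, dwell_s: float) -> float:
    """One point detector visits every pixel in turn, so time scales with pixel count."""
    return width * height * dwell_s

def camera_frame_time_s(exposure_s: float, readout_s: float) -> float:
    """Every camera pixel collects light simultaneously, then the sensor is read out."""
    return exposure_s + readout_s

# Illustrative numbers only (assumptions, not real instrument specs).
WIDTH, HEIGHT = 512, 512
DWELL_S = 2e-6        # time the scanned spot sits on each pixel
EXPOSURE_S = 10e-3    # camera exposure, shared by all pixels at once
READOUT_S = 10e-3     # time to read the whole sensor out

scan_t = point_scan_frame_time_s(WIDTH, HEIGHT, DWELL_S)
cam_t = camera_frame_time_s(EXPOSURE_S, READOUT_S)

print(f"point scan: {scan_t:.3f} s per frame, {DWELL_S * 1e6:.0f} us of light per pixel")
print(f"camera:     {cam_t:.3f} s per frame, {EXPOSURE_S * 1e3:.0f} ms of light per pixel")
```

Even with these rough guesses, the camera gets each pixel thousands of times more light-collecting time per frame, which matters a lot when the signal is only a handful of photons.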

And the thing that ties them all together: faster computers. To see why computers matter so much here, it helps to realise that a digital image is just a picture represented as a grid of numbers, so you can use maths to 'see' things in the image...

Since computers are good at maths, and since cameras can pump out images as fast as computers can process them, you can now build computerized tools that look at pictures and extract interesting data from them.
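Here's what "a picture as numbers" looks like in practice: a tiny made-up grayscale image where each number is one pixel's brightness, plus the simplest possible bit of maths on it, a brightness threshold that flags the pixels likely to be labelled stuff. The image values and the threshold of 50 are arbitrary assumptions for illustration.

```python
# A tiny 5x5 grayscale "image": each number is the brightness of one pixel.
# Values are made up for illustration.
image = [
    [2,  3,  2, 1,  2],
    [3, 90, 95, 2,  1],
    [2, 88, 92, 3,  2],
    [1,  2,  3, 2, 70],
    [2,  1,  2, 3, 75],
]

def threshold(img, level):
    """Return a mask of the same shape: True where a pixel is brighter than `level`."""
    return [[px > level for px in row] for row in img]

mask = threshold(image, level=50)
bright = sum(px for row in mask for px in row)  # True counts as 1
mean = sum(sum(row) for row in image) / (len(image) * len(image[0]))

print(f"bright pixels: {bright}, mean brightness: {mean:.2f}")
```

Real images are the same idea, just with millions of pixels and far less obvious contrast.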

So basically the kind of maths that can be used to recognise faces in photos can also be used to do things like count cells, measure their sizes and shapes, track their movements etc. etc.
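As a sketch of "counting cells and measuring their sizes", the classic trick is to group touching bright pixels into objects (connected-component labelling) and count the pixels in each. This minimal flood-fill version uses a made-up image and an arbitrary threshold; in practice you'd more likely reach for a library such as scikit-image (`skimage.measure.label` and `regionprops`) and add proper background handling.

```python
from collections import deque

def object_sizes(img, level):
    """Group bright pixels that touch (up/down/left/right) into objects.

    Returns the size in pixels of each object, in the order they are found.
    """
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for y in range(h):
        for x in range(w):
            if img[y][x] > level and not seen[y][x]:
                # New object: flood-fill outward from this pixel.
                size = 0
                queue = deque([(y, x)])
                seen[y][x] = True
                while queue:
                    cy, cx = queue.popleft()
                    size += 1
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and img[ny][nx] > level:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                sizes.append(size)
    return sizes

# Made-up image with two bright "cells": a 2x2 blob and a 1x2 blob.
image = [
    [2,  3,  2, 1,  2],
    [3, 90, 95, 2,  1],
    [2, 88, 92, 3,  2],
    [1,  2,  3, 2, 70],
    [2,  1,  2, 3, 75],
]

sizes = object_sizes(image, level=50)
print(f"cells found: {len(sizes)}, sizes in pixels: {sizes}")
```

Run that on every frame of a time-lapse and you can start tracking how counts and sizes change over time.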

You tie all of this together and all of a sudden microscopes become big data tools that can take incredibly large numbers of pictures of impossibly small amounts of light inside cells and turn that into numbers that tell us what is going on
