On the hazards of using proxy variables in causal inference: a short tweetorial #EpiTwitter #CausalTwitter

Unconfoundedness of the treatment-outcome relationship is a key assumption for identifying a causal effect by covariate adjustment. But in most real-world observational studies, ignorability of the treatment assignment doesn't truly hold, because we can't accurately measure all possible confounders.

Say we want to measure socioeconomic status (SES) because it potentially confounds the effect of red wine intake on the risk of cardiovascular disease (CVD). But what is SES anyway? It's an abstract concept that can never be measured directly. The same goes for intelligence, or health.

But we may have access to proxies for these constructs, e.g. ZIP code, years of education and income for SES; IQ test score for intelligence; and BMI and blood tests for health status.

Proxies are everywhere! It can even be argued that all variables are proxies for the latent constructs we would have wanted to measure.

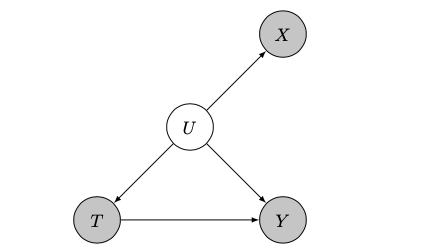

How should we use these variables in a causal analysis? Should we control for them, or not? Technically they're not confounders, because they don't lie on a backdoor path between treatment and outcome.

But they are still associated with the unmeasured confounder, so adjusting for them may indirectly remove some of the confounding bias.

The common practice is to treat them just like true confounders. @_MiguelHernan recommends that it's typically preferable to adjust for these variables rather than not adjust for them https://www.hsph.harvard.edu/miguel-hernan/causal-inference-book/

What are the implications? Confounding bias *will not be eliminated* by adjusting for proxies, no matter how many proxies we gather and irrespective of sample size! To demonstrate this residual confounding, consider the DAG above with X = U + noise, for increasing noise intensity.

The true ATE is 1 in every setting. When U is measured accurately (noise = 0), the ATE is estimated consistently. With measurement error, however, the ATE estimate is biased.
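A minimal sketch of this kind of simulation in Python with NumPy. The linear-Gaussian data-generating process, the coefficients (a = b = 1, unit variances), the option to add several proxies, and the function name `simulate_ate_estimate` are all assumptions for illustration, not the thread's actual code. It estimates the ATE by ordinary least squares of Y on T plus one or more noisy proxies X_j = U + noise.

```python
import numpy as np


def simulate_ate_estimate(noise_sd, n_proxies=1, n=200_000, tau=1.0, a=1.0, b=1.0, seed=0):
    """Return the OLS coefficient on T from regressing Y on (1, T, X_1..X_k).

    Hypothetical linear-Gaussian DGP (an assumption, not the thread's exact setup):
        U   ~ N(0, 1)                   unmeasured confounder
        T   = a*U + N(0, 1)             treatment
        Y   = tau*T + b*U + N(0, 1)     outcome; the true ATE is tau
        X_j = U + N(0, noise_sd**2)     k independent noisy proxies of U
    """
    rng = np.random.default_rng(seed)
    u = rng.normal(size=n)
    t = a * u + rng.normal(size=n)
    y = tau * t + b * u + rng.normal(size=n)
    # Each proxy column is U plus its own independent measurement error.
    proxies = u[:, None] + noise_sd * rng.normal(size=(n, n_proxies))
    # Design matrix: intercept, treatment, then all proxy columns.
    design = np.column_stack([np.ones(n), t, proxies])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]  # proxy-adjusted ATE estimate (coefficient on T)


if __name__ == "__main__":
    # Increasing measurement error in a single proxy.
    for sd in [0.0, 0.5, 1.0, 2.0]:
        print(f"noise_sd={sd}: estimate={simulate_ate_estimate(sd):.3f}")
    # Fixed measurement error, more and more proxies.
    for k in [1, 4, 16]:
        est = simulate_ate_estimate(1.0, n_proxies=k)
        print(f"noise_sd=1.0, {k} proxies: estimate={est:.3f}")
```

Under these assumed parameters the estimate should be close to 1 only when `noise_sd=0`, and should drift toward the unadjusted value of 1.5 as the noise grows. Adding independent proxies shrinks the gap without closing it, and increasing `n` only tightens the estimate around the biased value. Passing `b=-1.0` makes the bias negative instead.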

The mechanism behind this corruption is the infamous attenuation bias, elegantly illustrated here (@page_eco @MaartenvSmeden) https://twitter.com/page_eco/status/1061934897755877377
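For reference, the textbook single-regressor case can be written out. Assume Y = βU + ε, but we only observe X = U + e, where the measurement error e is independent of U and ε, and Var(U) = σ_U² > 0, Var(e) = σ_e². The OLS slope of Y on X then converges to

$$
\operatorname{plim}\hat\beta = \frac{\operatorname{Cov}(Y, X)}{\operatorname{Var}(X)} = \beta\,\frac{\sigma_U^2}{\sigma_U^2 + \sigma_e^2}
$$

The factor σ_U²/(σ_U² + σ_e²), often called the reliability ratio, lies in (0, 1], so the estimated slope is shrunk toward zero whenever σ_e² > 0.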

Unlike simple attenuation bias, which always shrinks the regression coefficient toward zero, the bias in the ATE estimate can go in either direction, depending on the data-generating process.
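A sketch of why the direction can flip, under an assumed linear model with a single unmeasured confounder (the coefficients a, b and the variance symbols are illustrative, not from the thread): T = aU + ε_T, Y = τT + bU + ε_Y, X = U + e, with U, ε_T, ε_Y, e mutually independent and Var(U) = σ_U², Var(ε_T) = σ_T², Var(e) = σ_e². Regressing Y on T and the proxy X gives

$$
\operatorname{plim}\hat\tau - \tau = b\,\frac{a\,\sigma_U^2\,\sigma_e^2}{a^2\sigma_U^2\sigma_e^2 + \sigma_T^2\left(\sigma_U^2 + \sigma_e^2\right)}
$$

The bias vanishes when σ_e² = 0, approaches the unadjusted confounding bias abσ_U²/(a²σ_U² + σ_T²) as σ_e² → ∞, and has the sign of ab, so the estimate can land above or below τ. In this toy model the adjusted bias is never larger in magnitude than the unadjusted one, but that guarantee does not automatically carry over to other data-generating processes.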

Sounds pretty bad. Is it prevalent in real-world studies? Presumably, but it is hard to evaluate. Some examples include https://link.springer.com/article/10.2307/2648118

https://bpspsychub.onlinelibrary.wiley.com/doi/full/10.1111/bmsp.12146?casa_token=d0jDi0c8ROAAAAAA%3AyM6kJWHzFZSX8guP7ayeWKnWwqDz5Mv5SAiTw0bZrKfcnMIWD-R0XE-piFFzlXouuzCA7r0watzzrg and also recent theoretical work by @lindanab1, @MaartenvSmeden, @RuthHKeogh https://journals.lww.com/epidem/Fulltext/2020/11000/Quantitative_Bias_Analysis_for_a_Misclassified.7.aspx

What can be done to mitigate this bias? Some references are collected in this thread https://twitter.com/VC31415/status/1351231707677122562 Other approaches include matrix factorization (@madeleineudell @XiaojieMao @nathankallus)

https://papers.nips.cc/paper/2018/file/86a1793f65aeef4aeef4b479fc9b2bca-Paper.pdf, and deep learning (DL), as in http://papers.neurips.cc/paper/7223-causal-effect-inference-with-deep-latent-variable-models.pdf (@wellingmax @ShalitUri @david_sontag)

That's it for now, thanks for tuning in. My familiarity with this topic is purely theoretical. If you do applied work and have thought about this issue, it would be great if you could share your experience!

Oh and thanks @EpiEllie for the tweetorial inspiration!
