Quick RISC OS question.

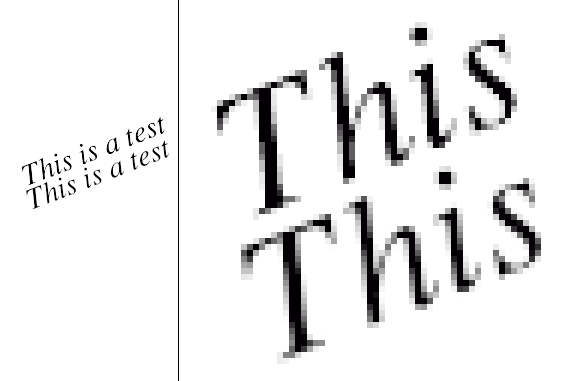

Just comparing the venerable FontManager’s rendering (top) to a certain vector graphics program I won’t mention (bottom).

This was because I came across an oddity in the code and had to count greys to make sure I wasn’t mad.

FontManager uses 4bpp AA, we all know that: fifteen shades plus transparent (when blending; background otherwise). Except I keep getting 14. Often 13. The code looks odd, and the output doesn't lie.
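
Counting greys by eye gets old fast. Below is a minimal sketch of doing it programmatically, assuming you already have a glyph's raw 4bpp bytes (extracting them is left out) and that level 0 means transparent/background. `unpack_4bpp` and `count_shades` are hypothetical helpers, not FontManager calls. Nibble order doesn't matter here, since we only count distinct values.

```python
def unpack_4bpp(data: bytes) -> list[int]:
    """Split each byte into two 4-bit pixel values (low nibble first)."""
    pixels = []
    for b in data:
        pixels.append(b & 0x0F)  # one pixel in the low nibble
        pixels.append(b >> 4)    # the other in the high nibble
    return pixels


def count_shades(pixels: list[int], transparent: int = 0) -> int:
    """Count distinct non-transparent levels actually present in the output."""
    return len({p for p in pixels if p != transparent})


# Synthetic buffer containing every 4bpp level exactly once (0x10, 0x32, ... 0xFE).
full_ramp = bytes((2 * i) | ((2 * i + 1) << 4) for i in range(8))
print(count_shades(unpack_4bpp(full_ramp)))
```

Run it over a real rendered glyph instead of `full_ramp`; anything under 15 means some levels never make it to the screen.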

But then I considered that it only uses 4bpp to save memory. There was a time when 4bpp AA was *lots* and memory was tight. But these days, why not use 8bpp? It looks a lot better (and yes, OK, the lower renderer is using over a quarter of a million levels internally).
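
One rough way to see why 8bpp looks better: with a round-to-nearest mapping from pixel coverage to level (an assumption; how FontManager actually scales coverage isn't shown here), the worst-case error is half a step. That is 1/(2·15) = 1/30 of full intensity at 4bpp versus 1/(2·255) = 1/510 at 8bpp. A small sketch that checks this numerically; `quantise` and `max_error` are hypothetical names for illustration:

```python
def quantise(coverage: float, bits: int) -> int:
    """Map coverage in [0, 1] to the nearest of 2**bits levels (0 = transparent)."""
    top = (1 << bits) - 1  # 15 for 4bpp, 255 for 8bpp
    return round(coverage * top)


def max_error(bits: int, samples: int = 10_000) -> float:
    """Largest |stored - true| coverage over an evenly sampled 0..1 ramp."""
    top = (1 << bits) - 1
    worst = 0.0
    for i in range(samples + 1):
        c = i / samples
        worst = max(worst, abs(quantise(c, bits) / top - c))
    return worst


for bits in (4, 8):
    print(f"{bits}bpp: {(1 << bits) - 1} shades, worst error ~{max_error(bits):.4f}")
```

The 8bpp error is roughly 17 times smaller, which is the difference you see on glyph edges.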

“But if FontManager used 8bpp wouldn’t it double the amount of Font Cache used?”

I’m not exactly *appalled* by that thought.
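
Back of the envelope, the answer is "roughly yes, for the pixel data". A sketch, assuming each glyph is stored as a plain bitmap with rows padded to whole bytes (a hypothetical layout; the real Font Cache also holds headers and metrics that wouldn't grow, and may pad differently). `glyph_bytes` is an illustrative helper, not a RISC OS API.

```python
import math


def glyph_bytes(width: int, height: int, bpp: int) -> int:
    """Bytes for one glyph bitmap, assuming each row is padded to a whole byte."""
    row_bytes = math.ceil(width * bpp / 8)
    return row_bytes * height


for w, h in [(16, 20), (15, 20)]:
    b4 = glyph_bytes(w, h, 4)
    b8 = glyph_bytes(w, h, 8)
    print(f"{w}x{h}: 4bpp={b4} B, 8bpp={b8} B, ratio={b8 / b4:.2f}")
```

Under this layout, odd widths waste half a byte per row at 4bpp, so the ratio can come in slightly under 2, but it never exceeds it.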

PS: in the before-and-after screenshots, FontManager is using my much sharper SuperSample antialiasing; standard RISC OS output would be an incomparably blurry mess.