My DMs are exploding with requests for language model bigness takes and I'm happy to oblige:

The scaling laws paper (Kaplan et al https://arxiv.org/abs/2001.08361 ) shows that, *for a particular neural network architecture* (transformers), increasing the number of parameters improves language model performance, and so does increasing the training corpus size.
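
Kaplan et al. fit these trends as power laws: L(N) = (N_c/N)^α_N in the number of non-embedding parameters N, and L(D) = (D_c/D)^α_D in the number of training tokens D. Here is a minimal Python sketch of the two single-variable fits. It uses the approximate constants reported in the paper (α_N ≈ 0.076, N_c ≈ 8.8e13, α_D ≈ 0.095, D_c ≈ 5.4e13), treats loss as test cross-entropy in nats per token, and is meant as an illustration, not a reimplementation of their fitting procedure:

```python
# Approximate fitted constants reported by Kaplan et al. (2020); illustrative only.
ALPHA_N = 0.076   # exponent for non-embedding parameter count
N_C = 8.8e13      # critical parameter scale
ALPHA_D = 0.095   # exponent for dataset size
D_C = 5.4e13      # critical dataset scale, in tokens


def loss_from_params(n_params: float) -> float:
    """Test loss (nats/token), assuming data is not the bottleneck."""
    return (N_C / n_params) ** ALPHA_N


def loss_from_data(n_tokens: float) -> float:
    """Test loss (nats/token) for a large model trained with early stopping."""
    return (D_C / n_tokens) ** ALPHA_D


# Loss keeps falling as N grows, but each 100x buys a smaller absolute drop.
for n in (1e6, 1e8, 1e10):
    print(f"N={n:.0e}: L={loss_from_params(n):.2f}")
```

Note that these curves are fits for one architecture family (transformers); nothing in the formula says the constants carry over to other architectures.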

It seems plausible that similar scaling laws would apply to other architectures, as long as the architecture is kept constant. But they don't apply across architectures: some architectures are more efficient in number of parameters than others.
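
One way to picture this: each architecture gets its own power law, and a more parameter-efficient architecture has a smaller critical scale, so it hits the same loss with fewer parameters. The sketch below uses two *hypothetical* architectures, "A" and "B". Their constants are made up for illustration and are not measurements of any real model:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerLaw:
    """Hypothetical per-architecture fit L(N) = (n_c / N) ** alpha."""
    name: str
    n_c: float
    alpha: float

    def loss(self, n_params: float) -> float:
        return (self.n_c / n_params) ** self.alpha

    def params_for_loss(self, target_loss: float) -> float:
        # Invert L = (n_c / N) ** alpha  =>  N = n_c * L ** (-1 / alpha)
        return self.n_c * target_loss ** (-1.0 / self.alpha)


# Made-up constants: B is assumed to be more parameter-efficient than A.
arch_a = PowerLaw("A", n_c=8.8e13, alpha=0.076)
arch_b = PowerLaw("B", n_c=1.0e12, alpha=0.076)

target = 2.5
for arch in (arch_a, arch_b):
    print(f"{arch.name}: {arch.params_for_loss(target):.2e} params for loss {target}")
```

Both architectures "scale" in the same sense, but a parameter count only means something relative to a particular curve.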

Here's an example from Jozefowicz et al 2016: https://arxiv.org/abs/1602.02410

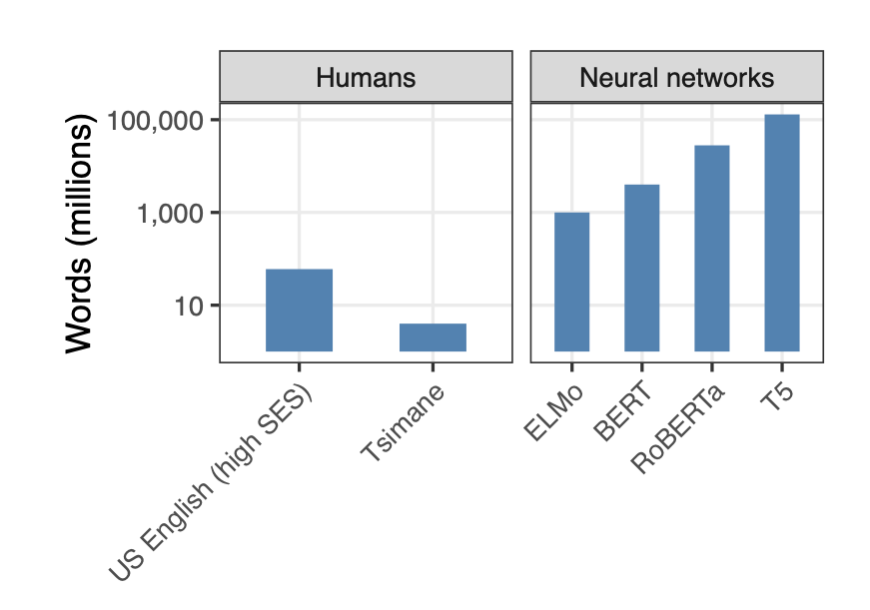

The same holds for training data. Transformers might need a 100-billion-word corpus to reach GPT-3-level fluency, but other architectures can get by with much less data. Humans are one example.

Architectures that rely on an enormous amount of training data scraped from the Internet are clearly problematic, because it becomes infeasible to get a handle on what's included in the training data: https://twitter.com/emilymbender/status/1353447336433782784

I'm not sure model size per se (as in number of parameters) is directly relevant to the bias debate, though. There are two arguments I can think of:

1. Larger models are, all else being equal, more data-hungry, so model size and dataset size are related. This is reasonable, but again the focus should be on developing sample-efficient models (i.e. ensuring that all else is not equal).

2. Larger models are harder to understand than smaller ones (again, all else being equal), so biases are more likely to go undetected. Maybe, though I think this overstates our ability to understand "small" neural networks.
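
Argument 1 can be made concrete with the combined fit from Kaplan et al., L(N, D) = [(N_c/N)^(α_N/α_D) + D_c/D]^α_D. Below is a minimal Python sketch, again using the paper's approximate constants and meant only as an illustration. For a fixed, modest corpus, it measures how far each model falls short of the loss it would reach with unlimited data:

```python
# Approximate fitted constants reported by Kaplan et al. (2020); illustrative only.
ALPHA_N, N_C = 0.076, 8.8e13
ALPHA_D, D_C = 0.095, 5.4e13


def loss(n_params: float, n_tokens: float) -> float:
    """Combined fit L(N, D) in nats/token."""
    param_term = (N_C / n_params) ** (ALPHA_N / ALPHA_D)
    data_term = D_C / n_tokens
    return (param_term + data_term) ** ALPHA_D


def data_limited_gap(n_params: float, n_tokens: float) -> float:
    """How far L(N, D) sits above the data-unlimited loss L(N) = (N_C / N) ** ALPHA_N."""
    return loss(n_params, n_tokens) - (N_C / n_params) ** ALPHA_N


small_corpus = 1e9  # tokens, held fixed
for n in (1e7, 1e9, 1e11):
    print(f"N={n:.0e}, D={small_corpus:.0e}: gap={data_limited_gap(n, small_corpus):.3f}")
```

The gap grows with N: on a fixed corpus the bigger model leaves more of its potential unused, which is the sense in which it is "more data-hungry." A more sample-efficient architecture would correspond to a different (smaller) D_c, not just a different N.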

(An unrelated argument against having a lot of parameters is the environmental impact one, but after a few discussions here I've come to the conclusion that this isn't a serious concern at this point.)

(A bonus tweet on a very extreme dissociation between language model size and perplexity: small neural networks are much better than large n-gram models https://twitter.com/kchonyc/status/1335953953012510721)

Anyway, you know my spiel: we need models that can be trained on a reasonably sized corpus and generalize in the way we want them to, but I'm not sure it's that important for them to be small (have a small number of parameters). https://arxiv.org/abs/2005.00955
