This is a beginner-friendly introduction to:

Linear Algebra for Machine Learning.

Linear Algebra is one of the math topics that machine learning courses list as a prerequisite.

What is 'Linear Algebra' and how is it used in machine learning?

This thread will answer just that.

Prerequisites to follow along: None.

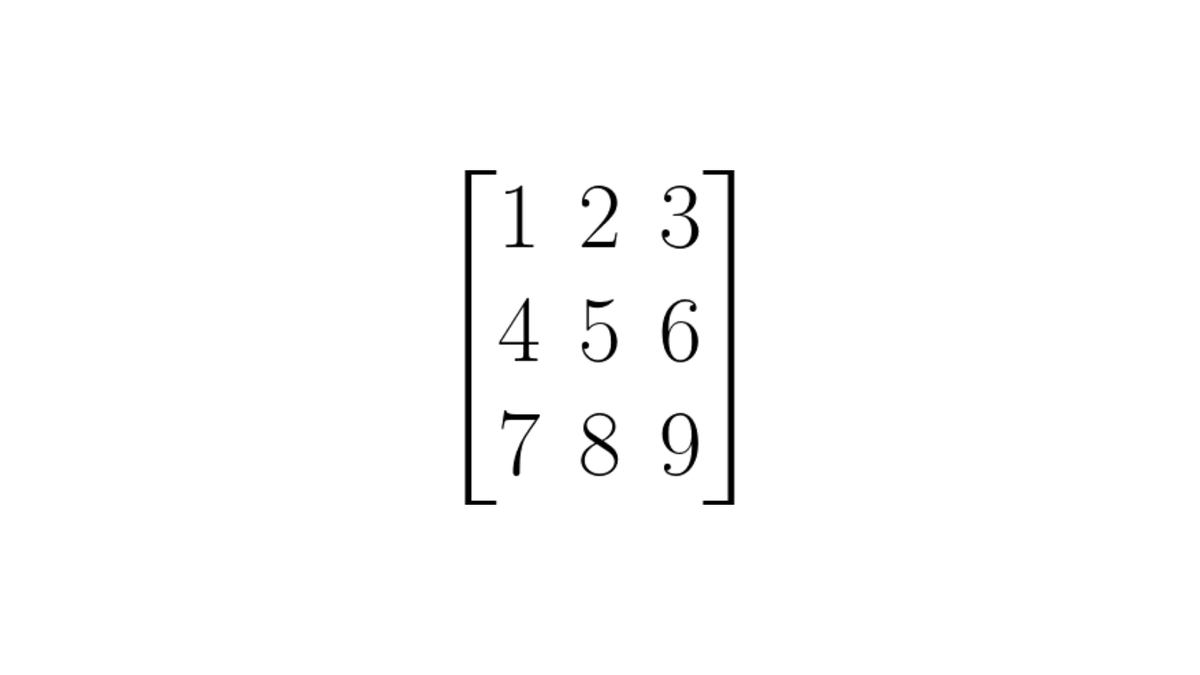

The Matrix

This is the heart of Linear Algebra.

A matrix is a set of numbers arranged in a table of rows and columns, enclosed between two brackets.

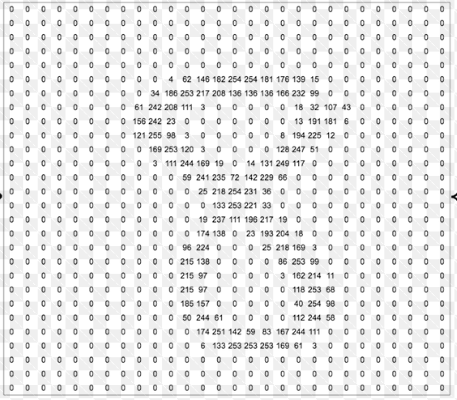

What could these numbers in the matrix be?

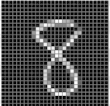

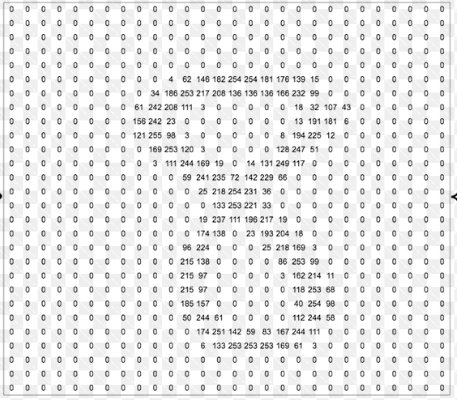

- Pixels in an image

On the left is an image of the digit '8', and on the right is a matrix holding the numerical value of each pixel (black = 0, white = 255).
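Here is the same idea in Python, as a minimal sketch: a tiny grayscale patch stored as a list of lists. The pixel values are invented for illustration (they are not the values from the '8' image), but they follow the same rule: 0 is black, 255 is white.

```python
# A hypothetical 3x3 grayscale patch: each inner list is one row of pixels.
# 0 = black, 255 = white (values invented for illustration).
patch = [
    [0, 255, 0],
    [255, 255, 255],
    [0, 255, 0],
]

# Read the pixel in row 0, column 1 (Python counts from 0).
top_middle = patch[0][1]
print(top_middle)  # 255

# Count the white pixels by scanning every row.
white_count = sum(row.count(255) for row in patch)
print(white_count)  # 5
```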

The picture below shows the mathematical notation for writing matrices.

'i' denotes the number of rows (entries counted down the vertical axis) and 'j' denotes the number of columns (entries counted along the horizontal axis) in the matrix.
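With a list of lists, both numbers can be read off directly: the count of inner lists gives 'i' (rows) and the length of one inner list gives 'j' (columns). This sketch assumes every row has the same length; the helper name `shape` is our own, chosen for illustration.

```python
def shape(matrix):
    """Return (rows, columns) of a list-of-lists matrix.

    Assumes the matrix is non-empty and every row has the same length.
    """
    rows = len(matrix)        # entries down the vertical axis (i)
    columns = len(matrix[0])  # entries along the horizontal axis (j)
    return rows, columns


A = [
    [1, 2, 3],
    [4, 5, 6],
]
print(shape(A))  # (2, 3)
print(A[1][2])   # 6
```

Math notation usually counts rows and columns from 1, while Python counts from 0, so the entry in row 2, column 3 is `A[1][2]` here.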

We can 'transform' these matrices in several ways. 'Transpose' is one of them.

Let's look at it

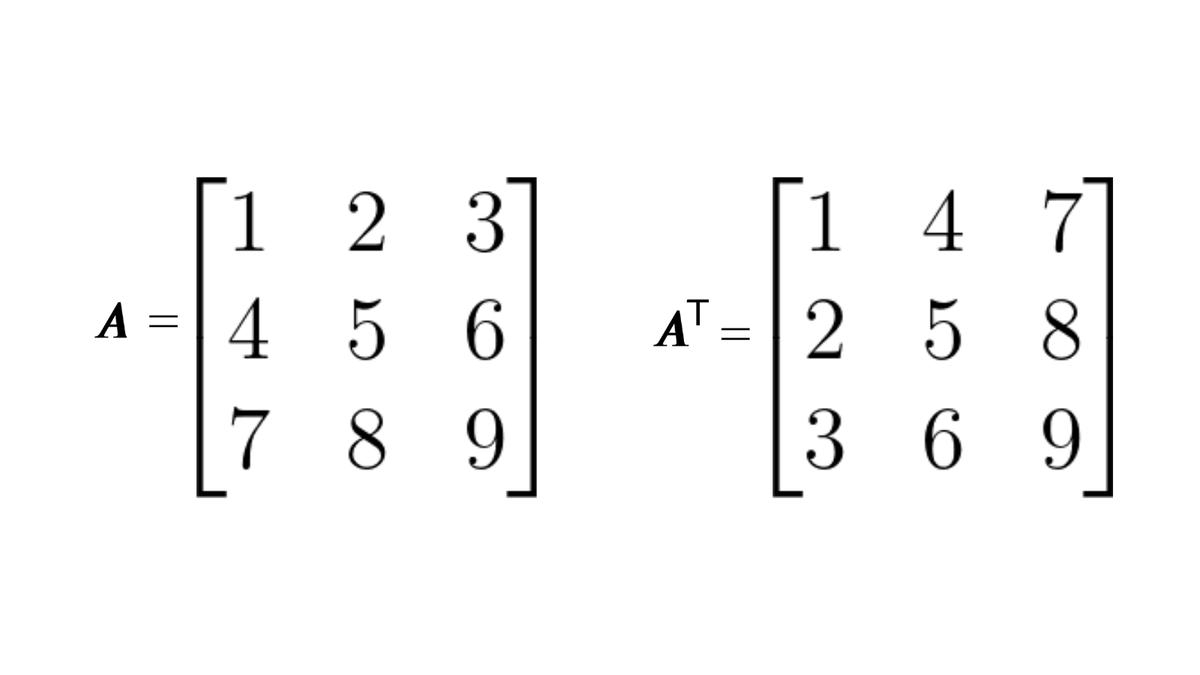

Transpose of a Matrix

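Now imagine a line running from the top-left corner of the matrix to the bottom-right corner, and swap the values that sit opposite each other across that line: the value in row i, column j trades places with the value in row j, column i.

The matrix you get after swapping the values is the transpose of the original matrix. For a non-square matrix, the rows simply become the columns, so an i × j matrix turns into a j × i matrix.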

Think of a transpose as flipping the matrix along the red line.

In mathematical notation, a transpose is written as a small superscript 'T' next to the letter originally assigned to the matrix, so the transpose of A is Aᵀ.
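A minimal Python sketch of the transpose, assuming the matrix is a list of lists with equal-length rows: `zip(*matrix)` groups the first entries of every row, then the second entries, and so on, which turns each column into a row.

```python
def transpose(matrix):
    """Flip a list-of-lists matrix over its main diagonal."""
    # zip(*matrix) yields the columns of the matrix as tuples;
    # converting each tuple to a list makes every column a row.
    return [list(column) for column in zip(*matrix)]


A = [
    [1, 2, 3],
    [4, 5, 6],
]
print(transpose(A))  # [[1, 4], [2, 5], [3, 6]]
```

A is 2 × 3, so its transpose is 3 × 2. Transposing twice gives back the original matrix.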

There is a special type of matrix called the 'identity' matrix.

They look like this (basically, the diagonal from the top-left to the bottom-right corner consists of 1s and every other value is 0). An identity matrix is always square.

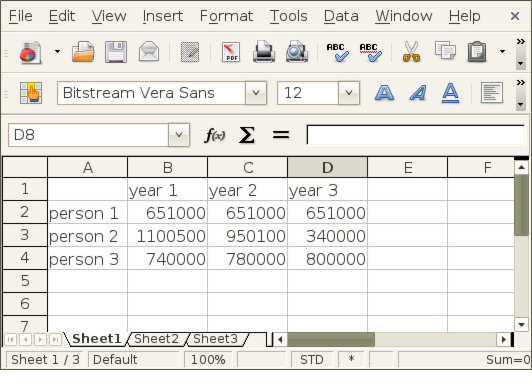

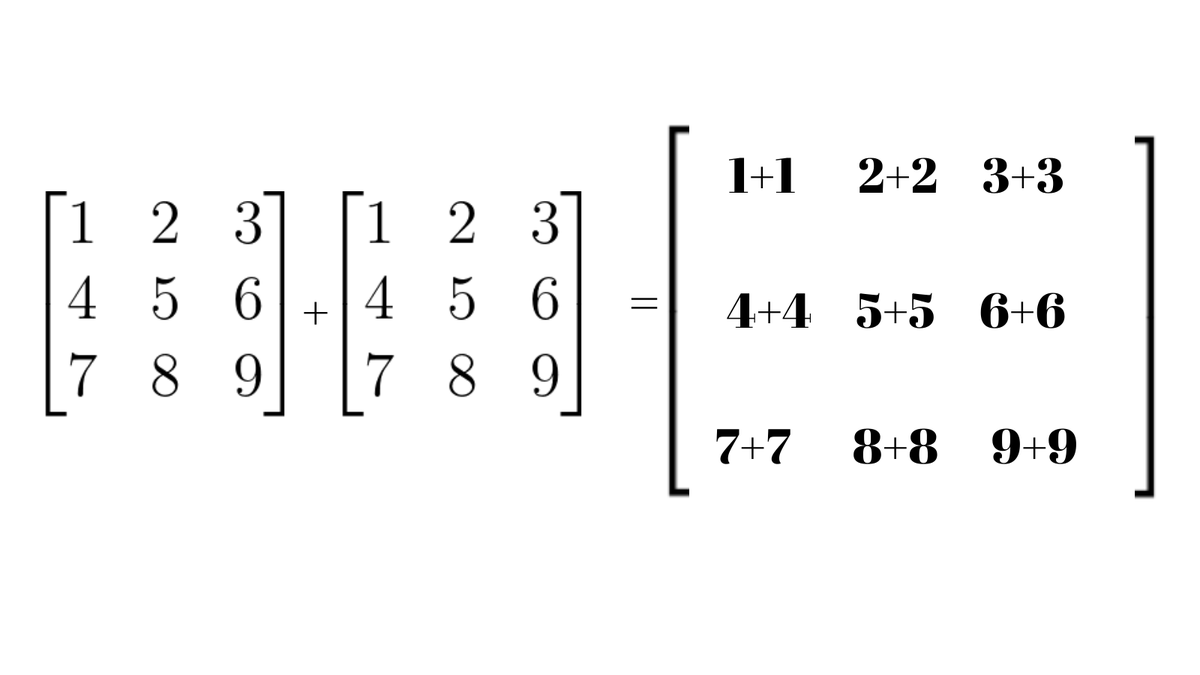
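The pattern is easy to generate: put a 1 wherever the row number equals the column number and a 0 everywhere else. A short Python sketch (the function name `identity` is our own):

```python
def identity(n):
    """Build an n x n identity matrix as a list of lists."""
    # 1 where the row index equals the column index (the main diagonal), else 0.
    return [[1 if row == col else 0 for col in range(n)] for row in range(n)]


print(identity(3))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

An identity matrix is also its own transpose, since its only non-zero values sit on the line it gets flipped along.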

We can also add, subtract or multiply matrices.

Adding and subtracting matrices is as simple as adding or subtracting their corresponding values. This only works when both matrices have the same number of rows and columns.
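A minimal sketch of both operations on lists of lists. The shape check is our own addition, to make the "same number of rows and columns" rule explicit; the function names are illustrative.

```python
def _check_same_shape(a, b):
    """Raise an error unless a and b have the same number of rows and columns."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same number of rows and columns")


def add(a, b):
    """Add two same-shaped matrices by adding corresponding values."""
    _check_same_shape(a, b)
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def subtract(a, b):
    """Subtract b from a by subtracting corresponding values."""
    _check_same_shape(a, b)
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


A = [[1, 2], [3, 4]]
B = [[10, 20], [30, 40]]
print(add(A, B))       # [[11, 22], [33, 44]]
print(subtract(B, A))  # [[9, 18], [27, 36]]
```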

On the other hand, multiplying is a bit tricky.

It looks somewhat like this

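It probably doesn't make sense at first glance. The key idea: each value in the result comes from taking a row of the first matrix and a column of the second, multiplying their entries pair by pair, and adding up the products. That's why the number of columns in the first matrix must equal the number of rows in the second. For a step-by-step walkthrough, look at this site: http://mathsisfun.com/algebra/matrix-multiplying.html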
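Here is the row-times-column rule written out as a plain Python sketch (the name `matmul` is our own; real projects would normally use a library instead of nested loops):

```python
def matmul(a, b):
    """Multiply matrix a (m x n) by matrix b (n x p), giving an m x p matrix."""
    n = len(b)
    if any(len(row) != n for row in a):
        raise ValueError("columns of a must equal rows of b")
    p = len(b[0])
    result = []
    for row in a:
        # Entry (i, j) is the sum of products of row i of a with column j of b.
        result.append([sum(row[k] * b[k][j] for k in range(n)) for j in range(p)])
    return result


A = [[1, 2, 3],
     [4, 5, 6]]
B = [[7, 8],
     [9, 10],
     [11, 12]]
print(matmul(A, B))  # [[58, 64], [139, 154]]
```

For example, the top-left 58 is 1·7 + 2·9 + 3·11. Unlike addition, order matters: B times A is a different product (here it is even 3 × 3), and multiplying a matrix by an identity matrix of matching size leaves it unchanged.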

Of course, this isn't everything: there are *many more* concepts in linear algebra used for machine learning, but this thread covers a lot of the basics you'll need.

If you liked this thread then share it with others so that they can benefit from it.

I am planning to make a similar thread for calculus and I'd love to hear your thoughts in the replies.

Keep Learning

"But wait, you didn't explain how any of this helps in machine learning!"

I left a couple of hints in the thread for you to think about.

Here's the answer:

Let's say we want to make a machine learning model that can recognize numbers in a given image.

We can't just give this image to a computer and ask "Hey, what number is in this image?"

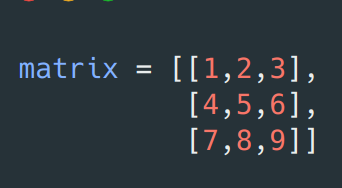

We need to convert that image into something a computer can understand, such as a LoL (List of Lists), which is one way to represent a matrix in Python.

Often we need to transform this matrix into the form our machine learning model expects.

It could be a transpose, an addition, or a multiplication, but I won't get into the specifics of that here.
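As one illustration (our example, not something the thread prescribes), a common transformation is multiplying every pixel by the scalar 1/255 so that the values fall between 0 and 1 instead of 0 and 255. A minimal sketch with invented pixel values:

```python
def scale(matrix, factor):
    """Multiply every entry of a list-of-lists matrix by a scalar."""
    return [[value * factor for value in row] for row in matrix]


# Hypothetical pixel values, 0 (black) to 255 (white).
pixels = [
    [0, 51, 255],
    [102, 204, 0],
]
normalized = scale(pixels, 1 / 255)
print(normalized)  # every value now lies between 0 and 1
```

Floating-point rounding can leave tiny errors (a value may print as 0.19999999999999998 rather than 0.2), which is normal and harmless here.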

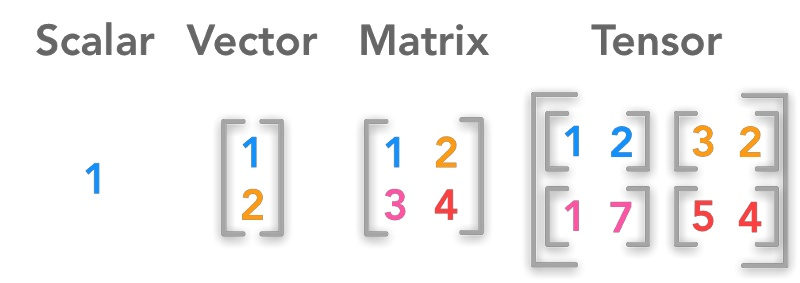

Another thing you should know is the difference between a scalar, a vector, and a matrix.

- Scalar: Just a number

- Vector: A single row or column of numbers between brackets

- Matrix: Numbers in a tabular form between brackets, with multiple rows and columns
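In the list-of-lists style mentioned earlier in this thread, the three can be written as below. Storing a column vector as a list of one-element rows is a convention chosen for this sketch; libraries differ in how they store vectors.

```python
scalar = 7              # just a number
row_vector = [1, 2, 3]  # one row of numbers
column_vector = [[1],   # one column: each row holds a single number
                 [2],
                 [3]]
matrix = [[1, 2, 3],    # multiple rows and columns
          [4, 5, 6]]

print(len(row_vector))                            # 3
print(len(column_vector), len(column_vector[0]))  # 3 1
print(len(matrix), len(matrix[0]))                # 2 3
```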

Another addition:

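Tensors: These generalize scalars, vectors, and matrices to any number of dimensions. For example, a 3D tensor can be pictured as several matrices stacked on top of each other.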

Image Source: http://kdnuggets.com/2018/05/wtf-tensor.html

Thanks to @badamczewski01 for the suggestion.
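Nesting one more level of lists gives a 3D tensor. As an assumed example, a tiny 2 × 2 color image can be stored as three matrices, one each for the red, green, and blue channels (values invented for illustration):

```python
# Hypothetical 2x2 RGB image: 3 channels, each a 2x2 matrix of pixel values.
image = [
    [[255, 0], [0, 255]],    # red channel
    [[0, 255], [0, 255]],    # green channel
    [[0, 0], [255, 255]],    # blue channel
]

channels = len(image)        # 3
rows = len(image[0])         # 2
columns = len(image[0][0])   # 2
print((channels, rows, columns))  # (3, 2, 2)

# The pixel at row 1, column 0, read from every channel (red, green, blue).
print([channel[1][0] for channel in image])  # [0, 0, 255]
```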
