Does higher performance on ImageNet translate to higher performance on medical imaging tasks?

Surprisingly, the answer is no!

We investigate their relationship.

Paper: https://arxiv.org/abs/2101.06871

@_alexke, William Ellsworth, Oishi Banerjee, @AndrewYNg @StanfordAILab

1/8

Transfer learning using pretrained ImageNet models has been the standard approach for developing models in medical imaging.

We’ve been assuming that better ImageNet architectures perform better and that pretrained weights boost performance on the medical task!

2/8

We compare the transfer performance and parameter efficiency of 16 popular convolutional architectures on a large chest X-ray dataset (CheXpert) to investigate these assumptions.

Our 4 top findings:

3/8

Finding 1: Architecture improvements on ImageNet do not lead to improvements on chest X-ray interpretation

We find no relationship between ImageNet performance and CheXpert performance, whether the models are trained without pretraining (Spearman 𝜌 = 0.08) or with pretraining (𝜌 = 0.06).

4/8
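
For readers unfamiliar with 𝜌: a rank correlation like this can be computed in a few lines. The sketch below is a minimal, standard-library illustration and not the paper's code. The function names and the accuracy/AUC numbers are made up for the example; only the idea (rank both lists, then correlate the ranks) comes from the definition of Spearman's 𝜌.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to cover the whole run of values tied with position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Plain Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho is the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical values for five architectures (NOT results from the paper).
imagenet_top1 = [69.8, 76.1, 77.4, 74.4, 79.0]
chexpert_auc = [0.861, 0.858, 0.862, 0.860, 0.859]
print(f"rho = {spearman_rho(imagenet_top1, chexpert_auc):.2f}")
```

A value near 0, as reported above, means that knowing a model's ImageNet rank tells you almost nothing about its CheXpert rank.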

Finding 2: Surprisingly, model size matters less than model family when models aren’t pretrained

Within an architecture family, the largest and smallest models differ only slightly in performance, while the differences between model families are larger.

5/8

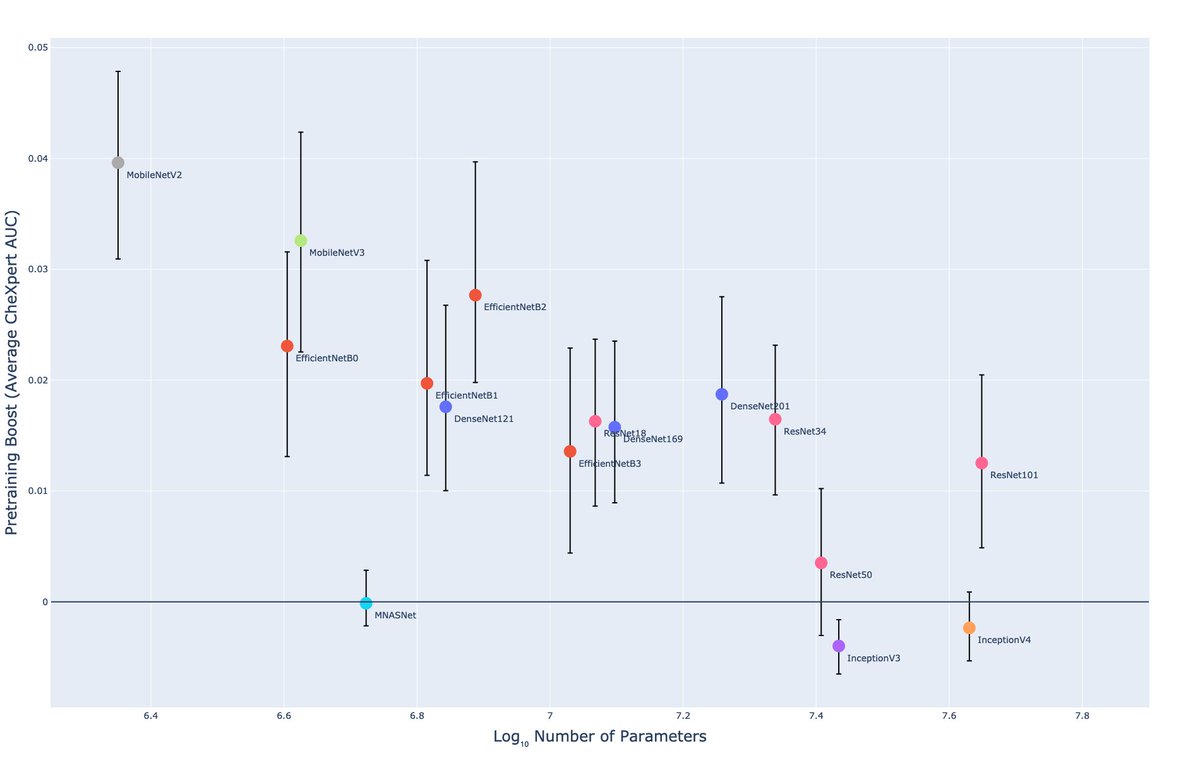

Finding 3: ImageNet pretraining helps, especially for smaller models

We observe that ImageNet pretraining yields a statistically significant boost in performance across architectures, with a higher boost for smaller architectures (𝜌 = −0.72 with number of parameters).

6/8

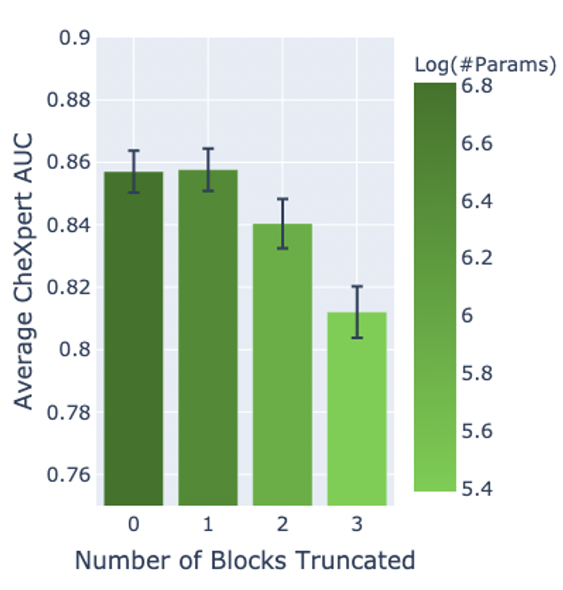

Finding 4: Many layers can be discarded to reduce the size of a model without a performance drop

We find that by truncating the final blocks of pretrained models, we can make models 3.25x more parameter-efficient on average without a statistically significant drop in performance.

7/8
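
To make "more parameter-efficient" concrete, here is a rough bookkeeping sketch of what truncation does to parameter count. It is an illustration under stated assumptions, not the paper's implementation: the `Block` type, the block sizes, and the choice of a global-average-pool plus linear head on the truncated model are all hypothetical, and the toy numbers are not meant to reproduce the 3.25x figure.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    params: int        # number of trainable parameters in this block
    out_channels: int  # channels of the feature map this block emits


def head_params(in_channels, num_labels):
    # Assumed head: global average pooling (no weights) followed by one
    # linear layer, i.e. a weight matrix plus one bias per label.
    return in_channels * num_labels + num_labels


def total_params(blocks, num_labels):
    """Backbone parameters plus a head sized for the last kept block."""
    backbone = sum(b.params for b in blocks)
    return backbone + head_params(blocks[-1].out_channels, num_labels)


def truncate(blocks, n_drop):
    """Drop the final n_drop blocks, always keeping at least one."""
    if not 0 <= n_drop < len(blocks):
        raise ValueError("n_drop must leave at least one block")
    return blocks[: len(blocks) - n_drop]


def efficiency_gain(blocks, n_drop, num_labels):
    """Ratio of full-model parameters to truncated-model parameters."""
    full = total_params(blocks, num_labels)
    small = total_params(truncate(blocks, n_drop), num_labels)
    return full / small


# Hypothetical backbone; sizes are invented for illustration only.
toy_backbone = [
    Block("stem", 10_000, 64),
    Block("stage1", 200_000, 256),
    Block("stage2", 1_200_000, 512),
    Block("stage3", 7_000_000, 1024),
    Block("stage4", 15_000_000, 2048),
]
print(f"{efficiency_gain(toy_backbone, n_drop=1, num_labels=5):.2f}x fewer parameters")
```

Because the last stages of typical convolutional backbones hold most of the weights, dropping even one final block can shrink the model substantially; whether accuracy survives is the empirical question the finding above answers.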

Read more in our paper here: https://arxiv.org/abs/2101.06871

Thanks to first authors Alexander Ke (@_alexke), William Ellsworth, and Oishi Banerjee for being such a wonderful, creative, and hardworking team!

8/8
