Really impressed with CLIP, @OpenAI's recent model for image classification. I think it could change a LOT about how we train models.

A little summary:

CLIP is a new* image model that is trained on both language and images. It's very generalizable and can handle new datasets and even new tasks using the same model.

(* Similar approaches existed before, but this is the first time one works really well.)

Let's look at results first:

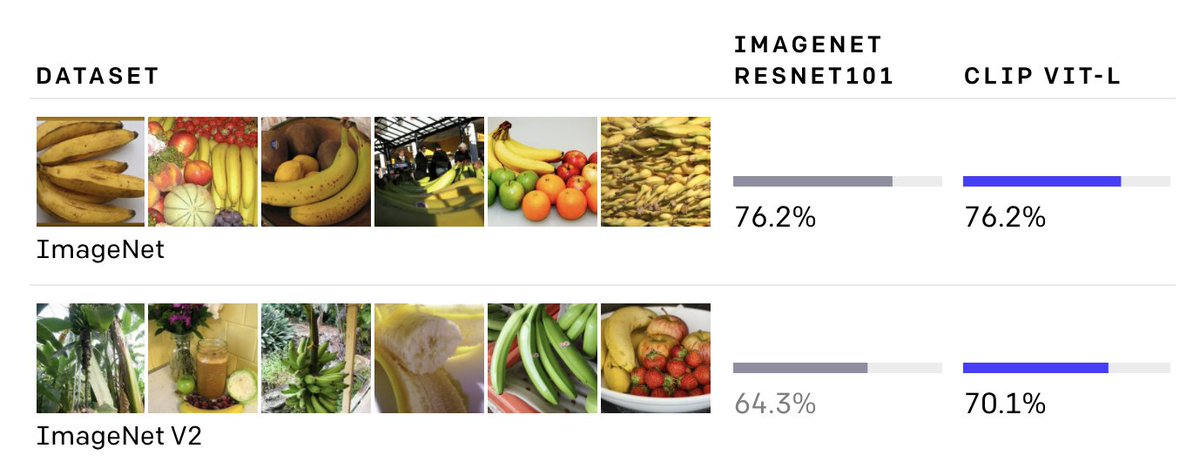

1. It achieves 76.2 % on ImageNet, which is comparable to the original ResNet-50 but not impressive on its own (SotA is 90.2 %). But: CLIP hasn't seen *a single example* from the ImageNet train set. It's completely zero-shot.

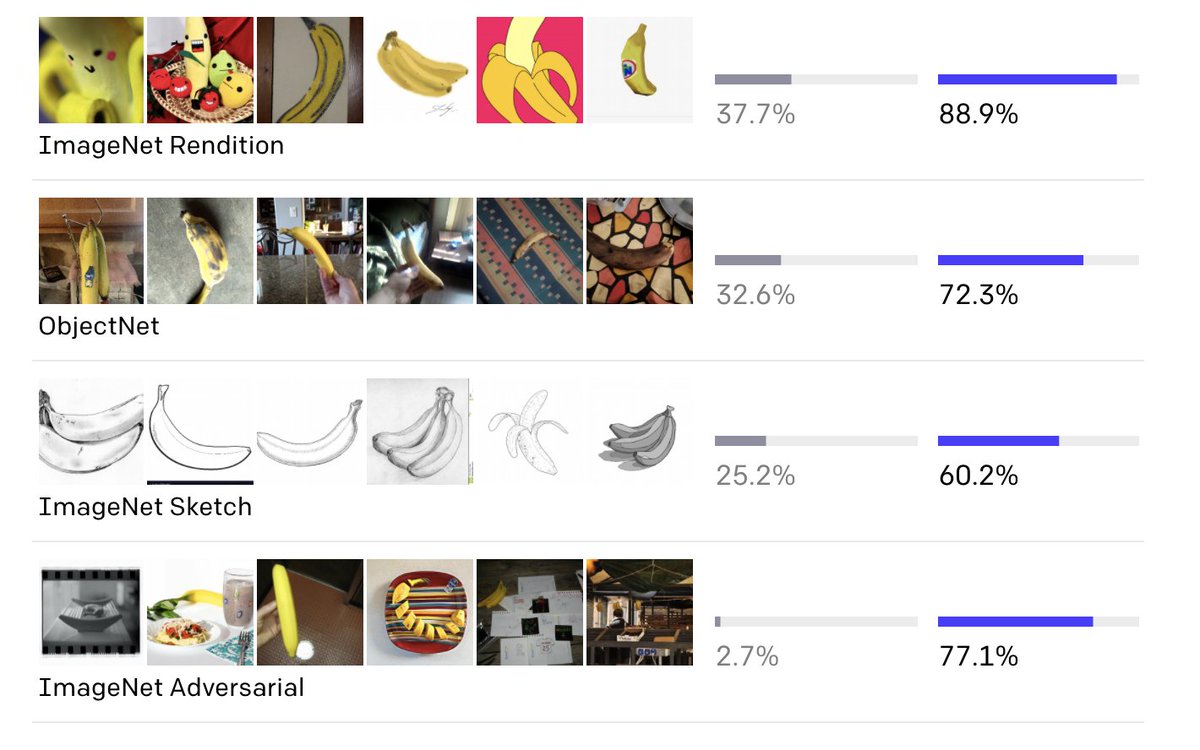

2. Because it wasn't trained on ImageNet, it generalizes *incredibly* well to other datasets. That's something traditional image networks have been struggling with for years (compare the ResNet performance shown in gray in the figure!).

Think about it: This basically means you *don't need a labeled dataset* anymore. You can just throw your images into CLIP without any training and there's a good chance it will classify them correctly.

So how do they train?

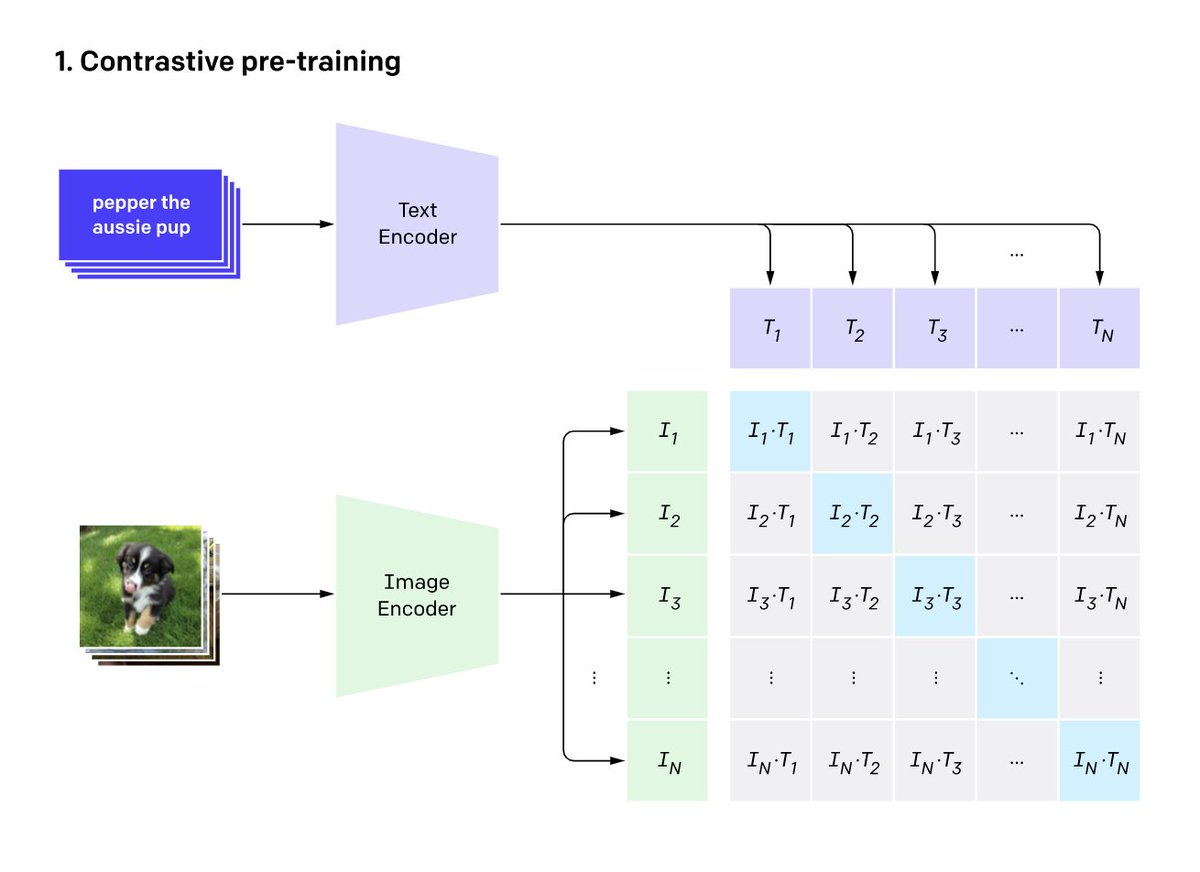

1. They scrape a ton of images + image descriptions from the internet (=endless data).

2. They embed the image description with a transformer-style text encoder and the image itself with a Vision Transformer (the paper also trains ResNet-based image encoders).

3. They take an image + its correct description, use the descriptions of the other images in the batch (roughly 32k) as false descriptions, and train the complete network (both encoders) to predict which description is correct.

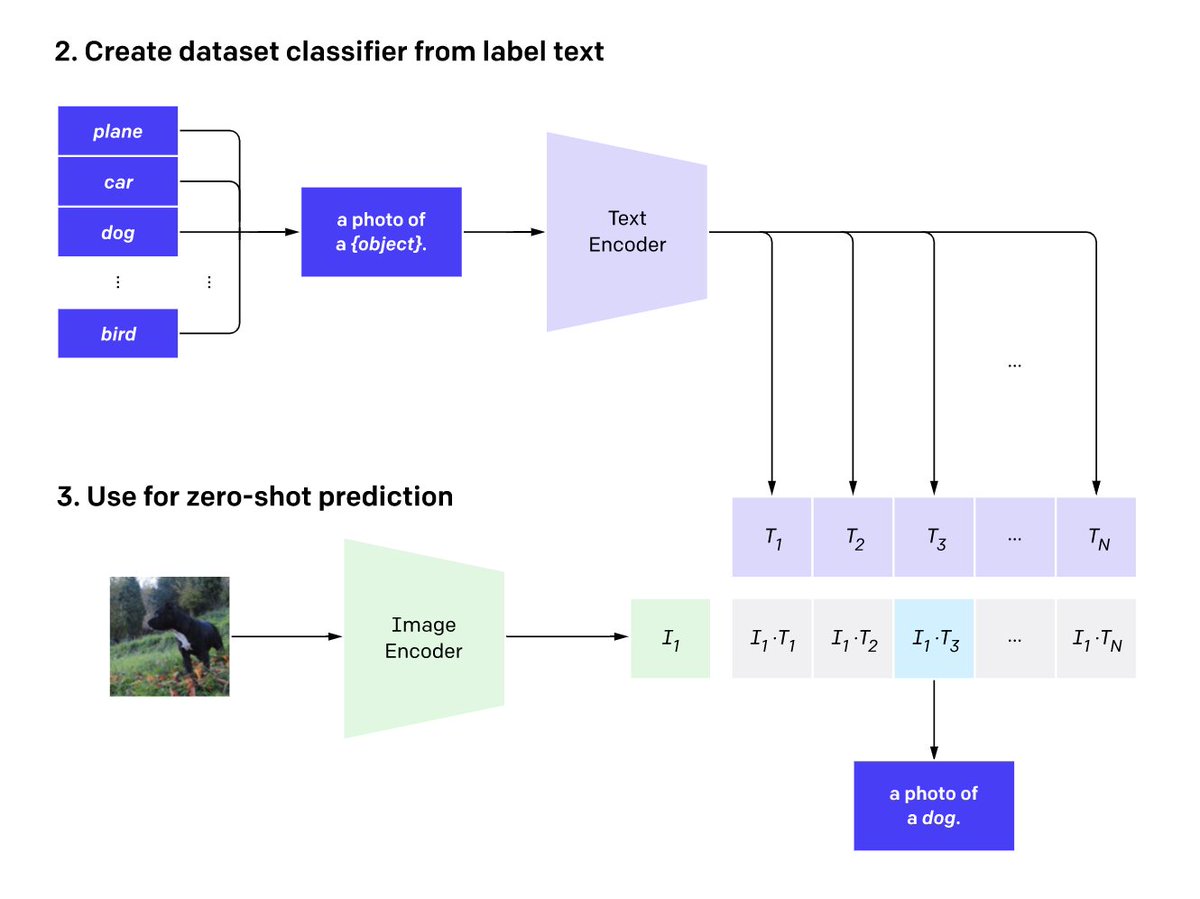

4. To do inference, they generate labels like "a photo of X", where X is a label from ImageNet, and predict which label has the highest score (i.e. best describes the image).
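To make the training objective in step 3 more concrete, here is a minimal pure-Python sketch of a contrastive loss over a batch of image and description embeddings. It is not OpenAI's code: the function names, the toy 2-D embeddings, and the fixed `temperature=0.07` are assumptions for illustration (in the paper the temperature is a learned parameter), and averaging the image-to-text and text-to-image directions follows my reading of the paper rather than the thread.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """Negative log-probability of the target index under a softmax over logits."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Image i should match description i; every other description in the
    batch acts as a false description (and vice versa for descriptions)."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # sim[i][j]: scaled cosine similarity between image i and description j
    sim = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
           for img in images]
    # Pick the right description for each image ...
    loss_i2t = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # ... and the right image for each description.
    loss_t2i = sum(cross_entropy([sim[i][j] for i in range(n)], j)
                   for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Toy batch of two pairs (hypothetical embeddings, not real encoder outputs).
matched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(matched < swapped)
```

The loss is small when each image embedding sits close to its own description and far from the others, which is exactly what the encoders are pushed towards during training.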

Step 4 means you can do lots of other tasks *with the same model*, just by changing the query string. E.g. they applied CLIP to tasks "such as fine-grained object classification, geo-localization, action recognition in videos, and OCR".
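Here is a rough sketch of what "changing the query string" means in practice. It assumes you already have an image embedding and some function that embeds text; `embed_text`, the toy 3-D vectors, and `scale=100.0` are hypothetical stand-ins for the real encoders and logit scale, not part of CLIP's interface.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_emb, labels, embed_text,
                       template="a photo of a {}", scale=100.0):
    """Build one prompt per label, score each against the image, return the best."""
    prompts = [template.format(label) for label in labels]
    scores = [scale * cosine(image_emb, embed_text(p)) for p in prompts]
    probs = softmax(scores)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], dict(zip(prompts, probs))

# Toy stand-in for the text encoder: a lookup table of made-up embeddings.
toy_text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_emb = [0.8, 0.2, 0.1]  # made-up embedding of a "dog-like" image

label, probs = zero_shot_classify(
    image_emb, ["dog", "cat", "car"], toy_text_embeddings.__getitem__
)
print(label)
```

Switching to a different task only means passing different `labels` or a different `template`; the model and the scoring code stay the same.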

So, what does this mean? My opinion: ML is shifting away from "train your own model" towards "use a pre-trained model". We've seen this development in NLP and are now seeing it in computer vision.

This might not be so significant for researchers (because they have standard datasets) but it's a *huge* shift for applied ML, where datasets don't exist or are very difficult/costly to create.

This could also have implications for ML tools: I could imagine we will see a shift away from training frameworks towards libraries for model access & fine-tuning (similar to @huggingface Transformers in NLP).

Best thing last: The models are available and OpenAI built a good, lightweight Python interface around them (plus, they are small enough to run on CPU). Try it out here: https://github.com/openai/CLIP

More details in the blog post and paper here: https://openai.com/blog/clip/
