It’s Monday and I am bored so for all you $VLDR $LAZR & $CFAC junkies here’s a technical thread on how LIDAR works and why $CFAC's Aeye tech *could* be revolutionary.

Know what you own and even then be paranoid – but LFG! (1/x)

LIDAR is pretty old tech – it functions very similarly to SONAR except with photons.

Light rays are emitted from a sensor, bounce off objects in the environment and are reflected back to the sensor again. (2/x)
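
To make the "bounce and come back" idea concrete, here is a minimal sketch of the time-of-flight maths a LIDAR uses to turn a return into a distance. The function name is made up for illustration; it is not any vendor's API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target in metres, given the pulse's round-trip time."""
    # The pulse travels out to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


# A return arriving 1 microsecond after emission is roughly 150 m away.
print(range_from_round_trip(1e-6))
```

The takeaway: the timing has to be extremely precise, since 1 nanosecond of error is about 15 cm of range.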

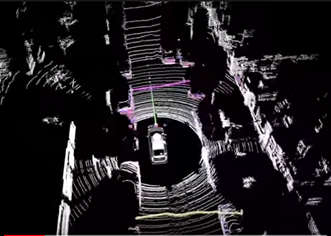

When you have hundreds of sensors emitting thousands of these laser pulses every second, you can construct a 3D image of the world around you.
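
Here is a rough sketch of how one return becomes one 3D point: each pulse gives a range plus the two angles the beam was fired at, and basic trigonometry converts that to x/y/z. The axis convention (x forward, y left, z up) and the function name are my assumptions, not a standard from any particular sensor.

```python
import math


def to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one LIDAR return (range + beam angles) into a 3D point.

    Assumed convention: x forward, y left, z up; azimuth is measured in the
    horizontal plane from the x axis, elevation upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # length of the beam's ground projection
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A "point cloud" is just this applied to every return in a sweep.
sweep = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (25.0, 45.0, 10.0)]
cloud = [to_xyz(r, az, el) for r, az, el in sweep]
print(cloud)
```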

This is the tech $VLDR & $LAZR offer with differentiation coming from their quality - cost tradeoff in sensors. ($VLDR = Quality, $LAZR = Cost)

In a fully autonomous vehicle, LIDAR would be one of many components (alongside cameras, RADAR & HD maps) that help a vehicle make decisions and provide redundancy/failsafes for edge cases.

The industry is very young & companies are pursuing different strategies to achieve full autonomy.

Before I delve into $CFAC & Aeye – I want to address a popular misconception - $TSLA & its approach to autonomy. Elon Musk called LIDAR a ‘crutch’ because it can’t distinguish between a moving child and a paper bag. (5/x)

He claims roads are built with human vision in mind and hence cameras are the only sensor ADAS needs; Waymo & every single other vehicle manufacturer disagree, but the issue reveals where $CFAC fits in. (6/x)

Problems with LIDAR:

1. It collects every data point and treats it equally requiring immense computing power. A lot of this data is unnecessary – you don’t need to know about birds flying 100m above to drive. Hence there is a lot of wastage. (7/x)

2. Object classification; recognising when a constellation of data points is a cow or your mum is impossible without a huge data set for the machine to learn from. LIDAR data sets are growing but are still limited.

For ML to be effective you need enormous data sets (8/x)
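
To illustrate problem 1, here is a crude sketch of throwing away points that can't matter for driving before any heavy processing happens. The thresholds and the function name are hypothetical, and a fixed geometric box like this is far simpler than whatever a production stack does.

```python
import math


def keep_relevant(points, max_height_m=5.0, max_range_m=200.0):
    """Keep only points low enough and close enough to matter for driving.

    points: iterable of (x, y, z) in metres, with z measured up from the road.
    Both thresholds are illustrative assumptions, not industry values.
    """
    kept = []
    for x, y, z in points:
        ground_range = math.hypot(x, y)  # horizontal distance from the sensor
        if z <= max_height_m and ground_range <= max_range_m:
            kept.append((x, y, z))
    return kept


cloud = [
    (20.0, 0.0, 1.0),    # car ahead: keep
    (10.0, 5.0, 100.0),  # bird 100 m up: drop
    (500.0, 0.0, 1.0),   # far beyond useful range: drop
]
print(keep_relevant(cloud))
```

Note the catch: a dumb filter like this only works on geometry. Deciding that something nearby is safe to ignore is a classification problem, which is exactly problem 2.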

The camera-based approach that Elon proposes would be a more accurate object classifier, as a huge data set of images already exists (Google Images!) & the camera could focus on the objects it deems most relevant: cars/pedestrians & not birds flying high. (9/x)

But there’s a problem: cameras struggle with glare, low light & shadows; LIDAR doesn’t. Thus you need a combination of both, LIDAR & camera, to be optimal.

That’s where Aeye & $CFAC come in. (10/x)

They combine LIDAR & camera data to create a new data type, 'voxels', that combines the best of both worlds: working regardless of light conditions and being able to classify objects on the road. This combination also helps them cut unnecessary data and thus reduce decision latency.
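
For intuition only, here is a generic sketch of what "combining LIDAR & camera data" can mean: project a LIDAR point into the camera image with a simple pinhole model and attach the colour found at that pixel. This is NOT Aeye's actual format or pipeline; the function name, the camera-frame convention and the parameters are all my assumptions.

```python
def fuse_point_with_pixel(point, image, focal_px, cx, cy):
    """Attach a camera colour to one LIDAR point, or return None if it is not visible.

    point: (x, y, z) in metres in an assumed camera frame
           (x right, y down, z forward along the optical axis).
    image: list of rows, each row a list of (r, g, b) tuples.
    focal_px, cx, cy: assumed pinhole focal length and principal point, in pixels.
    """
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, nothing to fuse with
    # Pinhole projection: scale by focal length over depth, then shift to the image centre.
    u = int(round(focal_px * x / z + cx))  # column
    v = int(round(focal_px * y / z + cy))  # row
    if not (0 <= v < len(image) and 0 <= u < len(image[0])):
        return None  # projects outside the image
    return (x, y, z, image[v][u])


# Tiny 3x3 test image: grey everywhere, red in the centre pixel.
grey, red = (128, 128, 128), (255, 0, 0)
image = [[grey, grey, grey], [grey, red, grey], [grey, grey, grey]]
print(fuse_point_with_pixel((0.0, 0.0, 5.0), image, focal_px=1.0, cx=1, cy=1))
```

The fused record carries both geometry (from LIDAR) and appearance (from the camera), which is the general idea behind the "best of both worlds" claim.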

But 2 problems are clear from this. First, if it’s a new data type, do they have a sufficiently large database for the ‘AI’ algo to learn from? You need millions upon millions of entries & whether they have that source is a key risk. (12/x)

Secondly, focussing on only the ‘relevant’ objects implies you have near-perfect classification, so you can ignore the birds & not the baby in the pram across the road.

It also reduces the redundancy that a separate camera + LIDAR approach has, i.e. it’s more dangerous. (13/x)

When the Investor presentation is released these are 2 key technical areas to focus on.

In a technical arms race, without the technology, the financial projections are irrelevant. $CFAC

THREAD FINISHED.

@stocktalkweekly some $CFAC DD
