4 days ago, one of the most ground-breaking papers in #healthcareAI was published in @NatureMedicine

It shows for the first time, the potential for #AI to actually EXPLAIN PAIN IN BLACK PEOPLE that doctors couldn’t explain. A thread.... https://www.nature.com/articles/s41591-020-01192-7

Medicine as a field has been around for centuries. And it is largely built upon the knowledge and research passed down through a history of doctors and researchers.

What if that knowledge, passed down, left out an understanding of your community? My community? In medicine this is sadly the case for many ethnic and social minorities.

This ground-breaking paper by @2plus2make5, @oziadias, @m_sendhil, @jure and @Cutler_econ has proven this lack of understanding TRUE for patients with osteoarthritis (OA), and that AI could be our way of fixing these biases at scale.

Let me help explain…

The Kellgren and Lawrence grading (KLG) system, published in 1952, is used by radiologists across the world to diagnose the severity of osteoarthritis (OA). In 1961 it was accepted by the @WHO as the radiological definition of OA for the purpose of epidemiological studies.

The study by Kellgren and Lawrence of coal miners in Manchester in the 1950s never reported gender or race because, why report something when everyone is the same?

OA, the most common type of arthritis in the world, causes joints to become painful and stiff. The exact cause is not known. There are, though, a number of risk factors, e.g. joint injury, age, family history, obesity and being a woman. https://www.nhs.uk/conditions/osteoarthritis/

I can safely suggest the majority of people reading this will know someone who has OA. A mum, a dad, aunty, uncle, grandad or grandma.

The severity of the condition varies from person to person. To help treat OA, the KLG system is used by radiologists as an ‘objective’ way of measuring severity using X-ray scans and determining which treatment patients should receive, e.g. a knee replacement or not.

This is important because an inappropriate diagnosis could mean patients are more likely to be offered non-specific therapy like opioids. And we know how horrific inappropriate opioid prescriptions can be for people living with untreated pain. https://www.nytimes.com/spotlight/opioid-epidemic

It turns out, from the amazing work in this paper by @2plus2make5 and her team, that the KLG may not be as objective as we think...

This study showed that when we take two knees with the same KLG score, black, lower-income and less-educated patients STILL had more pain. Yet, importantly, because their knees were graded the same as the other knee, they are denied treatment despite being in more pain.

One explanation in the literature is that the stress these individuals experience makes people with similar grading scores present with more pain.

BUT the team behind this paper decided to ask: what if the way we, as doctors, grade the severity of OA in BLACK patients and patients from other socio-economic backgrounds was BIASED against them?

Guess what. They were right!

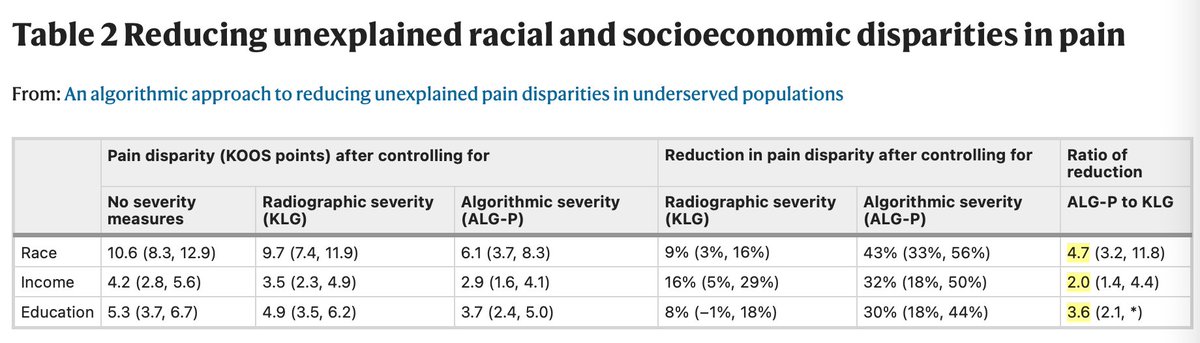

The paper details an AI algorithm trained to use the patient’s reported pain, rather than what the radiologist thought, as the outcome with which to diagnose the severity of OA. It was almost *5x better* at EXPLAINING the severity of pain in black patients than radiologists....

5x PEOPLE

Similar results were found for those who were lower income (2x better) and less educated (3.6x better)...
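
For anyone wondering what "x times better at explaining pain" can mean in practice, here is one way to think about it: measure the pain gap between two groups, then see how much of that gap disappears once you account for a severity score. What follows is only a minimal sketch on made-up data, not the paper's code, data or exact method. The names `group_gap`, `share_of_gap_explained`, `coarse_score` and `fine_score` are hypothetical, and the assumption that one score misses group-related damage is built into the simulation on purpose.

```python
import numpy as np


def group_gap(pain, group, severity=None):
    """Coefficient on the group indicator in an ordinary least squares
    regression of pain on group (and on a severity score, if given)."""
    cols = [np.ones_like(pain), group]
    if severity is not None:
        cols.append(severity)
    X = np.column_stack(cols)
    coef, *_ = np.linalg.lstsq(X, pain, rcond=None)
    return coef[1]


def share_of_gap_explained(pain, group, severity):
    """Fraction of the raw group pain gap that goes away after
    controlling for the severity score."""
    raw = group_gap(pain, group)
    adjusted = group_gap(pain, group, severity)
    return 1.0 - adjusted / raw


# Synthetic data (an assumption for illustration, not real patients).
rng = np.random.default_rng(0)
n = 5000
group = rng.integers(0, 2, size=n).astype(float)

# Damage a coarse grading scale picks up: barely differs by group.
visible = rng.normal(size=n) + 0.1 * group
# Damage the coarse scale misses: differs a lot by group.
hidden = rng.normal(size=n) + 0.9 * group
pain = visible + hidden + rng.normal(scale=0.5, size=n)

coarse_score = visible          # stands in for a human-defined grade
fine_score = visible + hidden   # stands in for a score learned from pain

share_coarse = share_of_gap_explained(pain, group, coarse_score)
share_fine = share_of_gap_explained(pain, group, fine_score)

print(f"gap explained by coarse score: {share_coarse:.0%}")
print(f"gap explained by fine score:   {share_fine:.0%}")
```

In this toy setup the ratio `share_fine / share_coarse` plays the role of the "x times better" figure: the score that captures more of the pain-relevant damage accounts for more of the gap between groups.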

There is so much detail to this paper. I highly recommend those in the field take the time to read it properly to understand the details, and how and what this paper has proven.

Further commentary here also by Dr Said Ibrahim.... https://www.nature.com/articles/s41591-020-01196-3

But for me personally, having read the paper, this is a window into what modern medicine has yet to do. Race, gender, class norming medicine is a task that we need to pursue as an industry to give everyone on this planet the healthcare they deserve. https://www.nejm.org/doi/full/10.1056/NEJMms2004740

Modern medicine, as it is practiced today, is biased. It’s a fact!

The most amazing thing about this paper, for me as a trainee doctor, trained data scientist, and black man, is that it paints a picture that when AI is designed with the right mindset and a diverse dataset, it has the potential to reverse bias in our society rather than accelerate it.

That’s the future I hope to contribute to in my lifetime... https://medium.com/@ivanbeckley/why-sponsor-a-medical-student-to-study-an-msc-in-data-science-7feb75cb21b

1. Is your dataset DIVERSE? If not, your algorithm is likely not as good as you think for everyone that it should be useful for.

2. Are you using the right standard? Better than human level may not be that groundbreaking if the human is already biased.

3. Are you building AI with the patient in mind or just to optimise the system? AI presents an opportunity to see things that previously we haven’t seen. Focusing on improving what patients need rather than what the system wants is the opportunity.

Thank you @2plus2make5 @oziadias @m_sendhil @jure @Cutler_econ for your diligence and work. This, I am sure, has been many, many years in the making. Likely this will be seen and unseen in time. But I hope it inspires an advancement of healthcare for all, not just a few.

And importantly motivate a diverse array of people into the pursuit of AI for good in the world!! We need you all. We have a lot of bias and injustice in society to correct! We need to be the change we want to see

For further analysis of the paper please read @oziadias's thread here... https://twitter.com/oziadias/status/1350160528061075458?s=20

And @DrLukeOR's thread as a radiologist on his thoughts of the paper... https://twitter.com/DrLukeOR/status/1350294017603366915?s=20
