1/5 In July 2016, Jitendra Malik gave an inspiring talk at Google, with a slide showing a block diagram of the visual pathway in a primate. He said "there are a lot of feedback loops as you see". He then stressed that current deep neural architectures are nonetheless mainly feedforward.

2/5 Then he said this, which has stuck in my mind ever since: "I think in the next few years we'll see a lot of papers which will show feedback has something significant". Sure, we do have RNNs and LSTMs, but those are very limited forms of feedback: short-range and at the micro level.

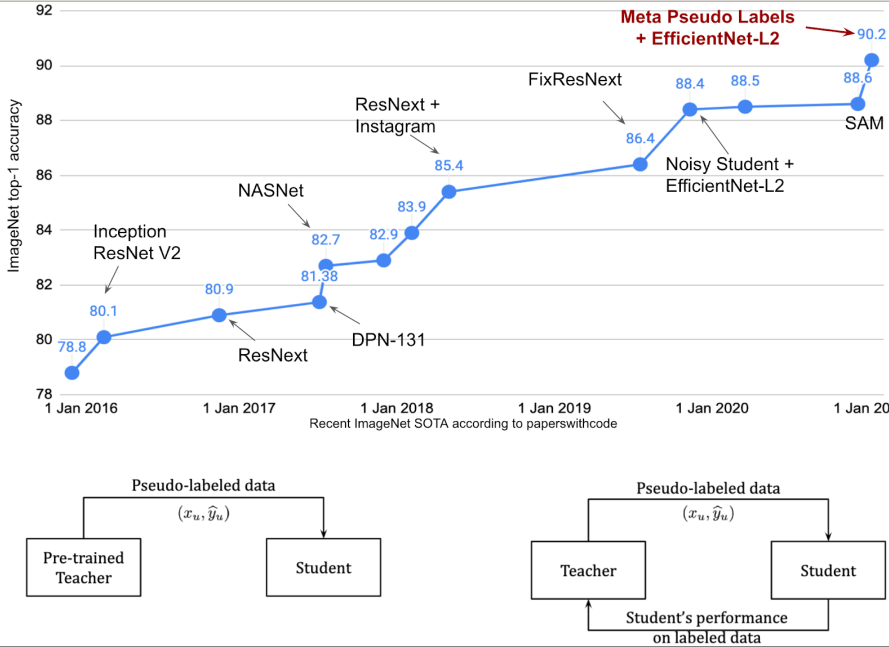

3/5 Earlier today a new SOTA on ImageNet was reported by @quocleix and his team (90.2% top-1 accuracy). Their approach relies on a feedback loop between a "pair of architectures" (teacher and student). See their tweet here: https://twitter.com/quocleix/status/1349443438698143744

4/5 Such feedback loops across neural networks, as we see here or similarly in self-distillation methods (figure below), are new and operate at a much larger scale than the micro-level feedback in RNNs/LSTMs. They have been shown to be a new and promising way to improve generalization.

5/5 Today, a few years after Jitendra's prediction, we witness that SOTA on ImageNet relies on rich and broad-range feedback loops. I think this is just the beginning, and we will see (and hopefully also understand) more about complex feedback loops in machine learning models.
