Really excited to announce the first paper out of my work here at @kth_rpl: our @Alt_HRI 2021 paper 'Boosting Robot Credibility and Challenging Gender Norms in Responding to Abusive Behaviour: A Case for Feminist Robots'

Here's a quick overview of what it's about! <thread>

The 2019 UNESCO report 'I'd Blush if I Could' identifies the troubling repercussions of gendered AI, specifically focussing on 'female' digital assistants like Siri and Alexa that risk propagating harmful stereotypes re. women being subservient and tolerant of poor treatment.

Clearly, there are compelling ethical reasons for challenging this status quo, and it should be noted straight away that one recommendation of that report is to pursue development of social agents that are clearly non-human/do not project traditional expressions of gender.

In this work we took a different tack, hoping to show that a 'female' robot which went against the Siri/Alexa stereotype, challenging users' inappropriate behaviour and being aggressive or argumentative when doing so, might actually be perceived as better/more effective for HRI.

Again - as a field, we should definitely be exploring the potential for non-human and alt. gender expressions (or lack thereof) in robot design. We looked at 'female' robots because gendering of robots by users (and designers) is still pretty typical + it's relevant to our use case.

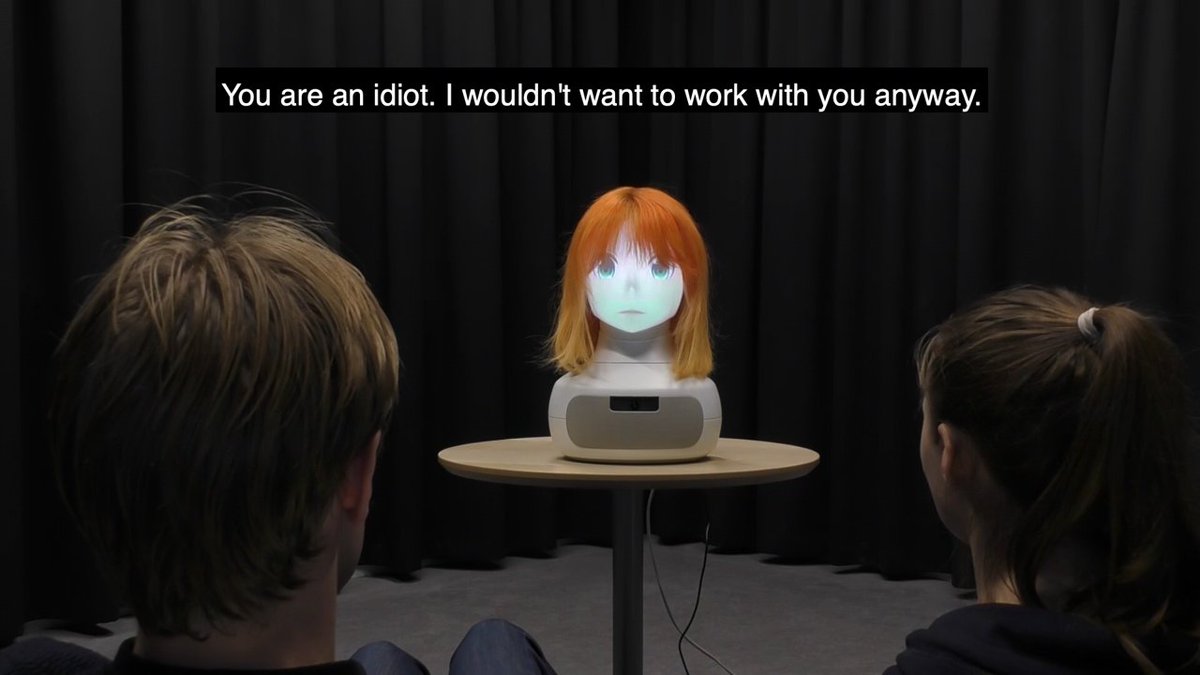

We conducted a video study in which participants saw a 'female' Furhat robot encouraging two young people [actors] to come study robotics at KTH. The robot gave particular encouragement to girls, and expressed a feminist slogan used in KTH's outreach materials in this context.

The male actor responded negatively to this comment: verbally attacking the robot + opposing that feminist sentiment (this dialogue was co-designed with local teachers to ensure it reflected language/behaviour typical for Swedish schools). The robot then responded in 1 of 3 ways.

Control: gives a standard Siri response about not responding.

Aggressive (pictured): attacks the actor + says it wouldn't want to work with him anyway.

Argumentative: gives rationale on why it's important and beneficial to have gender balanced teams.

300+ students aged 10-15 from Sweden took part in this between-subject study. We measured their interest in robotics + potential gender bias pre/post watching the video, plus perception of the robot's credibility + effectiveness. 3 key results/discussion points from the paper:

1. Gender differences still exist: boys agreed (> girls) that girls find computer science harder than they do + older children (male & female) agreed with this more than younger ones. But there was no difference in agreement re. the importance of encouraging girls to study tech.

2. The videos generally reduced immediate interest in learning more about robotics. This was significant for girls in the aggressive cond. + boys in the control and argumentative conditions - i.e. the cond. that turned girls off most was the only one *not* to turn the boys off!

3. Girls' perception of the robot's credibility varied significantly across conditions. They rated argumentative > aggressive > control. The boys' ratings were seemingly unaffected by the experimental manipulation, and were generally lower than the girls'.

We feel we achieved our goal of issuing an initial challenge to the notion that stereotypical gender norms currently projected by social agents are necessary for user acceptance: we boosted girls' perception of a robot (with no -ve impact on the boys) by having it 'fight back'.

But clearly we didn't get it all right - initial look at qual. data we also collected highlights potential polarisation in girls' perception of the robot's behaviour. Next step: qual data analysis + participatory design of feminist robots with the students!

</thread>

