Our new experimental paper on AI-driven precision medicine and tort liability is out in the Journal of Nuclear Medicine (https://jnm.snmjournals.org/content/early/2020/10/02/jnumed.120.256032)! (@kevin_tobia, Aileen Nielsen, Alexander Stremitzer, @eth_cle, @eth, @eth_en) 1/9

Tort liability holds physicians to the “standard of care.” But what is the standard of care when AI precision-medicine products recommend non-standard treatments? Legal scholars fear that medical negligence liability could slow the uptake of AI recommendations. 2/9

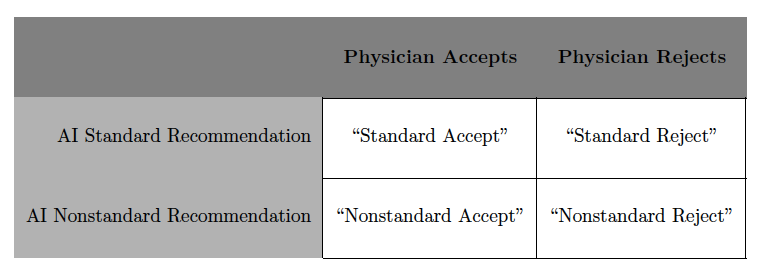

But that depends in part on how juries decide. We asked a representative sample of jury-eligible Americans to evaluate a hypothetical scenario in which a physician received AI advice to provide either a standard or a non-standard dosage of a cancer drug. We found a surprising answer. 3/9

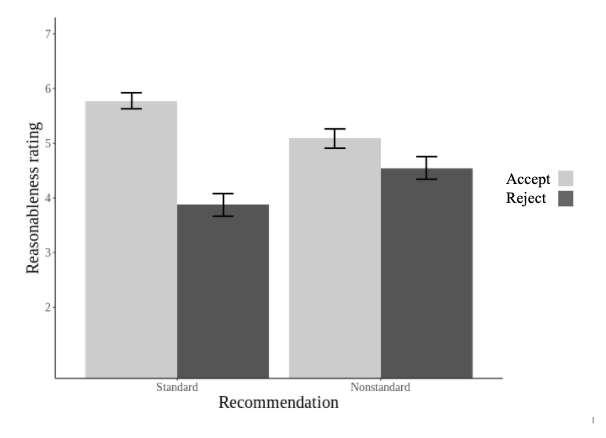

Ordinary people favor physicians who accept, rather than reject, AI advice. Accepting advice to provide standard care is evaluated most favorably, but accepting non-standard advice is also preferred to rejecting it. 4/9

What does this mean for physicians? All else being equal, jurors are surprisingly receptive to physicians who accept non-standard AI advice. 5/9

Here is a short video summarizing the results of our paper: 6/9

Of course, this experiment does not consider the role of expert medical testimony or the fact that many malpractice cases settle. These limitations are emphasized in a thoughtful invited response by @wnicholsonprice, @gerke_sara and @CohenProf: https://jnm.snmjournals.org/content/62/1/15 7/9

Our paper contributes to important discussions on medical AI raised by others, such as @mfroomkin, @ianrkerr, and Prof. Joelle Pineau (@rllabmcgill), who wrote about “When AIs Outperform Doctors.” 8/9

More generally, the question of how tort liability will or should affect the rollout of AI products has received a lot of attention in recent years, for example in work by @FrankPasquale, @rcalo, @aselbst, @vanessamak and many others. 9/9