1/ At @tryhaystack we have talked to over 500 engineering leaders and analyzed 400,000 private pull requests & 3M commits from non-open-source companies.

Here's our takeaway

2/ We've seen engineering leaders having the hardest time with the following questions:

- Are we getting better over time?

- Where does work get stuck?

- How effectively are we working?

- What did we accomplish last month?

- How do we assess the value of fixing tech debt?

3/ These questions are hard to answer because of the nature of the problem: multiple variables affect the outcome, and we just don't have all the data.

To progress further, we need to shift our thinking from localized problems to a bigger question: what's the main goal?

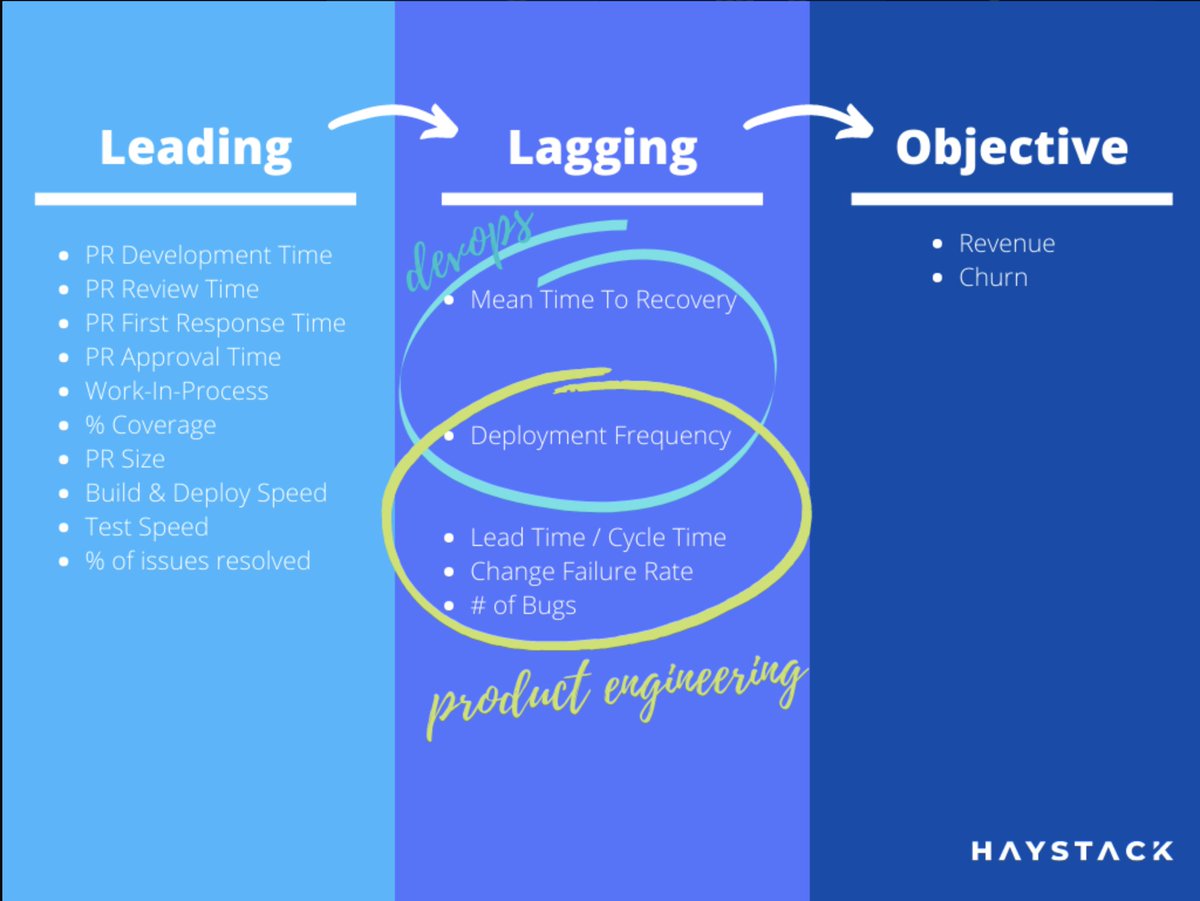

4/ Thinking from first principles, an organization is successful if it has rising Revenue and falling Churn. These are Lagging Metrics: they give you feedback later than you want.

5/ Each part of the organization has its own KPIs that reflect Revenue.

- sales -> Sales Revenue..

- product -> MAU..

- marketing -> CAC and LTV..

What about Engineering? We found that this metric is

"# of Successful Iterations"

6/ YC recommends launching fast, then iterating. @paulg lists these as items #2 and #3 in his essay "13 Sentences".

@elonmusk calls this Iterative Development and tries to maximize the # of iterations for both @SpaceX and @Tesla

http://www.paulgraham.com/13sentences.html

7/ It's obvious why this metric succeeds. You try something, get feedback on it, fix the issue, repeat. The more you iterate, the more likely you'll build the correct product, the more likely you'll be ahead of the market.

8/ The million-dollar question is "How do we track # of successful iterations?"

Deployment Frequency: The more you deploy, the more you iterate.

This is correct, but missing a piece

9/ @RoyOsherove gives a really good example in his GOTO talk

The manager asks: "How can we deploy faster?"

The team responds: "Let's not write tests!"

We are ignoring half of the statement:

# of "SUCCESSFUL" iterations

10/ We should not only track speed; we should track quality along with it.

- Change Failure Rate

- # of Bugs

- MTTR (mean time to recovery)

are proxies for high-level app quality. There are more details on the correct way to track these, which I'll not get into. DM if you want to know more.

11/ Summarizing

Aim: # of successful iterations

Track:

- Speed:

* Deployment Frequency

* Cycle Time

- Quality:

* Change Failure Rate

* # of Bugs

* MTTR

We call these Northstar Metrics
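
To make the summary concrete, here is a minimal sketch of how these five numbers could be computed from a list of deployment records. The `Deployment` fields, the `northstar_metrics` helper, and the sample data are all hypothetical. The definitions are assumptions too: cycle time is taken as first commit to production, and recovery time as deploy to restore. Your tooling may define them differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; map these fields to your own tooling.
    first_commit_at: datetime               # when work on the change started
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # caused an incident or rollback
    restored_at: Optional[datetime] = None  # when service was restored


def northstar_metrics(deploys, period_days):
    """Speed and quality metrics for deployments made within one period."""
    n = len(deploys)
    failures = [d for d in deploys if d.failed]
    cycle_times = [d.deployed_at - d.first_commit_at for d in deploys]
    restore_times = [
        d.restored_at - d.deployed_at
        for d in failures
        if d.restored_at is not None
    ]
    return {
        # Speed
        "deploys_per_week": n / (period_days / 7),
        "median_cycle_time": median(cycle_times) if cycle_times else None,
        # Quality (a bug count would come from the issue tracker, not shown)
        "change_failure_rate": len(failures) / n if n else None,
        "mttr": (
            sum(restore_times, timedelta()) / len(restore_times)
            if restore_times
            else None
        ),
    }


# Made-up sample: four deployments over a two-week period.
sample = [
    Deployment(datetime(2021, 1, 1, 9), datetime(2021, 1, 2, 9)),
    Deployment(datetime(2021, 1, 3, 9), datetime(2021, 1, 5, 9),
               failed=True, restored_at=datetime(2021, 1, 5, 11)),
    Deployment(datetime(2021, 1, 6, 9), datetime(2021, 1, 7, 9)),
    Deployment(datetime(2021, 1, 8, 9), datetime(2021, 1, 11, 9),
               failed=True, restored_at=datetime(2021, 1, 11, 13)),
]
metrics = northstar_metrics(sample, period_days=14)
```

The median is used for cycle time rather than the mean so that a single long-running change does not dominate the number.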

12/ What about all these metrics:

- % coverage

- pr size

- build & deploy speed

- review time

- # of work in progress

...

These are "Leading Metrics". They are tools to get faster feedback on your process changes.

DON'T use Leading metrics as KPIs.

13/ A common pitfall most teams fall into is tracking exact numbers. Don't do this.

Track:

- Trends

- Distributions (aka outliers)

@CatSwetel has some interesting ways of visualizing trends and distribution.
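
As a rough illustration of tracking trends and distributions instead of exact numbers, the sketch below smooths a series with a rolling median and flags outliers with Tukey's fence (Q3 + 1.5 × IQR). Both techniques are swapped in here as common choices, not something the thread prescribes. The function names and the sample cycle times are made up.

```python
from statistics import median, quantiles


def rolling_median(values, window):
    """Median of each trailing window; smooths noise into a trend line."""
    return [
        median(values[i - window + 1 : i + 1])
        for i in range(window - 1, len(values))
    ]


def outliers(values, k=1.5):
    """Values above Q3 + k * IQR (Tukey's fence), one common outlier rule."""
    q1, _, q3 = quantiles(values, n=4)
    fence = q3 + k * (q3 - q1)
    return [v for v in values if v > fence]


# Hypothetical PR cycle times in hours, oldest first.
cycle_hours = [20, 22, 19, 25, 96, 21, 18, 17, 16, 15]

trend = rolling_median(cycle_hours, window=5)  # is the team getting faster?
slow = outliers(cycle_hours)                   # which PRs deserve a closer look?
```

With this sample, the 96-hour pull request barely moves the trend line, yet it still surfaces as an outlier worth a conversation.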

14/ Continuous Delivery advocates like @samnewman and @thoughtworks know what works and what doesn't.

They started the CI/CD movement to help engineers "Iterate Faster Successfully"

We must not forget @jezhumble, @RealGeneKim, @nicolefv for their incredible research.

15/ Other Sources:

1) Accelerate: Statistically shows what works https://www.amazon.com/Accelerate-Software-Performing-Technology-Organizations/dp/1942788339

2) DORA Research: The Sequel to Accelerate: https://services.google.com/fh/files/misc/state-of-devops-2019.pdf

3) @tastapod and @tdpauw have amazing talks about @GoldrattBooks and the Theory of Constraints.

16/ Here are some of the great folks who moved the ecosystem forward: @martinfowler, @jpetazzo, @jesserobbins, @allspawm, @AdamTornhill, @eoinwoodz, @jcoplien, @davidfarley, @andrew_glover, @PaulDuvall, and many more

17/ If you'd like to learn more about this topic, feel free to check out our 3-page PDF: https://www.usehaystack.io/leading-lagging-metrics
