State of Testing in Rails apps: this is the survey we ran at @arkency recently.

Here's a thread with how people have responded:

80% of respondents find testing inseparable from software development.

I guess the number would be different in other language communities.

I'm happy to be a Rubyist, where testing is part of the culture.

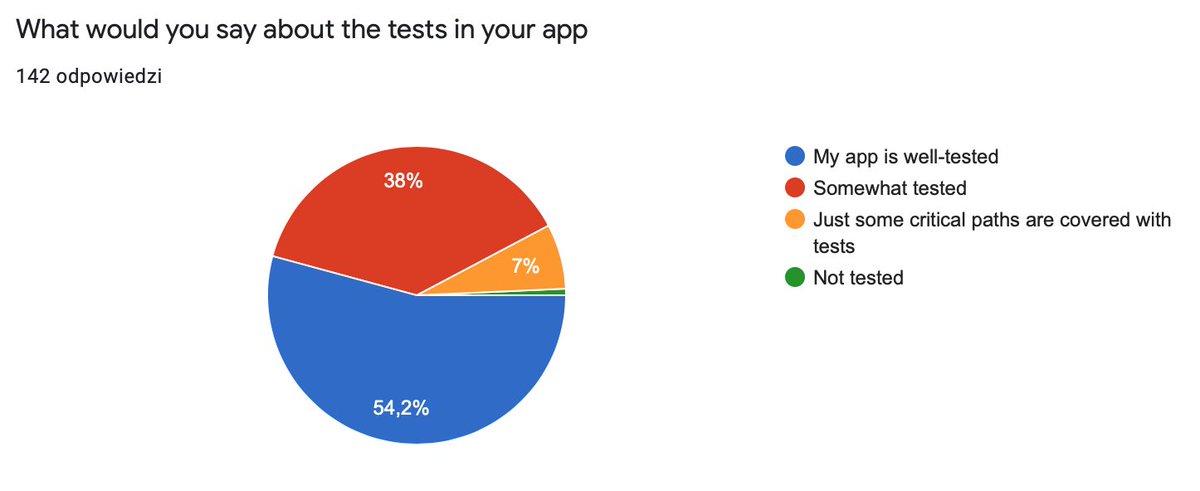

Now a bit of self-assessment.

55% say their app is well-tested. I wonder how well people's definitions of "well-tested" agree with each other.

40% say it's "somewhat" tested. An honest thing to say.

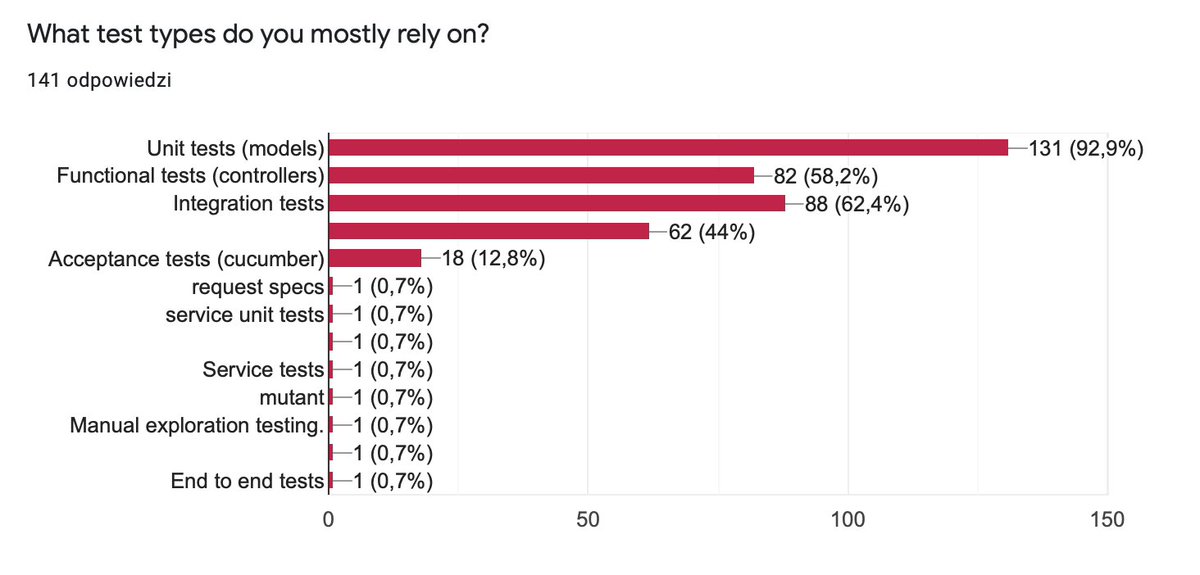

Which tests do you mostly rely on? Here comes the never-ending issue of how we name different test types.

The first three follow the original Rails naming, and unit tests are in the lead.

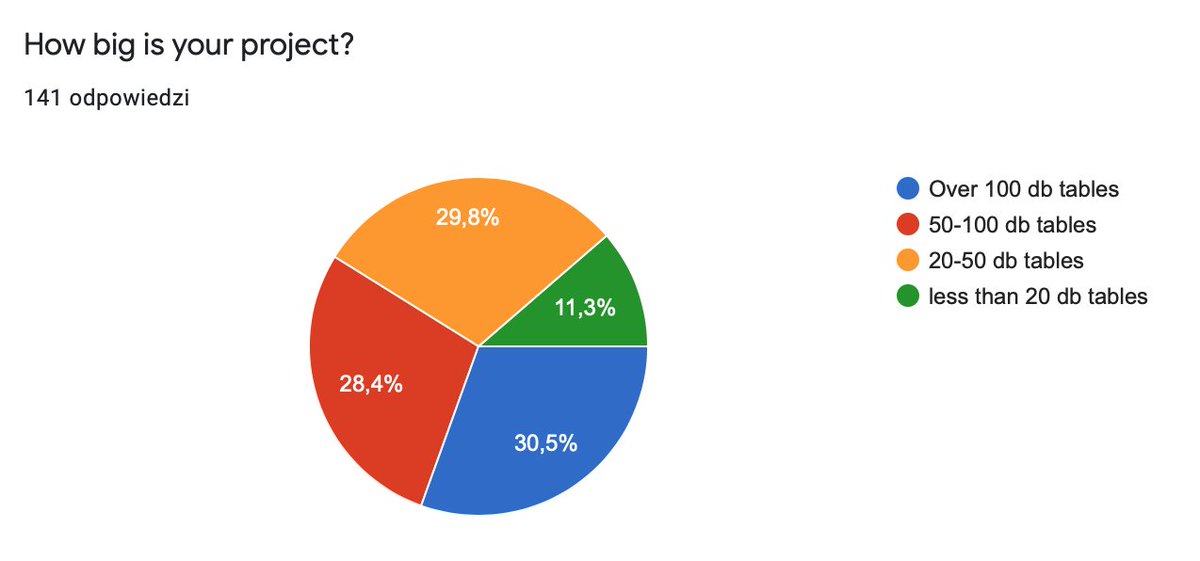

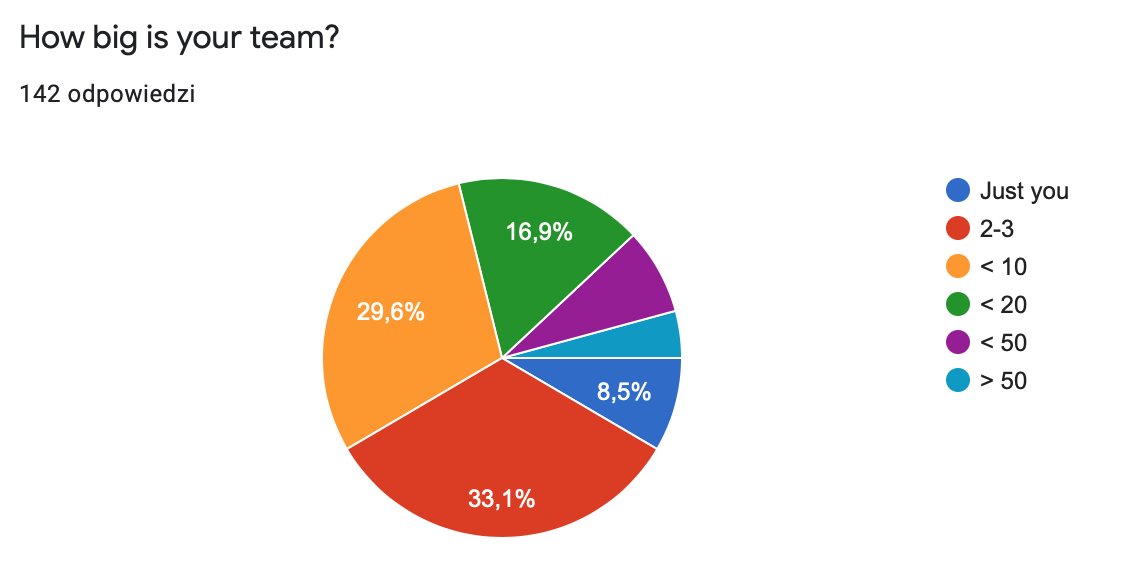

How big is your project?

The number of DB tables is a metric everyone understands.

- 30% 100-∞ tables

- 30% 50-100 tables

- 30% 20-50 tables

I'm glad we didn't ask for LOC here :)

How long does it take to run a single test case on your dev machine?

I was really curious about this one.

Actually, I'm surprised by the results.

I wonder whether the fortunate 17.9% who run a test in the "blink of an eye" are talking about Rails projects or non-Rails ones.

A further 23.6% are fortunate enough to run a test within 5 seconds. That's good too, but still far from TDD nirvana.

Actually, 5 seconds is enough for me to lose the …

In my experience, keeping a single test run under 5 seconds takes dedicated effort in a typical Rails project that isn't freshly bootstrapped.

Not to mention the "blink of an eye": for that, you have to step away from the default Rails testing conventions.

Oh, at least someone is reading. I'll keep going then :) https://twitter.com/lucianghinda/status/1348565748298481665

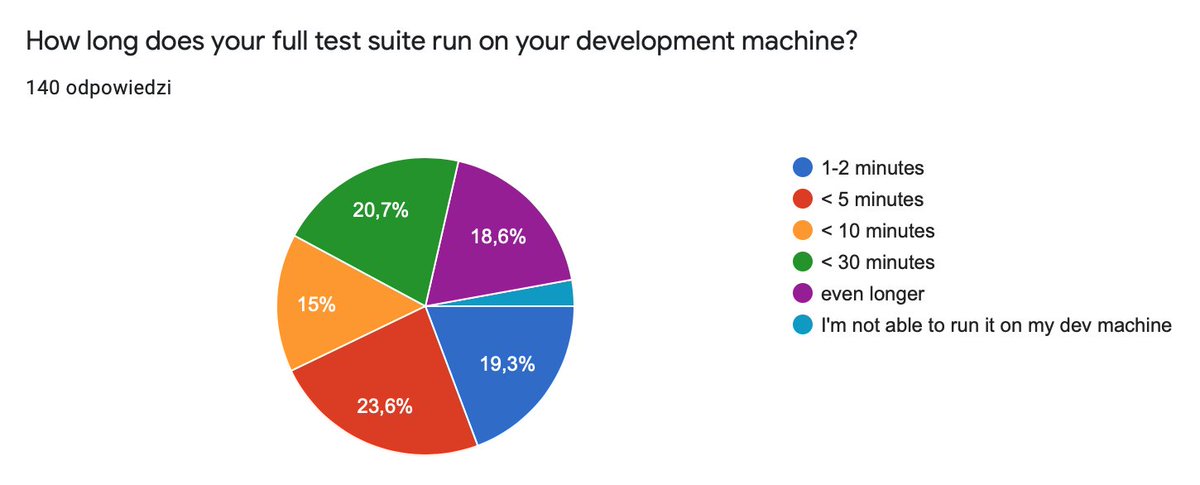

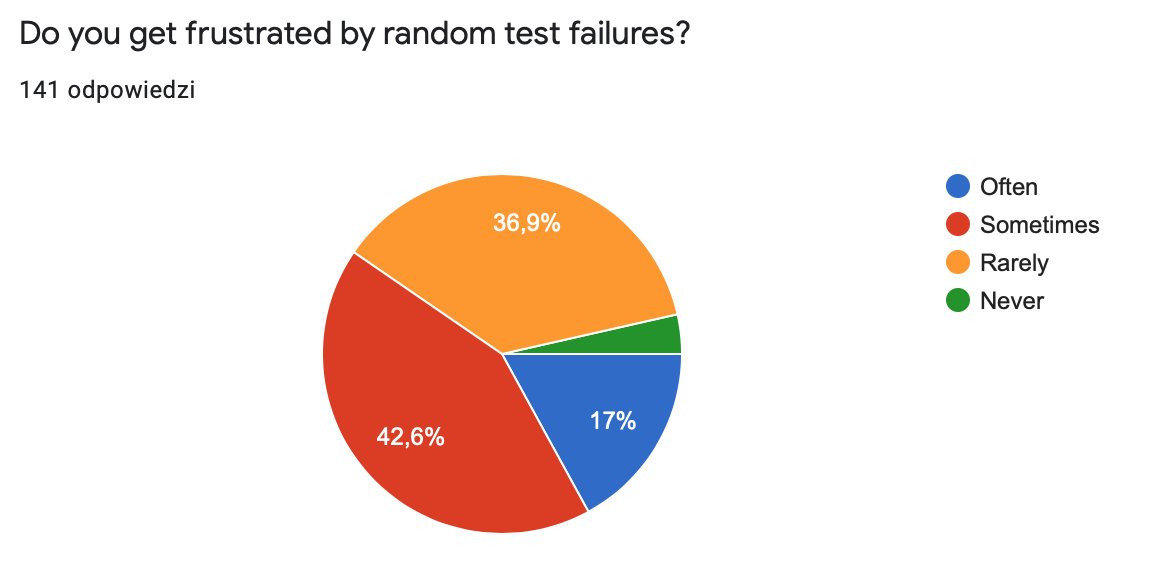

Now, this is a question I was super curious about:

How long does a FULL suite run on your machine?

20% of folks have to put up with over half an hour. At that point it's pretty much impractical to run it locally. Perhaps no one even does, relying on CI alone.

A further 20% don't have it much better, reporting that a full suite takes 10-30 minutes locally.

I wonder how satisfied people are with these numbers.

Is it fine, and are we OK relying on ever-increasing computing power?

Or is it some kind of tech-debt?

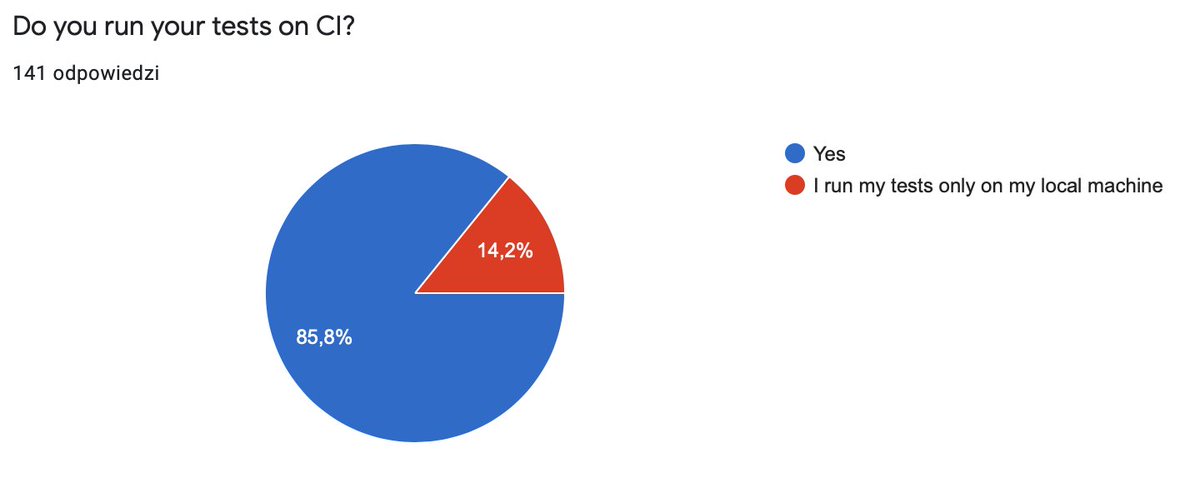

Now, do you use CI?

14.2% do not. The reason is unknown; I guess it's rarely a personal preference.

I wonder how much this group overlaps with the 18.6% who need over half an hour to run the full suite.

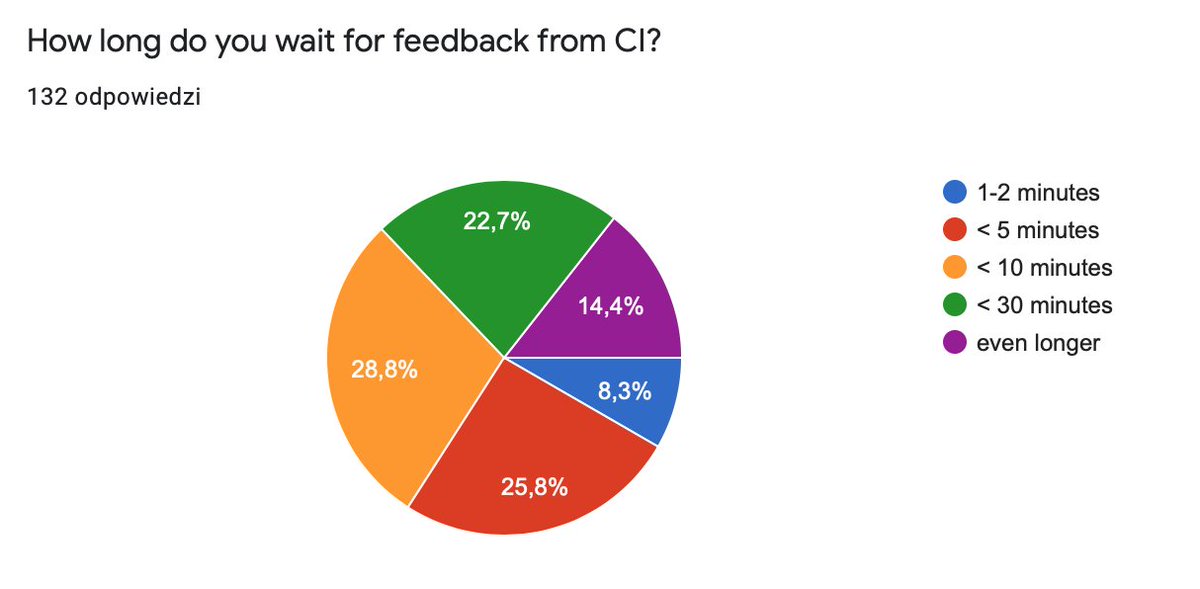

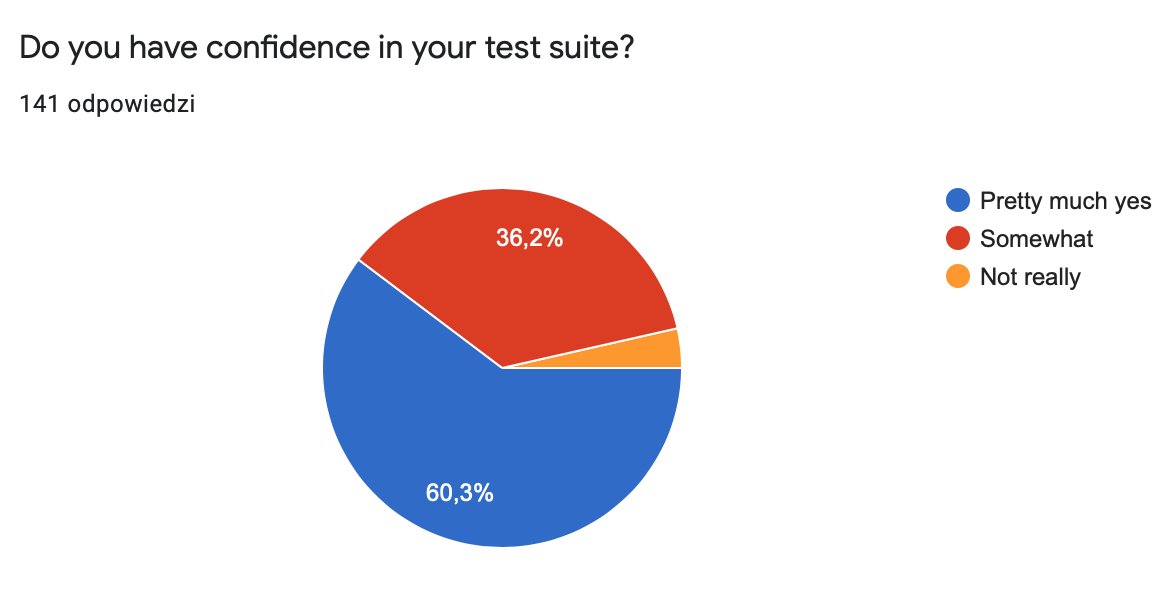

Now this is subjective but telling:

Do you have confidence in your test suite?

60% of people answered "Pretty much yes".

That's one of the reasons you can be happy to work on your project.

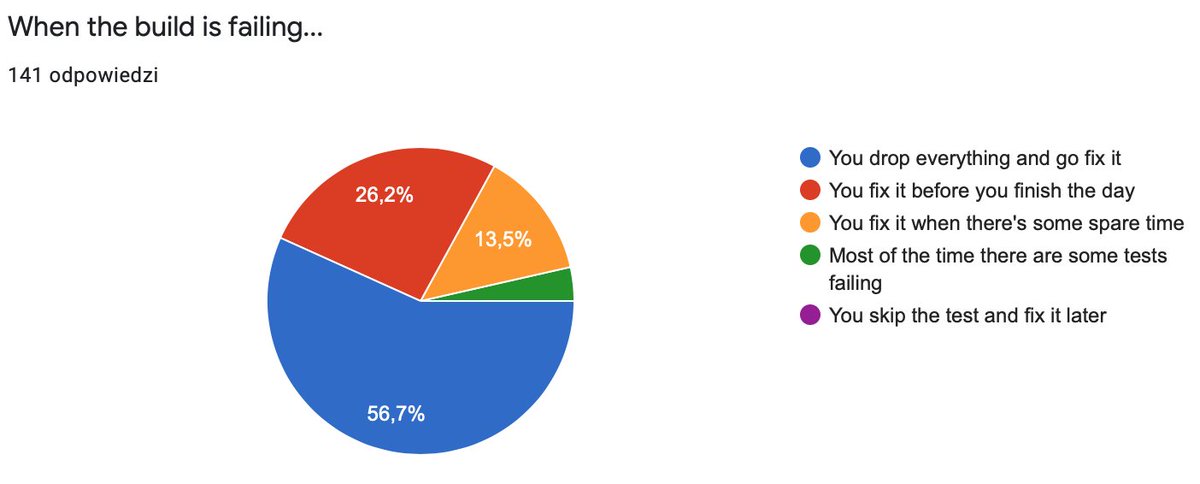

What do you do when the build is failing...

For 57%, a green build is sacred.

For the rest, it's negotiable.

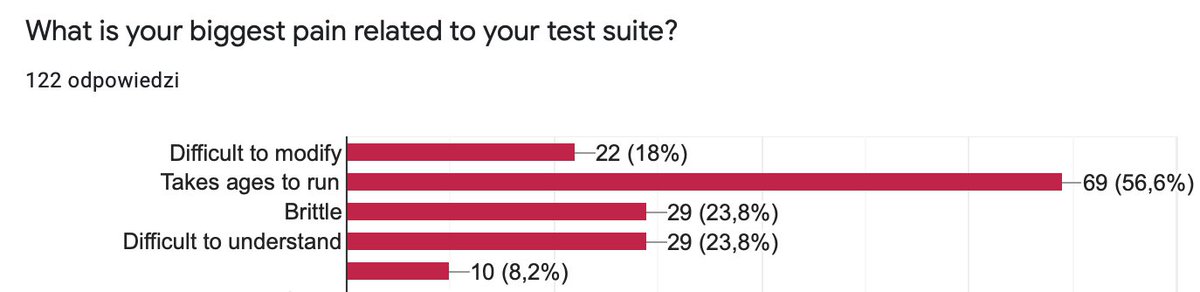

Top reasons people are dissatisfied with their tests:

- difficult to modify

- takes ages to run

- brittle

- difficult to understand

- makes it difficult to change code

^ That answers my question about whether people are satisfied with long-running builds.

And I didn't even ask about failing cases (as opposed to skipped ones). I know projects where, most of the time, some failing cases were left in the suite. Teammates were supposed to remember which ones they were, so they could tell whether their change actually broke something. Skipping was forbidden :)

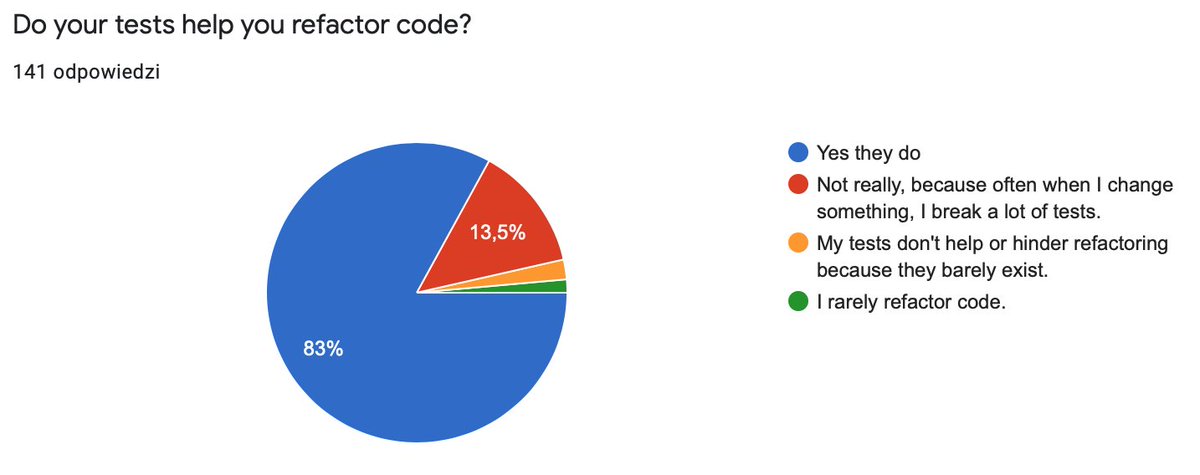

Do your tests help you refactor code?

For 83% of respondents, they do.

Actually, I'm surprised the number is this high.

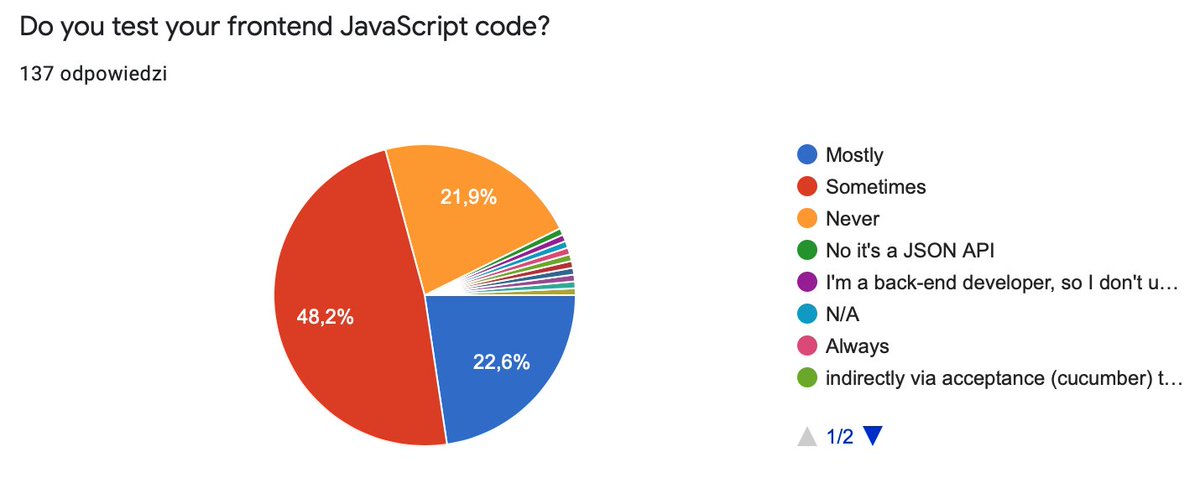

How do you replace dependencies in tests?

I guess we need another survey on how people define these terms.
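For reference, here is one widely used vocabulary, from Gerard Meszaros' *xUnit Test Patterns* and popularized by Martin Fowler's "Mocks Aren't Stubs". It distinguishes a stub, which returns canned answers, a fake, which is a working but simplified implementation, and a mock, which verifies the calls it receives. Respondents may well have used the terms differently. The plain-Ruby sketch below illustrates this vocabulary. `Signup` and all the doubles are hypothetical, and a real project would more likely use rspec-mocks or `Minitest::Mock` than hand-rolled classes.

```ruby
require "set"

# Hypothetical code under test: signs a user up and sends a welcome email.
class Signup
  def initialize(repository:, mailer:)
    @repository = repository
    @mailer = mailer
  end

  def call(email)
    return :taken if @repository.exists?(email)

    @repository.save(email)
    @mailer.deliver_welcome(email)
    :ok
  end
end

# Stub: returns a canned answer and checks nothing.
class TakenEmailStub
  def exists?(_email)
    true
  end

  def save(_email)
    raise "not expected to be called"
  end
end

# Fake: a working, lightweight implementation (in memory instead of a database).
class InMemoryRepository
  def initialize
    @emails = Set.new
  end

  def exists?(email)
    @emails.include?(email)
  end

  def save(email)
    @emails << email
  end
end

# Mock: knows which calls it expects and verifies them afterwards.
class MailerMock
  def initialize
    @expected = []
    @received = []
  end

  def expect_welcome(email)
    @expected << email
  end

  def deliver_welcome(email)
    @received << email
  end

  def verify!
    return true if @expected == @received

    raise "expected welcome emails to #{@expected.inspect}, got #{@received.inspect}"
  end
end

# Taken email: the stub drives the branch, the mock confirms nothing was sent.
mailer = MailerMock.new
taken_result = Signup.new(repository: TakenEmailStub.new, mailer: mailer).call("a@example.com")
mailer.verify!

# New email: the fake stores it, the mock confirms the welcome email went out.
repository = InMemoryRepository.new
mailer = MailerMock.new
mailer.expect_welcome("b@example.com")
ok_result = Signup.new(repository: repository, mailer: mailer).call("b@example.com")
mailer.verify!
```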

How do you assess coverage?

40% do not.

56% use SimpleCov.

My contrarian opinion: there's little difference between the two above.

Does SimpleCov give you anything beyond the knowledge that your code doesn't crash?

Mutation testing seems to be the only way to make assessing test coverage less superficial. But it requires considerable effort to introduce.

cc @_m_b_j_
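Here is a minimal plain-Ruby sketch of why line coverage and mutation testing can disagree. The `discount` method, both tests, and the three mutants are hypothetical and written by hand. Real tools, such as the `mutant` gem, generate and run mutants automatically, and this sketch only mimics the idea. Both tests execute every line of `discount`, so a line-coverage report would show it as fully covered either way. Only the stronger test "kills" the mutants, meaning it fails when the code is changed.

```ruby
# Hypothetical code under test: 10% off orders of 100 or more (integer amounts).
def discount(total)
  total >= 100 ? total - total / 10 : total
end

# Weak test: runs both branches (full line coverage) but barely checks results.
def weak_test(impl)
  impl.call(150).is_a?(Numeric) && impl.call(50).is_a?(Numeric)
end

# Stronger test: pins down exact values, including the boundary at 100.
def strong_test(impl)
  impl.call(150) == 135 && impl.call(100) == 90 && impl.call(50) == 50
end

ORIGINAL = method(:discount)

# Hand-written mutants: small syntactic changes a mutation tool might make.
MUTANTS = {
  ">= replaced with >" => ->(total) { total > 100 ? total - total / 10 : total },
  "- replaced with +" => ->(total) { total >= 100 ? total + total / 10 : total },
  "discount branch removed" => ->(total) { total }
}.freeze

# A mutant "survives" when the test still passes against it.
def surviving_mutants(test)
  raise "test must pass against the original code" unless test.call(ORIGINAL)

  MUTANTS.select { |_name, mutant| test.call(mutant) }.keys
end

p surviving_mutants(method(:weak_test))   # all three mutants survive
p surviving_mutants(method(:strong_test)) # => []
```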

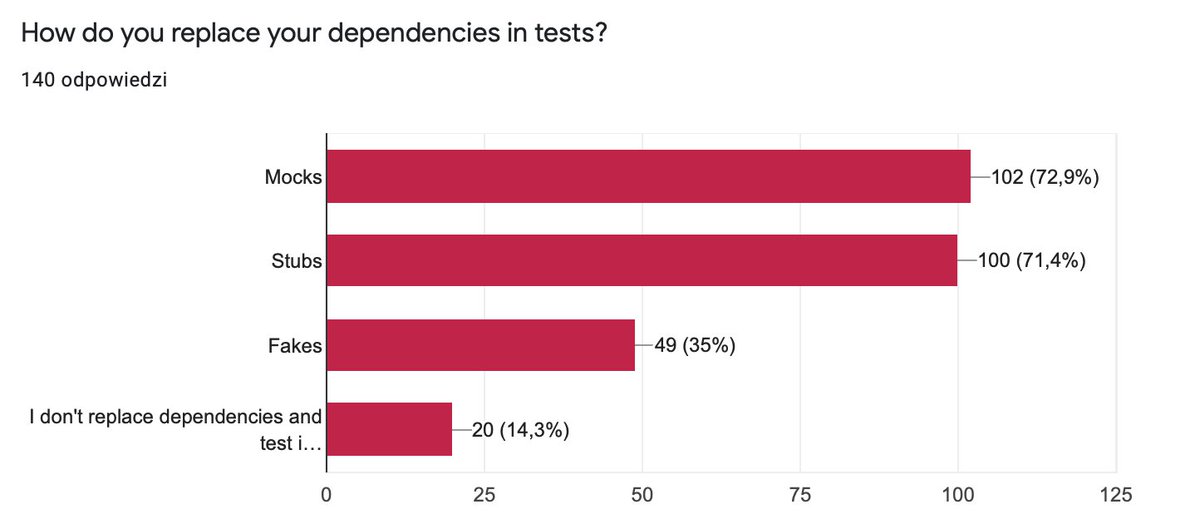

What coverage level do you aim for?

Personally, I'm cautious about developer policies that enforce a number here.

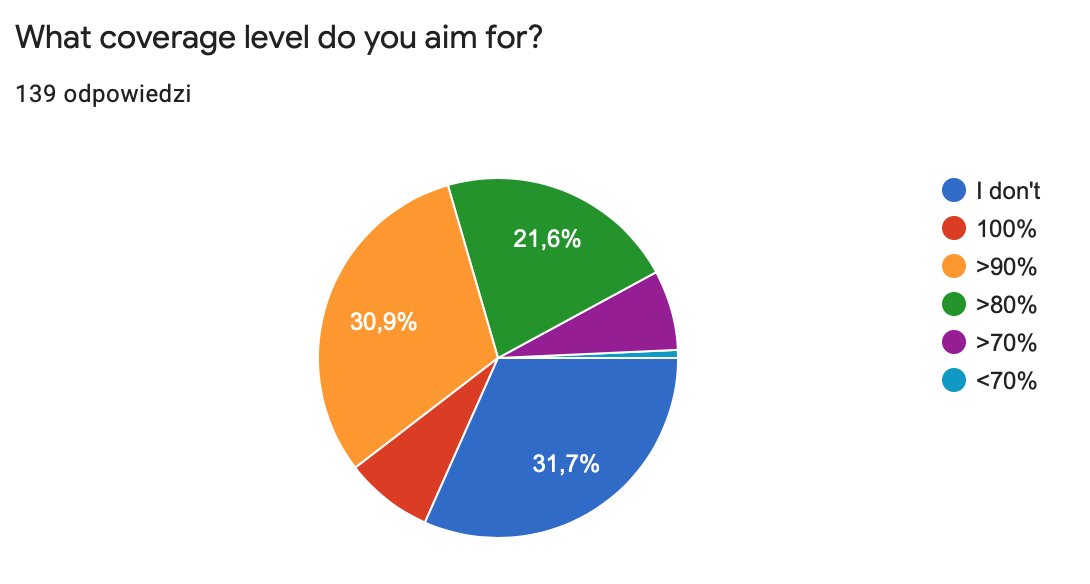

Most people "sometimes" get frustrated by random test failures. This doesn't tell us much, but at least you know you're not alone.

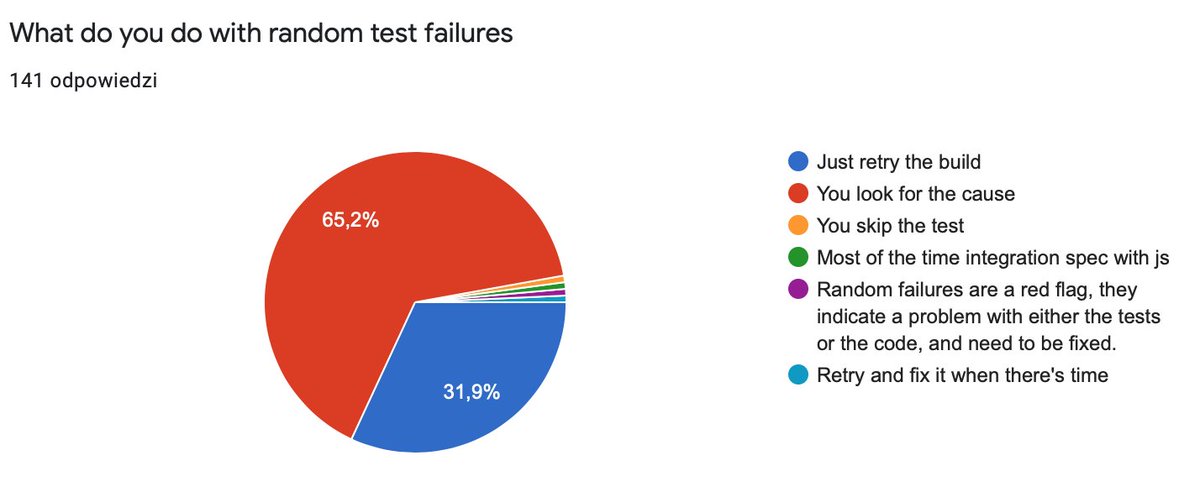

But this is a more specific question: what do you do with random test failures?

32% are fine with kicking the build.

65% look for the cause.

I could've also asked whether they're determined to find the cause at all costs.
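One common cause of random failures is order dependence. One test leaves shared state behind, and another test silently relies on the default. Minitest and RSpec can run tests in random order and print the seed they used, and rerunning with the same `--seed` reproduces that order. The plain-Ruby sketch below simulates this, assuming a hypothetical `Settings` module and two hypothetical tests. A given seed always produces the same outcome, which is what makes the cause findable.

```ruby
# Hypothetical process-wide setting shared by all tests (think of a class-level
# config, a cache, or a database row left behind).
module Settings
  class << self
    attr_accessor :currency
  end
end

TESTS = {
  # This test changes the shared setting and never restores it.
  "switches to EUR" => -> {
    Settings.currency = "EUR"
    Settings.currency == "EUR"
  },
  # This test silently assumes the default setting.
  "formats a price" => -> {
    "#{Settings.currency} 10" == "USD 10"
  }
}.freeze

# Runs every test once, in an order derived from the seed (as random test
# ordering does), and returns the names of the tests that failed.
def run_suite(seed)
  Settings.currency = "USD" # each run starts from a fresh process
  order = TESTS.keys.shuffle(random: Random.new(seed))
  order.reject { |name| TESTS[name].call }
end

failing_seed = (1..100).find { |seed| run_suite(seed).any? }
passing_seed = (1..100).find { |seed| run_suite(seed).empty? }

# Rerunning with the same seed reproduces the same result every time.
puts "seed #{failing_seed}: #{run_suite(failing_seed).inspect}"
puts "seed #{passing_seed}: #{run_suite(passing_seed).inspect}"
```

In a case like this, the fix is to restore the shared state after the test that changes it, or to stop sharing it. In a larger suite, RSpec's `--bisect` option can help narrow down which test is the culprit.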
