I can understand the singular need to remove an imminent threat from social media. HOWEVER. It didn’t have to get to this point, & it should never get to this point again. How we handle this going forward cannot be centered around Trump or other, specific bad actors. (thread)

Social media in its current iteration allows bad actors to pretend to be anyone, anywhere, with any kind of credentials. There are no guardrails whatsoever for the public to effectively ascertain whether what they’re seeing is real or fake; organic or purposely manipulated.

Social media in its current iteration allows bad actors to erect and command digital armies - from thin air and with little investment - with none of us the wiser that they’re in our midst.

Social media has a responsibility to give us the tools we'd have in normal, real life to decide whether we will trust someone. To decide whether they're dangerous. To decide whether we'll amplify them.

Social media has the responsibility to hamper those who wish us serious harm in their efforts to effect that harm - not through censorship, but through transparency.

Banning Trump at the 11th hour, only after:

-bloodshed and violence

-our entire Congress being in serious danger

-the line of succession being in serious danger

-a call for insurrection being allowed to dominate our online lives + news cycles

Does NOT let the platforms off the hook.

I call on the new Congress, if they are serious about preserving democracy, to regulate social media. Again: I’m not asking for censorship, I’m asking for regulation. Hold Silicon Valley accountable.

Here is a place to start: https://twitter.com/saradannerdukic/status/1123589567146332162?s=20

And here: https://gdpr.eu/what-is-gdpr/

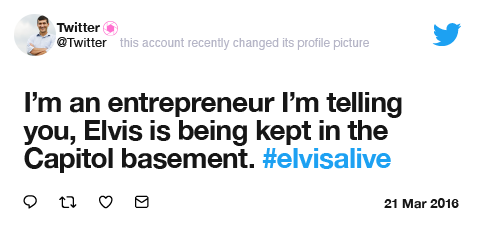

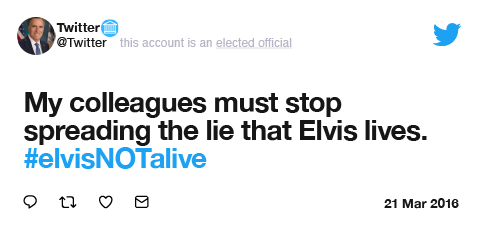

But let’s take it further. What if, since at least 2015, guardrails had been in place? Let’s imagine a UX focused on critical thinking, and the tools that help us make better choices.

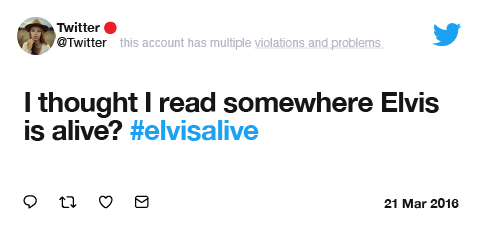

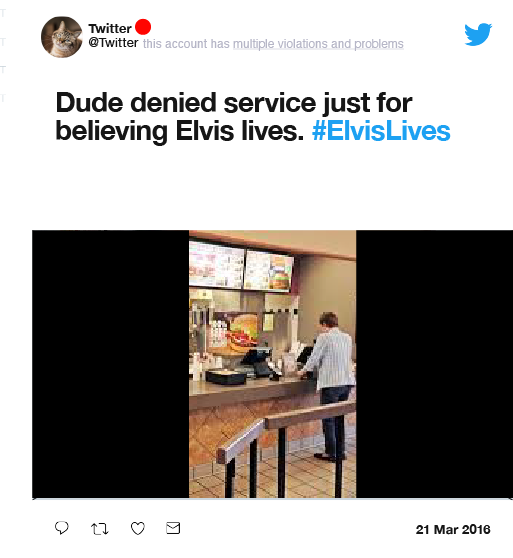

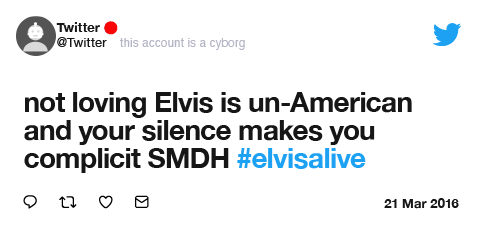

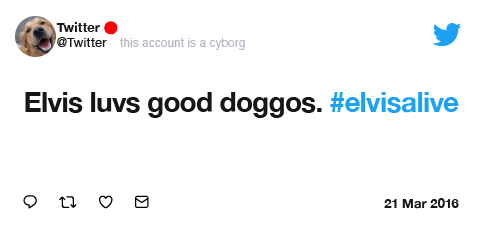

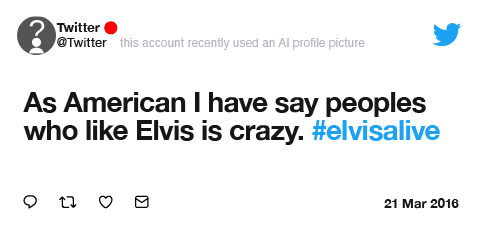

What if, when interacting with people, we had easily recognizable visual cues that let us assess whether to engage, like, or share?

In the graphics above, what if you could click on “violations” and see what they are? Violations like:

-cannot verify identity

-excess number of handle and/or display name changes

-use of AI photo for profile

-inorganic activity

-excessive posting of known disinformation

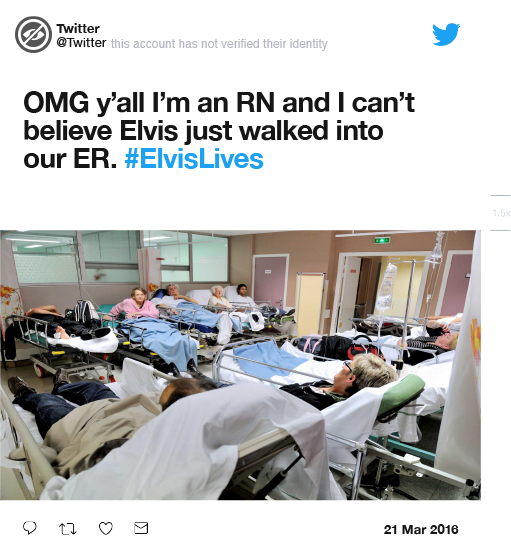

-unsupported claims about professional background

-false claims about profession

-excessive use of hashtags associated with disinformation and/or violations

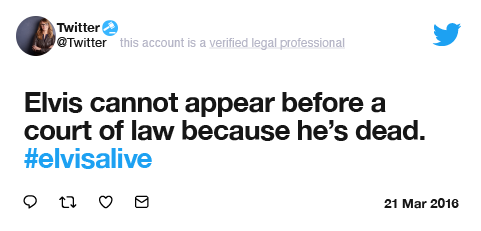

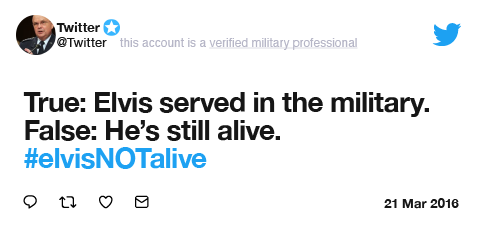

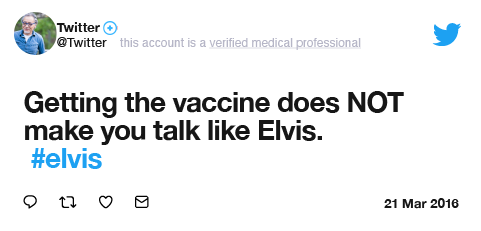

Now let’s talk about professionals, whom many of us follow on this platform for expertise and advice. What if medical, legal and military professionals who want to claim that title in their bio had to undergo a verification process?

(and if you click on the "verified professional" part, you can see if it's a dermatologist or epidemiologist telling you what's what about COVID, etc.)

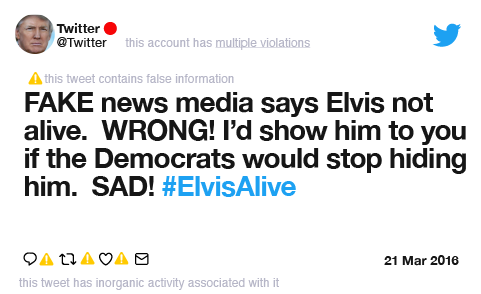

What if you could click on the caution symbol and see a list like:

-inorganic retweets and likes

-the content is known disinformation

-a high number of unverifiable accounts are engaging with it

-a high number of accounts with violations and problems are engaging with it

What if, when viewing profiles, we could do this? https://twitter.com/saradannerdukic/status/1116768050999627776?s=20

What if we had more control over who can follow us and engage with us? What if these were some of the safety features we could toggle on and off?

-do not allow bots or cyborgs to follow me

-do not allow locked accounts to follow me without my permission

-do not allow accounts using AI avis to follow me

-do not allow accounts without verified identities to follow me

And, instead of just making problem accounts go “poof,” what if there was a digital perp walk, followed by a publicly accessible archive? What if the archive allowed the public and journalists the ability (and responsibility) to identify networks and bad intent?

(Obviously, anything that’s dangerous or exploitative would not be included in this)

Maybe the perp walk could look like this:

-Before suspension, accounts must be left up for 1 week so that people who interacted with them are made aware, and journalists can take note.

-When avis auto-change due to activity or violations, the accounts and their tweets can’t be deleted for 72 hours, allowing people to examine their activity.

-If an account has been using an AI photo as an avi, it can’t update its profile or delete the account for 72 hours once AI is designated.

Finally:

TRANSPARENCY:

In the archive of suspended accounts -

-every tweet in archive flagged with: "this tweet is from an account that has been suspended due to violations"

-all tweets, including deleted tweets, are visible*

*except anything dangerous or exploitative

-all accounts it followed and was followed by are visible

-accounts from which it received a high amount of engagement are displayed (even if likes and retweets were deleted)

I of course don’t have all the answers - far from it. But we have to start somewhere. We have to do better if we want democracy to survive. We HAVE to.