A few thoughts about the Trump account suspension, not at all comprehensive:

I’m most concerned about how account suspension affects vulnerable & marginalized speakers who lack other options. The US President is literally the least vulnerable person in the world in that regard.

In general, services should clearly explain their policies and enforce them as consistently as possible. These past few days, though, it seems like decisions about Trump’s accounts (across multiple sites) have been made via (important!) balancing tests and contextual analysis.

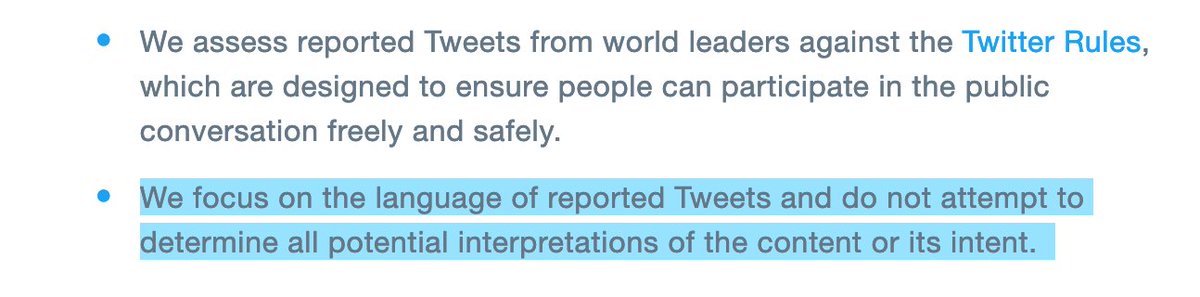

For example, Twitter gave a pretty detailed, thoughtful explanation of Trump’s suspension (https://blog.twitter.com/en_us/topics/company/2020/suspension.html) that seems at least in tension with their 2019 post on world leaders’ accounts (https://blog.twitter.com/en_us/topics/company/2019/worldleaders2019.html), where they say:

That’s not meant as a “gotcha” aimed at Twitter. Context is enormously important in understanding the meaning of language, including the threat conveyed by Trump’s final tweets. At the same time, that kind of nuanced contextual analysis is literally impossible to perform at scale.

So we get to the line-drawing questions: Take context into account for Trump only, or for all world leaders? Only around elections/power-transfer times? Only when political violence has already been incited? Only for countries where moderators have deep contextual understanding?

Answering “yes” to those questions feels immensely unsatisfying, but OTOH so does the idea of social media companies routinely evaluating the statements & actions of political figures around the world and putting stringent limits on what kind of political rhetoric is acceptable.

For my part, I think we need to have honest public policy conversations about prioritization in moderation. Major companies could & should devote more resources to identifying risks of offline violence in countries around the world and developing mitigation/prevention strategies.

(This is much more like intelligence work than standard content moderation, something we’ve learned from various companies’ efforts to grapple with coordinated election interference these past 4 years. Challenging, resource-intensive, and a complement to regular moderation.)

But even major companies will face a limit, eventually, to the resources they can devote to contextual analysis in moderation (there’s a finite number of potential mods in the world & “AI” isn’t a magic solution). Smaller competitors face that limit pretty much immediately.

So: prioritization. It seems pretty clear that lots of US-based tech companies are prioritizing the violence and threats to democracy in the US right now, which makes sense for a lot of reasons. But what other issues deserve this level of scrutiny and attention to context?

That’s not a rhetorical question. There are many potential answers: Incitement to violence by other world leaders/political figures/celebrities/regular folks. Hate speech that can lay the groundwork for eventual violence. Disinformation that warps our shared sense of reality. &c.

It’s (been) clear that a contextualized response is absolutely necessary, in some circumstances, to prevent violence, and it’s crucial for distinguishing speech that incites from speech that educates/documents/informs. But that contextualized response is not replicable at scale.

In that sense, Trump’s suspensions aren’t really generalizable to the broader debates about moderation & intermediary liability. There, we need to grapple with systemic and scalable impacts. The kind of analysis Twitter did here will never undergird every moderation decision.

(More relevant to the Section 230 & DSA discussions are the parallel stories about Apple & Google kicking Parler out of their app stores for failing to moderate sufficiently. Moderation by infrastructure-ish providers raises much more significant free expression concerns.)

(But that's a topic for a different thread! Thanks for making it to the end of this one.)