Why does @OpenAI's CLIP model matter? https://openai.com/blog/clip/

Traditionally, training a classification model (a "thing labeler") relies on collecting a lot of images of your specific thing.

This is effective, but brittle.

You're hoping that your images are perfectly representative of what your thing will look like in *any* other condition.

How brittle can image models be?

A team at MIT tested state-of-the-art classifiers on "common objects" in odd contexts. The models were 40% less effective at identifying objects like chairs and hammers in less common positions.

Brittle models are a big reason data augmentation and active learning *really* matter. You need to continuously collect data from your production conditions (even with OpenAI's advancement!).

I've written more about active learning if interested: https://blog.roboflow.com/what-is-active-learning/
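
One common way to decide which production images to send back for labeling is uncertainty sampling: pick the images the current model is least sure about. Here's a minimal sketch of that idea. The file names, probabilities, and the `least_confident` helper are all made up for illustration, and this is just one active learning strategy, not necessarily the one the linked post recommends.

```python
def least_confident(predictions, budget):
    """Return the ids of the `budget` images whose top class probability is lowest."""
    scored = [(max(probs), image_id) for image_id, probs in predictions.items()]
    scored.sort()  # lowest top-class confidence first
    return [image_id for _, image_id in scored[:budget]]

# Hypothetical production predictions: image id -> class probabilities.
predictions = {
    "frame_001.jpg": [0.98, 0.01, 0.01],
    "frame_002.jpg": [0.40, 0.35, 0.25],
    "frame_003.jpg": [0.55, 0.44, 0.01],
    "frame_004.jpg": [0.90, 0.05, 0.05],
}

# Spend a labeling budget of 2 on the images the model is least sure about.
to_label = least_confident(predictions, budget=2)
print(to_label)
```

The two frames where the top probability is closest to a coin flip get queued for labeling; the confident ones are left alone.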

So what does @OpenAI's CLIP do differently?

Researchers used 400 million (!) image and text pairs to train models to predict which caption (from 32,768 options) best matched a given image.

Training the two models took 30 days.
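
To make "predict which caption best matches" concrete, here's a rough sketch of a contrastive objective on a toy batch of 3 pairs instead of 32,768. It assumes a symmetric cross-entropy over an image-by-caption similarity matrix, which is my reading of the approach, not OpenAI's actual training code. The 2-D embeddings, the `contrastive_loss` name, and the fixed `temperature=0.07` are illustrative assumptions.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_row(logits, target):
    """Softmax cross-entropy for one row of logits, with a max-shift for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss over a batch where image i is paired with caption i.

    The temperature is an assumed fixed value for this sketch.
    """
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = similarity of image i to caption j, sharpened by the temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
              for img in images]
    # Each image has to pick its own caption out of the batch...
    image_to_text = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    # ...and each caption has to pick its own image (columns of the same matrix).
    text_to_image = sum(
        cross_entropy_row([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Toy 3-pair batch: hypothetical 2-D embeddings, not real CLIP outputs.
aligned_images = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]
shuffled_texts = [aligned_texts[1], aligned_texts[2], aligned_texts[0]]

loss_aligned = contrastive_loss(aligned_images, aligned_texts)
loss_shuffled = contrastive_loss(aligned_images, shuffled_texts)
print(f"aligned: {loss_aligned:.3f}  shuffled: {loss_shuffled:.3f}")
```

The loss is near zero when each image sits closest to its own caption and large when the captions are shuffled, so minimizing it pulls matching pairs together and pushes mismatched ones apart.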

As opposed to collecting a *specific* set of images with set tags, CLIP learns more generally what captions/words match an image's contents.

In a sense, it's like a "visual thesaurus." (h/t @rememberlenny)

CLIP is the world's best AI caption writer.

CLIP's approach is notable because it means: (1) the model is more general across object representations (helping with the brittleness issue above), and (2) a giant image dataset isn't required to get strong initial performance.
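
Point (2) is easier to see with a sketch of how you'd classify without collecting any training images: write each label as a caption, then pick the caption whose embedding sits closest to the image's embedding. The vectors below are hand-made stand-ins, not real CLIP outputs, and `zero_shot_classify` is a hypothetical helper. In practice the image and text encoders would produce these embeddings.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_emb, caption_embs, temperature=0.07):
    """Rank candidate captions by similarity to the image embedding.

    The temperature is an assumed value that only sharpens the probabilities.
    """
    captions = list(caption_embs)
    sims = [cosine(image_emb, caption_embs[c]) / temperature for c in captions]
    probs = softmax(sims)
    return sorted(zip(captions, probs), key=lambda pair: pair[1], reverse=True)

# Hypothetical embeddings: a text encoder would produce these from the caption
# strings, and an image encoder would produce image_emb from pixels.
caption_embs = {
    "a photo of a chair": [0.9, 0.1, 0.0],
    "a photo of a hammer": [0.1, 0.9, 0.1],
    "a photo of a dog": [0.0, 0.1, 0.9],
}
image_emb = [0.2, 0.8, 0.1]  # made-up vector standing in for a hammer image

ranking = zero_shot_classify(image_emb, caption_embs)
best_caption, best_prob = ranking[0]
print(best_caption, round(best_prob, 3))
```

Swapping in a new task just means swapping the caption list. No retraining and no new labeled images.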

CLIP's big advancement isn't suddenly being the best classifier on a specific task.

It's being the best classifier for *any* task.

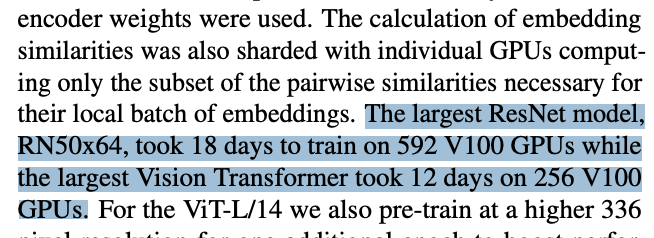

e.g. CLIP and ResNet are equal on an ImageNet benchmark, but CLIP generalizes to other datasets far more effectively.

CLIP doesn't beat a traditional network on every task, however.

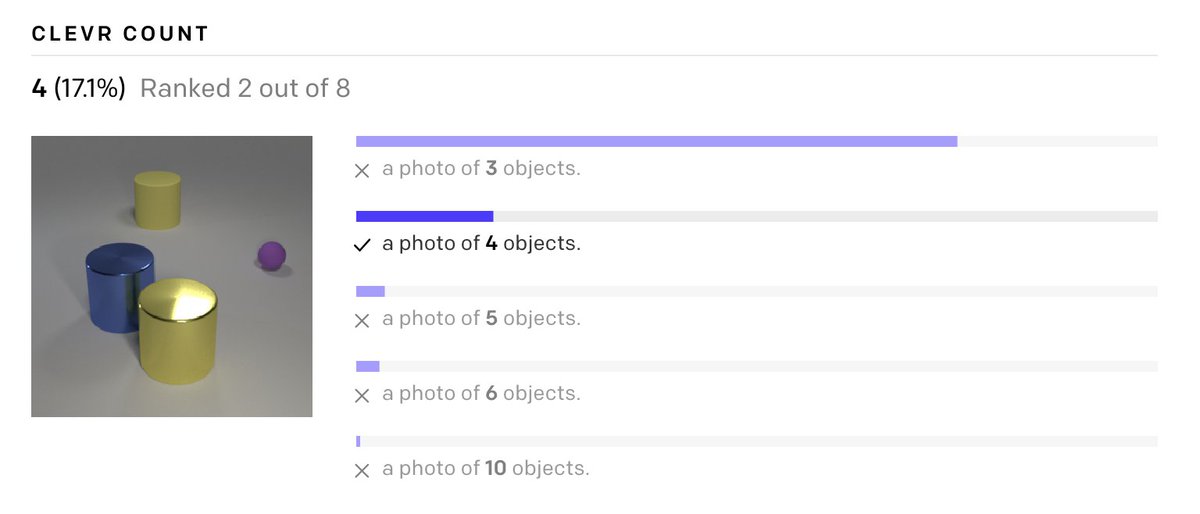

CLIP misses on things like counting objects in an image or identifying the closeness of objects in an image.

Again, think of a good caption writer.

CLIP is one giant step towards generalizability in AI. By using a training technique to learn about describing attributes of what's in an image, @OpenAI has made a huge step towards *learning not memorizing* object appearance. Props to the team! https://github.com/openai/CLIP
