Do SotA natural language understanding models care about word order?

Nope, 75% to 90% of the time, for BERT-based models, on many GLUE tasks (where they outperformed humans).


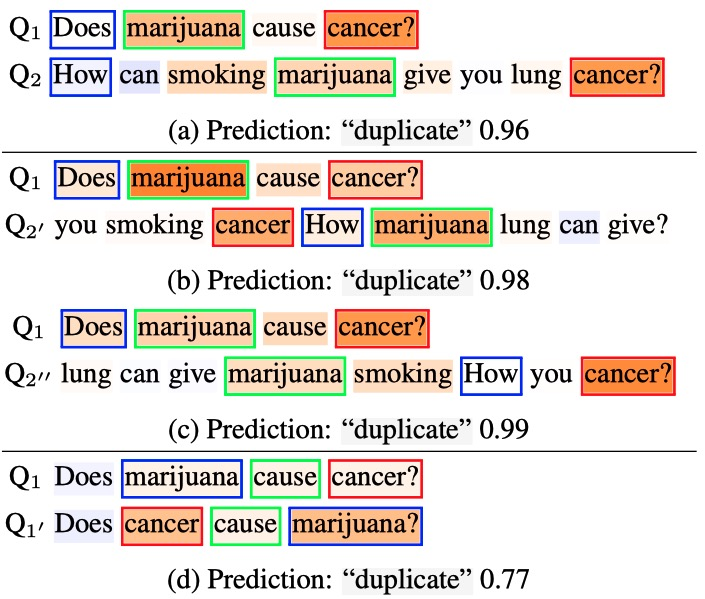

"marijuana cause cancer" == "cancer cause marijuana" Ouch...

https://arxiv.org/abs/2012.15180 1/4
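Here is a minimal sketch of the kind of word-shuffling check behind these numbers. It assumes whitespace tokenization and a generic `predict(sentence) -> label` function. `toy_predict` is a hypothetical bag-of-words stand-in, not BERT, and the paper's exact protocol may differ.

```python
import random
from typing import Callable, List


def shuffle_words(sentence: str, rng: random.Random) -> str:
    """Randomly permute the whitespace-separated words of a sentence."""
    words = sentence.split()
    rng.shuffle(words)
    return " ".join(words)


def fraction_unchanged(predict: Callable[[str], int], sentences: List[str], seed: int = 0) -> float:
    """Fraction of sentences whose predicted label is identical before and after a random word shuffle."""
    rng = random.Random(seed)
    same = sum(predict(s) == predict(shuffle_words(s, rng)) for s in sentences)
    return same / len(sentences)


# Hypothetical stand-in classifier (NOT BERT): it only checks whether any
# "positive" word is present, so it ignores word order by construction.
POSITIVE = {"good", "great", "fun"}


def toy_predict(sentence: str) -> int:
    return int(any(w in POSITIVE for w in sentence.lower().split()))


sentences = ["the movie was good", "a dull and boring plot", "great fun for everyone"]
print(fraction_unchanged(toy_predict, sentences))  # 1.0: an order-blind model never changes its answer
```

A real classifier would be passed in as `predict`. A score close to 1.0 means it behaves much like this order-blind toy on the given data.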


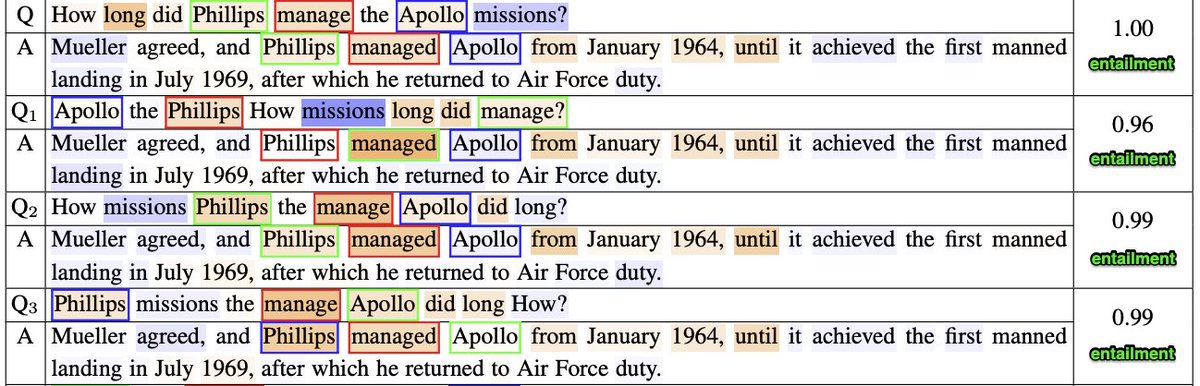

How? Interestingly, across *different* sequence-pair tasks, RoBERTa-based models tend to use *the same* set of self-attention heads to match up similar words that appear in both sequences.

This lets model predictions stay constant even when all the words in one question are shuffled. 2/4
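This toy sketch shows why matching similar words across the pair makes shuffling harmless. Each word in one sequence is scored by its best match in the other sequence, and word positions are never used. The character-bigram similarity is a hypothetical stand-in for RoBERTa's learned representations. This does not claim the real heads compute exactly this.

```python
import random


def bigrams(word: str) -> set:
    """Character bigrams with boundary markers; a crude, hypothetical stand-in for embedding similarity."""
    padded = f"#{word}#"
    return {padded[i:i + 2] for i in range(len(padded) - 1)}


def word_sim(a: str, b: str) -> float:
    """Jaccard similarity of the two words' bigram sets (1.0 for identical words)."""
    A, B = bigrams(a), bigrams(b)
    return len(A & B) / len(A | B)


def match_score(seq_a: str, seq_b: str) -> float:
    """For each word in seq_a, take its best match in seq_b, then average.

    Like a head that aligns similar words across the pair, this never looks at
    word positions, so permuting seq_a cannot change the result. Sorting before
    summing keeps even the floating-point average independent of word order.
    """
    a_words, b_words = seq_a.lower().split(), seq_b.lower().split()
    best = [max(word_sim(a, b) for b in b_words) for a in a_words]
    return sum(sorted(best)) / len(best)


q1 = "how do i learn python quickly"
q2 = "what is the fastest way to learn python"
words = q1.split()
random.Random(0).shuffle(words)
q1_shuffled = " ".join(words)
print(match_score(q1, q2) == match_score(q1_shuffled, q2))  # True
```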


For sentiment analysis (SST-2), the binary labels of ~60% of sentences can be predicted from a single, most-important word. 3/4

This is intriguing, given that pre-trained BERT's embeddings are known to be *contextual* (each word's representation depends on the words around it).
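The single-word measurement can be sketched as follows. This sketch assumes two things: leave-one-out deletion as the word-importance score, and a 0.5 threshold for the binary label. `toy_proba` and its weights are hypothetical, and the paper may use a different attribution method. The resulting number says nothing about BERT on SST-2.

```python
import math
from typing import Callable, List


def most_important_word(predict_proba: Callable[[str], float], sentence: str) -> str:
    """Return the word whose deletion changes P(positive) the most.

    Leave-one-out deletion is an assumed importance measure for this sketch.
    """
    words = sentence.split()
    base = predict_proba(sentence)
    scores = [abs(base - predict_proba(" ".join(words[:i] + words[i + 1:]))) for i in range(len(words))]
    return words[max(range(len(words)), key=scores.__getitem__)]


def single_word_agreement(predict_proba: Callable[[str], float], sentences: List[str]) -> float:
    """Fraction of sentences whose binary label (P >= 0.5) is unchanged when the model sees only the top word."""
    agree = 0
    for s in sentences:
        full_label = predict_proba(s) >= 0.5
        top_label = predict_proba(most_important_word(predict_proba, s)) >= 0.5
        agree += full_label == top_label
    return agree / len(sentences)


# Hypothetical lexicon "model": logistic function of summed word weights.
WEIGHTS = {"great": 2.0, "boring": -2.0, "slow": -0.8}


def toy_proba(sentence: str) -> float:
    z = sum(WEIGHTS.get(w, 0.0) for w in sentence.lower().split())
    return 1.0 / (1.0 + math.exp(-z))


sentences = ["a great film", "boring and long", "great slow slow slow"]
print(single_word_agreement(toy_proba, sentences))  # 2 of 3 agree; the last sentence needs more than one word
```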

Work led by the amazing PhD student @pmthangxai, with Trung Bui & Long Mai!

We're grateful for any feedback :)

4/4
