The Intuition behind activation functions in deep learning

If you have built a basic deep learning model, you have seen things like sigmoid, ReLU (Rectified Linear Unit), tanh...

These are activation functions.

Why do we need activation functions?

Let's talk about it.

1/n

2/n

Activation functions introduce non-linearity into a deep network. This matters because most real-world data is not linear.
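To see why the non-linearity matters, here is a minimal sketch in plain Python. The weights and function names are made up for illustration. It shows that two linear layers stacked without an activation collapse into a single linear layer, while putting a ReLU between them gives a function that one line cannot reproduce.

```python
def linear(x, w, b):
    """A one-input, one-output linear layer: w * x + b."""
    return w * x + b


def relu(x):
    """ReLU: keep positive values, replace negative values with 0."""
    return max(0.0, x)


def two_linear_layers(x):
    # Two stacked linear layers with no activation in between
    # (illustrative weights, not taken from any real model).
    return linear(linear(x, 2.0, 1.0), -3.0, 0.5)


def collapsed(x):
    # The same function as a single linear layer:
    # -3 * (2x + 1) + 0.5 = -6x - 2.5
    return linear(x, -6.0, -2.5)


def two_layers_with_relu(x):
    # Same weights, but with a ReLU between the two layers.
    return linear(relu(linear(x, 2.0, 1.0)), -3.0, 0.5)


inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]
same_without_activation = all(two_linear_layers(x) == collapsed(x) for x in inputs)
same_with_relu = all(two_layers_with_relu(x) == collapsed(x) for x in inputs)
print(same_without_activation, same_with_relu)
```

Without the activation, adding more layers buys nothing: the network is still a single straight line.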

Let's take exam scores as an example.

3/n

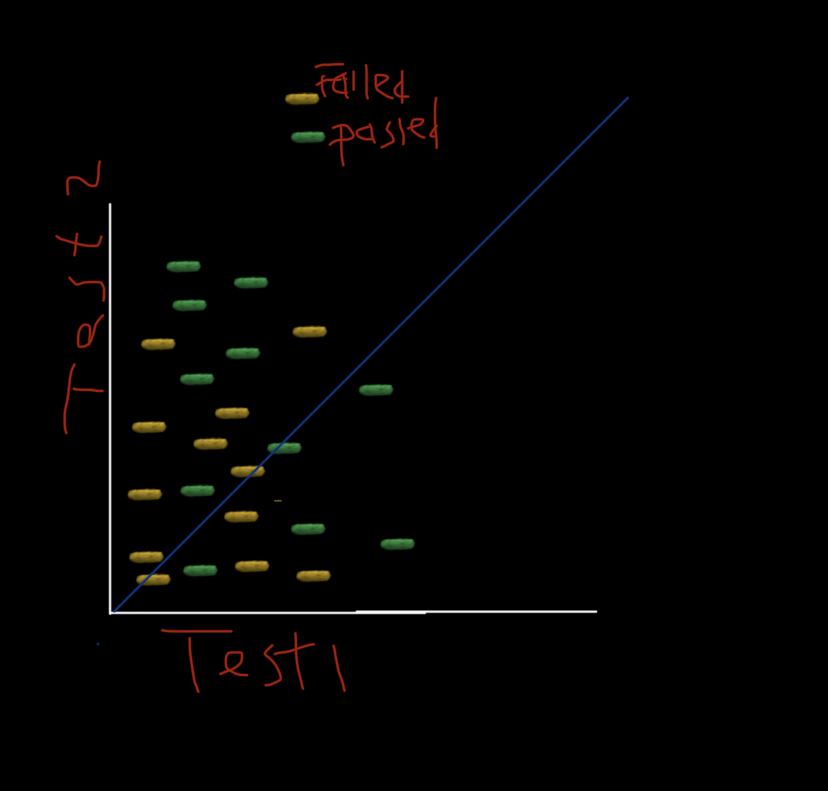

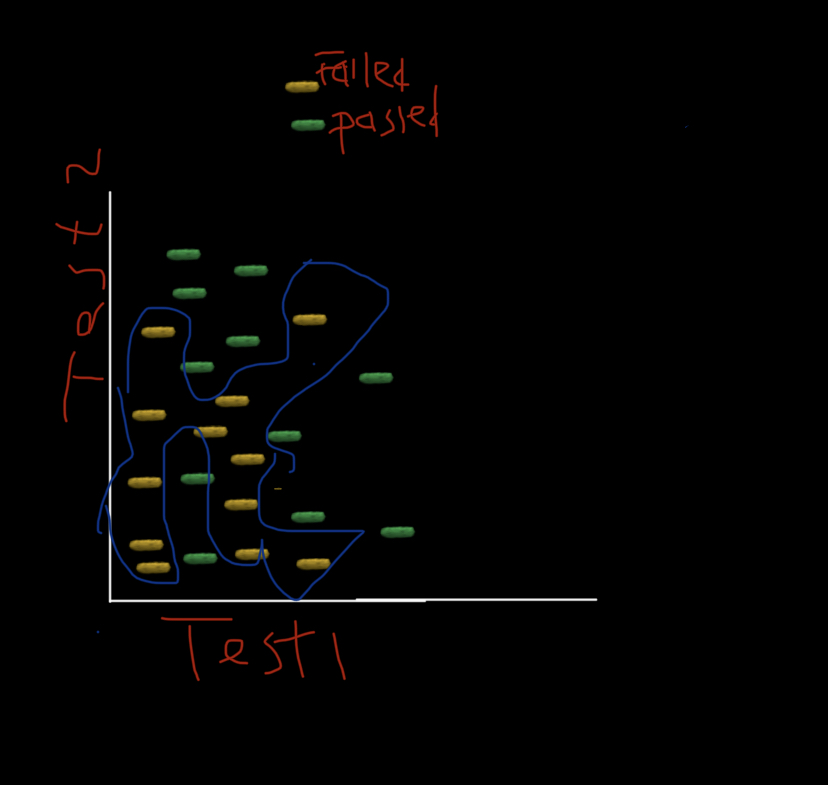

Suppose you had a dataset like the one pictured, where the axes represent the scores on Test 1 and Test 2, the yellow dots represent students who failed the exam, and the green dots represent students who passed.

If you wanted to draw a decision line using a linear function, you would get something like the line in the picture.

4/n

The blue decision line above cannot tell who passed and who failed the exam.

This is where non-linearities come into play (as you can see, the data is not linear at all). They allow the network to fit the data well.
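As a rough illustration of the same idea, here is a sketch on a different, hypothetical dataset: the four XOR points, not the exam data from the images. It searches a small grid of weights for a linear rule that separates the two classes and finds none, then shows a tiny hand-written network with ReLU that does. All names and weights are assumptions chosen for the example.

```python
from itertools import product

# Hypothetical toy data (XOR): label 1 when exactly one input is 1.
POINTS = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def relu(z):
    return max(0.0, z)


def linear_rule_fits(w1, w2, b):
    """True if the line w1*x1 + w2*x2 + b > 0 classifies every point correctly."""
    return all(
        (w1 * x1 + w2 * x2 + b > 0) == bool(label)
        for (x1, x2), label in POINTS
    )


# Try every weight combination on a coarse grid from -4.0 to 4.0.
GRID = [i * 0.5 for i in range(-8, 9)]
any_linear_fit = any(
    linear_rule_fits(w1, w2, b) for w1, w2, b in product(GRID, repeat=3)
)


def tiny_relu_net(x1, x2):
    """Two hidden ReLU units with hand-picked weights, then a linear output."""
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    return h1 - 2.0 * h2


relu_net_fits = all(
    (tiny_relu_net(x1, x2) > 0.5) == bool(label)
    for (x1, x2), label in POINTS
)

print(any_linear_fit, relu_net_fits)
```

The grid search is only a demonstration, but the result holds in general: no straight line separates the XOR points, while a single hidden layer with a non-linearity is enough.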

5/n

There are various types of activation functions, such as ReLU (Rectified Linear Unit), sigmoid, tanh, Leaky ReLU, softmax, ...

Let's talk about ReLU and sigmoid, since they are used a lot.

6/n

ReLU is a common default activation for hidden layers in deep learning. It tends to work really well for many problems.

The idea behind ReLU is simple: given a set of values, it returns each value unchanged as long as it is greater than zero, and replaces any value less than zero with 0.
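Here is a minimal sketch of that rule in plain Python. The function name and the sample values are chosen for illustration; deep learning libraries ship their own implementations.

```python
def relu(values):
    """Apply ReLU to each value: keep it if positive, otherwise return 0."""
    return [max(0.0, v) for v in values]


sample = [-2.0, -0.5, 0.0, 1.5, 3.0]
activated = relu(sample)
print(activated)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```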

7/n

Sigmoid is used to produce probabilities: its output is always between 0 and 1 for any input number. It is typically used at the last layer of a deep network, for example in binary classification, to give the output as a probability. In brief, its output is a single number between 0 and 1.
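For reference, sigmoid is defined as 1 / (1 + e^(-x)). Below is a small sketch in plain Python. The branching on the sign of x is an implementation choice made here so that `math.exp` never overflows on large negative inputs; it is not something the thread prescribes.

```python
import math


def sigmoid(x):
    """Squash any real number into the range 0 to 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x)
    # so that math.exp is never called with a large positive argument.
    e = math.exp(x)
    return e / (1.0 + e)


for x in [-5.0, -1.0, 0.0, 1.0, 5.0]:
    print(x, sigmoid(x))
```

An input of 0 maps to exactly 0.5, large positive inputs approach 1, and large negative inputs approach 0.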

8/8

Knowing which activation to use is one of the necessary skills for developing effective deep learning applications. It also helps when debugging or improving model performance.

This is the end of the thread! Thank you for reading.

@svpino @AlejandroPiad you have extensive experience developing machine learning applications.

Any take on how knowledge of activation functions plays into building effective ML systems?
