Follow-up on ONS ad hoc teachers study (THREAD)

Many people have continued to ask me for updates on my earlier 2-thread series on the 6 November ONS “ad hoc” teachers risk study.

Sorry for the delay. I was trying to be patient with the authors. 1/ https://twitter.com/sarahdrasmussen/status/1330744740711698433

Back story: in Nov, due to a SAGE (schools group) request, a few ONS authors looked at random sampling covid test data (their associated questionnaires ask for occupation) to compare risks to teachers, health/social-care-related key workers, + an “Other professions” category. 2/

Unfortunately, the sample size was so small that it was nearly incapable FROM THE OUTSET of generating statistically significant evidence.

In defiance of Office for Statistics Regulation (OSR) rules, the authors reported this as “no evidence of difference” WITH NO EXPLANATION. 3/ https://twitter.com/sarahdrasmussen/status/1330744789218824192

Don’t let anyone fool you: if researchers do a study and report “no evidence of difference” with no disclaimer at all,

that’s usually codespeak for “we found no difference, but since ‘no difference’ is a hard thing to demonstrate statistically, we didn’t say that out loud.” 4/

At the very least, such a statement engenders the strong presumption that the study was CAPABLE OF GENERATING EVIDENCE HAD IT EXISTED, and thus the failure to find such evidence is interpreted as a form of evidence—sometimes even strong evidence—in its own right. 5/

...which explains why most researchers citing the ONS ad hoc teacher study interpreted it as they did.

That’s why SAGE wrote that “ONS data... show no difference,”

why Scottish Government wrote “[ONS] data found there is no difference,”

and those were only the first. 6/ https://twitter.com/sarahdrasmussen/status/1330294464846368778

So when the lead author insists they only said “no evidence,”

that’s a little analogous to the abuser who says, “if you try to leave me, you never know if something bad might happen to the dog”

...but then when called on it later, says, “Hey, I never made a direct threat!” 7/

Of course, people do things by accident all the time.

What matters is how you correct a mistake when called on it.

The ONS lead author chose to double down with a statement that reinforced the initial damaging, winking message. 8/

Thing is, they actually DID find increased teacher risk outcomes, just with LOW CONFIDENCE due to small sample size.

That’s like spotting a dog in your garden and saying, “WE DID A STUDY and there’s NO EVIDENCE of higher dog rate on my street” (having only checked 1 garden) 9/

—then other scientists saying A STUDY found no increased dog rate on that street.

Then at the OSR’s prompting, editing to A STUDY found NO EVIDENCE of higher dog rate.

As the ONS lead author and citing scientists know, such an edit doesn’t much alter the perceived message. 10/

In fact, the “no evidence” statement was made BECAUSE of the apparent increased risk. The author told me,

“The REASON I wrote ‘no evidence’ in the caption is I was afraid people would see the teacher of unknown type result and think that teachers were at higher risk.” 11/

Let’s back up a little.

There’s always uncertainty in how well data from a sample reflect results for a whole subpopulation.

Usually small sample size means less certainty. Uncertainty is often measured by a 95% confidence interval (CI) put in parentheses, eg 3 (2.5-3.5). 12/
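
To make the sample-size point concrete, here is a minimal Python sketch of a 95% Wilson score interval for a proportion. Wilson is just one common CI method (ONS may use a different one), and the counts are hypothetical: the same 1% positivity observed once in 1,000 people and once in 100,000.

```python
import math


def wilson_ci(cases: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for the proportion cases / n."""
    if n <= 0 or not 0 <= cases <= n:
        raise ValueError("need 0 <= cases <= n and n > 0")
    p = cases / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return centre - half, centre + half


# Hypothetical: the same 1% positivity seen in a small and a large sample.
intervals = {}
for cases, n in [(10, 1_000), (1_000, 100_000)]:
    lo, hi = wilson_ci(cases, n)
    intervals[n] = (lo, hi)
    print(f"{cases}/{n}: {100 * cases / n:.2f}% ({100 * lo:.2f}-{100 * hi:.2f})")
```

With 100 times more people the interval comes out roughly 10 times narrower, since CI width shrinks with the square root of the sample size.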

When community infection rates are lower, decent certainty from random sampling testing takes LOTS of people, to pick up enough cases.

ONS covid surveillance is longitudinal: they keep testing the same 200,000 or so people, but a few drop out and a few others are added in. 13/

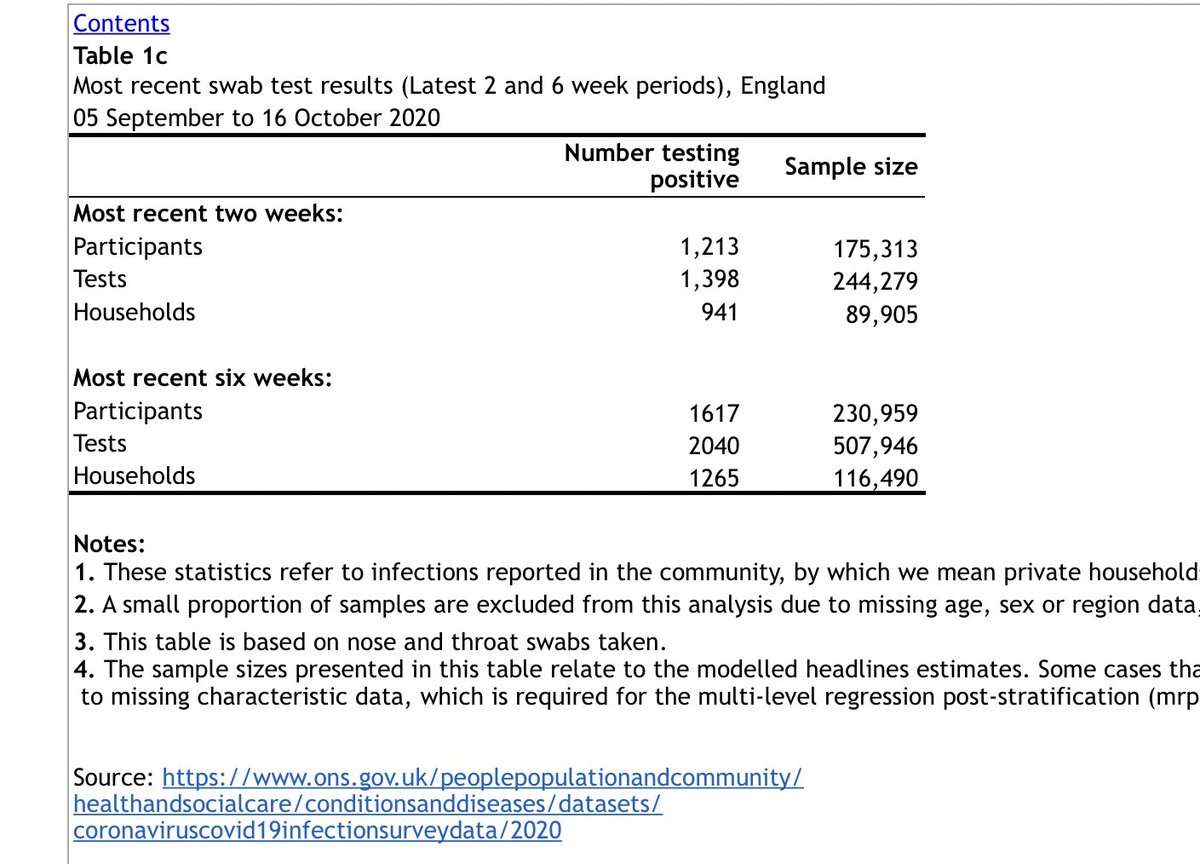

Eg, the ONS found

1617 cases testing 230,959 ppl 5 Sep-16 Oct (6wks)

including 1213 cases testing 175,313 ppl 3-16 Oct (2wks)

On 6 Nov, they reported

3458 cases from testing 260,548 ppl 20 Sep-31 Oct (6 wks),

including 1900 cases from testing 160,414 ppl 18-31 Oct (2 wks). 14/
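
For a rough sense of scale, the raw share of people testing positive in each window can be computed straight from the counts above. This sketch ignores ONS's demographic weighting, so it gives only a crude unweighted figure, not ONS's published estimate.

```python
# (positive cases, people tested) as quoted in the thread; unweighted.
windows = {
    "5 Sep-16 Oct (6 wks)": (1617, 230_959),
    "3-16 Oct (2 wks)": (1213, 175_313),
    "20 Sep-31 Oct (6 wks)": (3458, 260_548),
    "18-31 Oct (2 wks)": (1900, 160_414),
}

positivity = {}
for label, (cases, tested) in windows.items():
    # Crude share of tested people who were positive, in percent.
    positivity[label] = 100 * cases / tested
    print(f"{label}: {positivity[label]:.2f}% of {tested:,} people")
```

Even with 160,000-260,000 people tested, each window yields only about 1,200-3,500 cases, which is why occupation subgroups of a few thousand people end up with only a handful of cases.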

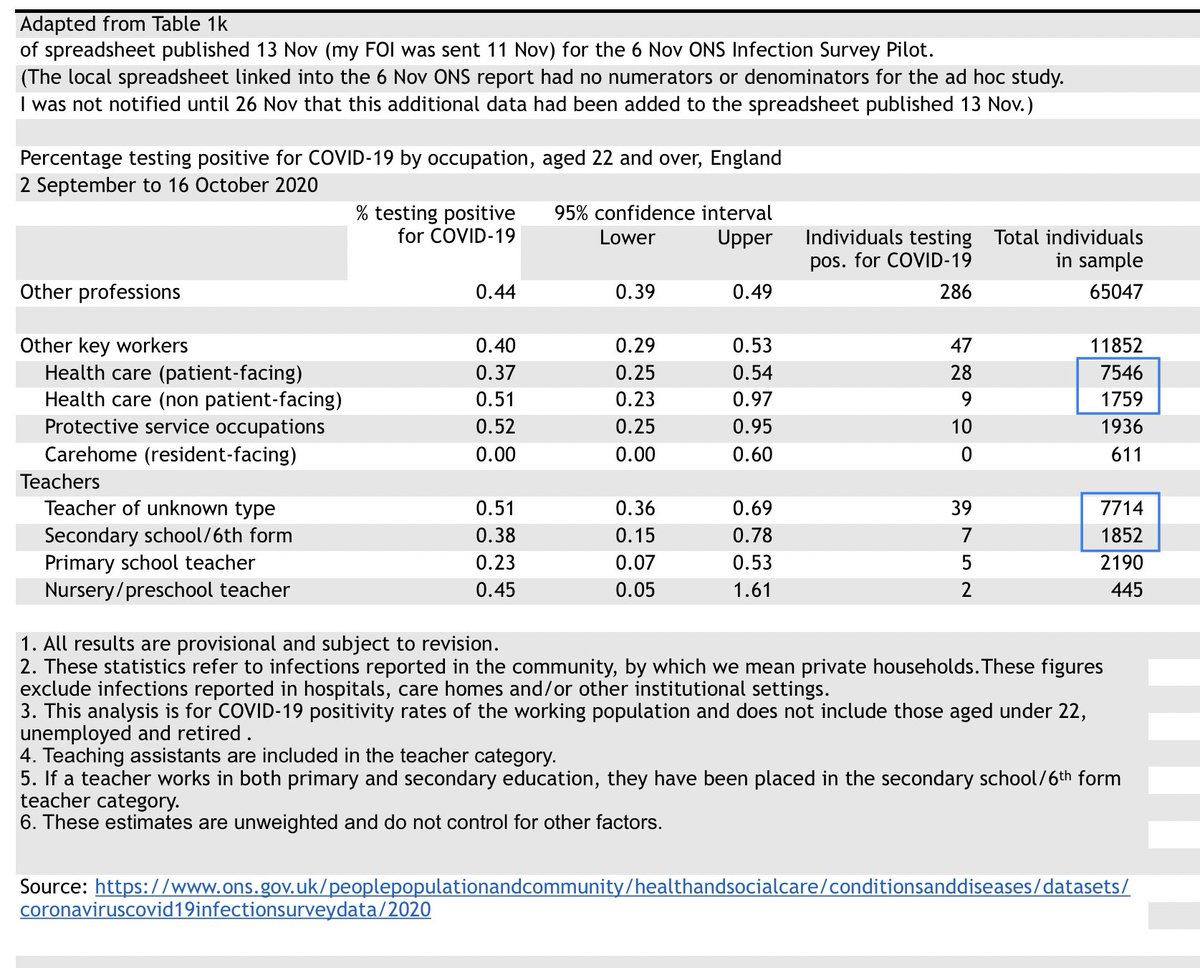

Turns out the ONS ad hoc study nursery teacher result was based on 2 cases.

As in 1+1.

But so few nursery teachers were tested that those 2 cases still amounted to higher prevalence than for “Other professions.”

Primary teacher result was based on 5 cases, secondary on 7. 15/

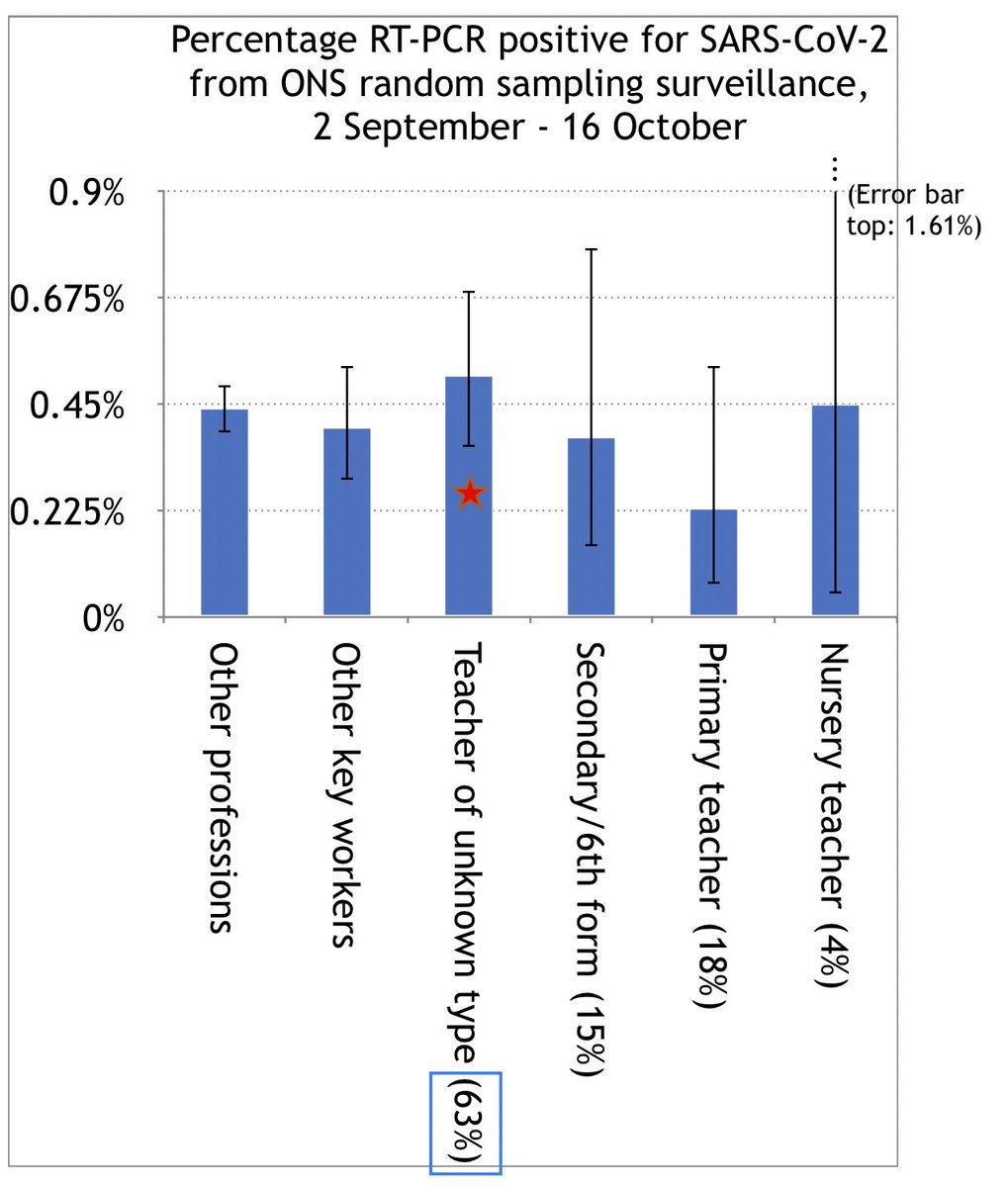

There was one teacher category left over—Teacher of Unknown Type (ToUT)—with ~8000 of the study’s ~12000 teachers, recording 39 cases.

Anyone who wrote “teacher” or “teaching assistant” without specifying “primary” or “secondary” went in this group. 16/
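
Applying the same kind of interval to two of the teacher groups shows how wide the uncertainty is. This sketch assumes the ToUT and secondary denominators quoted later in the thread (7714 and 1852) and the case counts quoted above (39 and 7). It uses unweighted counts and the Wilson method, so it is an illustration, not a reproduction of ONS's own CIs.

```python
import math


def wilson_ci(cases: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for the proportion cases / n."""
    p = cases / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return centre - half, centre + half


# Unweighted (cases, people) as quoted in this thread.
groups = {"ToUT": (39, 7714), "Secondary": (7, 1852)}

results = {}
for name, (cases, n) in groups.items():
    lo, hi = wilson_ci(cases, n)
    results[name] = (cases / n, lo, hi)
    print(f"{name}: {100 * cases / n:.2f}% ({100 * lo:.2f}-{100 * hi:.2f})")
```

Even the larger ToUT group gets an interval of roughly 0.4%-0.7%, and the secondary interval (roughly 0.2%-0.8%) spans more than a fourfold range.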

Unfortunately, the ToUT sample was too small as well.

Remember the lead author’s comment?:

“The reason I wrote ‘no evidence’ in the caption is I was afraid people would see the teacher of unknown type result and think that teachers were at higher risk.” 17/

Because ToUT kind of look higher, don’t they?

According to the listed positivities, ToUT are 16% higher than “Other professions,” 28% above “Other key workers,” and 38% above Patient-facing hcws.

(Just as ToUT are 63% of teachers, Pf hcws are 64% of Other key workers.) 18/

And those differences are between AVERAGES over time.

Since teachers likely started out BELOW Other professions for early September, in time-dependent data their difference to Other professions by 16 Oct would likely have looked LARGER than 16%.

What about other teachers? 19/

For a 2 Sep-16 Oct study, teachers of 2-11s should be treated separately.

Ie w/ a 16 Oct cut-off, most cases came from pre-11 Oct transmission. The 2-11yo prevalence for 2 Sep-10 Oct was HALF that for 11-16s, but 2-11s had a large disproportionate surge post 10 Oct (below). 20/

So tho the “named” sec, primary, & nursery groups have too small a sample size to consider separately, we should still split off “primary”+“nursery” as a group. Also, ToUT’s value was closer to secondary.

So 2 groups:

-Most teachers = ToUT+sec (78%)

-2-11s = primary+nurs (22%) 21/
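
Pooling groups means adding cases and people, not averaging the group rates. A minimal sketch for the “Most teachers” group (ToUT + secondary), assuming the unweighted counts quoted in this thread (39/7714 ToUT, 7/1852 secondary):

```python
# Unweighted (cases, people) as quoted in this thread.
tout_cases, tout_n = 39, 7714
sec_cases, sec_n = 7, 1852

# Pool by summing counts; a simple mean of the two rates would
# over-weight the much smaller secondary group.
pooled_cases = tout_cases + sec_cases
pooled_n = tout_n + sec_n
pooled_rate = pooled_cases / pooled_n

print(f"Most teachers: {pooled_cases}/{pooled_n} = {100 * pooled_rate:.2f}%")
# Most teachers: 46/9566 = 0.48%
```

A pooled rate always lands between the two group rates, pulled towards the larger group.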

Both “Most teachers” (78%) and ToUT (63%) were above Other professions, Key workers, & Pf hcws.

And if you compare tops of error bars (CIs), all 3 of our teacher groups were higher by that metric.

But the CIs do OVERLAP, so LOW CONFIDENCE.

Thing is, that’s hard to avoid. 22/

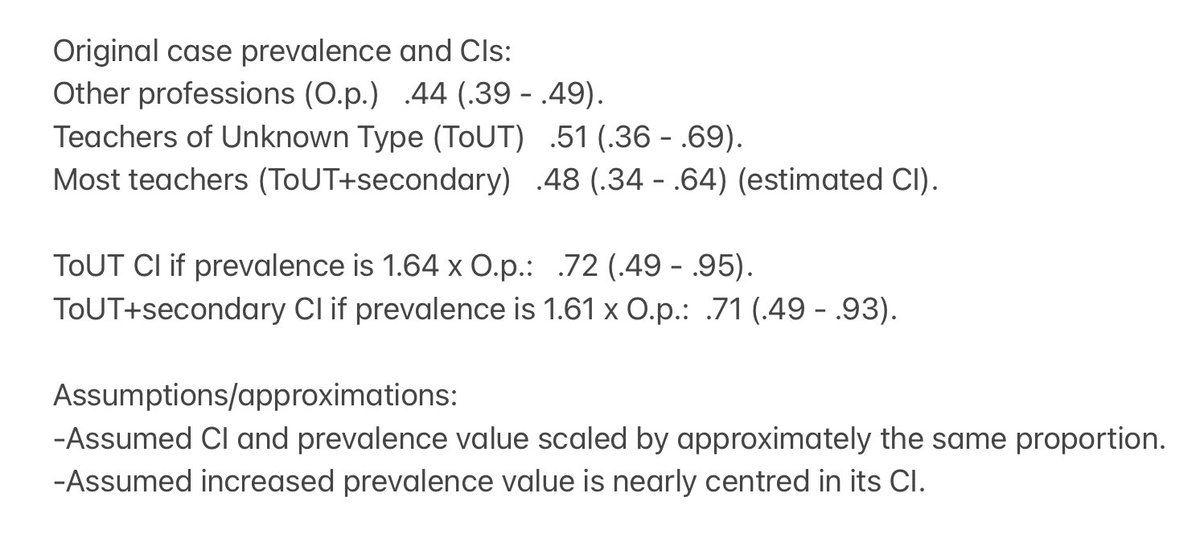

Under below-stated assumptions, ToUT would need 64% greater prevalence than Other professions (that’s as AVERAGES; so the 16 Oct difference likely much > 64%) for CIs not to overlap.

The ‘Most teachers’ avg (78% teachers) would need to be > 61% above O.p. for “evidence.” 23/
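
The 64% and 61% thresholds depend on assumptions the thread refers to but that aren't reproduced in this text. As a hedged illustration of how such a threshold can be found, this sketch bisects for the smallest prevalence ratio at which two Wilson intervals stop overlapping. The baseline prevalence and sample size for Other professions are hypothetical placeholders, so the output will not match the thread's figures.

```python
import math


def wilson_ci(p: float, n: float, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for an (expected) proportion p in a sample of n."""
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return centre - half, centre + half


def min_ratio_for_disjoint_cis(p0, n0, n1, hi=10.0, tol=1e-6):
    """Smallest ratio r such that the CI for prevalence r * p0 in a sample
    of n1 lies entirely above the CI for prevalence p0 in a sample of n0."""
    _, upper0 = wilson_ci(p0, n0)

    def disjoint(r):
        lower1, _ = wilson_ci(r * p0, n1)
        return lower1 > upper0

    if hi * p0 >= 1 or not disjoint(hi):
        raise ValueError("search bound too small")
    lo = 1.0  # at r = 1 the two intervals always overlap
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if disjoint(mid):
            hi = mid
        else:
            lo = mid
    return hi


# HYPOTHETICAL baseline: 0.44% prevalence among 40,000 "Other professions".
# Only the ToUT sample size (7714) comes from the thread.
ratio = min_ratio_for_disjoint_cis(p0=0.0044, n0=40_000, n1=7714)
print(f"ToUT would need to be {100 * (ratio - 1):.0f}% above the baseline")
```

Requiring non-overlapping 95% intervals is a stricter bar than a formal two-sample test at the 5% level, so a threshold found this way runs somewhat higher than a test-based one would.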

I can’t over-stress the importance of time dependence here. (And of including data past 16 Oct.)

To illustrate, let’s consider a healthcare-worker study the lead ad hoc teacher study author specifically pointed out to me: something that found “evidence” of a difference. 24/

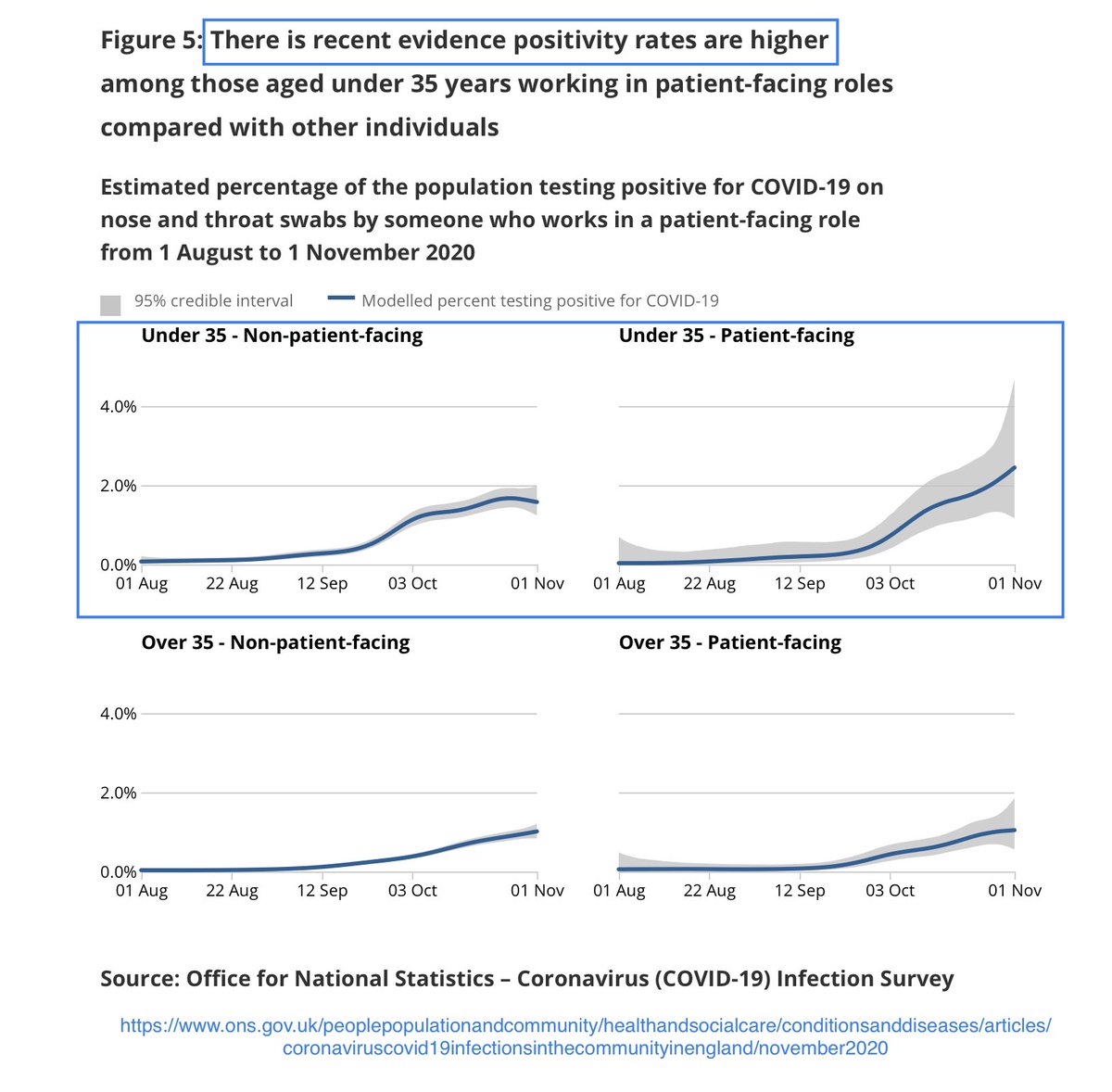

This ONS study considered patient-facing and non-Pf healthcare workers over time.

UNLIKE the teacher study, they

(a) actually used demographic weights (like nearly all ONS covid studies),

(b) modelled trends over time, &

(c) included data up to 1 Nov.

https://www.ons.gov.uk/peoplepopulationandcommunity/healthandsocialcare/conditionsanddiseases/articles/coronaviruscovid19infectionsinthecommunityinengland/november2020 25/

The new study declared “In the most recent weeks, THERE IS EVIDENCE that rates are higher among those working in patient-facing roles under 35 yrs compared with those not working in p.f. roles under 35 years.”

Great, now we can see what CIs do when there’s actual evidence. 26/

Er, so, for <35yos, the non-Pf hcw CIs (marked by dotted curves) sit entirely inside the Pf hcw CIs for the entire time, except when they peek out *above.*

This is literally the one example the lead ad hoc ONS author brought up to me, when the OSR got them to speak to me. 27/

Ordinarily, for statistically significant evidence, we like CIs that are disjoint or have as little overlap as possible. These CI envelopes have 100% overlap.

But it still *looks* like patient-facing are getting more infected, largely due to TIME-DEPENDENT TRENDS. 28/

BUT, what if instead, we treated this like the teacher ad hoc study, and computed lump sums, starting from the beginning of the school year?

Whether we measure from 2 Sep to 16 Oct or from 2 Sep to 1 Nov, we would find <35s patient-facing is LOWER than non-patient-facing. 29/

Even if we’re as generous as possible, and only measure starting from the day (11 Oct) that patient-facing swapped from lower to higher,

the patient-facing rate is only 18% higher than the non-patient-facing one.

(Remember how ToUT were 16% higher than Other professions?) 30/

To sum up: despite Patient-facing prevalence looking 55% higher than non-patient-facing on 1 Nov,

-1 Sep-16 Oct avgs: Pf 21% LOWER than non-Pf,

-1 Sep-1 Nov avgs: Pf 2% LOWER than non-Pf,

-11 Oct-1 Nov avgs: Pf only 18% higher than non-Pf,

-CI envelopes entirely overlap. 31/
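
The averaging effect in the list above can be reproduced with toy curves. In this sketch both curves are hypothetical exponentials, with day 0 standing for 1 Sep: the patient-facing curve is set to cross the non-patient-facing one on day 40 (11 Oct) and finish 55% higher on day 61 (1 Nov). The growth rates are made up; only the qualitative pattern matters.

```python
import math

# HYPOTHETICAL toy curves (not ONS data). Day 0 ~ 1 Sep, day 40 ~ 11 Oct,
# day 61 ~ 1 Nov.
LAST_DAY = 61
CROSS_DAY = 40
END_RATIO = 1.55  # Pf set to finish 55% above non-Pf

g_non_pf = 0.03  # made-up daily growth rate for non-patient-facing
# Pick the Pf growth rate so Pf / non-Pf hits END_RATIO on the last day.
g_pf = g_non_pf + math.log(END_RATIO) / (LAST_DAY - CROSS_DAY)


def non_pf(t: int) -> float:
    return math.exp(g_non_pf * t)


def pf(t: int) -> float:
    # Equal to non_pf at CROSS_DAY, lower before it, higher after it.
    return math.exp(g_non_pf * CROSS_DAY + g_pf * (t - CROSS_DAY))


def window_ratio(start: int, end: int) -> float:
    """Ratio of Pf to non-Pf average prevalence over days start..end."""
    days = range(start, end + 1)
    return sum(pf(t) for t in days) / sum(non_pf(t) for t in days)


print(f"endpoint ratio: {pf(LAST_DAY) / non_pf(LAST_DAY):.2f}")
for start, end in [(0, CROSS_DAY), (0, LAST_DAY), (CROSS_DAY, LAST_DAY)]:
    print(f"avg ratio, days {start}-{end}: {window_ratio(start, end):.2f}")
```

With these made-up rates, the average over days 0-40 comes out below 1, and even the average over days 40-61 lands well short of the 1.55 endpoint ratio: the same qualitative pattern as the lump-sum figures above.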

A comparable effect would mean that the 16% difference for ToUT v Other professions over 2 Sep-16 Oct corresponds to ToUT being (16/18)x55% = 49% higher than O.p. on 16 Oct.

THAT’S AN ANALOGY, not an actual estimate.

I can’t make an estimate because THEY WON’T GIVE ME THE DATA. 32/
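
The analogy above is a simple proportional rescaling: take the healthcare-worker ratio of endpoint gap to averaged gap and apply it to the ToUT average gap. A sketch of that arithmetic, using only figures quoted in the thread; as stressed above, it is an analogy, not an estimate.

```python
# Figures quoted in the thread (percent differences).
tout_avg_gap = 16  # ToUT vs Other professions, 2 Sep-16 Oct average
pf_avg_gap = 18    # Pf vs non-Pf hcws, 11 Oct-1 Nov average
pf_end_gap = 55    # Pf vs non-Pf hcws on 1 Nov

# Analogy only: assume ToUT's endpoint gap relates to its average gap
# the same way the Pf endpoint gap relates to the Pf average gap.
tout_end_gap_analogy = tout_avg_gap / pf_avg_gap * pf_end_gap
print(f"{tout_end_gap_analogy:.0f}%")  # 49%
```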

I first just asked for the data up through the end of Oct, or however far they’d coded it. (I’ve learned their profession-coding lags roughly 2 wks behind their other work.)

I asked for the easiest possible version: raw unweighted numbers like before, but 1-2wks at a time. 33/

I asked by FOI. I asked by email to the ONS ISP authors. I asked in person when the OSR made the ad hoc authors meet with me over Teams.

In front of an OSR rep, a junior author said they’d coded the data and suggested a different email address where I could request it. 34/

I asked at the new address, and they immediately forwarded it to the ONS ISP authors’ address with me cc’ed. I forwarded a copy to the ad hoc authors as well. I waited, and waited some more.

They told me no.

35/

They said the numbers were too small. (I’d already given the option of using fractions, which has no privacy constraint on smallness, so that’s no excuse.)

I sent a new FOI and a new author email.

Later still, I asked for weighted modelled curves like the hcw study used. 36/

They can’t claim the teachers study is too small for weighted modelled time-dependent curves, because they did it for healthcare workers. ONS covid data have FEWER hcws than teachers:

2 Sep-16 Oct ad hoc study had

ToUT=7714, pf hcws=7546

sec teachers=1852, npf hcws=1759. 37/

They also tried to tell me follow-up data were unnecessary because of the new ONS/LSHTM/PHE schools study.

The latter study’s nice, but it looks at a strongly ANTI-TARGETED subset—asymptomatic, nonisolating teachers at school—excluding those most likely to have covid. 38/

In fact, the govt-influenced press office for PHE have already spun outcomes from this ONS/LSHTM/PHE study.

They actually tried to compare this asymptomatic nonisolating teacher group against TOTAL community prevalence—despite warnings in the ONS report against doing this. 39/ https://twitter.com/sarahdrasmussen/status/1339782289941803008

Yes, it would take the ad hoc authors time to plug the data into their software to spit out weighted modelled curves for Sep-Nov (& I would still also like raw numbers and weighted fortnightly estimates, please). 40/

But they are the ones who created this monster.

And they are the ones who spurred the monster on when given a second chance to kill it. 41/

And the monster is growing.

A 10 Dec UNICEF review wrote a whole section on teacher risk featuring this ad hoc result. In fact, it counted it with multiplicity, not seeming to understand that its different sources cited the same study https://www.unicef.org/media/89046/file/In-person-schooling-and-covid-19-transmission-review-of-evidence-2020.pdf 42/

A PH Scotland study used the ONS ad hoc study to try to disqualify its own findings.

For 3 Sep-26 Nov, it found confirmed case hazard ratios (vs controls) of 1.47 (1.37-1.57) for all teachers, 1.71 (1.51-1.93) primary, 1.42 (1.28-1.59) secondary, 1.24 (1.06-1.44) nursery. 43/
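
Converting the PHS hazard ratios to “% higher” is just (HR - 1) x 100, applied to the point estimate and to both CI bounds. A short sketch using the figures quoted above:

```python
# PHS hazard ratios (point, lower, upper) for confirmed cases, 3 Sep-26 Nov,
# as quoted in the thread.
hazard_ratios = {
    "all teachers": (1.47, 1.37, 1.57),
    "primary": (1.71, 1.51, 1.93),
    "secondary": (1.42, 1.28, 1.59),
    "nursery": (1.24, 1.06, 1.44),
}


def pct_higher(hr: float) -> int:
    """Convert a hazard ratio to a whole-number '% higher' figure."""
    return round((hr - 1) * 100)


for group, (hr, lo, hi) in hazard_ratios.items():
    print(f"{group}: {pct_higher(hr)}% higher ({pct_higher(lo)}-{pct_higher(hi)}%)")
```

Every lower bound sits above 1 (the smallest, nursery's 1.06, is still 6% higher), so none of these intervals includes “no difference.”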

So 47% higher, 71%, etc.

But although their result was based on >1000 confirmed teacher cases & ran from 3 Sep-26 NOV, the PHS authors hinted that since the ONS study used random sampling, its “no evidence” result should partly overrule their own high-certainty result.

https://publichealthscotland.scot/media/2927/report-of-record-linkage-english-december2020.pdf 44/

But that PHS report is worth a thread of its own.

Eg, despite stating plenty of teacher hazard ratios and CIs below 1 for severe illness, the written report omitted all school reopening teacher case risk HRs & CIs—relegating these to an appendix not attached to the report. 45/

In the meantime, can the ONS ad hoc authors please, please, please make the time dependent data and weighted modelled curves available for 2 Sep-28 Nov?

Just think: maybe it will provide ACTUAL evidence of no increased teacher risk, and then the DfE can throw a party. 46/

But if instead it provides evidence consistent with increased teacher risk findings from Sweden, the PHS report appendix, and hints from the new ONS/LSHTM/PHE study,

then think what damage it’s doing to have a baseless no evidence “result” continue to be amplified instead. 47/

Getting the answer right matters.

It impacts risk assessments for clinically extremely vulnerable teachers made to teach in classrooms.

It impacts local predictions for whether schools will be able to maintain adequate staffing to meet health & safety requirements. 48/

It impacts modelled predictions for transmission in UK schools. (Getting this wrong in one direction costs in-person school time, in the other direction costs community lives. Neither is good.)

It impacts whether the DfE should continue advising against masks in classrooms. 49/

It impacts parent and teacher well-being, knowing whether they can trust government-endorsed scientists and researchers to conduct honest evaluation and communication of what’s going on in schools.

Please, can’t someone at @ONS step up here?

This is worth getting right.

50/
