Have you ever wondered why neurons are like snowflakes? No two alike, even if they're the same type. In our latest preprint, we think we have (at least part of) the answer: it promotes robust learning.

Tweeprint follows (1/16)

https://www.biorxiv.org/content/10.1101/2020.12.18.423468v1

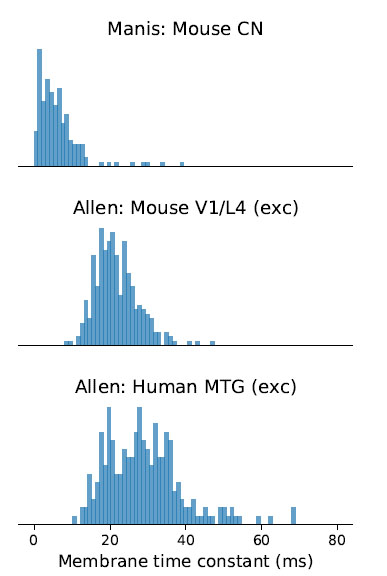

One of the striking things about the brain is how much diversity there is at so many levels, e.g. the distribution of membrane time constants. Check these out from @AllenInstitute and @AuditoryNeuro: a wide range of values for single cell types, a bit like a log-normal distribution. (2/16)
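To make "a bit like a log-normal distribution" concrete, here is a minimal sketch that draws heterogeneous membrane time constants from a log-normal. The function name `sample_time_constants` and the values `median_ms=20.0` and `sigma=0.5` are illustrative assumptions, not numbers fitted to the datasets mentioned above.

```python
import math
import random

def sample_time_constants(n, median_ms=20.0, sigma=0.5, seed=0):
    """Draw n membrane time constants (in ms) from a log-normal distribution.

    median_ms and sigma are illustrative assumptions, not fitted values.
    """
    rng = random.Random(seed)
    mu = math.log(median_ms)  # the median of a log-normal is exp(mu)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]

taus = sample_time_constants(1000)
# Even within one "cell type", the slowest and fastest neurons differ a lot.
print(f"min {min(taus):.1f} ms, max {max(taus):.1f} ms")
```

A homogeneous network would correspond to replacing the list with `n` copies of a single value.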

But, most models of networks of neurons that can carry out complex information processing tasks tend to use the same parameters for all neurons of the same type, with typically only connectivity being different for each neuron. (3/16)

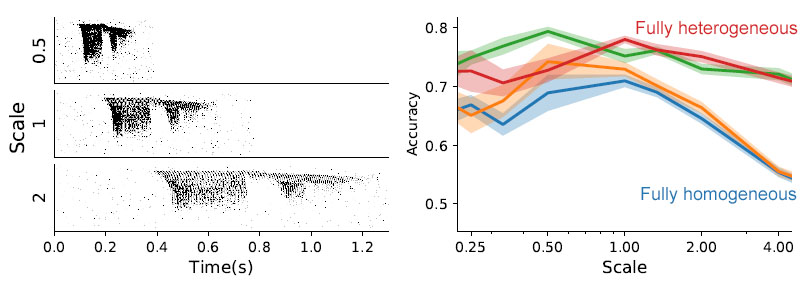

We guessed that networks of neurons with a wide range of time constants would be better at tasks where information is present at multiple time scales, such as auditory tasks like recognising speech. (4/16)

Since we were interested in temporal dynamics and comparing to biology, we used spiking neural networks trained with the surrogate gradient descent method from @hisspikeness and @virtualmind, adapted to learn time constants as well as weights. https://github.com/fzenke/spytorch (5/16)
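As a rough illustration of the two ingredients here, the sketch below simulates a leaky integrate-and-fire neuron whose membrane time constant is a per-neuron parameter, alongside a fast-sigmoid-style surrogate derivative of the kind used in surrogate gradient training. It is plain Python rather than our training code: the names `simulate_lif` and `surrogate_grad`, the `scale=100.0` value, and the reset-to-zero rule are assumptions for illustration, and no actual gradient descent is performed.

```python
import math

def surrogate_grad(v_minus_threshold, scale=100.0):
    """Smooth stand-in for the derivative of the spike nonlinearity.

    The true derivative is zero almost everywhere; this fast-sigmoid-style
    function peaks at threshold and decays away from it. scale is assumed.
    """
    return 1.0 / (scale * abs(v_minus_threshold) + 1.0) ** 2

def simulate_lif(input_current, tau_ms, dt_ms=1.0, threshold=1.0):
    """Simulate one LIF neuron with its own membrane time constant tau_ms."""
    alpha = math.exp(-dt_ms / tau_ms)  # per-step decay, set by tau_ms
    v, spikes = 0.0, []
    for i_t in input_current:
        v = alpha * v + (1.0 - alpha) * i_t  # leaky integration
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # reset after a spike (assumed reset rule)
        else:
            spikes.append(0)
    return spikes

current = [1.5] * 200  # constant suprathreshold drive, 200 ms
fast = simulate_lif(current, tau_ms=5.0)
slow = simulate_lif(current, tau_ms=50.0)
print(sum(fast), sum(slow))  # same input, different spike counts
```

Because `alpha` depends smoothly on `tau_ms`, a framework with automatic differentiation can treat the time constant as a trainable parameter in the same way as a weight.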

We found no improvement for N-MNIST, which has little to no useful temporal information in the stimuli; some improvement for DVS gestures, which does have temporal info but can be solved well without using it; and a huge improvement for recognising spoken digits. (6/16)

The distributions of time constants learned are consistent for a given task, for each run, and regardless of whether you initialise time constants randomly or at a fixed value. And, they look like the distributions you find in the brain. (7/16)

And it's more robust. If you tune hyperparameters for sounds at a single speed, and then change the playback speed of stimuli, the heterogeneous networks can still learn the task but homogeneous ones start to fall down. (8/16)
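One simple way to picture a change in playback speed for spike-based stimuli is to rescale the spike times. The helper below is a hypothetical sketch under that assumption (the name `change_playback_speed` and the uniform rescaling are illustrative, not necessarily the exact procedure in the paper).

```python
def change_playback_speed(spike_times_ms, speed):
    """Rescale spike times: speed > 1 compresses the stimulus, speed < 1 stretches it."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return [t / speed for t in spike_times_ms]

original = [0.0, 10.0, 20.0, 40.0]  # made-up spike times in ms
faster = change_playback_speed(original, 2.0)   # twice as fast
slower = change_playback_speed(original, 0.5)   # half speed
```

A network whose time constants were all tuned to the original speed sees every temporal feature shifted by the same factor, which is where a spread of time constants can help.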

We also tested another training method, spiking FORCE of Nicola and @ClopathLab, and found the same robustness to hyperparameter mistuning. This fig shows the region where learning converges to a good solution in blue; the axes are hyperparameters. (9/16)

So this looks to be pretty general: heterogeneity improves learning of temporal tasks and gives robustness against hyperparameter mistuning. And it does so at no metabolic cost. The same performance with homogeneous networks requires 10x more neurons! So, surely the brain uses this. (10/16)

This is also good for neuromorphic computing and ML: adding neuron level heterogeneity costs only O(n) time and memory, whereas adding more neurons and synapses costs O(n²). (11/16)
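The scaling argument is easy to check with a bit of arithmetic. This sketch compares the extra parameters from per-neuron time constants with the extra synapses from a 10x larger recurrent layer. The layer size `n = 128`, all-to-all recurrent connectivity, and two time constants per neuron are assumptions chosen for illustration.

```python
def synapse_count(n_neurons):
    """All-to-all recurrent connectivity: one weight per ordered pair (assumed)."""
    return n_neurons * n_neurons

def heterogeneity_cost(n_neurons, params_per_neuron=2):
    """Extra parameters from per-neuron time constants.

    params_per_neuron=2 assumes one membrane and one synaptic time constant.
    """
    return params_per_neuron * n_neurons

n = 128  # hypothetical layer size
extra_for_heterogeneity = heterogeneity_cost(n)                     # grows as O(n)
extra_for_10x_neurons = synapse_count(10 * n) - synapse_count(n)    # grows as O(n^2)
print(extra_for_heterogeneity, extra_for_10x_neurons)
```

Under these assumptions the heterogeneous route adds a few hundred parameters, while the 10x route adds 99·n² recurrent weights.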

That's it for the results, we hope that this spurs people to look further into the importance of heterogeneity, e.g. spatial or cell type heterogeneity, and whether it can be useful in ML too. (12/16)

This work was done by two super talented PhD students, Nicolas Perez and Vincent Cheung, neither of whom is on Twitter unfortunately. (13/16)

And if you're interested in this area, there's a fascinating literature on heterogeneity, briefly reviewed in this paper, including similar work with a more neuromorphic angle from @SanderBohte and Tim Masquelier. Nice review by @GjorJulijana: https://www.sciencedirect.com/science/article/abs/pii/S0959438815001865 (14/16)

This work was only possible thanks to two incredible scientific developments. The first is new methods of training spiking neural networks from @hisspikeness and others. We had a workshop on this over the summer, videos available at https://www.youtube.com/playlist?list=PL09WqqDbQWHFvM9DFYkM_GfnrVnIdLRhy (15/16)

The second is the availability of incredible experimental datasets thanks to orgs like @AllenInstitute, https://www.neuroelectro.org/ ( @neuronJoy @rgerkin) and individual labs like @AuditoryNeuro. Thank you so much for your generosity! (16/16)

And well done for making it to the end - let me know if you have any questions or comments!

