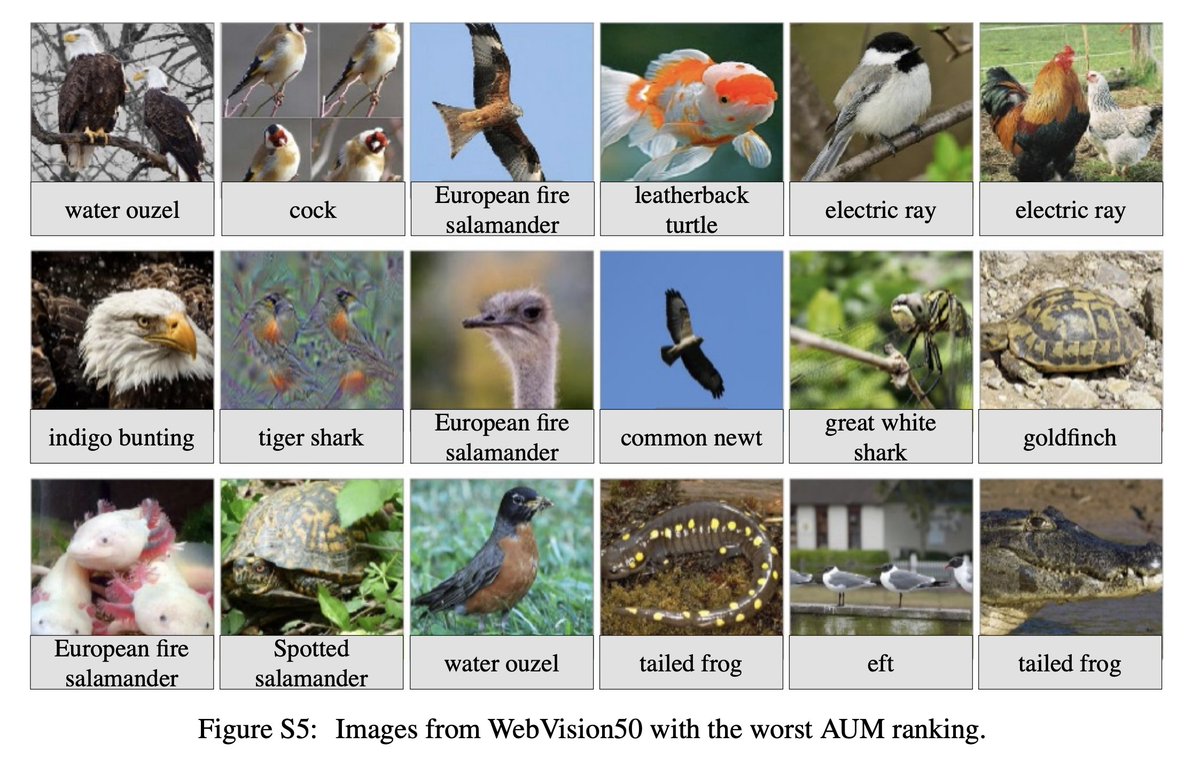

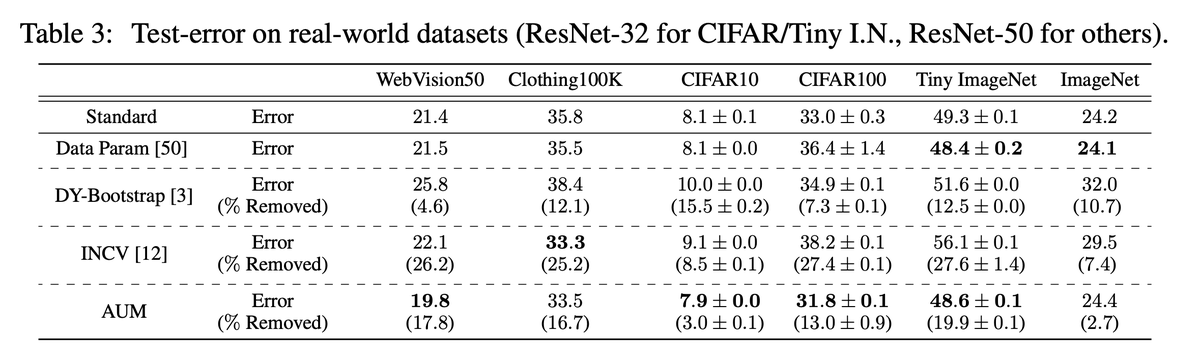

Thrilled to see a NeurIPS 2020 paper that ekes out a bit more performance on benchmark image datasets by...removing mislabeled examples from the train set!

"Identifying Mislabeled Data using the Area Under the Margin Ranking": https://arxiv.org/pdf/2001.10528.pdf (1/4)

When I do DS, I think about: "Whenever model performance is bad, we (scientists and practitioners) shouldn’t only resort to investigating model architecture and parameters. We should also be thinking about “culprits” of bad performance in the data." https://www.shreya-shankar.com/making-ml-work/ (2/4)

In industry, I learned so much from my DS colleagues by word-of-mouth: for example, oftentimes I get bigger performance boosts from dropping mislabeled examples or intelligently imputing features in "incomplete" data points than from mindless hyperparameter tuning. (3/4)
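
For anyone who wants to try the "drop mislabeled examples" idea, here is a rough sketch of the statistic the paper's title refers to, as I understand it: for each training example, take the logit of its assigned label minus the largest other logit (the margin), and average that over training epochs. Low or negative values flag likely label errors. The function names, the toy logits, and the fixed cutoff `k` are all my own assumptions for illustration; this is not the authors' code, and a fixed `k` is only a placeholder, not the paper's way of choosing a threshold.

```python
import numpy as np

def area_under_margin(logits_per_epoch, labels):
    """Average margin per example across epochs.

    logits_per_epoch: array of shape (epochs, n_examples, n_classes),
        the logits recorded for every training example at each epoch.
    labels: int array of shape (n_examples,), the (possibly noisy) labels.
    """
    logits = np.asarray(logits_per_epoch, dtype=float)
    labels = np.asarray(labels)
    idx = np.arange(labels.shape[0])

    # Logit of the assigned label, shape (epochs, n_examples).
    assigned = logits[:, idx, labels]

    # Largest logit among the other classes: mask out the assigned one.
    others = logits.copy()
    others[:, idx, labels] = -np.inf
    largest_other = others.max(axis=2)

    # Margin per epoch, then averaged over epochs.
    return (assigned - largest_other).mean(axis=0)

def lowest_aum_indices(aum, k):
    """Indices of the k examples with the lowest average margin (hypothetical cutoff)."""
    return np.argsort(aum)[:k]

# Toy data: 2 epochs, 3 examples, 3 classes. The third example is labeled 2,
# but the model keeps preferring class 0, so its margin stays negative.
toy_logits = [
    [[3.0, 1.0, 0.0], [0.0, 2.0, 1.0], [2.0, 0.0, 1.0]],
    [[4.0, 1.0, 0.0], [0.0, 3.0, 1.0], [3.0, 0.0, 1.0]],
]
toy_labels = [0, 1, 2]

aum = area_under_margin(toy_logits, toy_labels)
suspects = lowest_aum_indices(aum, k=1)
print(aum, suspects)
```

In practice you would log logits during a normal training run, rank by this score, and eyeball the lowest-ranked examples before dropping anything.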

It's quite amazing to see that this could work on benchmark datasets 1+ *decade* after release :)

h/t @AlexTamkin for sharing the paper with me and authors @gpleiss, @Tianyi_Zh, @side1track1, and Kilian Q. Weinberger (4/4)