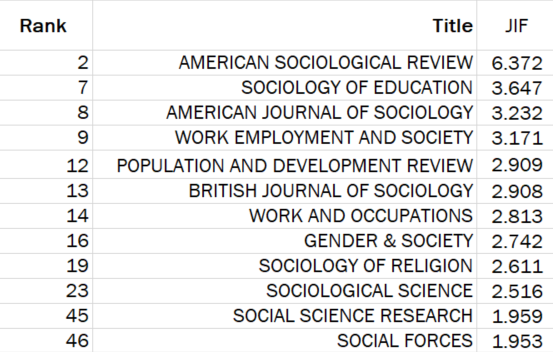

OK bibliometricians and related social scientists, let me know how you like this. If it passes twitter I'll elevate to a blog post. Here are 12 sociology journals and their Journal Impact Factors from Web of Science. They rank between 2 and 46 in the WoS "Sociology" category /1

JIF is "the average of the sum of the citations received in a given year to a journal's previous two years of publications." It's used to estimate future impact of new articles. If you publish in high-JIF journals, we assume your articles will be impactful. But... /2
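To make the arithmetic concrete, here is a minimal sketch of the usual two-year calculation: citations received this year to the previous two years of items, divided by the number of citable items in those two years. The function name and the numbers are made up for illustration and are not from Web of Science.

```python
def journal_impact_factor(citations_to_prev_two_years, citable_items_prev_two_years):
    """Two-year JIF: this year's citations to items from the previous
    two years, divided by the count of citable items from those years."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_to_prev_two_years / citable_items_prev_two_years


# Hypothetical journal: 150 citable items over two years, cited 300 times this year.
example_jif = journal_impact_factor(300, 150)
```

Because this is a mean, a few heavily cited papers can pull it well above what a typical paper in the journal receives.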

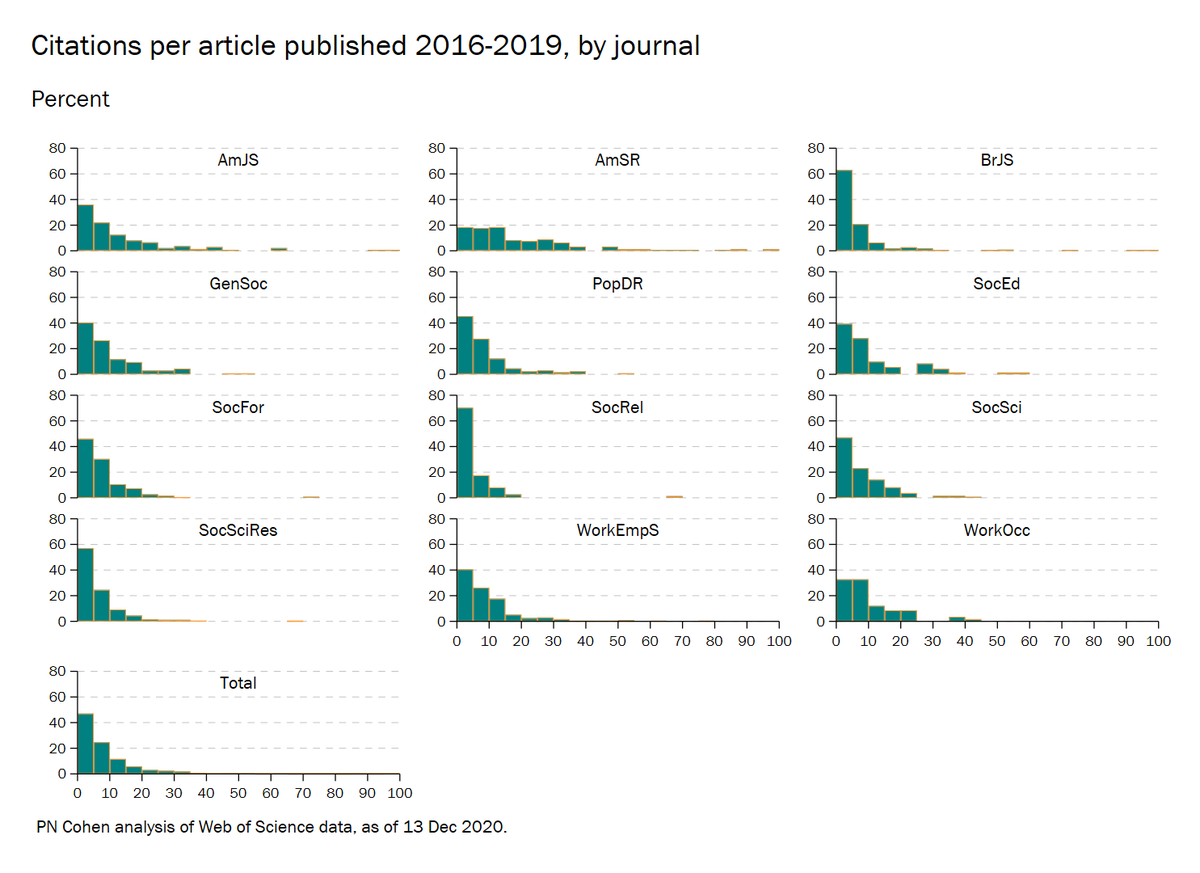

...there is a (very skewed) distribution of citations to papers within journals, so JIF is at best a noisy indicator. Taking all articles in the years 2016-2019, here is the distribution of citations for each of these journals. Big spreads and (generally lognormal) skew. /3

So, if you assume a paper in AmSR (American Sociological Review, JIF=6.37) will get more citations than one in SocFor (Social Forces, JIF=1.95), you will usually be right but sometimes wrong. If what you care about is actual citations (impact), this should concern you /4

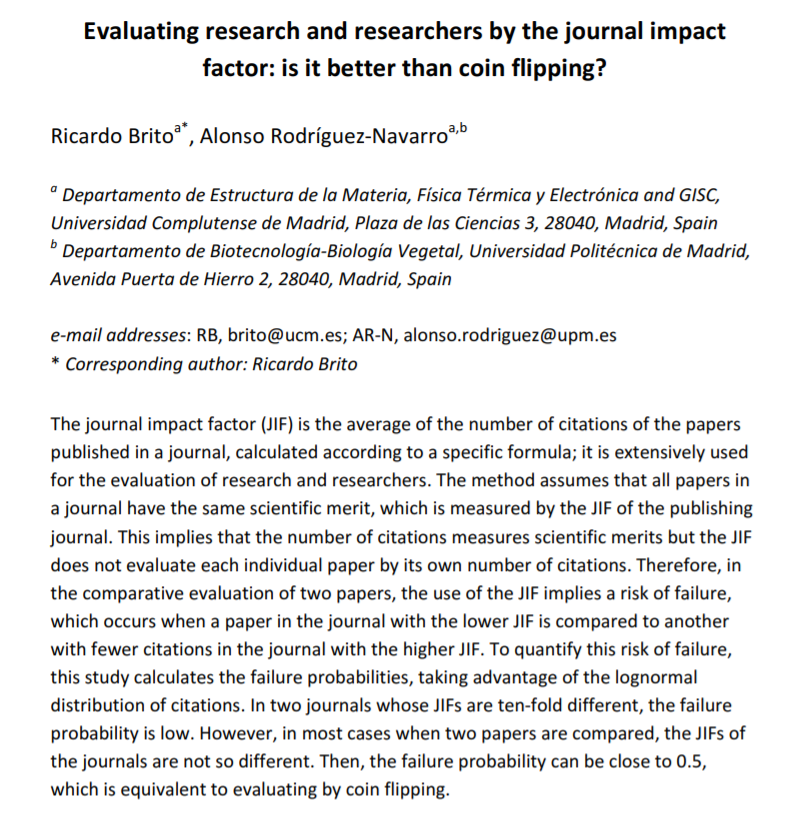

Today I read this cool paper that estimates this "failure probability," the chance that your guess about which paper will be more impactful turns out to be wrong. When JIFs are similar, the chance of an error is very high, like a coin flip. /5 https://arxiv.org/ftp/arxiv/papers/1809/1809.10999.pdf

The formulas look pretty complicated to me, so for my sociology approach I just did it by brute force. I did 10,000 comparisons of random papers from each of these 12 journals to see how often the one from the higher-JIF journal had more citations. /6
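For anyone who wants to try the brute-force idea, here is a rough Python sketch of one pairwise comparison. It is not the code in the OSF project; the function name, the seed, and the toy citation lists are assumptions for illustration. It draws one random paper from each journal, repeats 10,000 times, and counts a match only when the higher-JIF journal's paper has strictly more citations, so ties (including 0-0) count as failures.

```python
import random


def share_correct(higher_jif_cites, lower_jif_cites, n_draws=10_000, seed=0):
    """Share of random pairings in which the paper from the higher-JIF
    journal has strictly more citations. Ties count as failures."""
    rng = random.Random(seed)  # fixed seed so reruns give the same answer
    wins = 0
    for _ in range(n_draws):
        a = rng.choice(higher_jif_cites)  # one random paper, higher-JIF journal
        b = rng.choice(lower_jif_cites)   # one random paper, lower-JIF journal
        if a > b:
            wins += 1
    return wins / n_draws


# Toy citation counts, invented for illustration (not real journal data).
journal_a = [0, 2, 3, 5, 8, 13, 40]  # stands in for the higher-JIF journal
journal_b = [0, 0, 1, 2, 4, 6, 15]   # stands in for the lower-JIF journal
rate = share_correct(journal_a, journal_b)
```

Running this over every pair of journals would fill in a table of percent-correct rates; one minus the rate is the failure probability for that pair under this tie rule.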

Warning: These sociology journals are a lot closer together in JIF than the science journals Brito & Rodríguez-Navarro used, which ranged from 14.8 to 1.3. These sociology journals only range from 6.37 to 1.95. That hurts our chances of guessing right. /7

So here is what I got: Percent correct in this color-coded table. 55% of matches replicated the JIF ranking - that is, the higher-JIF journal paper had more cites. Note: The way I did it, ties (including 0-0 ties) count as failure because they don't match the ranking. /8

These rates are driven by both mean and distribution differences, so it's nonlinear, but in general really high-JIF journals get better predictions. Really low-JIF ones score poorly because they have a lot of zeros, I think. That paper excluded zero-cited papers, but that seems wrong. /9

Conclusion: Using JIF to decide which of two papers in different sociology journals is likely to be more impactful (to be cited more) is a bad idea. So? Lots of people know JIF is noisy but can't help themselves when evaluating CVs for hiring or promotion. /10

When you show them evidence like this, they might say "but what is the alternative?" But as Brito & Rodríguez-Navarro write: "if something were wrong, misleading, and inequitable the lack of an alternative is not a cause for continuing using it." /11

So, these error rates are unacceptably high. OK thanks for listening. If it looks like I did it really wrong, just holler. Reviewer 2s welcome. Thanks. I'll post a link to the OSF project in a few minutes. /12

OK, data and code are up here: https://osf.io/zutws/ . This includes the lists of all articles in the 12 journals from 2016 to 2020 and their citation counts as of today (I excluded 2020 papers from the analysis). /fin
