Grateful to receive the Best Paper Award at SemEval for our work on propaganda detection. The jury appreciated our combination of neural models with traditional ML methods, as well as analyses providing "clear conclusions for future researchers."

https://www.aclweb.org/anthology/2020.semeval-1.187/ #COLING2020 #SemEval2020

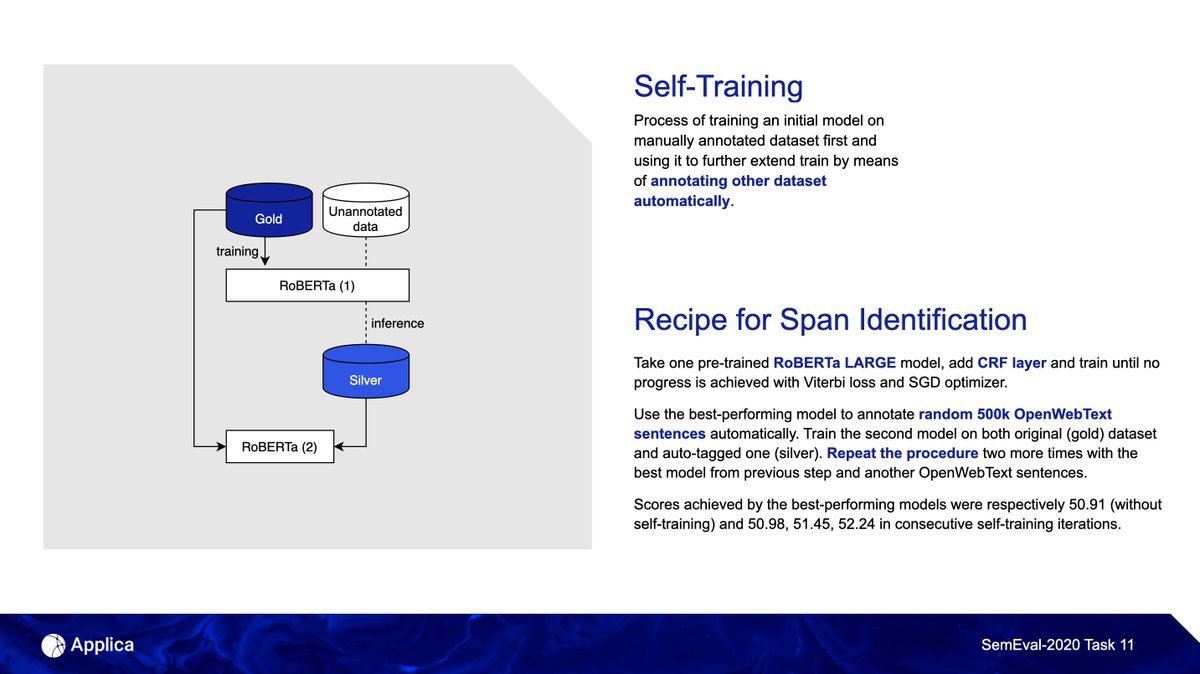

We approached the Span Identification problem as a sequence labeling task, using a RoBERTa model with a Conditional Random Field (CRF) layer on top. Our initial model, trained on the manually annotated dataset, was then used to extend the training set by automatically annotating additional articles.
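
A CRF layer scores whole tag sequences instead of choosing each token's label independently. The sketch below shows generic Viterbi decoding for a linear-chain CRF, assuming a BIO tag set and hand-made emission scores standing in for RoBERTa outputs; it is an illustration of the technique, not our actual implementation.

```python
from typing import List, Sequence, Tuple

TAGS = ["O", "B", "I"]  # assumed BIO tag set: Outside, Begin, Inside a span


def viterbi_decode(
    emissions: Sequence[Sequence[float]],
    transitions: Sequence[Sequence[float]],
) -> Tuple[List[int], float]:
    """Return the best-scoring tag sequence and its total score.

    emissions[t][j]  : score of tag j at token t (stand-in for encoder output)
    transitions[i][j]: score of moving from tag i to tag j (learned by the CRF)
    """
    n_tags = len(transitions)
    # score[j] = best score of any tag path ending in tag j at the current token
    score = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(n_tags):
            # Best previous tag to come from when the current tag is j
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Follow back-pointers from the best final tag
    best_last = max(range(n_tags), key=lambda j: score[j])
    path = [best_last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[best_last]


# Toy scores: token 1 slightly prefers "I" on its own, but O -> I is heavily penalized
emissions = [[2.0, 0.0, 0.0], [0.0, 1.0, 1.5], [0.0, 0.0, 2.0]]
NEG = -1e4
transitions = [[0.0, 0.0, NEG], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
path, best = viterbi_decode(emissions, transitions)
print([TAGS[i] for i in path], best)  # ['O', 'B', 'I'] 5.0
```

A per-token argmax over the same emissions would give O, I, I, an invalid sequence; the transition scores let the CRF repair it to O, B, I.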

The CRF layer seems to have a noticeable positive impact on the scores achieved without self-training. Further self-training iterations slightly improve mean scores and decrease variance. The CRF layer has a positive effect regardless of the fraction of the training set available.
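
The self-training procedure can be sketched as a generic loop: train on gold data, pseudo-label extra articles, retrain on both. The `train` and `predict` callables and the toy "model" below are hypothetical placeholders; the thread does not say whether predictions were filtered by confidence, so this sketch keeps all of them.

```python
from typing import Callable, List, Sequence, Tuple

Span = Tuple[int, int]            # [start, end) character offsets
Example = Tuple[str, List[Span]]  # (article text, propaganda spans)


def self_train(
    labeled: Sequence[Example],
    unlabeled: Sequence[str],
    train: Callable[[Sequence[Example]], object],
    predict: Callable[[object, str], List[Span]],
    iterations: int = 2,
) -> object:
    """Generic self-training loop: pseudo-label extra articles, then retrain."""
    model = train(labeled)  # initial model: manually annotated data only
    for _ in range(iterations):
        # Automatically annotate the extra articles with the current model
        pseudo = [(text, predict(model, text)) for text in unlabeled]
        # Retrain on gold + automatically annotated articles
        model = train(list(labeled) + pseudo)
    return model


# Hypothetical toy "model": the set of span strings seen during training
def toy_train(data: Sequence[Example]) -> set:
    return {text[s:e] for text, spans in data for s, e in spans}


def toy_predict(model: set, text: str) -> List[Span]:
    return [(text.find(p), text.find(p) + len(p)) for p in sorted(model) if p in text]


labeled = [("They are traitors!", [(9, 17)])]
unlabeled = ["Only traitors disagree."]
model = self_train(labeled, unlabeled, toy_train, toy_predict, iterations=1)
print(toy_predict(model, unlabeled[0]))  # [(5, 13)]
```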

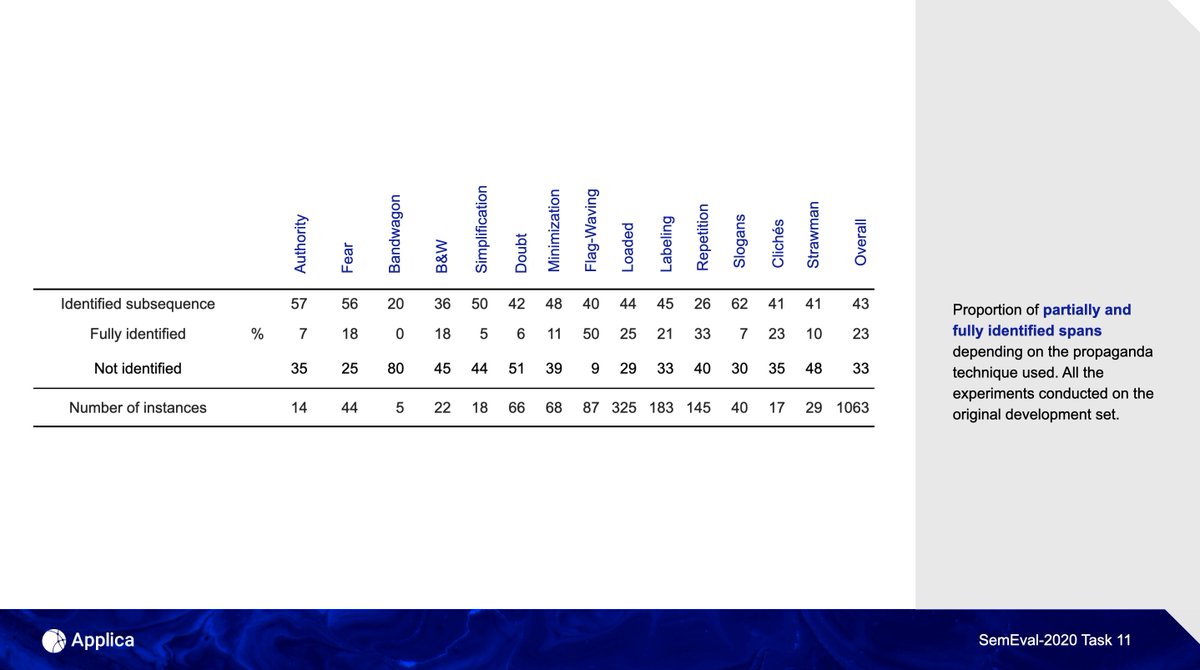

There were several common mistakes. For example, our system tends to return ranges that omit adjacent punctuation. Moreover, it frequently identifies two techniques separated by the conjunction “and” as a single span.

Our system failed to identify one-third of the expected spans, and most of the spans it did find were partial rather than exact matches. Bandwagon, Doubt, and the group Whataboutism, Strawman, Red Herring turned out to be the hardest to spot.
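
Partial matches still earn credit under an overlap-based span metric. The sketch below is a simplified version in the spirit of the task's scorer, as we understand it: credit is the overlapping character count normalized by the predicted span length (precision) or the gold span length (recall). It ignores technique labels and assumes non-empty spans; the official scorer may differ in details.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # [start, end) character offsets, assumed non-empty


def overlap(a: Span, b: Span) -> int:
    """Number of characters shared by two spans."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


def partial_prf(pred: List[Span], gold: List[Span]) -> Tuple[float, float, float]:
    # Precision: how much of each predicted span is covered by gold spans
    precision = (
        sum(overlap(s, t) / (s[1] - s[0]) for s in pred for t in gold) / len(pred)
        if pred else 0.0
    )
    # Recall: how much of each gold span is covered by predicted spans
    recall = (
        sum(overlap(s, t) / (t[1] - t[0]) for s in pred for t in gold) / len(gold)
        if gold else 0.0
    )
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [(0, 10), (20, 30)]
pred = [(2, 10)]  # partial match of the first gold span; the second is missed
p, r, f = partial_prf(pred, gold)
print(p, r, round(f, 3))  # 1.0 0.4 0.571
```

A missed gold span contributes nothing to recall, while a partial match still contributes the covered fraction.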

In the second task, Technique Classification, the text span to classify is already known. We injected special tokens marking the beginning and the end of that span. As a result, we could use the representation of the [BOS] token, as in plain sequence classification.
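
A minimal sketch of the marker-injection idea, assuming hypothetical marker strings `[SPAN]` and `[/SPAN]` (the actual tokens we used are not stated here):

```python
SPAN_START, SPAN_END = "[SPAN]", "[/SPAN]"  # hypothetical marker tokens


def mark_span(text: str, start: int, end: int) -> str:
    """Wrap text[start:end] in marker tokens so a plain sequence classifier
    (reading the [BOS] representation) knows which span to label."""
    if not 0 <= start < end <= len(text):
        raise ValueError(f"invalid span ({start}, {end}) for text of length {len(text)}")
    return text[:start] + SPAN_START + text[start:end] + SPAN_END + text[end:]


print(mark_span("They are traitors!", 9, 17))  # They are [SPAN]traitors[/SPAN]!
```

With Hugging Face Transformers, such markers would typically be registered via `tokenizer.add_special_tokens({"additional_special_tokens": [SPAN_START, SPAN_END]})` followed by `model.resize_token_embeddings(len(tokenizer))`, so each marker gets its own embedding; for RoBERTa the [BOS] token is `<s>`.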

But there are other ways to classify a span of text. For example, one could run an RNN over the selected tokens only. We did something similar, but with a transformer instead of an RNN. Our final submission was an ensemble of three models.
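
The thread does not say how the three models were combined. Averaging class probabilities (soft voting) is one common choice and is shown here purely as an assumption; the probability values are made up.

```python
from typing import Dict, Sequence


def average_ensemble(prob_dists: Sequence[Dict[str, float]]) -> str:
    """Soft voting: average per-class probabilities, return the top label."""
    labels = prob_dists[0].keys()
    avg = {lab: sum(p[lab] for p in prob_dists) / len(prob_dists) for lab in labels}
    return max(avg, key=avg.get)


# Made-up outputs of three hypothetical span classifiers for one span
m1 = {"Loaded_Language": 0.6, "Doubt": 0.4}
m2 = {"Loaded_Language": 0.3, "Doubt": 0.7}
m3 = {"Loaded_Language": 0.45, "Doubt": 0.55}
print(average_ensemble([m1, m2, m3]))  # Doubt
```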

We found that frequent predictors of low accuracy include, among others, span linguistic complexity, negation, and the bigram “according to”, which is related to reported or indirect speech.
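
One simple way to probe a single factor such as “according to” is to split accuracy by whether the span contains the phrase. The helper names and the toy examples below are hypothetical and illustrate the computation only; they are not our evaluation data.

```python
from typing import List, Optional, Tuple

Example = Tuple[str, str, str]  # (span text, gold label, predicted label)


def accuracy(examples: List[Example]) -> Optional[float]:
    """Fraction of correct predictions, or None for an empty group."""
    if not examples:
        return None
    return sum(gold == pred for _, gold, pred in examples) / len(examples)


def accuracy_split(examples: List[Example], phrase: str) -> Tuple[Optional[float], Optional[float]]:
    """Accuracy on spans containing `phrase` vs. spans without it (case-insensitive)."""
    phrase = phrase.lower()
    with_phrase = [ex for ex in examples if phrase in ex[0].lower()]
    without = [ex for ex in examples if phrase not in ex[0].lower()]
    return accuracy(with_phrase), accuracy(without)


toy = [
    ("According to experts, the vaccine is a hoax", "Appeal_to_Authority", "Doubt"),
    ("the so-called experts", "Doubt", "Doubt"),
    ("a total disaster", "Loaded_Language", "Loaded_Language"),
]
print(accuracy_split(toy, "according to"))  # (0.0, 1.0)
```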

The complete presentation video is available at https://www.underline.io/events/54/sessions/1426/lecture/6662-applicaai-at-semeval-2020-task-11-on-roberta-crf,-span-cls-and-whether-self-training-helps-them (currently for COLING participants only).

![image 3](https://pbs.twimg.com/media/EpEvMckWEAEEDLA.jpg)