1/ Science Fictions (Stuart Ritchie)

"Entirely avoidable errors routinely make it past the Maginot Line of peer review. Books, media reports and our heads are being filled with ‘facts’ that are incorrect, exaggerated, or drastically misleading." (p. 5)

https://www.amazon.com/Science-Fictions-Negligence-Undermine-Search-ebook/dp/B07WZ7TRC4/

2/ "A set of experiments on 1,000 people found evidence for the ability to see the future using ESP. The paper was written by a top psychology professor, Daryl Bem, from Cornell. It was published in one of the most highly regarded, mainstream peer-reviewed psychology journals."

3/ "Our replication attempt showed nothing. Our undergrads weren’t psychic.

"We sent the paper to the same journal, the Journal of Personality and Social Psychology. The editor rejected it, explaining their policy of never publishing studies that repeated a previous experiment.

4/ "The journal had published a paper that had made extremely bold claims, ones that, if true, would completely revolutionize science.

"Yet the editors wouldn’t even consider publishing a replication study that called the findings into question." (p. 1)

5/ "Science, widely considered one of the world’s most prestigious scientific journals (second only to Nature), published a paper by Diederik Stapel, a social psychologist at Tilburg University in the Netherlands.

6/ "The paper, entitled ‘Coping with Chaos’, described several studies performed in the lab and on the street, finding that people showed more prejudice – and endorsed more racial stereotypes – when in a messier or dirtier environment.

7/ "In a subsequent confessional autobiography, Stapel admitted that instead of collecting the data for his studies, he would sit alone late into the night, typing the numbers he required for his imaginary results into a spreadsheet, making them all up from scratch.

8/ "Since then, no fewer than 58 of his studies have been retracted due to their fake data.

"These cases were perfect examples of much wider problems with the way we do science.

"If it won’t replicate, it’s hard to describe what you’ve done as scientific at all." (p. 4)

9/ "In the case of Stapel, nobody had ever even *tried* to replicate his findings. In other words, the community had demonstrated that it was content to take the dramatic claims in these studies at face value without checking how durable the results really were.

10/ "Science, the discipline in which we should find the harshest skepticism, the most pin-sharp rationality and the hardest-headed empiricism, has become home to a dizzying array of incompetence, delusion, lies and self-deception." (p. 7)

11/ "Science is inherently a *social* thing: you have to convince other people of what you’ve found. And since science is also a *human* thing, scientists are prone to irrationality, biases, lapses in attention, in-group favoritism, and outright cheating to get what they want.

12/ "Our publication system, far from overriding the human problems, allows them to leave their mark on the scientific record – precisely because it believes itself to be unbiased. The mere existence of the peer-review system seems to have stopped us from recognizing its flaws.

13/ "The way academic research is currently set up *incentivizes* these problems, encouraging researchers to obsess about prestige, fame, funding and reputation at the expense of rigorous, reliable results.

14/ "More than perhaps any other field, psychology has begun to recognize our deep-seated flaws and to develop systematic ways to address them – ways that are beginning to be adopted across many different disciplines of science." (p. 7)

15/ "It took until the 1970s for all journals to adopt the modern model of sending out submissions to independent experts for peer review, giving them the gatekeeping role they have today.

"In 1942, Merton set out four scientific values, now known as the ‘Mertonian Norms’.

16/ "1. Universalism: The race, sex, age, gender, sexuality, income, social background, nationality, popularity, or any other status of a scientist should have no bearing on how factual claims are assessed." (p. 21)

Related reading: the genetic fallacy

https://en.wikipedia.org/wiki/Genetic_fallacy

17/ "2. Disinterestedness

3. Communality: We have to know the details of other scientists’ work so we can assess/build on it.

4. Organized skepticism: a claim should never be accepted at face value. We suspend judgement until we’ve properly checked the data/methodology." (p. 21)

18/ "In theory, science is self-correcting.

"In practice, though, the publication system sits awkwardly with the Mertonian Norms, in many ways obstructing the process of self-correction." (p. 22)

More on this:

Structure of Scientific Revolutions (Kuhn) https://twitter.com/ReformedTrader/status/1331998734276653059

19/ "Perhaps Kahneman shouldn’t have placed such complete trust in priming effects, despite their being published in the journal Science.

"Along with Stapel’s fraud and Bem’s weird psychic results, the attempted replication of a priming study spurred the replication crisis.

20/ "Kahneman later admitted that he’d made a mistake in overemphasising the scientific certainty of priming effects. ‘The experimental evidence for the ideas was significantly weaker than I believed when I wrote it,’ he commented 6 years after publishing Thinking, Fast and Slow.

21/ " ‘This was an error: I knew all I needed to know to moderate my enthusiasm… but I did not think it through.’

"But the damage had already been done: millions of people had been informed by a Nobel Laureate that they had ‘no choice’ but to believe in those studies." (p. 25)

22/ "Perhaps the most famous psychology study of all time is the 1971 Stanford Prison Experiment.

"Put a good person into a bad situation, the story goes, and things might get very bad, very fast. The experiment is taught to essentially every undergraduate psychology student.

23/ "On its basis, Zimbardo became among the most respected psychologists. He used the findings to testify as an expert witness at the trials of US military guards at Abu Ghraib prison.

"Only recently have we begun to see just how poor a study the Stanford Prison Experiment was.

24/ "In 2019, researcher and film director Thibault Le Texier published the paper ‘Debunking the Stanford Prison Experiment’, where he produced transcripts of tapes of Zimbardo intervening directly in the experiment, giving his ‘guards’ very precise instructions on how to behave.

25/ "He went as far as to suggest specific ways of dehumanizing the prisoners, like denying them the use of toilets.

"Despite the enormous attention it’s received over the years, the ‘results’ of the experiment are scientifically meaningless." (p. 29)

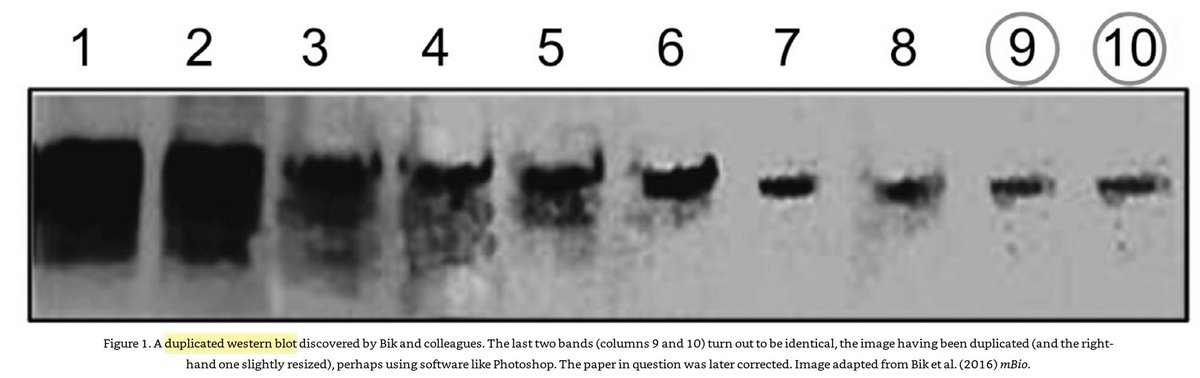

26/ "To get an idea of how bad things were, psychologists ran large-scale replications of prominent studies across multiple different labs. The highest-profile of these involved a large consortium of scientists who tried to replicate 100 studies from 3 top psychology journals.

27/ "The results were published in Science in 2015. Only 39% of studies were judged to have replicated successfully.

"Another effort in 2018 tried to replicate 21 social-science papers from the world’s top two journals, Nature and Science. The replication rate was 62%." (p. 29)

28/ "Further collaborations that looked at a variety of different kinds of psychological phenomena found rates of 77%, 54%, and 38%.

"Almost all of the replications, even where successful, found that the original studies had exaggerated the size of their effects.

29/ "The replication crisis wiped half of all psychology research off the map.

"The studies that failed to replicate continued to be routinely cited by scientists and other writers: entire lines of research and bestselling popular books were built on their foundation." (p. 31)

30/ "Although no other sciences have investigated their replication rates as systematically as for psychology, there are glimmers of these problems across many different fields (economics, neuroscience, evolutionary biology, ecology, marine biology, organic chemistry)." (p. 31)

31/ "Even with the exact same data sets as used in published studies, some results still can't be reproduced. Sometimes it’s due to errors in the original studies. Other times, the original authors weren’t clear, so the exact steps can't be retraced by independent researchers.

32/ "In macroeconomics, a re-analysis of 67 studies could only reproduce the results from 22 of them using the same datasets, and the level of success improved only modestly after the researchers appealed to the original authors for help.

33/ "In geoscience, researchers had at least minor problems getting the same results in 37 out of 39 studies.

"ML researchers could reproduce only 7 of the 18 recommendation-algorithm studies that had been recently presented at prestigious computer science conferences." (p. 34)

34/ "Scientists at Amgen attempted to replicate fifty-three landmark preclinical cancer studies that had been published in top scientific journals. A mere 11% of the replication attempts were successful.

"Similar attempts at another firm, Bayer, produced a 20% success rate.

35/ "This lack of a firm underpinning in preclinical research might be among the reasons why the results from trials of cancer drugs are so often disappointing. By one estimation, only 3.4% of such drugs make it all the way from preclinical studies to use in humans." (p. 36)

36/ "In 2013, cancer researchers attempted to replicate 51 important preclinical cancer studies in independent labs.

"In every one of the original papers, for every one of the experiments reported, there wasn’t enough information provided to know how to re-run the experiment.

37/ "When asked for that information, 45% of the original researchers were rated by replicators as ‘minimally/not at all’ helpful.

"A study took a random sample of 268 biomedical papers, including clinical trials, and found that all but one failed to report their full protocol.

38/ "Another analysis found that 54% of biomedical studies didn’t fully describe what kind of animals, chemicals or cells they used.

"If the necessary details only appear after months of emailing with the authors (or are lost forever), what is the point of publishing papers?

39/ "For the cancer research replication project, all the troubles with trying to replicate the studies, combined with some financial problems, meant that the researchers had to cut steadily down the number of intended to-be-replicated studies, going from fifty to only eighteen.

40/ "Fourteen of these have been reported at the time of this writing. Five clearly replicated crucial results from the original studies, four replicated parts of them, three were clear failed replications, and two had results that couldn’t even be interpreted." (p. 38)

41/ "Common treatments, it turns out, are often based on low-quality research; instead of being solidly grounded in evidence, accepted medical wisdom is instead regularly contradicted by new studies." (p. 38)

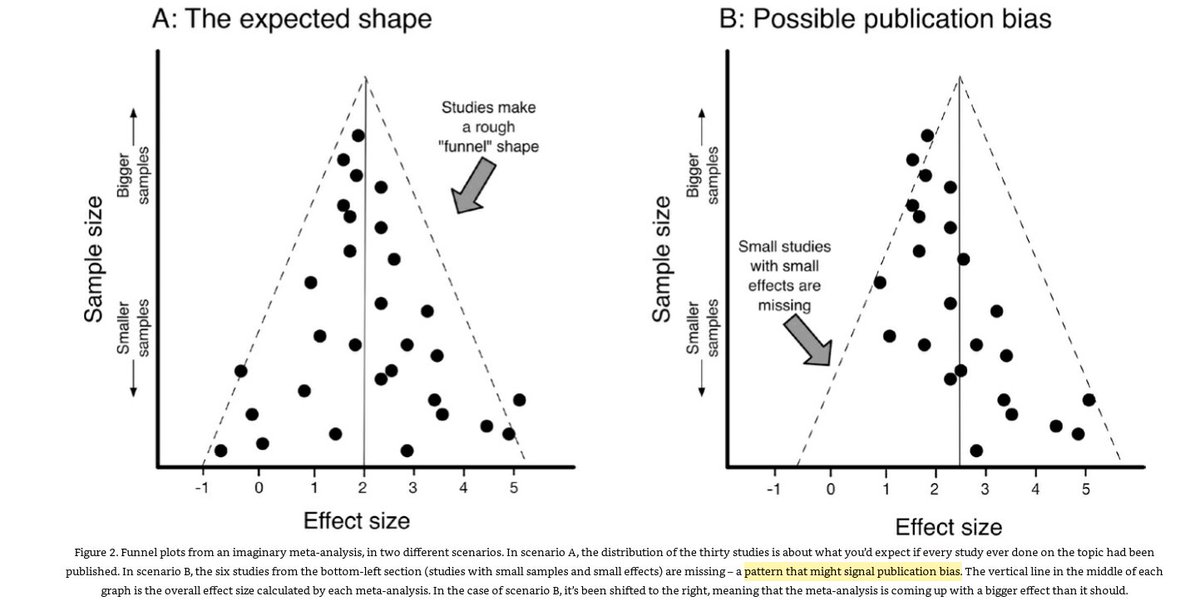

42/ "Scientists have let doctors and patients down by creating a constant state of flux in the medical literature, running and publishing poor-quality studies that even students in undergraduate classes would recognize as inadequate. We knew how to do better – and yet we didn’t.

43/ "Of the many comprehensive reviews published by the Cochrane Collaboration, a highly reputable charity that assesses the quality of medical treatments, 45% conclude that there’s insufficient evidence to decide whether the treatment in question works or not." (p. 40)

44/ "A 2016 survey of over 1,500 researchers – though admittedly not a properly representative one, since it just involved those who filled in a questionnaire on the website of the journal Nature – found that 52% thought there was a ‘significant crisis’ of replicability.

45/ "90% of chemists and 80% of biologists said that they’d had the experience of failing to replicate another researcher’s result, as did 70% of physicists, engineers, and medical scientists.

"A slightly lower percentage said they’d had trouble replicating their *own* results.

46/ "A 2005 article by John Ioannidis titled ‘Why Most Published Research Findings Are False’ had a mathematical model that concluded just that:

"Once you consider the many ways that studies can go wrong, any given claim in a paper is more likely to be false than true." (p. 42)
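
Ioannidis’s model turns on the positive predictive value (PPV): the probability that a statistically significant finding reflects a true relationship. Below is a minimal Python sketch of the simplest, bias-free form of his formula, PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a tested relationship is true, α is the significance threshold and 1 − β is statistical power. The example inputs (one true hypothesis per ten tested, α = 0.05) are illustrative assumptions, not numbers from the book.

```python
def ppv(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Probability that a statistically significant finding is true.

    Bias-free form of the Ioannidis (2005) formula:
        PPV = (1 - beta) * R / (R - beta * R + alpha)
    where R = pre-study odds of a true relationship and power = 1 - beta.
    """
    beta = 1.0 - power
    return (power * prior_odds) / (prior_odds - beta * prior_odds + alpha)


# Illustrative assumption: 1 in 10 tested hypotheses is true, i.e. R = 1/9.
for power in (0.8, 0.5, 0.2):
    print(f"power={power:.1f}  PPV={ppv(1 / 9, power):.2f}")
```

With these assumed inputs, PPV is 0.64 at 80% power but drops below 0.5 once power falls under 0.45. Ioannidis’s full model adds terms for bias and for several teams chasing the same question, which pull PPV down further.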

47/ More on the Stanford Prison Experiment: https://twitter.com/ReformedTrader/status/1187039786256584704

48/ "The fact that the scientific community so proudly cherishes an image of itself as objective and scrupulously honest – a system where fraud is complete anathema – might, perversely, be what prevents it from spotting the bad actors in its midst.

49/ "In 1961, Science magazine apologized for publishing an article by Indian researchers who claimed to have found the parasite *Toxoplasma gondii* for the first time in chicken eggs. The evidence – microscope photos of its cysts in an egg – was, it turned out, fake.

50/ "What the researchers claimed were two different cysts were in fact the same photo that had been zoomed out and flipped horizontally, a duplication that’s crashingly obvious in retrospect, but that the peer reviewers missed.

51/ "Image duplication featured in some of the most prominent fraud cases of recent decades.

"In 2004, Woo-Suk Hwang announced in Science that he’d cloned human embryos. In that journal, he later reported he’d produced, from those embryos, the first cloned human stem cell lines.

52/ "Hwang was venerated in the media. Posters bore his face alongside slogans like ‘Hope of the World – Dream of Korea.’ The Korean post office issued a stamp celebrating his work.

"The Korean government named Hwang ‘the Supreme Scientist’, pouring enormous sums into his research.

53/ "But further inspection of the paper in Science revealed that two of the images, purportedly showing Hwang’s cell lines for different patients, were identical.

"There was also overlap in two more, which came from parts of the same photo but had been passed off as different.

54/ "Whistleblowers from Hwang’s lab revealed that only two cell lines had been created, not eleven, and neither were from cloned embryos. The rest of the cell photos had been doctored or deliberately mislabeled under Hwang’s instructions. The research project had been a charade.

55/ "Hwang had siphoned off some of his research funding into bank accounts he controlled. Although he claimed the money was still spent on scientific apparatus, an investigation revealed that the ‘apparatus’ included a new car for his wife & donations to supportive politicians.

56/ "Hwang was easily the most famous scientist in his country and one of the world’s most prominent biologists.

"The system is largely built on trust: everyone assumes ethical behavior on the part of everyone else. Unfortunately, that’s an environment where fraud can thrive.

57/ "Fakers, like parasites, can free-ride off of the community's collective goodwill.

"The fact that Hwang's acts were so shameless showcases how gullible reviewers and editors (the people we rely on to be rigorously skeptical) can be when faced with exciting results." (p. 58)

58/ "In 2014, scientists at Japan’s RIKEN institute published two papers in Nature reporting new results on induced pluripotent stem cells.

"The papers claimed to have found another way to produce the cells: a technique called STAP, for ‘Stimulus-Triggered Acquisition of Pluripotency’.

59/ "All you had to do was bathe adult cells in a weak acid (or provide another kind of mild stress, like physical pressure).

"The lead researcher, Haruko Obokata, assembled impressive-looking evidence, illustrated with microscope images, graphs and blots showing DNA evidence.

60/ "But the images had been doctored.

"She’d also included figures from older research that she rebranded as new and faked data that showed how quickly the cells had grown. Any actual evidence was due to her allowing samples to become contaminated with embryonic stem cells."

61/ "If this kind of fraud occurs at the very highest levels of science, it suggests that there’s much more of it that flies under the radar, in less well-known journals.

"So how often do biologists fake the images in their papers?

62/ "In 2016, microbiologist Elisabeth Bik and her colleagues searched forty biology journals for papers that included western blots, eventually finding 20,621. Of these, 3.8% included a problematic image.

63/ "In a later analysis of papers from just one cell-biology journal, Bik and her colleagues found an even higher percentage: 6.1%. Of these, many were just honest mistakes and the authors could issue a correction that solved the problem. However, around 10% of the flagged papers were retracted.

64/ "More prestigious journals were less likely to have published papers with image duplication.

"When Bik and her team found faked images, they checked to see if other publications by the same author also had image duplications. This was true 40% of the time." (p. 60)

65/ "Michael LaCour, a grad student at UCLA, published an eye-catching result in Science in 2014 from a large-scale door-to-door survey. The data showed that being approached by a gay campaigner had a big, positive long-term impact on people’s opinions towards gay marriage.

66/ "That is, meeting someone from the minority whose rights are in question made respondents much more likely to support those rights. It was immediately used by the (ultimately successful) campaign for the legalization of same-sex marriage in Ireland’s 2015 referendum.

67/ "But it turned out that LaCour had taken the results from the older CCAP survey, jiggled the numbers by adding random noise, and pretended they’d come from his new study.

"The many details LaCour provided in the paper about training the canvassers were entirely fictional.

68/ ‘There were stories, anecdotes, graphs and charts. You’d think no one would do this except to explore a real data set.’

"Science retracted the paper. LaCour lost a job offer – largely based on his precocious publication in such a prestigious journal – from Princeton." (p. 64)

69/ "What LaCour, like Sanna, Smeesters, and Stapel before him, got from his data fabrication was *control*. The study fit the exact specifications required to convince Science’s peer reviewers it was worth publishing.

70/ "It was what the publication system and the university job market appeared to demand: not a snapshot of a messy reality where results can be unclear and interpretations uncertain, but a clean, impactful result that could immediately translate to use in the real world.

71/ "The lesson of the data fraud stories is that the bar might be set rather too low for true organized skepticism to be taking place. For the sake of the science, it might be time for scientists to start trusting each other a little less." (p. 65)

72/ "Honest mistakes make up 40% or less of total retractions. The majority are due to some form of immoral behaviour, including fraud (around 20%), duplicate publication, and plagiarism.

"Just 2% of individual scientists are responsible for 25% of all retractions." (p. 66)

73/ "The champion of retractions is the anaesthesiologist Yoshitaka Fujii, who invented data from nonexistent drug trials and whose retracted papers number 183.

"The investigators listed papers published by Fujii that they concluded didn’t contain any fraud. There were three.

74/ "The data, just like in the cases we saw above, were far too uniform to be real. But absolutely nothing was done for over a decade, and all the while Fujii continued to publish fake papers in highly regarded anaesthesiology journals." (p. 66)

75/ "What happens if instead you simply ask scientists, anonymously, whether they’ve ever committed fraud? The biggest study on this question to date pooled research from seven surveys, finding that 1.97% of scientists admit to faking their data at least once.

76/ "People are naturally loath to confess to fraud, even in an anonymous survey, so the real number is surely much higher. Indeed, when surveys asked scientists how many other researchers they know who have falsified data, the figure jumped up to 14.1%." (p. 68)

77/ "In one survey of Chinese biomedical researchers, participants estimated that 40% of all biomedical articles by their compatriots were affected by some kind of scientific misconduct; 71% said that authorities in China paid ‘no or little attention’ to misconduct cases." (p. 70)

78/ "In 2001, Jan Hendrik Schön, working at the famed Bell Labs, claimed to have invented a carbon-based transistor where the switching happened within a single molecule.

"A Stanford professor enthused that Schön’s technique was ‘particularly elegant in its simplicity.’

79/ "He won numerous prizes from scientific societies. From 2000-2002, in addition to many papers in excellent specialist physics journals, he published nine articles in Science and seven in Nature. For most scientists, even one paper in either would be the pinnacle of a career.

80/ "There were whispers of a Nobel Prize.

"But other labs had major difficulty repeating Schön’s experiments.

"Several of his papers, ones that claimed to be reporting entirely different experiments, showed exactly the same figure for their results.

81/ "Bell Labs started an investigation, asking for the raw data. Schön informed them that he’d deleted most of his data because his computer ‘lacked sufficient memory’. In the data he did provide, the investigation was still able to find clear evidence of misconduct." (p. 72)

82/ "As whistleblowers, data sleuths and anyone else who’s contacted a scientific journal or university with allegations of impropriety will tell you, getting even a demonstrably fraudulent paper retracted is a glacial process – and that’s if you aren’t simply ignored." (p. 72)

83/ "Joachim Boldt’s faked results made it look as if hydroxyethyl starch was safe to use as a blood volume expander, a verdict bolstered by the fact that a meta-analysis – a review study that pools together all the previous papers on the subject – reached the same conclusion.

84/ "This was only true because Boldt’s fraud hadn’t yet been revealed; the meta-analysis included his fake results. When Boldt’s papers were excluded, the results changed dramatically: patients who’d been given hydroxyethyl starch were, in fact, more likely to die.

85/ "Boldt’s fraud had distorted the entire field of research, endangering patients whose surgeons, perfectly understandably, took the results at face value." (p. 73)

86/ "Since 1998, there have been several large-scale, rigorous studies showing no relation between the MMR vaccine (or any other vaccine) and autism spectrum disorder. It’s also been shown that combination vaccines are just as safe as individual ones.

87/ "[Andrew] Wakefield invented the ‘fact’ that all the children showed their first autism-related symptoms soon after receiving MMR. In reality, some had records of symptoms beforehand, others only had symptoms many months afterwards, and some never received an autism diagnosis at all.

88/ "When [journalist Brian] Deer contacted the Lancet with his concerns, he encountered fierce resistance from the editors (this happened again in the case of [surgeon Paolo] Macchiarini a decade later). The paper was finally retracted after being a part of the scientific literature for twelve years." (p. 76)

89/ "By focusing too much on the ideal of science as an infallible, impartial method, we forget that in practice, biases appear at every stage of the process: reading previous work, setting up a study, collecting the data, analyzing the results, and choosing whether to publish.

90/ "Daniele Fanelli, in a 2010 study, searched through almost 2,500 papers from across all scientific disciplines, totting up how many reported a positive result for the first hypothesis they tested. Different fields of science had different positivity levels.

91/ "The lowest rate, though still high, at 70.2%, was space science. The highest was psychology/psychiatry (91.5%).

"This is publication bias. It’s also known as the ‘file-drawer problem’ – since that’s where scientists are said to be keeping all their null results." (p. 85)
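
The file-drawer mechanism can be made concrete with a small simulation. A minimal Python sketch, assuming a true effect of 0.2 standard deviations, 20 participants per group, a known SD of 1, and a rule that only positive results with z > 1.96 get published (all illustrative assumptions, not figures from the book). It also shows why replications tend to find smaller effects than the originals: the published studies are disproportionately the ones that happened to overshoot.

```python
import random
import statistics


def simulate_file_drawer(true_effect=0.2, n_per_group=20, n_studies=10_000, seed=1):
    """Toy model of publication bias: only 'significant' studies get published.

    Each study compares two groups of n_per_group observations drawn from
    normal distributions with SD 1 whose means differ by true_effect.
    Returns (mean effect of all studies, mean effect of published studies,
    share of studies published).
    """
    rng = random.Random(seed)
    se = (2 / n_per_group) ** 0.5  # standard error of a difference in means (known SD = 1)
    all_effects, published = [], []
    for _ in range(n_studies):
        treat = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        effect = statistics.fmean(treat) - statistics.fmean(control)
        all_effects.append(effect)
        if effect / se > 1.96:  # the rest go in the file drawer
            published.append(effect)
    return statistics.fmean(all_effects), statistics.fmean(published), len(published) / n_studies


all_mean, published_mean, share = simulate_file_drawer()
print(f"all: {all_mean:.2f}  published: {published_mean:.2f}  share published: {share:.0%}")
```

With these assumed settings, only about 9% of studies clear the filter, and their average effect is several times the true 0.2, even though no individual study is fraudulent.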

92/ Related reading on the idea that science is driven by assumptions and biases as well as by empirical investigation: https://twitter.com/ReformedTrader/status/1331998734276653059

93/ "A double standard, based on the entrenched human tendency towards confirmation bias (interpreting evidence in the way that fits our pre-existing beliefs and desires), is at the root of publication bias." (p. 93)

94/ "Fifteen published papers, documenting forty-three separate experiments, seemed to support the hypothesis of romantic priming (the idea that after being shown a picture of an attractive woman, men are willing to take more risks and to spend more on consumer goods).

95/ "However, when those studies were plotted for the meta-analysis, the funnel had a huge chunk missing: compelling evidence that many of the studies that hadn’t found the effect had been nixed prior to publication.

96/ "Indeed, when Shanks and his colleagues tried to replicate romantic priming in large-scale experiments of their own, their study found no effect from it whatsoever, with all their replication effect sizes converging around zero." (p. 94)
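
The missing chunk of the funnel is described qualitatively here. One widely used formal check for funnel-plot asymmetry is Egger’s regression test (a technique the thread does not name): regress each study’s standardized effect (effect / SE) on its precision (1 / SE); in a symmetric funnel the intercept sits near zero. A minimal pure-Python sketch, run on a hypothetical, simulated literature in which the true effect is zero, large studies (SE < 0.1) are always published, and smaller ones appear only when significantly positive.

```python
import random
import statistics


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Fits effect/SE = a + b * (1/SE) by ordinary least squares and returns
    (a, t statistic for a). An intercept far from zero suggests small-study bias.
    """
    y = [e / s for e, s in zip(effects, std_errors)]
    x = [1 / s for s in std_errors]
    n = len(x)
    x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    intercept = y_bar - slope * x_bar
    residual_var = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    se_intercept = (residual_var * (1 / n + x_bar ** 2 / s_xx)) ** 0.5
    return intercept, intercept / se_intercept


# Hypothetical literature (assumption): true effect zero, studies of varying size.
rng = random.Random(7)
all_studies = []
for _ in range(2000):
    se = rng.uniform(0.05, 0.5)  # smaller SE = larger study
    all_studies.append((rng.gauss(0.0, se), se))

# Large studies always published; small ones only if significantly positive.
published = [(e, s) for e, s in all_studies if s < 0.1 or e / s > 1.96]

for label, studies in (("all", all_studies), ("published", published)):
    intercept, t = egger_test([e for e, _ in studies], [s for _, s in studies])
    print(f"{label:>9}: n={len(studies)}  intercept={intercept:.2f}  t={t:.1f}")
```

Expect the full set to give an intercept close to zero and the "published" subset a clearly positive one. Funnel asymmetry can have causes other than publication bias, such as genuine differences between small and large studies, so a large intercept is a warning sign rather than proof.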

97/ This Amazon review has some interesting counterpoint:

"Testing essentially random hypothesis on inadequate data really isn't experimental science. I would call it an initial effort to understand things well enough that useful experiments can be run."

https://www.amazon.com/Science-Fictions-Negligence-Undermine-Search/dp/1250222699/ref=sr_1_1?dchild=1&keywords=science+fictions&qid=1609353587&sr=8-1#customerReviews

98/ "Publication bias appears no less prominent in medicine. An analysis in 2007, for instance, found that over 90% of articles describing the effectiveness of prognostic tests for cancer reported positive results.

99/ "In reality, we still aren’t particularly good at predicting who’ll get cancer. Another study, which looked at 49 meta-analyses of potential markers of cardiovascular disease, found that 36 showed evidence of a bias towards positive results.

100/ "A 2014 survey of meta-analyses in top medical journals found that 31% didn’t check for publication bias. (Once it was checked for, 19% of those indicated that the bias was present.) A later review of cancer-research reviews indicated 72% didn’t include such checks." (p. 93)

101/ "Funnel plots can have weird shapes for reasons other than publication bias, especially if there are a lot of differences between the assorted studies that go into the meta-analysis.

"Are there better ways to check for publication bias?

102/ "One approach would be to take a set of studies you know for sure were completed, with a range of results from strongly positive to null, then check how many of each type made it through to get published.

"That’s what a group of Stanford researchers did in 2014.

103/ "41% of completed studies found strong evidence for their hypothesis, 37% had mixed results, and 22% were null.

"Of *published* articles, the percentages were 53%, 37%, and 9%.

"65% of studies with null results were never written up (little chance of publication)." (p. 95)

104/ "Scientists whose studies show ambiguous results regularly use practices that nudge (‘hack’) their p-values below the crucial 0.05 threshold.

"They may re-run analyses again and again, each time in a marginally different way, until chance eventually provides a p-value below 0.05.

105/ "They can make impromptu changes by dropping particular data points, re-calculating the numbers within specific subgroups (for instance, checking just in males, then females), trying different statistical tests, or collecting new data until something reaches significance.
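
The last tactic, collecting more data until something reaches significance, is easy to simulate. This is a minimal sketch under stated assumptions: normally distributed data with a known SD of 1, a true effect of zero, a z-test, and a peek after every batch of 10 observations up to 100. The function names are hypothetical.

```python
import math
import random

def p_value(total, n):
    """Two-sided z-test p-value for the mean of n draws from N(0, 1) with known SD."""
    z = total / math.sqrt(n)              # mean * sqrt(n) == total / sqrt(n)
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rate(peek, n_sims=2_000, batch=10, max_batches=10, seed=2):
    """Share of null experiments declared significant, with or without peeking."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        total, n = 0.0, 0
        for b in range(max_batches):
            total += sum(rng.gauss(0, 1) for _ in range(batch))  # the null is true
            n += batch
            is_last = b == max_batches - 1
            # peek=True: test after every batch and stop at the first p < 0.05
            # peek=False: test once, at the planned final sample size
            if (peek or is_last) and p_value(total, n) < 0.05:
                hits += 1
                break
    return hits / n_sims

print("test once at n = 100:   ", false_positive_rate(peek=False))
print("test after every 10 obs:", false_positive_rate(peek=True))
```

Testing once at the planned sample size keeps false positives near the nominal 5%; peeking after every batch and stopping at the first p < 0.05 pushes the rate far higher, even though nothing in the data is real.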

106/ "Alternatively, they may run lots of ad hoc statistical tests on a dataset with no specific hypothesis, then report effects that happen to get p-values below 0.05. They can then declare, convincing even themselves, that they’d been searching for these results from the start.

107/ "If we run five (unrelated) tests, there is a 23% chance of at least one false positive; for twenty tests, it’s 64%. Thus, with multiple tests, we go well beyond the 5% tolerance level.

"This is the same logic that makes seemingly amazing coincidences possible...

108/ " ‘I randomly thought about someone I hadn’t contacted in months, and they sent me a text message at that exact moment!’ is less amazing once you consider how many millions of people in the world have random thoughts about someone and don’t receive such a message." (p. 99)
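
The 23% and 64% figures quoted above come from a one-line formula. If each of k independent tests has a 5% false-positive rate when there is no real effect, the chance that none of them fires is 0.95^k, so the chance of at least one false positive is 1 - 0.95^k. A minimal Python sketch (the function name is hypothetical):

```python
def familywise_error(k, alpha=0.05):
    """Chance of at least one p < alpha among k independent tests when every null is true."""
    return 1 - (1 - alpha) ** k

for k in (1, 5, 20):
    print(f"{k:>2} tests: {familywise_error(k):.0%}")
```

This prints 5%, 23% and 64% for 1, 5 and 20 tests. It assumes the tests are independent, matching the quote's "unrelated" tests.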

109/ "As head of the Cornell Food and Brand Lab, Brian Wansink was the world’s most prominent voice on food psychology: he had directed the USDA’s Center for Nutrition Policy and Promotion under Bush and published hundreds of papers (many cited in the Obama-era ‘Smarter Lunchrooms’ movement).

110/ "He won an Ig Nobel Prize – the fun version of the Nobels for studies that ‘make people laugh, then think’. It was for a study in 2007 where he tricked people into consuming much more soup than they’d intended, by using a rigged soup bowl that continually refilled itself.

111/ "He found that if you go food-shopping while hungry, you’ll buy more calories... and that adding a sticker of Elmo from Sesame Street to an apple makes children more likely to choose it instead of a cookie.

"It wasn’t until late 2016 that it all fell apart.

112/ "p-hacking was only one of a multitude of statistical screw-ups. Across the four papers he’d published using the pizza dataset, a team of skeptics found 150 errors: a host of numbers that were inconsistent between different studies (and sometimes within a single paper).

113/ "In one paper about cookbooks, a re-analysis of the data showed he’d reported almost every single number incorrectly. In the paper about putting Elmo stickers on apples, he’d mislabeled a graph and misdescribed the methodology of the study.

114/ "At the time of writing, eighteen of Wansink’s articles have been pulled from the literature, and one suspects that more will follow.

"Wansink later tendered his resignation to Cornell." (p. 102)

115/ "In 2012, a poll of over 2,000 psychologists asked if they had engaged in a range of p-hacking practices.

"65% said they had collected data on several different outcomes but not reported all of them.

116/ "40% said they had excluded particular data points from their analysis after peeking at the results.

"57% said they’d decided to collect further data after running their analyses – and presumably finding them unsatisfactory." (p. 101)

117/ "A survey of biomedical statisticians in 2018 found that 30% had been asked by scientist clients to ‘interpret statistical findings based on expectations, not actual results.’ 55% had been asked to ‘stress only significant findings but underreport nonsignificant ones’.

118/ "32% of economists in another survey admitted to ‘presenting empirical findings selectively so that they confirmed their argument.’ 37% said they had ‘stopped statistical analysis when they had their result’ (whenever p happened to drop below 0.05, even if by chance).

119/ "If you collect together all the p-values from published papers and graph them by size, you see a strange, sudden bump just under 0.05 – there are a lot more 0.04s, 0.045s, 0.049s, and so on, than you’d expect by chance." (p. 104)
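
One mechanism that could produce such a bump can be sketched in a few lines. The simulation below is hypothetical: every study tests a true null effect with a z-test, and in the "hacked" literature any study landing at 0.05 ≤ p < 0.10 collects one extra batch of data of the same size and reports the pooled result. The function names are illustrative.

```python
import math
import random

def p_from_z(z):
    """Two-sided p-value for a z statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

def simulate_literature(n_studies=20_000, hack=True, seed=3):
    """Null-effect studies; with hack=True, p in [0.05, 0.10) triggers one extra batch."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(n_studies):
        z1 = rng.gauss(0, 1)                       # z-score of the first batch
        p = p_from_z(z1)
        if hack and 0.05 <= p < 0.10:
            z2 = rng.gauss(0, 1)                   # an equally sized second batch
            p = p_from_z((z1 + z2) / math.sqrt(2))  # z-score of the pooled data
        p_values.append(p)
    return p_values

def count_between(ps, lo, hi):
    return sum(lo <= p < hi for p in ps)

for hack in (False, True):
    ps = simulate_literature(hack=hack)
    print(f"hack={hack}: p in [0.04, 0.05): {count_between(ps, 0.04, 0.05)}, "
          f"p in [0.05, 0.06): {count_between(ps, 0.05, 0.06)}")
```

Without hacking, the bins just below and just above 0.05 hold roughly equal numbers of studies, as they should when p-values are uniform under the null. With hacking, the bin just above 0.05 is drained and the bin just below it swells: a small-scale version of the bump.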

120/ "P-hacking can feel to the scientist as if they’re making their result, which is in their mind probably true, clearer or more realistic.

"That one petri dish? It did have a speck of dirt and might be contaminated, so it’s best to drop its results from the dataset.

121/ "Yes, it does make more sense to run statistical test X instead of test Y (and, oh look, X gives positive results!).

"If you already believe your hypothesis is true before testing it, it can seem reasonable to give any uncertain results a push in the right direction.

122/ "There’s never just one way to analyze a dataset.

"Meta-science experiments in which multiple research groups analyze the same dataset or design their own studies to test the same hypothesis have found a high degree of variation in method and results." (p. 104)

123/ "Publication bias and p-hacking are two manifestations of the same phenomenon: a desire to erase results that don’t fit well with a preconceived theory.

"This phenomenon was illustrated by a clever meta-scientific study by a group of business-studies researchers.

124/ "The researchers called what happened between dissertation and journal publication ‘the chrysalis effect’. By publication, initially ugly findings often changed, with messy-looking, non-significant results dropped or altered in favor of a clear, positive narrative." (p. 109)

125/ "Ben Goldacre’s ‘COMPare Trials’ project took all clinical trials published in the five highest-impact medical journals for a period of four months, attempting to match them to their registrations. Of sixty-seven trials, only nine reported everything they said they would.

126/ "354 outcomes had disappeared between registration and publication; 357 unregistered outcomes appeared ex nihilo. An audit in anaesthesia found that 92% of trials had switched at least one outcome (the switches tended to favor outcomes with statistically significant results)." (p. 111)

127/ "Industry-funded drug trials are more likely to report positive results. In a recent review, for every positive trial funded by a government or non-profit source, there were 1.27 positive trials by drug companies.

128/ "Industry-funded trials are more likely to compare a new drug to a placebo rather than to the next-best alternative drug, artificially making the new product look better.

"Industry-sponsored trials are file-drawered more often than those funded by other sources." (p. 111)

129/ "When a lucrative career (bestselling books, 5- and 6-figure sums for lectures and consulting) rests on the truth of a certain theory, the scientist gains new motivations in their day job: to publish only studies that support that theory (or p-hack them until they do).

130/ "Other scientists really *want* their studies to provide strong results in order to fight a disease, a social or environmental ill, or some other important problem.

"But null results matter too if a study is properly designed." (p. 113)

131/ "Amyloid plaques are *associated* with Alzheimer's symptoms but aren't the *cause*.

"Proponents of the amyloid hypothesis, many of whom are powerful, well-established professors, act as a ‘cabal’, shooting down papers that question the hypothesis with nasty peer reviews...

132/ "torpedoing the attempts of heterodox researchers to get funding and tenure.

"The researchers are so attached to the amyloid hypothesis – which they genuinely believe in as the best route towards breakthroughs – that they’ve developed a strong bias in its favor." (p. 114)

133/ "Scientists routinely fail to apply organized skepticism to their own favored theories.

"Surveys in the U.S. have found a 10:1 ratio of liberals to conservatives in psychology.

"A 2015 piece by several prominent psychologists argued political bias could be particularly damaging.

134/ "If the vast majority of a community shares a political perspective, peer review is substantially weakened.

"Scientists might pay disproportionate attention to politically acceptable topics, even if they’re backed by relatively weak evidence, and avoid others." (p. 116)

135/ "Nuijten et al. fed statcheck over thirty thousand papers: a gigantic sample of studies published in eight major psychology research journals between 1985 and 2013.

"13% had a serious mistake that might have completely changed the interpretation of their results.

136/ "Rather than being random, the inconsistencies that statcheck flagged tended to be in the authors’ favor – that is, mistaken numbers tended to make the results more, rather than less, likely to fit with the study’s hypothesis." (p. 126)
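
statcheck itself is an R package; the Python sketch below only illustrates its core idea: recompute a p-value from a reported test statistic and compare it with the reported p-value. It is a hypothetical simplification. It handles z-tests only, ignores rounding of the test statistic, and uses a deliberately crude regular expression, whereas the real tool also covers t, F, r and chi-square tests. A "gross" error here means the mismatch flips the verdict on significance at α = 0.05.

```python
import math
import re

# Matches APA-style z-test reports such as "z = 2.10, p = .04" (simplified on purpose)
REPORT = re.compile(r"\bz\s*=\s*(-?\d+(?:\.\d+)?),\s*p\s*([<=>])\s*(0?\.\d+)")

def check_z_reports(text, alpha=0.05):
    """Hypothetical statcheck-style consistency check for reported z-tests."""
    results = []
    for match in REPORT.finditer(text):
        z, op, p_text = float(match.group(1)), match.group(2), match.group(3)
        reported = float(p_text)
        decimals = len(p_text.split(".")[1])
        recomputed = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from z
        if op == "=":
            consistent = abs(round(recomputed, decimals) - reported) < 1e-9
            claims_significance = reported < alpha
        elif op == "<":
            consistent = recomputed < reported
            claims_significance = reported <= alpha
        else:  # ">"
            consistent = recomputed > reported
            claims_significance = False
        results.append({
            "report": match.group(0),
            "recomputed_p": round(recomputed, 4),
            "consistent": consistent,
            # gross error: the mistake changes significant <-> non-significant
            "gross_error": not consistent and claims_significance != (recomputed < alpha),
        })
    return results

sample = "... (z = 2.10, p = .04) ... (z = 1.50, p = .03) ... (z = 2.50, p = .02)"
for result in check_z_reports(sample):
    print(result)
```

For the sample text, the first report is consistent; the second claims p = .03 when z = 1.50 actually gives p ≈ 0.13 (a gross error); the third is off (p ≈ 0.012, not .02) but stays significant either way.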

137/ "An editorial in the prestigious Nature Reviews Cancer: ‘Thousands of misleading papers have been published using incorrectly identified cell lines.’

"A 2017 analysis found 32,755 papers that used impostor cells and over 500,000 papers that cited those contaminated studies.

138/ "A survey of Chinese labs found that up to 46% of cell lines were misidentified. Another study noted that up to 85% of cell lines thought to be newly established in China were contaminated with U.S. cells.

"36% of papers using contaminated cells were from the U.S." (p. 131)

139/ "I found editorials/calls to action about cell line misidentification in prominent journals from 2010, 2012, 2015, 2017 & 2018, continuing the calls that go back to the 1950s.

"Cell biologists' lackadaisical response is a decades-long denial of a serious failing." (p. 132)

140/ "A 2013 review looked at a variety of neuroscientific studies, including research on sex differences in the ability of mice to navigate mazes. To have enough statistical power, this type of study would typically require 134 mice. The average study included only 22 mice.

141/ "This seems to be a problem across most types of neuroscience. Large-scale reviews have also found that underpowered research is rife in medical trials, biomedical research more generally, economics, brain imaging, nursing research, behavioral ecology & psychology." (p. 136)
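
The book does not spell out the assumptions behind the 134-mouse figure. Assuming a comparison of two equal groups, a two-sided test at α = 0.05, a target of 80% power and a normal approximation (all of these are assumptions), 134 mice implies a standardized effect size of about d = 0.48, and at that effect size a 22-mouse study (11 per group) has only about 20% power. The function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def power_two_groups(d, n_per_group, alpha=0.05):
    """Normal-approximation power of a two-sided, two-sample test for standardized effect d."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    # the tiny chance of rejecting in the wrong tail is ignored
    return Z.cdf(d * math.sqrt(n_per_group / 2) - z_crit)

def effect_for_power(n_per_group, alpha=0.05, power=0.80):
    """Smallest d detectable with the requested power at this sample size."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    return (z_crit + Z.inv_cdf(power)) * math.sqrt(2 / n_per_group)

d = effect_for_power(134 // 2)  # effect size implied by the 134-mouse figure
print(f"implied effect size d = {d:.2f}")
print(f"power with 134 mice: {power_two_groups(d, 134 // 2):.0%}")
print(f"power with 22 mice:  {power_two_groups(d, 22 // 2):.0%}")
```

Under these assumptions, a typical 22-mouse study would miss a real effect of this size roughly four times out of five. The normal approximation slightly flatters small samples; an exact t-test power calculation would come out a little lower still.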

142/ "Since underpowered studies only have the power to detect large effects, those are the only effects they see: so if you find an effect in an underpowered study, *that effect is probably exaggerated*.

"Since large effects are exciting, they're more likely to get published.

143/ "This suggests why so many tiny studies in the scientific literature seem to be reporting big effects: as we saw with the funnel plots in the last chapter, journals often miss the small studies that were discarded for failing to detect anything ‘interesting’." (p. 138)
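
The exaggeration can be simulated directly. In this sketch the true standardized effect is a hypothetical d = 0.2; each study's estimate is drawn from a normal distribution with the approximate standard error sqrt(2/n) for n subjects per group, and we average the size of the estimates among studies that reach two-sided p < 0.05. The function name is illustrative.

```python
import math
import random

def significant_effects(true_d, n_per_group, n_sims=20_000, seed=4):
    """Mean |estimate| among significant studies, and the share that are significant."""
    rng = random.Random(seed)
    se = math.sqrt(2 / n_per_group)  # approximate SE of a standardized mean difference
    hits = []
    for _ in range(n_sims):
        estimate = rng.gauss(true_d, se)
        if abs(estimate) / se > 1.96:  # two-sided p < 0.05
            hits.append(abs(estimate))
    return sum(hits) / len(hits), len(hits) / n_sims

for n in (20, 500):
    avg, share = significant_effects(true_d=0.2, n_per_group=n)
    print(f"n={n:>3} per group: {share:.0%} significant, "
          f"mean significant effect {avg:.2f} (true effect 0.20)")
```

With 20 subjects per group, only about one study in ten reaches significance, and those that do report an effect averaging several times the true 0.2. With 500 per group, most studies are significant and their average is close to the truth.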

144/ "When it comes to studies of complex systems (the body, brain, ecosystem, economy, or society), it’s rare to find one factor that has a massive effect. Instead, most of the psychological, social and medical phenomena we’re interested in are made up of lots of small effects.

145/ "The fact that small effects are so much more common and, collectively, so much more influential than big ones makes underpowered studies, which portray a world full of large effects, all the more misleading." (p. 139)

146/ "A study in the prestigious journal Nature Neuroscience in 2003 claimed that memory performance was 21% poorer in people who had one particular variant of the 5-HT2a gene.

"Geneticists also started elucidating the biological pathways between genes and traits.

147/ "When I was an undergraduate (2005-09), candidate gene studies were the subject of intense and excited discussion. By the time I got my PhD in 2014, they were almost entirely discredited.

"Technology improved, making it possible to measure people’s genotypes more cheaply.

148/ "Consequently, we could use much larger samples. Geneticists also started taking a different approach: instead of looking at just a handful of candidate genes, they looked simultaneously across many thousands of points on DNA. These studies had far better statistical power.

149/ "Efforts that specifically tried to replicate the candidate gene studies with high statistical power have produced flat-as-a-pancake null results.

"They were building a massive edifice of detailed studies on foundations that we now know to be completely false.

150/ "The initial candidate gene studies, being small-scale, could only see large effects – so large effects were what they reported. In hindsight, these were freak accidents of sampling error... a chain of misleading findings that became mainstream, gold-standard science.

151/ "Large chunks of scientific literature – ones based on small studies with implausibly large effects – could well be just as mistaken and mirage-filled as the research on candidate genes.

152/ " ‘My students need to publish papers to be competitive on the job market, and they can’t afford to do large-scale studies.’ Well-intentioned individual scientists are systematically encouraged to accept compromises that ultimately render their work unscientific." (p. 142)
