A deeply interesting tutorial by @fchollet @MelMitchell1 @ChrSzegedy at #NeurIPS2020

" #Abstraction & #Reasoning in #AI systems: Modern Perspectives "

or "What are abstraction, generalization and analogical reasoning?"

1/

https://neurips.cc/virtual/2020/protected/tutorial_877466ffd21fe26dd1b3366330b7b560.html

@fchollet begins by laying some foundations in notation and background.

E.g., generalization is a spectrum (and today's #ML mostly operates at its lower end)

2/

And Abstraction? It is the engine behind generalization!

And of course it comes in different flavors:

- program-centric: akin to high-level reasoning (inducing and merging programs)

- value-centric: interpolating between examples, essentially what #DL does and excels at!

3/
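
To make the two flavors concrete, here is a toy sketch (hypothetical, not from the tutorial): a value-centric learner that answers by copying the output of the nearest stored example, versus a program-centric learner that enumerates compositions from a tiny made-up DSL (`inc`, `dec`, `double`, `square` are illustrative names) and keeps the shortest program consistent with every example. The hidden rule and the data are assumptions chosen for illustration.

```python
from itertools import product

# Toy training examples (assumed): (input, output) pairs of a hidden rule, here y = 2x + 1.
EXAMPLES = [(0, 1), (1, 3), (2, 5), (3, 7)]

def value_centric(x, examples=EXAMPLES):
    """Interpolation-style answer: return the output of the closest stored example."""
    nearest = min(examples, key=lambda ex: abs(ex[0] - x))
    return nearest[1]

# Hypothetical mini-DSL of primitives that programs are composed from.
PRIMITIVES = {
    "inc": lambda v: v + 1,
    "dec": lambda v: v - 1,
    "double": lambda v: v * 2,
    "square": lambda v: v * v,
}

def run(program, x):
    """Apply the primitives of a program left to right."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x

def induce_program(examples=EXAMPLES, max_len=3):
    """Brute-force program induction: shortest composition consistent with all examples."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None

program = induce_program()
print(program)             # ('double', 'inc')
print(run(program, 100))   # 201: the induced program extrapolates
print(value_centric(100))  # 7: interpolation just copies the closest example
```

Both learners fit the training points; only the induced program extrapolates far outside them. The catch is that enumeration grows exponentially with program length, which is where value-centric guidance could help.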

And since we most likely need BOTH kinds of abstraction to generalize, whatever some #DL aficionados conjecture, #DL alone is not going to solve everything

right, @GaryMarcus ; ) ?

4/

So the most natural thing is to leverage both: program-centric abstraction (e.g., via program induction) and value-centric abstraction via #DL

This successful #hybrid #AI approach is, for instance, the recipe behind Alpha{Go|Star|Fold|...}!

5/

Now it's time for @MelMitchell1 to talk about #analogical reasoning!

Ever since the seminal inception of #AI at Dartmouth, the challenge of learning #concepts and #abstractions has remained wide open!

6/

This challenge, of course, did not go unnoticed in the modern #AI, #ML and #DL communities.

Take for instance Raven's progressive matrices as a test of (A)I: complete a 3x3 grid of 8 images by choosing the missing 9th, i.e., solve an analogical reasoning task

7/

What would the #DL approach be on Raven's matrices?

Generate enough #data procedurally and... feed it to a #deep neural net!

And ta-da!

If you place your convolutions correctly, you get super-human performance!

arxiv: https://arxiv.org/abs/2002.01646

8/

...however, this is yet another case where the network is smart enough to take a #shortcut hidden in the data-generation process

(waiting for another tutorial where #intelligence is correlated with #laziness)

If you remove this shortcut, performance suddenly drops close to chance

9/
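
For a concrete feel of such a shortcut: later analyses of the RAVEN dataset (e.g., the I-RAVEN work) reported that each wrong answer was generated by changing a single attribute of the correct one, so the answer can be found by a per-attribute majority vote over the candidates without ever looking at the matrix. Assuming this is the kind of bias meant here, the toy below reproduces it; the attribute encoding and sizes are hypothetical.

```python
import random
from collections import Counter

# Hypothetical encoding: a panel is a tuple of discrete attributes, e.g. (shape, size, color).
NUM_ATTRS = 3
NUM_VALUES = 5

def make_candidates(answer, rng, num_distractors=7):
    """Mimic the biased generator: every distractor alters exactly ONE attribute of the answer."""
    candidates = [answer]
    while len(candidates) < num_distractors + 1:
        distractor = list(answer)
        i = rng.randrange(NUM_ATTRS)
        distractor[i] = rng.choice([v for v in range(NUM_VALUES) if v != answer[i]])
        distractor = tuple(distractor)
        if distractor not in candidates:
            candidates.append(distractor)
    rng.shuffle(candidates)
    return candidates

def context_blind_guess(candidates):
    """Never look at the matrix: pick the candidate agreeing most with the per-attribute majority."""
    modes = [Counter(c[i] for c in candidates).most_common(1)[0][0] for i in range(NUM_ATTRS)]
    return max(candidates, key=lambda c: sum(c[i] == modes[i] for i in range(NUM_ATTRS)))

rng = random.Random(0)
trials = 1000
hits = 0
for _ in range(trials):
    answer = tuple(rng.randrange(NUM_VALUES) for _ in range(NUM_ATTRS))
    hits += context_blind_guess(make_candidates(answer, rng)) == answer
print(f"accuracy without seeing the puzzle: {hits / trials:.2f}")  # 1.00 with this generator
```

Under this generator the correct answer always holds the majority value for every attribute, so the guess is perfect. A network can latch onto the same statistic instead of the analogy.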

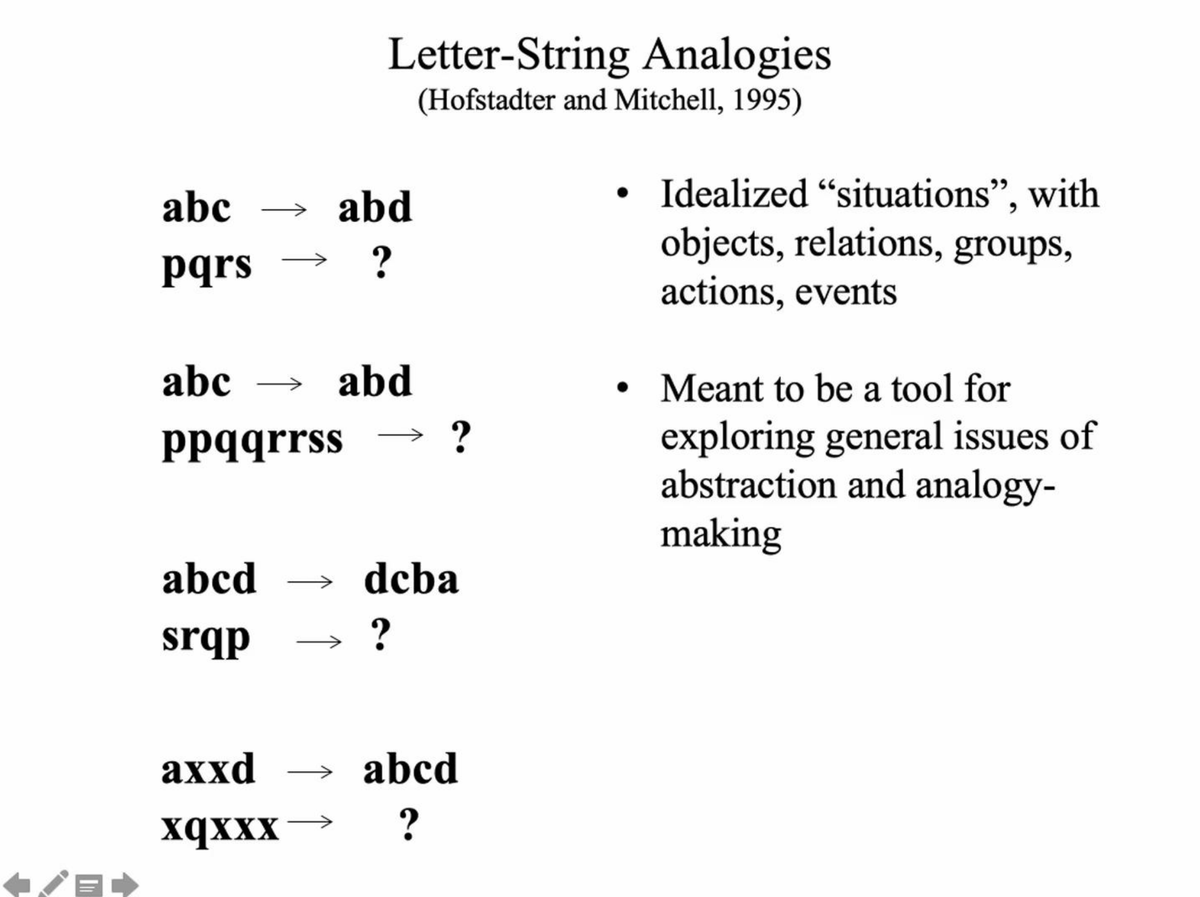

A fascinating prototype of a #hybrid #AI for analogical reasoning is COPYCAT (1995) by @MelMitchell1 and Doug Hofstadter

It solves letter-string analogies (e.g., abc → abd; ijk → ?) by harnessing program-centric abstraction via codelets that read from and write to two kinds of memory

10/

How do we go from COPYCAT to more general concept learning that can be married with #DeepLearning?

Among the future directions @MelMitchell1 sketches, I like the hidden deep analogy: just like our networks, we researchers should not exploit our benchmarks and metrics as... shortcuts!

11/

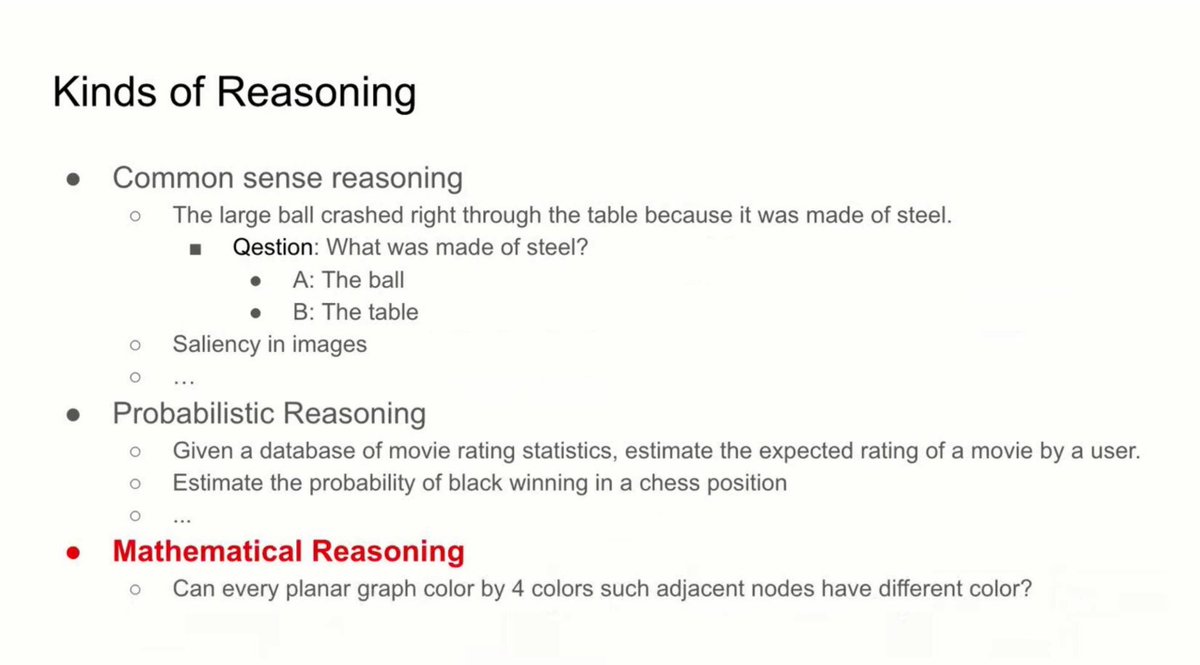

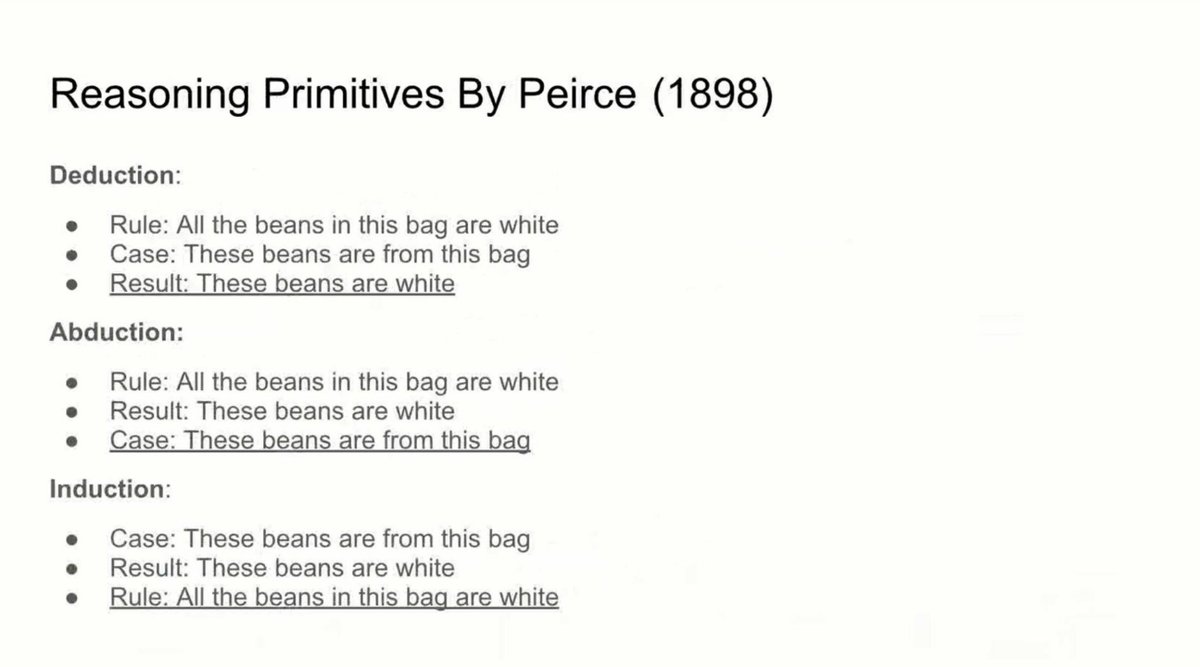

Lastly, @ChrSzegedy looks at #Deep models for mathematical reasoning, aka crisp deductive reasoning

Kudos for citing Peirce, essentially the inventor of #semiotics and the father of modern #design (design = abduction, right @salvzingale?)

12/

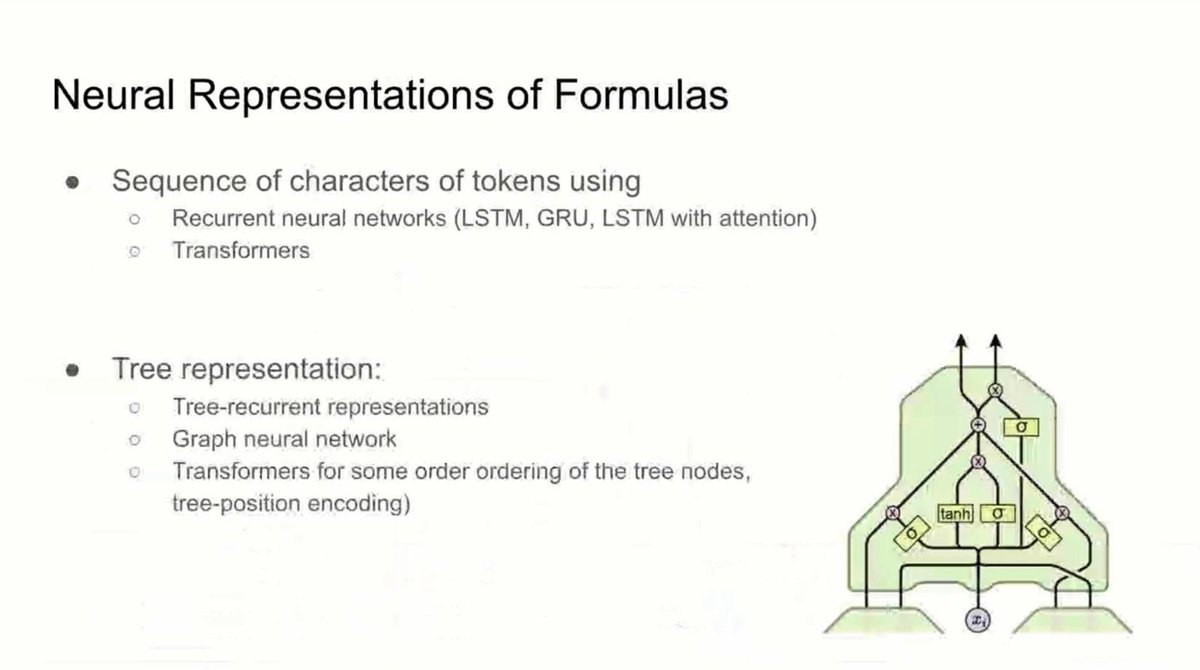

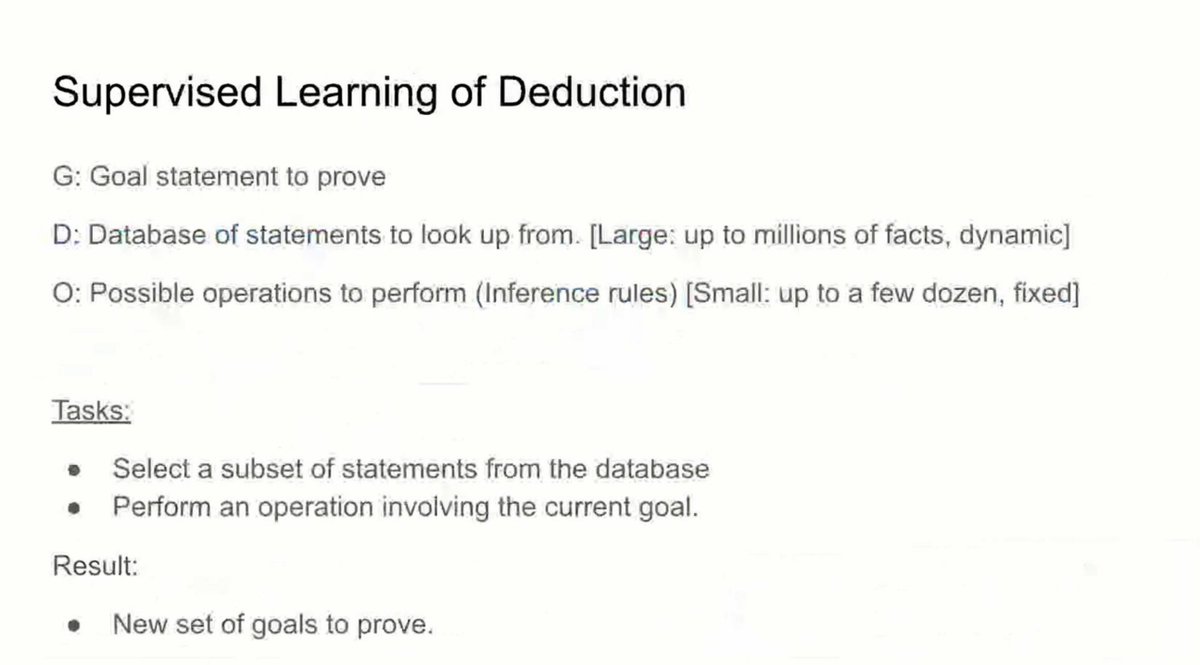

Q: how to do mathematical reasoning with deep architectures?

A: same old deep recipe:

- turn reasoning into (supervised) prediction

- create huge datasets (millions of data points)

- play with neural variants (recurrent/recursive/graph/transformers/...)

13/
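
A minimal sketch of the first two steps of the recipe on a hypothetical toy domain (arithmetic expressions, not the formal-math data from the talk): evaluation is recast as supervised (input string, target value) pairs, generated procedurally at scale. The `depth` knob (an assumption of this sketch) sets how many steps an example needs, so one can train on shallow expressions and hold out deeper ones; the neural model of step 3 is left out.

```python
import random

# Toy arithmetic domain (hypothetical stand-in for the formal-math data in the talk).
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}

def random_expr(depth, rng):
    """Return (expression string, value); each level of depth adds one reasoning step."""
    if depth == 0:
        v = rng.randint(0, 9)
        return str(v), v
    left_str, left_val = random_expr(depth - 1, rng)
    right_val = rng.randint(0, 9)
    op = rng.choice(list(OPS))
    return f"({left_str} {op} {right_val})", OPS[op](left_val, right_val)

def make_dataset(n, depth, seed=0):
    """Steps 1-2 of the recipe: reasoning recast as supervised (input, target) pairs, at scale."""
    rng = random.Random(seed)
    return [random_expr(depth, rng) for _ in range(n)]

# Train on shallow expressions, hold out deeper ones to probe multi-step extrapolation.
train = make_dataset(1000, depth=2, seed=0)
test = make_dataset(100, depth=5, seed=1)
print(train[0])
print(test[0])
```

Any sequence model (recurrent, graph, transformer, ...) could then be fit on `train`; measuring accuracy on `test` is one simple way to ask how far it extrapolates beyond the number of steps seen during training.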

It's not clear how these models generalize or extrapolate, especially when multiple steps (hops) are required

This definitely looks like an open challenge!

And reminds me of the works on Neural Theorem Provers by @PMinervini @riedelcastro @_rockt

14/

The tutorial has ended, but there is still a Q&A session tomorrow

https://twitter.com/MelMitchell1/status/1336110646014791681?s=20

Bonus slide by Doug Hofstadter:

Q: "What is a concept?"

A: "A package of analogies"

15/end
