An AI diversity/ethics researcher/activist was fired after threatening to resign from Google during a spat over a paper she was writing. I won't discuss the drama, and although the paper hasn't been leaked, this article describes it. https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/

So we can get a rough sense of whether the paper is right. Of course, we cannot draw any strong conclusions about the paper from this article alone, so keep in mind that all of this is tentative.

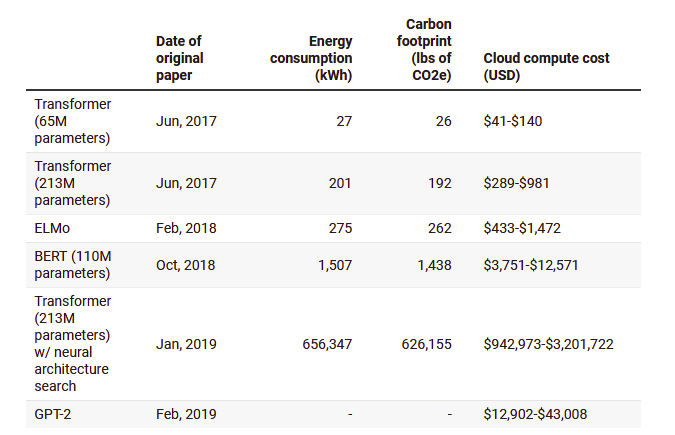

The authors criticize large language models (LLMs) on several points. First, energy use. This is clearly very minor. Assuming a social cost of carbon (SCC) of $300/tonne, training the largest model creates about $85k worth of GHG emissions, against an actual training expense of $1-3M.

So there is almost nobody who derives enough value from LLMs to financially justify their investment but not enough value to morally justify the emissions.

You can account for this by using an unusually high SCC estimate (as I did). Also, the beneficiaries of LLMs are not just company shareholders but also the users, who can be anyone in the world with internet access.
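The energy arithmetic can be checked in a few lines. This is a minimal Python sketch that uses only the figures above (an SCC of $300/tonne, about $85k of emissions, a $1-3M training expense). The implied tonnage is derived from those two numbers rather than quoted, and the `verdicts` helper is a hypothetical framing of the "financially justified vs. morally justified" distinction.

```python
# Back-of-envelope check of the energy argument, using only the figures quoted
# in the text: SCC = $300/tonne, ~$85k of emissions, $1-3M training expense.
SCC_USD_PER_TONNE = 300
EMISSIONS_COST_USD = 85_000
TRAINING_COSTS_USD = (1_000_000, 3_000_000)

# Tonnage implied by the two quoted numbers (derived here, not quoted).
implied_tonnes = EMISSIONS_COST_USD / SCC_USD_PER_TONNE


def verdicts(value_usd: float, training_cost_usd: float) -> tuple[bool, bool]:
    """Return (financially justified, still justified after pricing in emissions)."""
    financially_ok = value_usd >= training_cost_usd
    morally_ok = value_usd >= training_cost_usd + EMISSIONS_COST_USD
    return financially_ok, morally_ok


if __name__ == "__main__":
    print(f"implied emissions: ~{implied_tonnes:.0f} tonnes CO2e")
    for cost in TRAINING_COSTS_USD:
        # The two verdicts disagree only when value lies in [cost, cost + emissions).
        band = EMISSIONS_COST_USD / cost
        print(f"${cost:,} training cost: emissions change the verdict only "
              f"if value is within {band:.1%} above cost")
```

The emissions only flip the decision when a model's value lands in a narrow band just above its training cost: about 8.5% above cost for a $1M run and about 2.8% for a $3M run.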

It would also benefit everyone more if a company like Google went full throttle on LLMs while spending just 0.2% of the revenue on protecting rainforests or funding cleantech. These authors should be lobbying for offsets, not restraint.

Also, according to Jeff Dean:

"It ignored too much relevant research — for example, it talked about the environmental impact of large models, but disregarded subsequent research showing much greater efficiencies." https://www.platformer.news/p/the-withering-email-that-got-an-ethical

I thought this topic had been done to death already. Aren't companies already censoring outputs? I think I heard somewhere that AI Dungeon does this with GPT-3? Plus, can't you just automatically search for and replace the bad words in the dataset?
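For a sense of what "search and replace the bad words" might look like, here is a minimal Python sketch of a word-list filter. The two-word `BLOCKLIST` is a hypothetical placeholder, and nothing here describes how AI Dungeon or any other company actually filters its data.

```python
import re

# Hypothetical placeholder blocklist; a real one would be far longer.
BLOCKLIST = {"darn", "heck"}

# \b anchors match whole words only; IGNORECASE catches "Heck" as well as "heck".
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(w) for w in sorted(BLOCKLIST)) + r")\b",
    re.IGNORECASE,
)


def mask_words(text: str, mask: str = "[removed]") -> str:
    """Replace every blocklisted word in the text with a mask token."""
    return _PATTERN.sub(mask, text)


def filter_documents(docs: list[str]) -> list[str]:
    """Alternative: drop any document that contains a blocklisted word."""
    return [d for d in docs if not _PATTERN.search(d)]
```

For example, `mask_words("Heck, that darn cat")` gives `"[removed], that [removed] cat"`, while `"heckle"` is left alone because of the word boundaries. Masking words and dropping whole documents are two different design choices; either way, a word list only catches the listed words, not the meaning of the surrounding text.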

It is also a problem that people have. But the fact that people are occasionally racist doesn't mean we should have second thoughts about creating new people.

I'm pretty sure this is not fundamentally different from other, nonpolitical cases of language evolution, and I'd expect the engineers who build these models to know how to make them reflect the language of 2020 rather than that of 2015. Am I wrong?
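One way engineers could tilt a model toward recent usage is to sample newer training documents more often. This is a minimal Python sketch of recency-weighted sampling, assuming each document carries a publication year; the exponential decay and the three-year `half_life_years` are hypothetical choices for illustration, not a description of how any real model was trained.

```python
import random
from typing import Optional, Sequence, Tuple

# Hypothetical corpus record: (publication year, text).
Document = Tuple[int, str]


def recency_weight(year: int, current_year: int = 2020,
                   half_life_years: float = 3.0) -> float:
    """Exponential decay: a document's weight halves every `half_life_years`."""
    age = max(0, current_year - year)  # treat future-dated docs as current
    return 0.5 ** (age / half_life_years)


def sample_documents(corpus: Sequence[Document], k: int,
                     rng: Optional[random.Random] = None) -> list:
    """Draw k documents with replacement, favoring recent ones."""
    if rng is None:
        rng = random.Random()
    weights = [recency_weight(year) for year, _ in corpus]
    return rng.choices(corpus, weights=weights, k=k)
```

With these settings, in `sample_documents([(2015, "older text"), (2020, "newer text")], k=5)` the 2015 document gets weight 0.5 ** (5 / 3), about 0.31, so it is drawn roughly a third as often as the 2020 one. Older text still contributes, just less.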

Plus, when you get down to it, this is a complaint that the AI may sound like a lot of offline, slightly out-of-touch people, which strikes me as benign.

Interpreted another way, perhaps the authors want LLMs to be deliberately made to embrace the latest woke language even before most people are using it.

That would be incredibly contentious social engineering.

This idea makes some sense, but it is equally true of other media, human translators, and so on. Plus, I'm not sure it's a bad thing. Language standardization can make sense. English speakers may not be used to this, but some languages even have bodies that do it officially.

Regardless, LLMs provide an opportunity for so many more people to translate and generate content that they may actually cause language fragmentation reflecting a diversity of newly empowered groups.

Also, the contrast between these two paragraphs is deliciously ironic. Which is it: do you want AI language to reflect rich-country trends like BLM and MeToo, or not? I hope the authors were aware of this and addressed it!

Yes, though I wonder whether this is a good thing: how much do we trust Google to set norms for how we write? A large dataset could help compel them to reflect humanity rather than their own ideology. (This is a tough issue where either side could make real arguments.)

I hope the actual paper offers something better than philosophical pedantry about the nature of language. Are these other model ideas really more beneficial, or more tractable?

Also, the private sector doesn't have a fixed pie. If companies make money on LLMs, that could allow them to spend even more money developing other models.

Humans can write bullshit too. There are bad self-help bloggers and translators out there. Should we have second thoughts about making new humans?

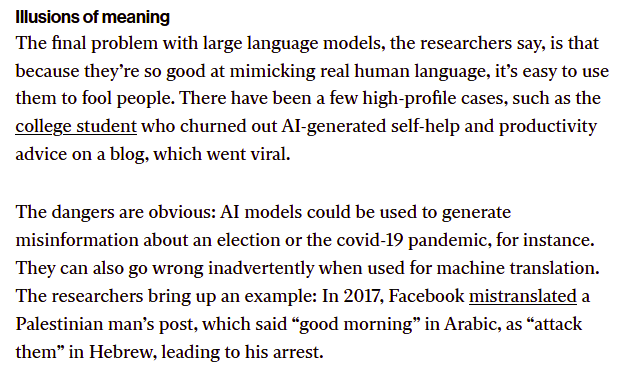

There are real risks with mass-scale content generation, but at the same time people could adjust to become more discerning.

I think we should worry less about people not realizing they're reading AI-generated text and more about people being such uncritical consumers of bullshit that they can be persuaded by bad sources, whether GPT-3 or a variety of humans.

And of course there may be a point where AI actually has good ideas and people should just listen to it.

Basically, either AI is smart in which case we should listen to it, or it's dumb in which case the people listening to it would otherwise be listening to dumb humans.

To be clear, just because AI is better than humans at something doesn't mean we shouldn't try to improve it even more. But it does mean we shouldn't delay deploying and using it now, and delay is what the authors appear to be arguing for.

And it does suggest that we should frame these problems in a more positive manner, i.e. "look how much better the future can be if we try," rather than sounding an alarm that software will "perpetuate harm without recourse."

Conclusion: again, I'm not commenting on the drama of her termination, and I can't say whether the paper is truly of poor quality, but based on this summary, its arguments do not look convincing. Large language models are good!

