Having gone a little further down this rabbit hole, I want to nuance this a bit. Specifically, I'm better informed re: the nature of the beef Gebru has with LeCun. This is difficult to summarize in a thread, but I am FASCINATED by it & almost want to quit everything & write a book. https://twitter.com/jonst0kes/status/1334714604904177666

This LeCun vs. Gebru dispute over ML & bias closely resonates with the police defunding debate. In fact, on some meaningful level, these are kind of the same debate. But first let me unpack.

LeCun is saying that bias in algos is the result of a kind of GIGO (garbage in, garbage out) problem. To oversimplify, if you train a face recognition algo on a dataset full of white men, then it has problems pattern matching the face of a black woman.

So LeCun says that this is not the fault of the ML researchers or the algos -- it's the fault of the engineers who design the datasets that the algos are then trained on for real-world use. He's trying to punt the blame for the bias down a peg. Basically this here:

What Gebru & her allies push back with is that the ML researchers have moral & professional culpability for the fact that their algos are being fed biased datasets that produce crappy outcomes for minorities & reinforce systemic racism etc.

So what should the ML researchers do to address this, & to make sure that the algos they produce aren't trained to misrecognize black faces & deny black home loans etc? Well, what LeCun wants is a fix -- procedural or otherwise. Like maybe a warning label or a protocol.

But what Gebru et al. want is something bigger: they want ML researchers to not be a bunch of white dudes. In other words, they do not want a fix that leaves a bunch of white dudes designing the algos that govern whether black ppl can get a loan, even if the training data is perfect.

Rather, they want Black people, women, womxn, latinx, enby, & the whole rainbow of folx to be designing the algos that decide whether a black family can get a loan. In other words, the point is not the loan -- because that's a pretty simple patch via the dataset. No...

...the point is to eliminate the entire field as it's presently constructed, & to reconstitute it as something else -- not nerdy white dudes doing nerdy white dude things, but folx doing folx things, where some algos also pop out, along with who knows what else, but it'll be inclusive!

You might call Gebru's project: Abolish AI. Or maybe Defund AI. It resonates with the police stuff, in that the point is not to patch a system via process or protocol. It's to have a /totally different group of ppl/ doing whatever things this group of oppressors was doing...

...under the expectation that the outcome would be better because different people with different interests & backgrounds & hereditary characteristics etc. would produce outcomes that are less white-dude-centric.

There is a direct analog between the "Black in AI" work and the police abolition work, then. The complaint from both groups is that patching/fixing the existing guild or profession (cops, AI) to produce better outcomes, while leaving it looking the same, is a non-starter.

What they want is for people who look & think & speak like LeCun -- who insist on the presumption of good faith, norms of civility, evidence, process, protocol -- to be pushed out, & for folks who look/think/speak like Gebru (i.e. successor ideology discourse norms) to dominate.

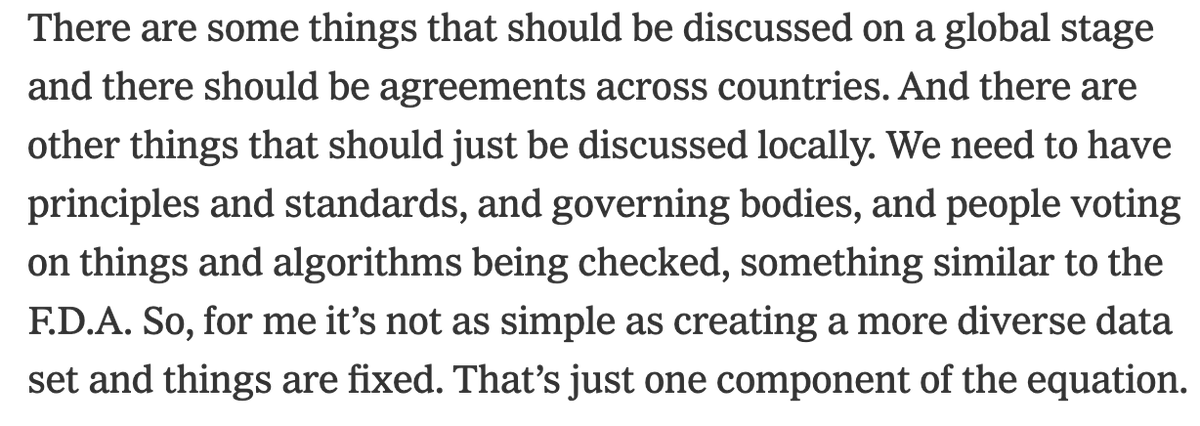

Gebru & co. also want veto power over the kinds of uses to which AI is put. For instance, the gender recognition stuff -- they'd like to be able to say "no, don't use AI to divide people into male/female because that's violence. Any task or app that would do that is problematic."

This is very much like the Ibram X. Kendi plan to have some committee oversee all of society to make sure outcomes are equal. It's a straight-up power play. They want to be able to vet which ends ML can even be used for based on a priori "ethical" considerations. Gebru:

The thing I struggle with is that this push to have applications for ML somehow approved & tracked by an FDA-like entity -- I'm not certain it's obviously wrong. ML is a bit like nuclear -- great stuff, but very powerful & with great potential to be used for evil at scale.

And like nuclear, we are gonna fight and fight and fight over who gets to call the shots. Who gets to use it and to what end and how much and under what conditions. This "Black in AI" thing is just the opening salvo in that conflict.

Anyway, the TL;DR here is this: LeCun made the mistake of thinking he was in a discussion with a colleague about ML. But really he was in a discussion about power -- which group w/ which hereditary characteristics & folkways gets to wield the terrifying sword of AI, & to what end.

As a coda to this, you know who is not struggling with these issues around AI? The Chinese. The CCP is going to push these technologies of mass control as far as they will go, and this very well may give them an edge that means total dominance for the next 100 yrs.