Happy to share "Revisiting Rainbow" w/ @JS_Obando where we argue small/mid-scale envs can promote more insightful & inclusive deep RL research.

Paper: http://arxiv.org/abs/2011.14826

Blog: http://bit.ly/2VkPJ4r

Code: http://bit.ly/3mrCyuD

Video: http://bit.ly/2I0BAXk

1/X

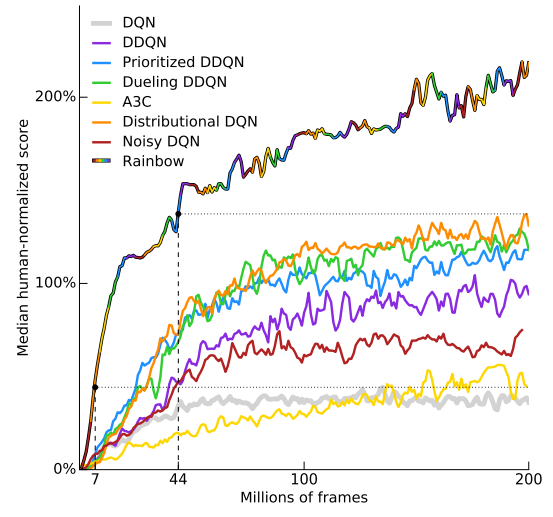

New RL methods are typically eval'd on standard envs (ALE, MuJoCo, DM ctrl suites, etc).

While good for standards, they implicitly establish a min amount of compute power for work to be recognized as a valid scientific contribution.

Underprivileged newcomers thus face enormous hurdles!

2/X

In this work we argue for a need to change the status quo in evaluating and proposing new research, to avoid exacerbating the barriers to entry for newcomers from underprivileged communities. We do so by revisiting the Rainbow algorithm ( http://arxiv.org/abs/1710.02298 )

3/X

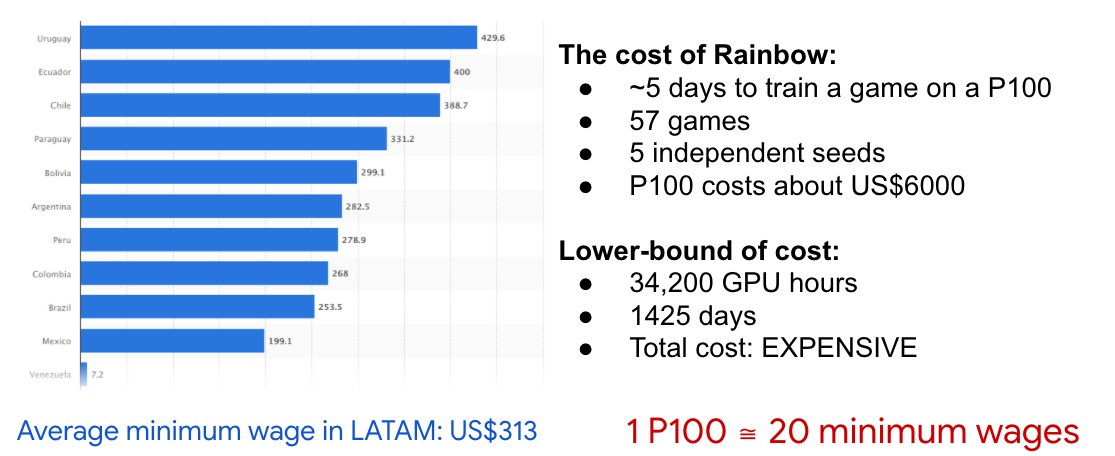

The Cost of Rainbow

Although the value of the Rainbow agent is undeniable, this result could only have come from a large research laboratory with ample access to compute.

The figure below puts things in perspective, when compared against average minimum wages in LATAM:

4/X

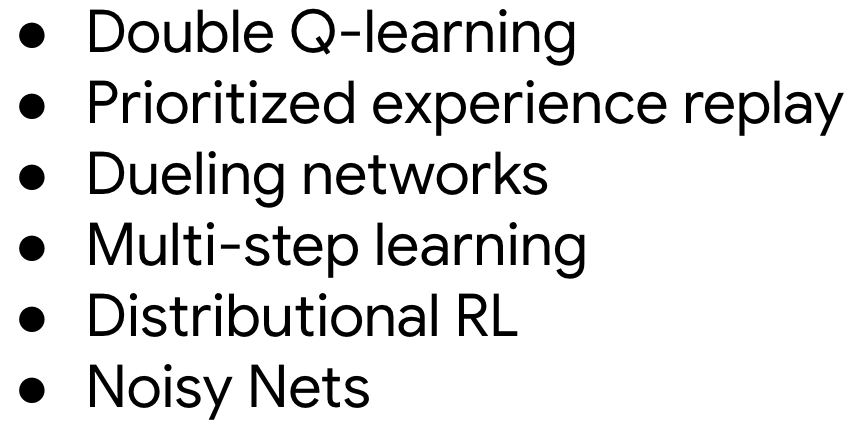

Revisiting Rainbow

As in the original paper, we evaluate the effect of adding various algorithmic components to the original DQN, but run the evaluation on 4 classic control environments and on MinAtar ( http://arxiv.org/abs/1903.03176 ), a set of "miniature" Atari games.

5/X

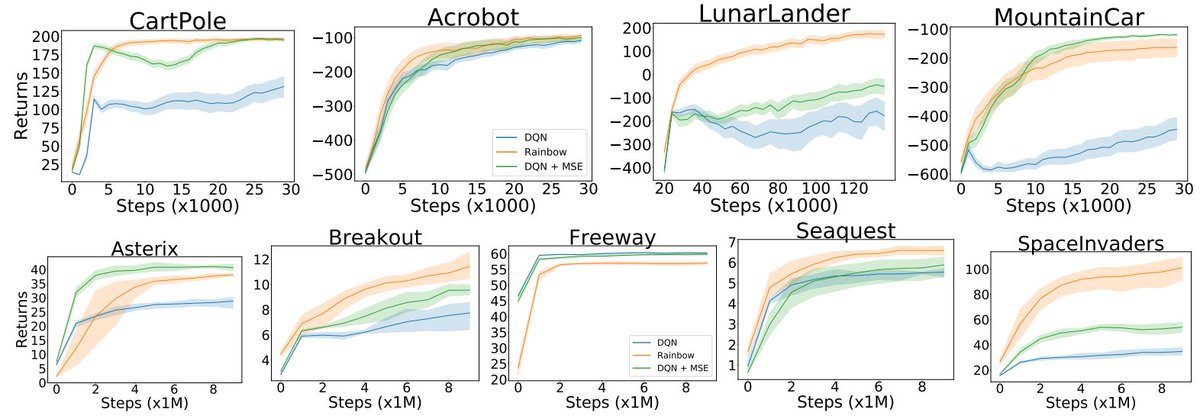

In classic control, in aggregate, addition of components helps. What's interesting is when distr. RL is added *without any other components*, performance is mostly unchanged or hurt! (Top row)

But *removing* distr. RL from the full Rainbow is what hurts the most. (Bottom row)

6/X

In MinAtar, distr. RL is the most important addition, and *doesn't require being coupled with another addition*.

Does distr. RL coupled with ConvNets avoid the pitfalls we observed in classic control (which don't use ConvNets)?

7/X

Conclusion

In aggregate, we would reach the same conclusions as the original Rainbow paper: combining all algos is best.

However, our results highlight important details in the combinations of the different algos and network arch's that merit further investigation.

8/X

Beyond the Rainbow

We can leverage the low cost of small-scale envs to conduct a more thorough investigation.

We begin by questioning the choice of Huber loss for the original DQN. Surprisingly, MSE outperforms Huber across the board!

Important caveat: this is with Adam.

9/X
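
For readers less familiar with the two losses, here is a minimal NumPy sketch of how they differ on a temporal-difference error. The function names, the 0.5 scaling on MSE, and the `delta=1.0` threshold are illustrative assumptions, not taken from the paper's code.

```python
import numpy as np

def mse_loss(td_error):
    """Squared-error loss on the TD error (scaled by 0.5 by convention)."""
    return 0.5 * np.square(td_error)

def huber_loss(td_error, delta=1.0):
    """Huber loss: quadratic for |error| <= delta, linear beyond it."""
    abs_err = np.abs(td_error)
    quadratic = np.minimum(abs_err, delta)  # part of the error inside the threshold
    linear = abs_err - quadratic            # part of the error beyond the threshold
    return 0.5 * np.square(quadratic) + delta * linear

# Hypothetical TD errors, chosen only to show the two regimes.
td_errors = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
print("MSE:  ", mse_loss(td_errors))
print("Huber:", huber_loss(td_errors))
```

The two agree for small errors and diverge for large ones, where Huber grows linearly and so caps the gradient magnitude at `delta`.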

Network architectures

We perform broad sweeps across network architectures and find both DQN and Rainbow to be fairly robust to the choice of number of layers and hidden units.

10/X

Batch sizes

Another often overlooked hyper-parameter in training RL agents is the batch size. What we found is that while for DQN (top row) it is sub-optimal to use a batch size below 64, Rainbow (bottom row) seems fairly robust to both small and large batch sizes.

11/X

QR-DQN

Given the importance of distr. RL in Rainbow, we look at using QR-DQN for repr. the distrib. ( http://arxiv.org/abs/1710.10044 ).

We get comparable results, but less sensitivity to learning rates!

A very interesting finding is that QR-DQN seems to *not* play well with dueling nets!

12/X
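
As a rough illustration of what "using QR-DQN to represent the distribution" means: the return distribution is summarized by a fixed number of quantile values, trained with a quantile regression loss. The sketch below uses the plain quantile (pinball) loss in NumPy; QR-DQN itself uses a Huber-smoothed variant, and the function names here are hypothetical.

```python
import numpy as np

def quantile_midpoints(num_quantiles):
    """Midpoints tau_i = (2i + 1) / (2N) of N equal-probability bins."""
    return (2 * np.arange(num_quantiles) + 1) / (2.0 * num_quantiles)

def quantile_regression_loss(quantiles, target_samples):
    """Plain pinball loss between predicted quantiles and target samples.

    quantiles: shape (N,), predicted quantile values of the return.
    target_samples: shape (M,), samples from the target return distribution.
    """
    quantiles = np.asarray(quantiles, dtype=float)
    target_samples = np.asarray(target_samples, dtype=float)
    taus = quantile_midpoints(len(quantiles))
    # u[i, j] = target_j - quantile_i
    u = target_samples[None, :] - quantiles[:, None]
    # Asymmetric weight: tau_i when the target is above the estimate, tau_i - 1 when below.
    weight = taus[:, None] - (u < 0).astype(float)
    # Average over target samples, then sum over quantiles.
    return (u * weight).mean(axis=1).sum()
```

Each quantile estimate is penalized asymmetrically, so minimizing the loss pushes the i-th output toward the tau_i-quantile of the target distribution rather than toward its mean.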

On a limited computational budget we were able to reproduce, at a high level, the results from the Rainbow paper and uncover some new and interesting results.

We believe these less computationally intensive envs lend themselves well to a more critical and thorough analysis.

13/X

Worth noting we initially ran 10 indep. trials for classic control envs, but conf. intervals were wide and inconclusive; boosting the indep. trials to 100 gave us tighter conf. intervs w/ little extra compute. This would be impractical for most large-scale environments!

14/X
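
To see why more independent trials tighten the intervals, here is a small sketch using a normal-approximation 95% confidence interval for the mean return. The data is synthetic and the helper name is hypothetical; this is not the paper's evaluation code, and the interval method used there may differ.

```python
import numpy as np

def mean_confidence_interval(returns, z=1.96):
    """Normal-approximation 95% CI for the mean of independent trial returns."""
    returns = np.asarray(returns, dtype=float)
    mean = returns.mean()
    # Standard error of the mean shrinks with the square root of the trial count.
    stderr = returns.std(ddof=1) / np.sqrt(len(returns))
    return mean - z * stderr, mean + z * stderr

rng = np.random.default_rng(0)
# Synthetic stand-in for per-trial final returns; NOT data from the paper.
trials = rng.normal(loc=150.0, scale=40.0, size=100)

for n in (10, 100):
    lo, hi = mean_confidence_interval(trials[:n])
    print(f"{n:3d} trials: CI width = {hi - lo:.1f}")
```

Because the standard error scales as 1/sqrt(n), going from 10 to 100 trials shrinks the interval by roughly a factor of 3 for the same per-trial variance.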

We are by no means calling for less emphasis to be placed on large benchmarks. We're simply urging researchers to consider smaller envs as a valuable tool in their investigations, and reviewers to avoid dismissing empirical work that focuses on these smaller problems.

15/X

By doing so, we believe we will both get a clearer picture of the research landscape and reduce the barriers for newcomers from diverse, and often underprivileged, communities. These two points can only help make our community and our scientific advances stronger.

16/X
