Researchers make terrible futurists. Really.

Why?

Several reasons:

(1) They spend their days trying to solve really hard problems, so 99.9% of what they experience is "this is hard" and "it doesn't work yet". This biases them toward saying that things are hard and won't work. https://twitter.com/egrefen/status/1333501622895439874

(2) Researchers working on topic X are not trained to think about timelines and predictions. This is true even in very math-heavy fields. They're also not trained to think about consequences.

(3) There is a culture within science of playing down the significance of both your own work and the field as a whole. It makes you sound serious and erudite, and it distinguishes you from the crank on the street corner talking about quantum healing.

This is a bias, though.

Ray Kurzweil's broad thesis about how AI would develop was right. Back in 2007-2010, the idea that the "amount" of compute power you had would determine how powerful an AI you could build was controversial, and people scoffed at it.

Nowadays small fish simply cannot compete with the likes of @Deepmind or @OpenAI, in part because they don't have the compute power. AlphaZero took 500,000 TPU-hours. GPT-3 took something like 3 million V100 GPU-hours.
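
For a sense of scale, a GPU-hour figure can be turned into an implied per-GPU throughput. A minimal sketch, assuming GPT-3's reported training compute of about 3.14e23 FLOPs and a V100 tensor-core peak of about 125 TFLOP/s (neither number comes from this thread):

```python
# Sketch: what average per-GPU throughput does a GPU-hour figure imply?
# ASSUMPTIONS (not from the thread): GPT-3's reported training compute of
# ~3.14e23 FLOPs, and a V100 mixed-precision tensor-core peak of ~125 TFLOP/s.

SECONDS_PER_HOUR = 3600


def implied_throughput(total_flops: float, device_hours: float) -> float:
    """Average sustained FLOP/s per device implied by a total compute budget."""
    return total_flops / (device_hours * SECONDS_PER_HOUR)


GPT3_TOTAL_FLOPS = 3.14e23  # assumption: figure reported in the GPT-3 paper
GPT3_V100_HOURS = 3e6       # the thread's rough "3 million hours"
V100_PEAK_FLOPS = 125e12    # assumption: V100 tensor-core peak

per_gpu = implied_throughput(GPT3_TOTAL_FLOPS, GPT3_V100_HOURS)
utilization = per_gpu / V100_PEAK_FLOPS

print(f"implied sustained throughput: {per_gpu / 1e12:.1f} TFLOP/s per GPU")
print(f"implied fraction of peak: {utilization:.0%}")
```

Under these assumptions it works out to about 29 TFLOP/s per GPU, roughly 23% of peak, so "something like 3 million hours" is at least in a believable range for a large distributed training run.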

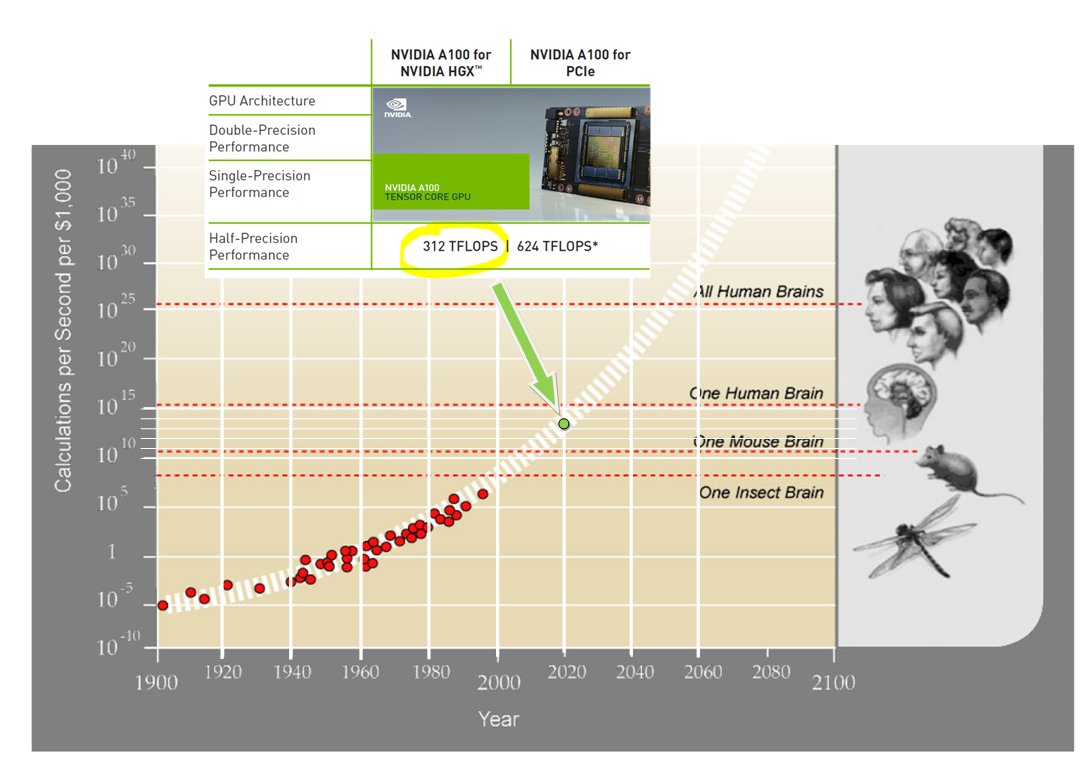

I just looked at The Singularity is Near from 2005 and updated this graph of compute power with the new Nvidia A100.

Surprisingly, it is exactly where Kurzweil predicted it would be, based on data that only went as far as 2000.

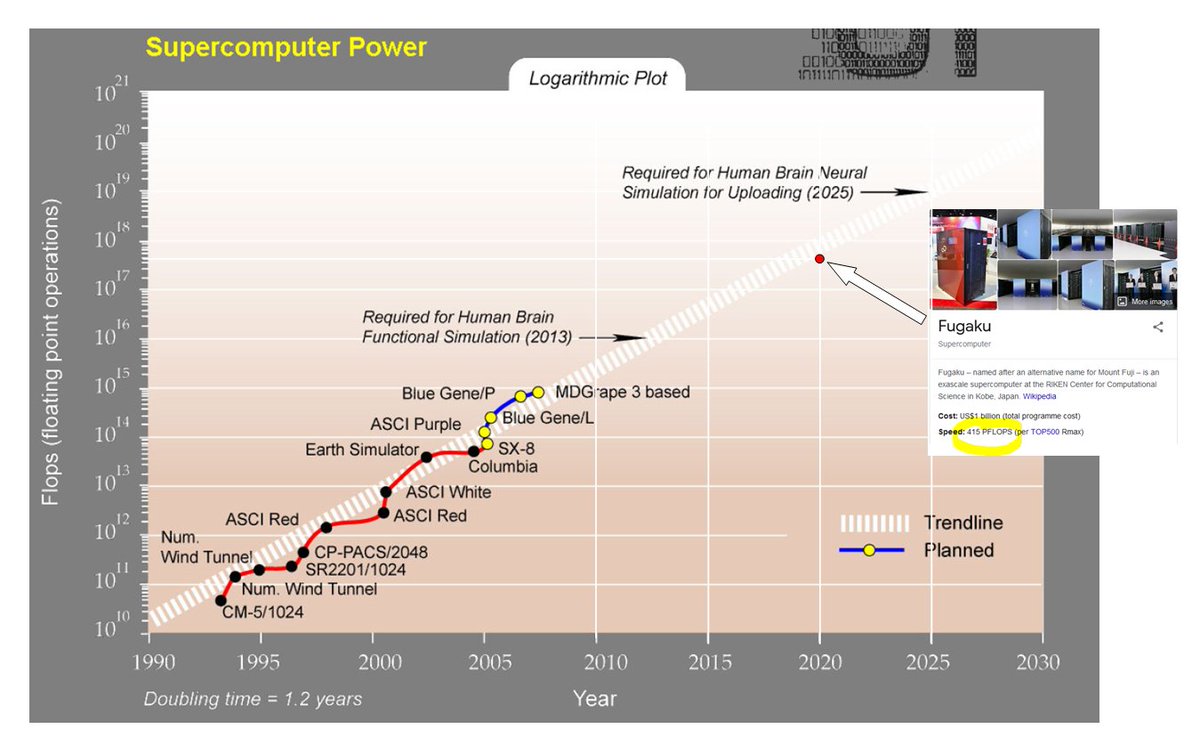

And here is the graph for supercomputer power, updated with the latest TOP500 leader, Fugaku. It's eerily accurate.

The consequences of this progress are starting to show. The GPT-3 language model just sort of writes poetry and babbles about any subject.

AlphaZero left human chess behind and crushed all other chess computers with zero human training data, learning purely from self-play. Like chess from an alien planet.

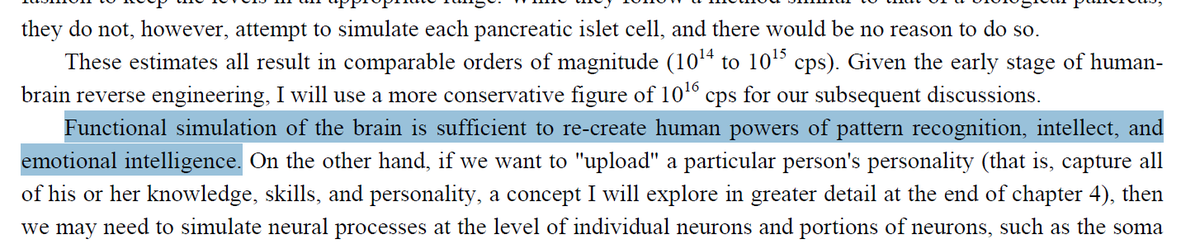

Kurzweil's chart implies that a system like the DGX-A100, which costs about £200,000, gives you almost exactly enough compute power for a "functional human brain simulation".

https://www.scan.co.uk/3xs/configurator/nvidia-dgx-a100-ai-supercomputer-appliance
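
The "almost exactly enough" claim is a comparison of two numbers, so here is the arithmetic as a rough sketch. It assumes Kurzweil's often-quoted estimate of about 1e16 calculations per second for functional brain simulation and Nvidia's headline "5 petaFLOPS" AI figure for the DGX A100; both are assumptions not stated in this thread, and the Nvidia number is a low-precision, sparsity-assisted peak rather than sustained general-purpose throughput.

```python
# Sketch: compare a DGX A100's headline throughput with Kurzweil's estimate
# for functional human brain simulation.
# ASSUMPTIONS (not from the thread): ~1e16 calculations/second for the brain,
# and Nvidia's headline ~5e15 "AI" FLOP/s for the DGX A100.

import math

BRAIN_FUNCTIONAL_CPS = 1e16   # assumption: Kurzweil's functional-simulation estimate
DGX_A100_PEAK_FLOPS = 5e15    # assumption: Nvidia's headline AI throughput
DGX_A100_PRICE_GBP = 200_000  # price quoted in the thread

ratio = DGX_A100_PEAK_FLOPS / BRAIN_FUNCTIONAL_CPS
orders_of_magnitude_short = -math.log10(ratio)
gbp_per_peak_tflops = DGX_A100_PRICE_GBP / (DGX_A100_PEAK_FLOPS / 1e12)

print(f"fraction of brain estimate: {ratio:.2f}")
print(f"shortfall: {orders_of_magnitude_short:.2f} orders of magnitude")
print(f"cost: £{gbp_per_peak_tflops:.0f} per peak TFLOP/s")
```

With these inputs the box lands at half the estimate, about 0.3 orders of magnitude short. On a log chart spanning many orders of magnitude that sits essentially on the line, though the comparison mixes a marketing peak with a very rough biological estimate.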

Of course, that doesn't mean we'll make it happen instantly. Just because the power is there doesn't mean we'll know how to use it optimally.

There's work showing that the compute required to perform various ML tasks goes down over time, often by a lot: https://openai.com/blog/ai-and-efficiency/

https://arxiv.org/abs/2005.04305

"We show that the number of floating-point operations required to train a classifier to AlexNet-level performance on ImageNet has decreased by a factor of 44x between 2012 and 2019."

At that rate of algorithmic improvement, we might imagine that current algorithms are hundreds or thousands of times less efficient than they could eventually be.
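
The "at that rate" step can be made concrete. A minimal sketch, assuming the improvement is a smooth exponential between 2012 and 2019 (the quote gives only the overall 44x factor, so a constant rate is an assumption here):

```python
import math


def doubling_time_months(factor: float, years: float) -> float:
    """Months per doubling of efficiency implied by an overall improvement factor."""
    return years * 12 / math.log2(factor)


def years_to_reach(target: float, factor: float, years: float) -> float:
    """Years to reach `target`x if improvement continues at the same exponential rate."""
    return years * math.log(target) / math.log(factor)


# From the quoted paper: 44x fewer training FLOPs for AlexNet-level accuracy, 2012 -> 2019.
FACTOR, YEARS = 44, 7

print(f"efficiency doubles every {doubling_time_months(FACTOR, YEARS):.1f} months")
for target in (100, 1000):
    print(f"{target}x takes about {years_to_reach(target, FACTOR, YEARS):.1f} years at that rate")
```

This gives a doubling time of about 15.4 months, consistent with the roughly 16 months quoted in the OpenAI post, and about 8.5 and 12.8 years to reach 100x and 1000x. So "hundreds or thousands of times" corresponds to roughly another decade of progress at the historical rate, if that rate holds.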

The DGX-A100 may have enough raw compute to perform a functional human role, but probably not with today's algorithms.

If you do buy this, don't forget the £11.99 for delivery though! Gotta include that lol
