It's that time of year again, when universities run surveys asking students what they thought of their instructors. They use the results as "data" regarding teaching effectiveness.

This is a completely unscientific and unjust practice and it should stop. A thread.

There is now a tremendous body of research about what student surveys do and do not measure. Results correlate well with how much students ENJOY the class. They correlate only weakly, if at all, with teaching EFFECTIVENESS.

There is also evidence of various forms of bias in student surveys — against women, against visible minorities, against people with unfamiliar accents, and against less attractive people.

(With respect to bias, the results do seem a bit complex: some studies have shown a preference for women instructors in some contexts. This is not surprising, because racist and sexist biases do not operate in super simple ways. For instance, people like being nurtured by women.)

The bias issue makes this an equity issue, but the fundamental scientific problem with using student surveys to measure teaching effectiveness is that they just don't accurately measure it.

These two problems, bias and inaccuracy, are logically independent, but they reinforce each other in practice. A measure could be inaccurate with no bias (totally random results, say) or somewhat accurate but biased (women consistently get docked 1 point). Both problems exist here.

The bias issue tends to get more attention, for understandable reasons, but the slam-dunk case against using surveys this way is the accuracy one.

The best available evidence indicates NO LINK between evaluation and teaching effectiveness. https://ocufa.on.ca/assets/RFA.v.Ryerson_Stark.Expert.Report.2016.pdf

I think it's completely reasonable for university administrators to measure, and be interested in, student experience of their courses. If students love a course or hate a course, that's good to know. So I think it's fine to collect data like that.

But it's not at all fine to pretend data about student enjoyment is data about teaching effectiveness. Our job is to teach, not to entertain. One can be good at one without being good at the other.

Note also the obvious fact that how much students enjoy a course will depend a TON on factors outside the instructor's control, such as:

* the difficulty of the material

* the time of day the course meets

* whether it's a required course

* whether their classmates are nice

These factors influence answers to all questions, even ones that ask about specific aspects unrelated to them ("how prepared was the instructor for class?").

So aggregating student data and comparing it across courses or individuals is not a reasonable way to measure teaching effectiveness. ("Instructor A averages a 4.4 and B averages 4.7, so B is a better teacher.")

This kind of procedure should not be used to make career decisions!

(Surveys CAN be useful to instructors to see how different strategies are perceived by their students across terms. I do learn things that are helpful for me in my future course plans. But that's very different from my employer learning whether I'm any good at teaching.)

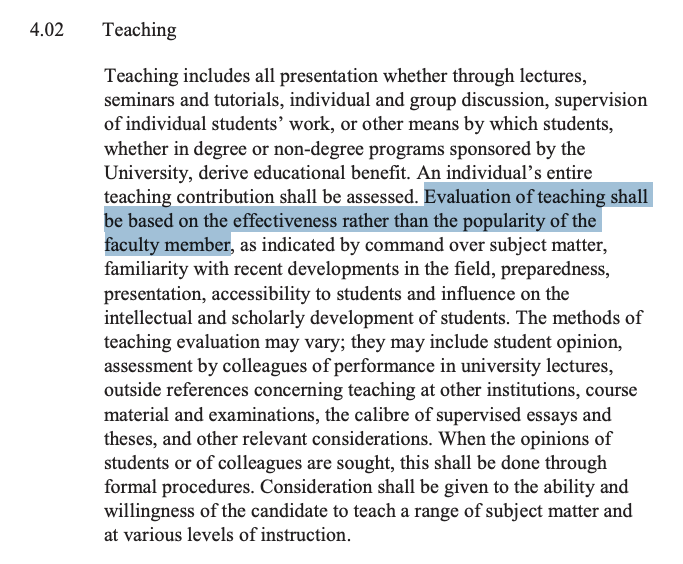

For this reason, the use of student surveys to measure teaching effectiveness in employment contexts is arguably inconsistent with the @FacultyUbc Collective Agreement language highlighted in the image. Nevertheless, this remains standard practice. https://www.facultyassociation.ubc.ca/assets/media/Faculty_CA_2019-2022_FINAL.pdf

What should any of us do about this?

Fundamentally, this is an administration/employment issue. My union fought hard this year in bargaining to explicitly prohibit UBC from using student surveys for promotion and reappointment decisions. We didn't get it; the fight must continue.

What of individuals?

Contingent/untenured instructors could, I guess, consider sacrificing teaching effectiveness for student enjoyment by teaching easier material, grading more leniently, or spending more of their time on makeup and less on teaching prep.

(I wouldn't blame them for this choice under the circumstances but I think it's very regrettable that they're incentivised in this way!)

Students could look at the data and try to make a point of being less biased and more accurate, I guess. But this is a really hard thing to do!

And — and this is nothing against students, it's just a fact about knowledge/experience — most students just aren't in a position to know how effectively they were taught! How are my Intro students supposed to know, e.g., how familiar I am with recent developments in my field??

One could conscientiously refuse to complete course evaluations. But this would have the effect of amplifying the voices of students who do complete them, who are presumably less thoughtful about these issues than the student whose decision I'm now considering.

Also, again, I think there is some good information to be gained from the surveys, so I don't want people to opt out. I DO want to know what my students thought.

I just don't want employers to pretend this is data about teaching effectiveness.

I've seen some students complete the evaluations and leave a comment within them, saying that they are an invalid measure of teaching effectiveness and should not be used the way they are used. That's probably what I would do.

But there's obviously no good solution at the individual level. This needs to be dealt with at an administrative level — either because the university decides itself that it is interested in making decisions based on good data instead of junk science, or via labour negotiations.

That's my thread, it's done.

Here are some more links backing up my claims about what the studies show. Dig in if you want to look into some of the studies yourself:

https://ocufa.on.ca/assets/OCUFA-SQCT-Report.pdf

https://www.asanet.org/sites/default/files/asa_statement_on_student_evaluations_of_teaching_feb132020.pdf

https://ocufa.on.ca/assets/RFA.v.Ryerson_Freishtat.Expert.Supplemental.Reports_2016.2018.pdf

Another good example: catching cheating https://twitter.com/jichikawa/status/1333302088118329349

"But students need a voice!" https://twitter.com/jichikawa/status/1333462148228411392
