New paper "Research Quality of Registered Reports Compared to the Traditional Publishing Model" by @cksoderberg Tim Errington @SchiavoneSays @julia_gb @FSingletonThorn @siminevazire Kevin Esterling and me.

Preprint: https://osf.io/preprints/metaarxiv/7x9vy

1/

We recruited 353 researchers to peer review papers. They evaluated 2 articles each, 1 of 27 RRs and 1 of 2 matched comparison articles (same author or same journal).

They evaluated articles on 19 outcome criteria, such as rigor & quality of methods, results, novelty, importance.

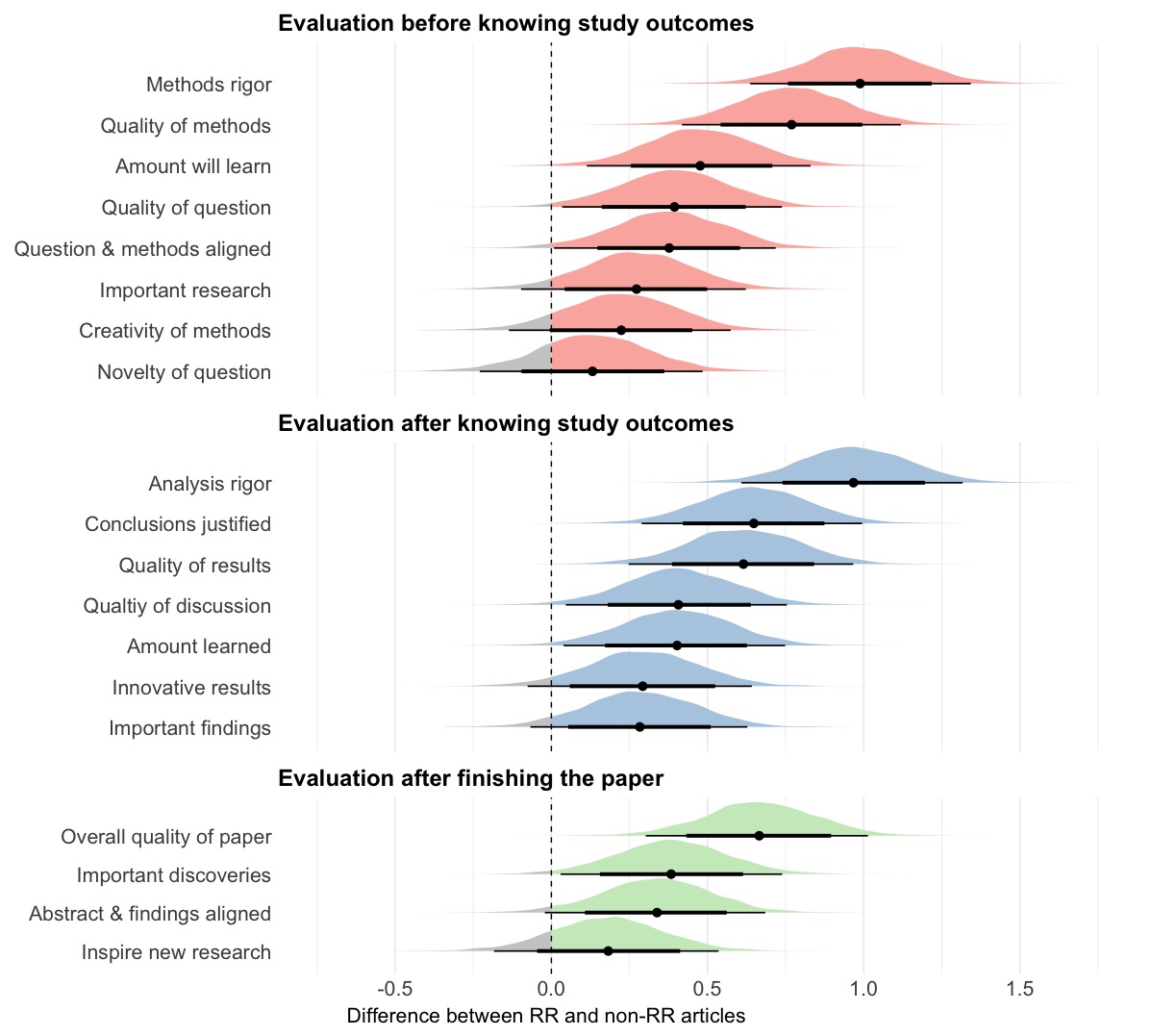

Registered Reports outperformed comparison articles slightly to strongly on every outcome criterion--some assessed before knowing the results, some after, and some evaluating the paper as a whole.

Figure shows Bayesian credible intervals for the 19 outcome criteria.

Registered Reports showed particularly strong performance on rigor and quality of methods/results/paper and justification of conclusions.

But RRs even matched or exceeded comparison articles on creativity, novelty, and importance in this sample. That surprised me.

This paper provides initial empirical evidence for the theorized benefits of the Registered Reports model. Beyond reducing publication bias, it appears to promote more rigorous research that addresses important questions. https://osf.io/preprints/metaarxiv/7x9vy

These data do not indicate whether those performance benefits come from the authors alone focusing on important questions and designing good methods, or from the reviewers helping to enhance quality in the review process. My money is on both.

Thank you to the 353 reviewers who contributed substantial effort to conducting these peer reviews! I hope this paper will spur many more investigations of costs and benefits of the RR model. I am particularly keen to do a randomized trial.

Project page: https://osf.io/aj4zr/
