Something that may sound odd at first:

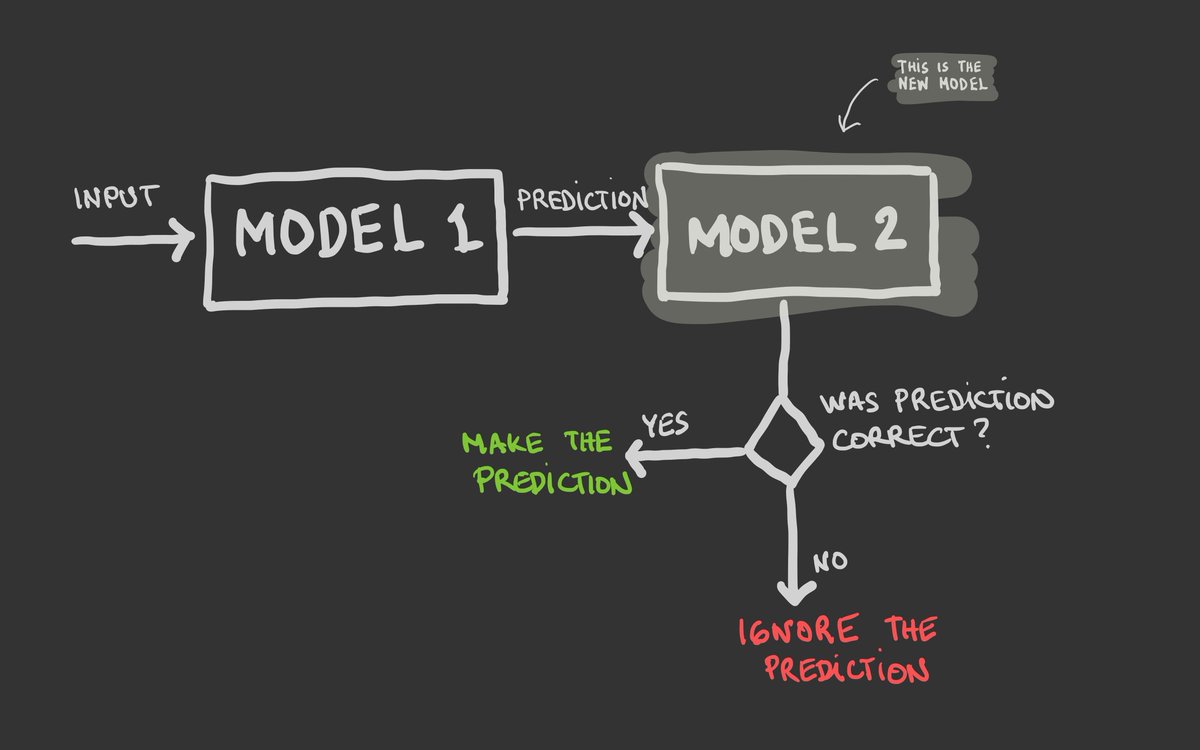

We created a Machine Learning model that predicts whether a prediction from another Machine Learning model is correct.

The combination of these models dramatically reduces mistakes when compared with the first model alone.

The first model makes predictions. Let's say it predicts the breed of the dog in the input image.

The second model looks at the prediction and decides whether we should trust that prediction or pass.

Basically, Model 2 is a bullshit detector.
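Here is a minimal Python sketch of how the two models could be wired together at inference time. `model1`, `model2`, `predict_with_guard`, and the 0.8 `threshold` are hypothetical names and values for illustration, not the actual system. Model 2 returns an estimated probability that Model 1's answer is right, and anything below the threshold becomes a pass.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Decision:
    label: Optional[str]  # None means "pass": we don't trust Model 1's answer
    trust: float          # Model 2's estimated probability that Model 1 is right


def predict_with_guard(
    image: Sequence[float],
    model1: Callable[[Sequence[float]], str],
    model2: Callable[[Sequence[float], str], float],
    threshold: float = 0.8,  # hypothetical cut-off, tuned per application
) -> Decision:
    label = model1(image)          # Model 1: classify the input (e.g. a dog breed)
    trust = model2(image, label)   # Model 2: how likely is this prediction correct?
    if trust >= threshold:
        return Decision(label, trust)
    return Decision(None, trust)   # pass instead of returning a likely mistake
```

Raising the threshold trades coverage for fewer mistakes: more inputs get a pass, but the answers that do come through are more likely to be right.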

Model 1 is a classification model. Model 2 acts as a discriminator. The two take very different approaches.

Model 1 tries to learn as much as possible to classify objects correctly.

Model 2 tries to learn as much as possible to recognize Model 1 mistakes.
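To learn Model 1's mistakes, Model 2 needs examples labeled with whether Model 1 got them right. One plausible way to build those examples, assuming a labeled dataset that Model 1 was not trained on, is sketched below. `build_discriminator_dataset` is a hypothetical helper; it only produces the target, not the full feature set Model 2 would use.

```python
from typing import Callable, List, Sequence, Tuple


def build_discriminator_dataset(
    inputs: Sequence[Sequence[float]],
    true_labels: Sequence[str],
    model1: Callable[[Sequence[float]], str],
) -> List[Tuple[Sequence[float], str, int]]:
    """Return (input, Model 1 prediction, target) rows; target is 1 if Model 1 was right."""
    rows = []
    for x, y in zip(inputs, true_labels):
        pred = model1(x)                      # what Model 1 says
        rows.append((x, pred, int(pred == y)))  # 1 = correct, 0 = a mistake to learn from
    return rows
```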

To train Model 2, we use a decently large set of features, but the core concept that we use is the historical performance of Model 1.

Imagine Model 2 asking this question: "When we got a similar input and Model 1 predicted this specific value, how did Model 1 do in the past?"
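A sketch of one way to compute that historical-performance feature, assuming each past input is stored as an embedding vector along with Model 1's prediction and whether it was correct. "Similar input" is approximated here with a k-nearest-neighbour search by Euclidean distance; the function name, `k`, and the 0.5 fallback `prior` are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

# Each record: (embedding of a past input, Model 1's prediction, 1 if correct else 0)
History = List[Tuple[Sequence[float], str, int]]


def historical_accuracy(
    embedding: Sequence[float],
    predicted: str,
    history: History,
    k: int = 5,
    prior: float = 0.5,
) -> float:
    """Fraction of the k most similar past inputs, with the same Model 1 prediction, that Model 1 got right."""
    # Only look at past cases where Model 1 predicted this same value.
    same_label = [(emb, ok) for emb, label, ok in history if label == predicted]
    if not same_label:
        return prior  # no history for this prediction: fall back to an uninformative prior
    # "Similar input" = closest embeddings by Euclidean distance.
    nearest = sorted(same_label, key=lambda rec: math.dist(rec[0], embedding))[:k]
    return sum(ok for _, ok in nearest) / len(nearest)
```

The resulting score would be just one input to Model 2 alongside its other features. If Model 1 keeps getting a certain region of inputs wrong, the score for that region and label stays low no matter how confident Model 1 sounds.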

Here is a specific example:

What would happen if Model 1 classifies animals but was never trained on images of zebras?

When Model 1 sees a zebra, it will probably output "Horse" with very high confidence.

Model 2's job is to catch this.
