As participants in #SICSS 2018, we were excited about using image recognition for social science research, but it didn't take long for us to run into substantial problems. So we analyzed 220,000 Twitter and Wikipedia images of U.S. Congress to investigate:

https://doi.org/10.1177/2378023120967171

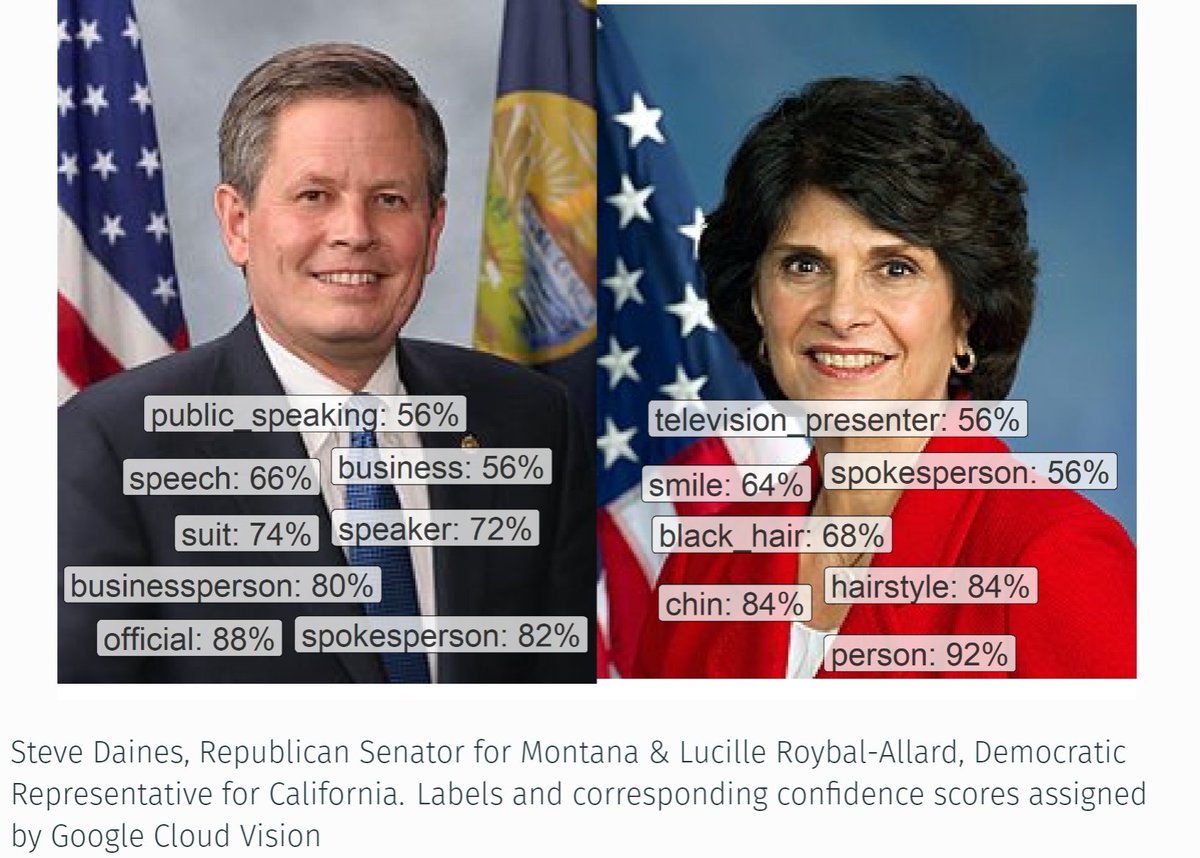

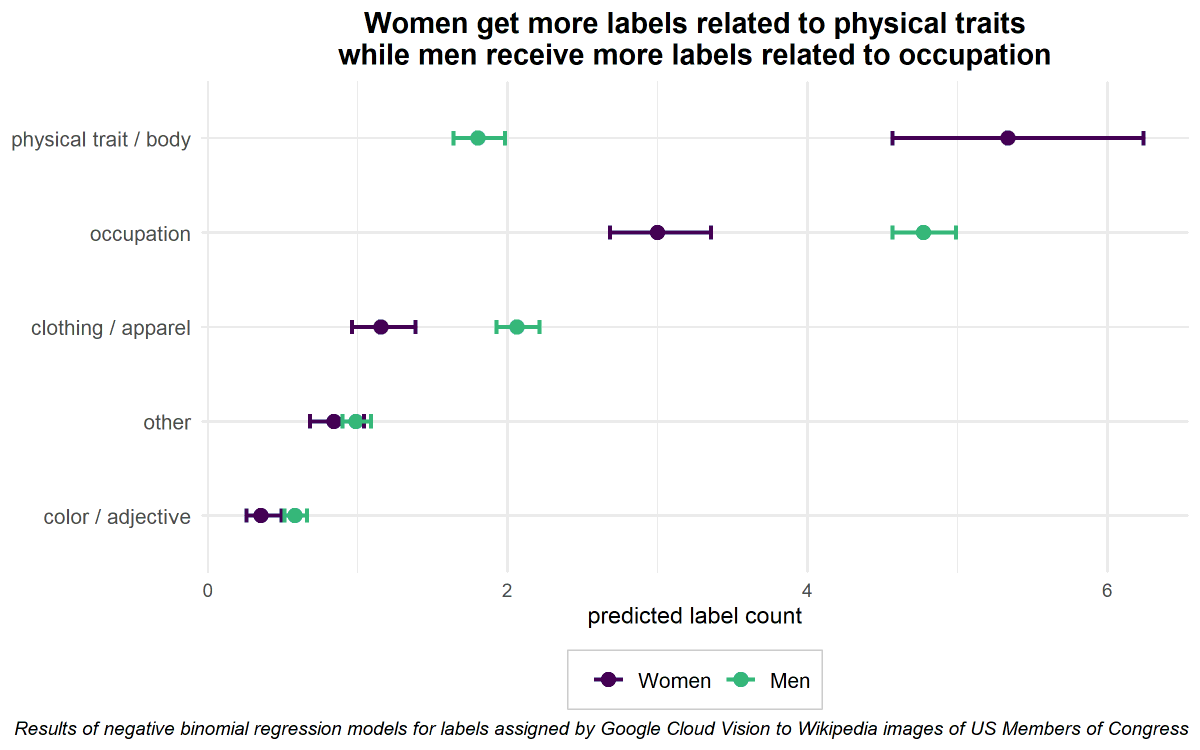

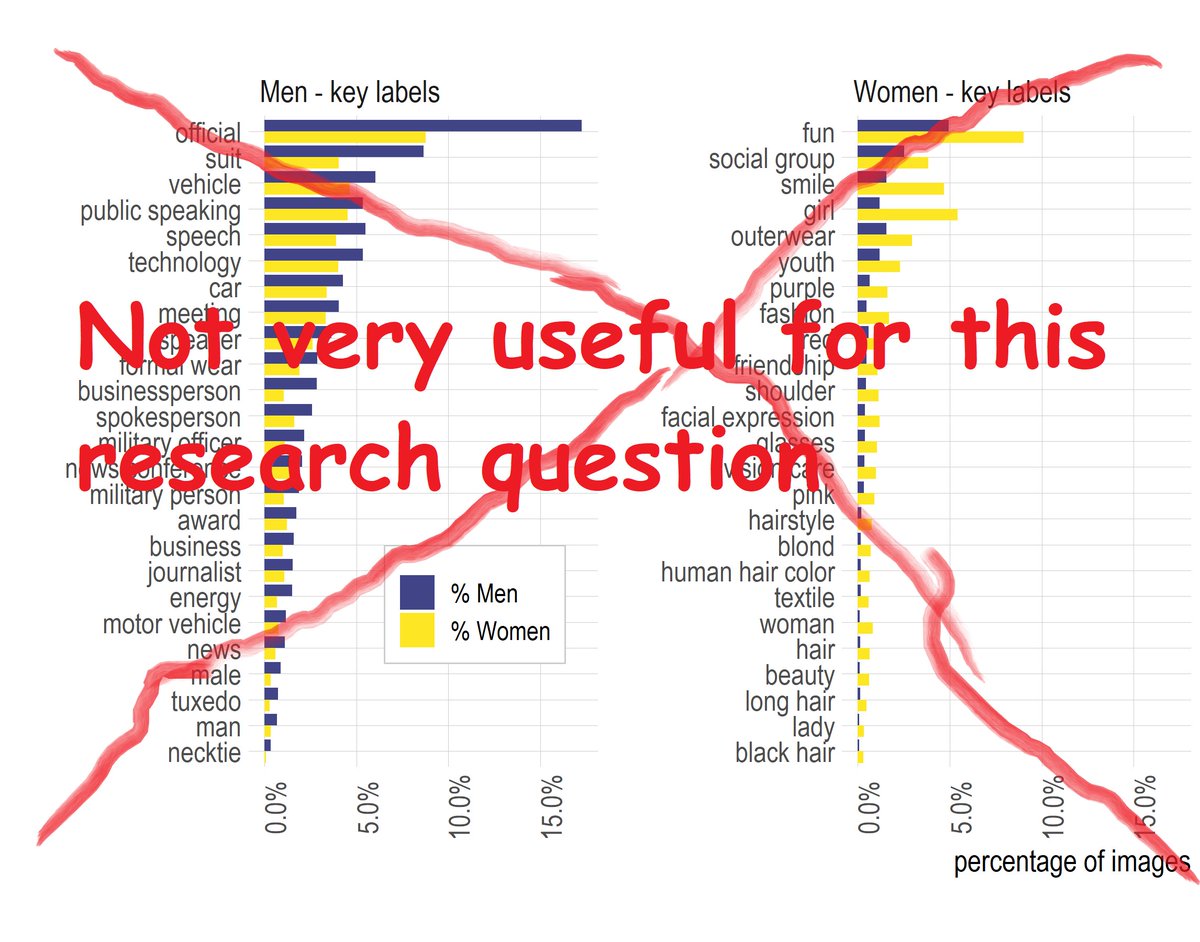

We examined 3 commercial systems: @GCPcloud Vision, @Azure Vision and @awscloud Rekognition. All of them produced biased output. One example: labels that reinforce stereotypes, where images of women are labeled with physical traits and those of men with high-status occupations.

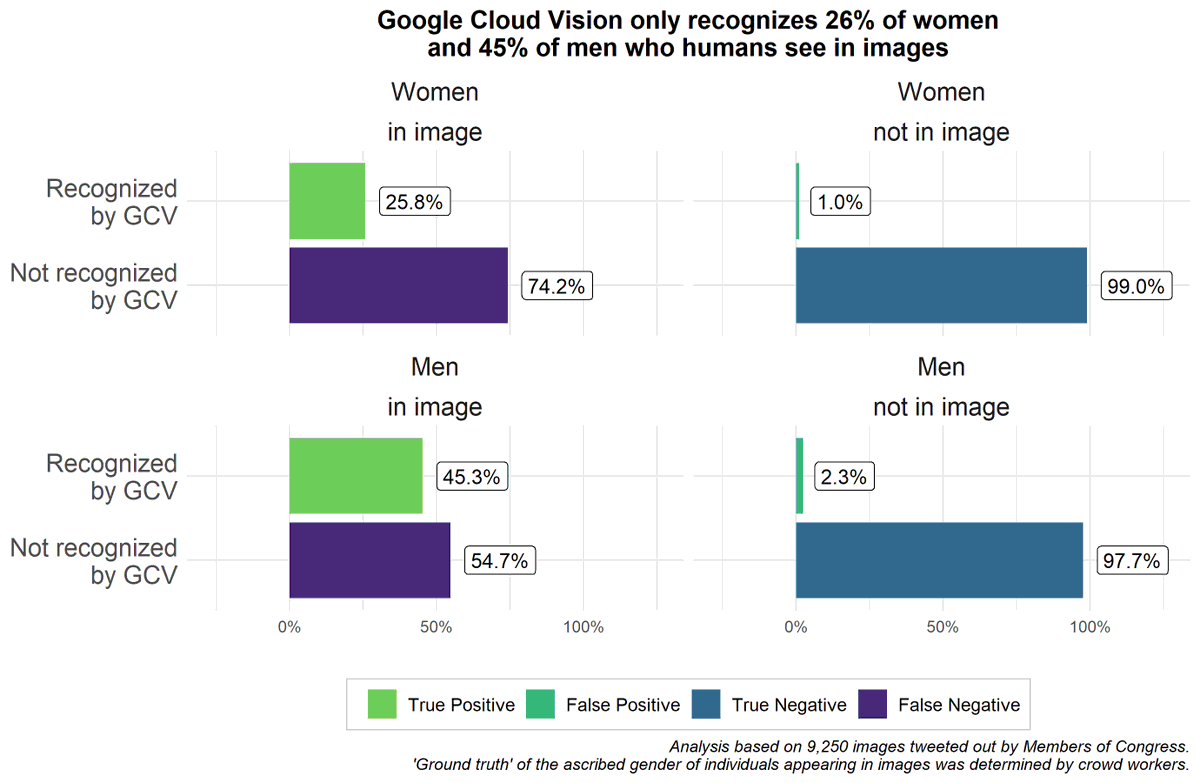

But there are other problems as well. Women in images are recognized at substantially lower rates than men. In other words, these algorithms often just don't "see" women, which is in line with research on facial recognition by @timnitGebru and @jovialjoy.

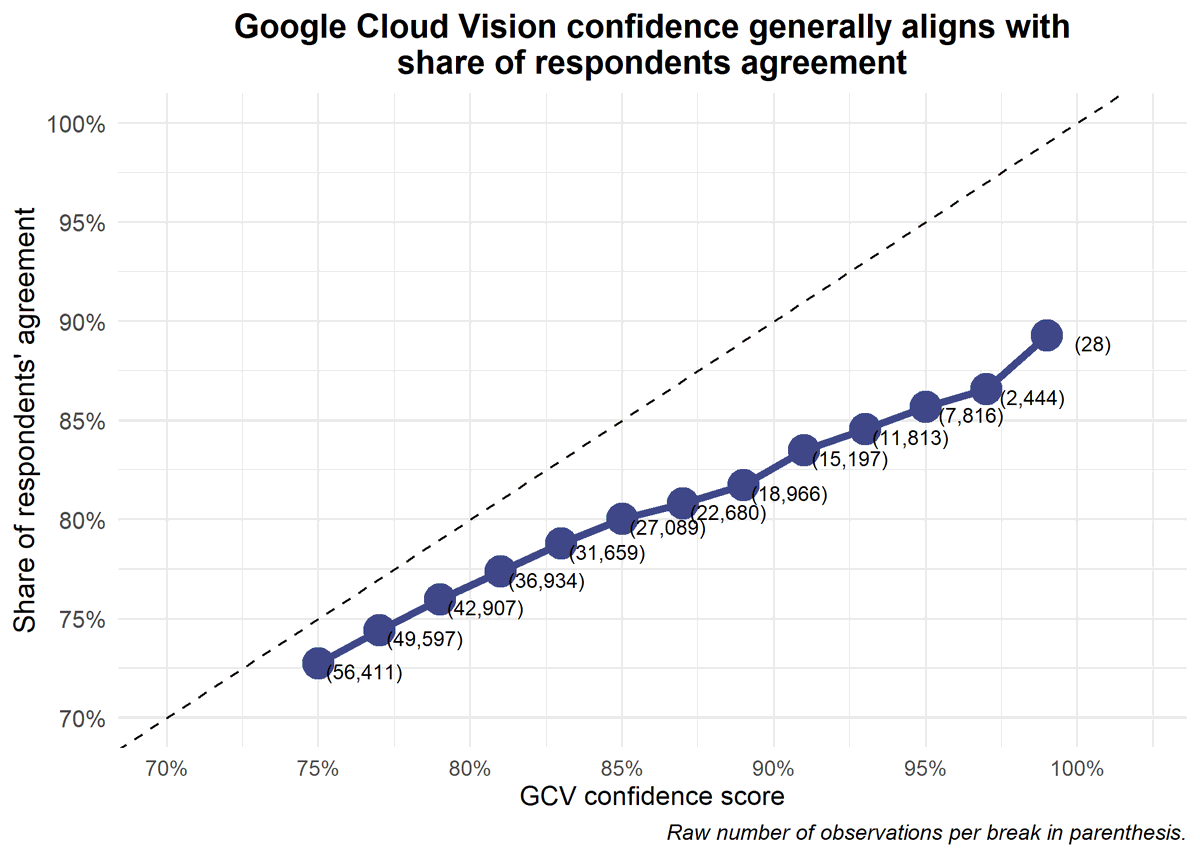

These findings cannot be explained by poor label quality. Using crowdsourced validation, we found that humans mostly agree with high-confidence labels of @GCPcloud Vision. Image labeling services have high precision but low recall: the labels they return are mostly right, but they leave out much of what is in the image. That is how output can be correct and biased at the same time.
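To make the precision/recall point concrete, here is a minimal Python sketch. The label sets are invented toy data, not results from our paper, and `precision_recall` is a hypothetical helper, not part of any vendor API or of imgrec. It shows how a system can return only correct labels and still give a skewed picture of an image.

```python
def precision_recall(predicted, truth):
    """Compare a system's labels against human-validated labels.

    precision: share of returned labels that humans agree with
    recall:    share of human-validated labels the system returned
    """
    predicted, truth = set(predicted), set(truth)
    true_positives = predicted & truth
    precision = len(true_positives) / len(predicted) if predicted else 0.0
    recall = len(true_positives) / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical example: labels that human coders accept for one image ...
human_labels = {"person", "suit", "official", "smile", "hairstyle"}
# ... and the subset an imaginary labeling service actually returns.
system_labels = {"smile", "hairstyle"}

precision, recall = precision_recall(system_labels, human_labels)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

In this toy case every returned label is right, but the service only reports appearance-related labels and skips the occupational ones. Which labels are missing matters as much as whether the returned ones are accurate.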

Image recognition systems are changing constantly and could still be useful for specific applications in research and industry. But for analyzing gender differences in political communication, they are pretty bad! If you use them, make sure to validate, validate, validate.

There's much more in our paper, including "pizza", "home decor" and many sociological insights on gender stereotypes, inequalities and AI systems.

Here's our replication material:

https://doi.org/10.7910/DVN/2CEYWV

And here you can find auxiliary R software: https://cschwem2er.github.io/imgrec/

Thank you @carlyrknight1, @BelloPardo, @stan_okl, @hjms and @jw_lockhart for being awesome! I learned a lot and had as much fun as you can have when studying biased AI systems. Finally, thanks to the #SICSS community, @chris_bail and @msalganik for bringing us all together.
