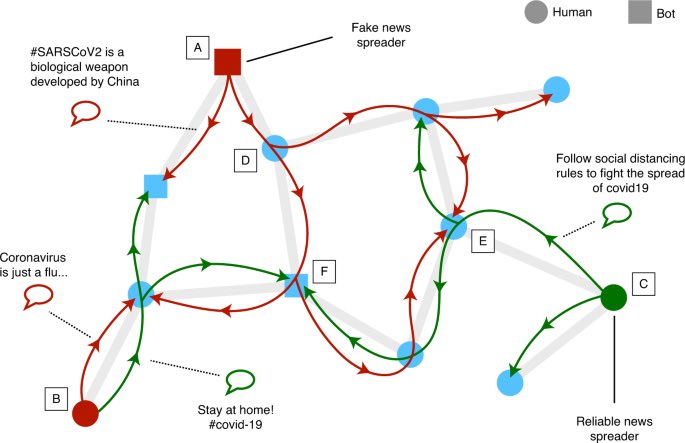

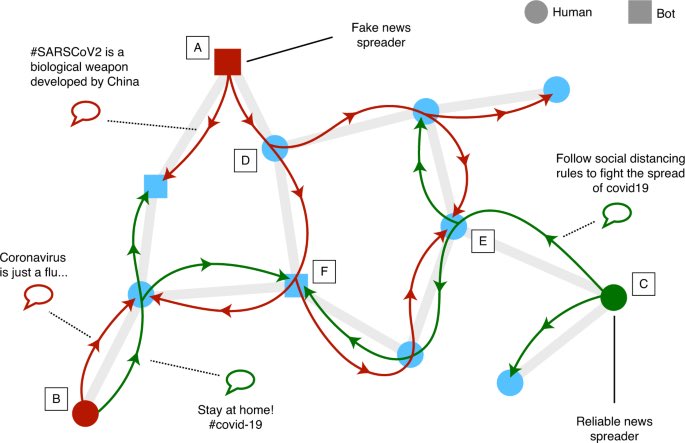

This is an interesting diagram from a paper about “infodemics” in response to epidemics.

I think the same applies to political disinformation.

Source: https://www.nature.com/articles/s41562-020-00994-6#Sec1

Diagram explained in my next tweet.

Human (circles) and non-human (squares) accounts spread news across a social network. Some users (A and B) create unreliable content, while others (C) create content informed by reliable sources.

When the topic attracts a lot of attention, the volume of info circulating makes it difficult to identify reliable sources. Some users (D) might be exposed to ONLY UNRELIABLE INFO, while others (E and F) might receive contradictory info and become uncertain what info to trust.

This is exacerbated when multiple spreading processes co-occur, and some users might be exposed multiple times to the same content or to different contents generated by distinct accounts.

Put simply, some people are not only bad at identifying poor info; they are ONLY SEEING FALSE INFO.

With all the info circulating, it is a *rare* case that someone sees ONLY RELIABLE INFO.

Let that sink in.

Heartbreaking https://www.latimes.com/california/story/2020-11-06/stockton-mayor-election-michael-tubbs-risks-defeat

Related to the diagram I shared about “infodemics” and how disinformation circulates, check out this thread about how we decide which stories we believe: https://twitter.com/operaqueenie/status/1309888276359118848
