NEW from a big collaboration at Google: Underspecification Presents Challenges for Credibility in Modern Machine Learning

Explores a common failure mode when applying ML to real-world problems. 1/14

https://arxiv.org/abs/2011.03395

We argue that underspecification is (1) an obstacle to reliably training models that behave as expected in deployment, and (2) really common in practice.

We demonstrate on examples from computer vision, NLP, electronic health records (EHR), medical imaging, and genomics. 2/14

An ML pipeline is underspecified when it can return many predictors with equivalently strong held-out performance in the training domain (iid performance).

Underspecification can explain the following phenomena: 3/14

(1) Models can behave badly even when the prediction problem is well-aligned with the application

(2) Models that predict well in the training domain don’t always fail in the same way in deployment. 4/14

Underspecified pipelines can’t tell the difference between predictors with identical iid performance. They return an arbitrarily chosen predictor from this class. 5/14
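
One rough way to write this down in symbols. The notation is illustrative and not taken from the paper: $\mathcal{F}$ is the set of predictors the pipeline can return, $\hat{R}_{\mathrm{iid}}(f)$ is held-out risk in the training domain, and $\epsilon$ is a small tolerance.

```latex
\mathcal{F}^{*} \;=\; \Bigl\{\, f \in \mathcal{F} \;:\; \hat{R}_{\mathrm{iid}}(f) \;\le\; \min_{f' \in \mathcal{F}} \hat{R}_{\mathrm{iid}}(f') + \epsilon \,\Bigr\}
```

Under this reading, the pipeline is underspecified when $\mathcal{F}^{*}$ contains many predictors that behave differently away from the training distribution. The pipeline returns some $f \in \mathcal{F}^{*}$, and iid evaluation alone cannot say which one.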

This ambiguity makes a model’s behavior in deployment sensitive to small choices that determine which iid-equivalent predictor the pipeline will return.

Choices as small as the random seed used in training can break the tie. 6/14
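
A toy sketch of the seed effect, assuming a setup that is not from the paper: a two-feature linear model where `x2` duplicates `x1` in the training domain, so gradient descent only pins down `w1 + w2` and the random initialization decides the rest. All names here (`make_data`, `train`, `mse`) are hypothetical helpers for illustration.

```python
import random

def make_data(n, rng, correlated):
    """Rows of (x1, x2, y) with y = x1. x2 copies x1 only when `correlated`."""
    rows = []
    for _ in range(n):
        x1 = rng.gauss(0.0, 1.0)
        x2 = x1 if correlated else rng.gauss(0.0, 1.0)
        rows.append((x1, x2, x1))
    return rows

def train(seed, rows, steps=200, lr=0.1):
    """Gradient descent on mean squared error for y_hat = w1*x1 + w2*x2."""
    rng = random.Random(seed)  # the seed only affects the initial weights
    w1, w2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(rows)
    for _ in range(steps):
        g1 = sum(2 * (w1 * x1 + w2 * x2 - y) * x1 for x1, x2, y in rows) / n
        g2 = sum(2 * (w1 * x1 + w2 * x2 - y) * x2 for x1, x2, y in rows) / n
        w1, w2 = w1 - lr * g1, w2 - lr * g2
    return w1, w2

def mse(w, rows):
    w1, w2 = w
    return sum((w1 * x1 + w2 * x2 - y) ** 2 for x1, x2, y in rows) / len(rows)

data_rng = random.Random(0)
train_rows = make_data(200, data_rng, correlated=True)    # training domain
iid_rows = make_data(200, data_rng, correlated=True)      # held-out, same domain
stress_rows = make_data(200, data_rng, correlated=False)  # x1/x2 correlation broken

seeds = range(5)
models = [train(s, train_rows) for s in seeds]
iid_errors = [mse(w, iid_rows) for w in models]
stress_errors = [mse(w, stress_rows) for w in models]

for s, a, b in zip(seeds, iid_errors, stress_errors):
    print(f"seed={s}  iid_mse={a:.2e}  stress_mse={b:.3f}")
```

Every seed reaches essentially zero iid error, yet the stress error differs from seed to seed, because any split with `w1 + w2 = 1` is equally good in the training domain. This is only a caricature of the deep-learning cases in the thread, but the tie-breaking mechanism is the same in spirit.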

We show that underspecification-induced sensitivity crops up in a lot of practical ML pipelines.

Predictors that perform near-identically on iid evals have much higher variability on stress tests that simulate practically relevant scenarios.

Selected examples: 7/14

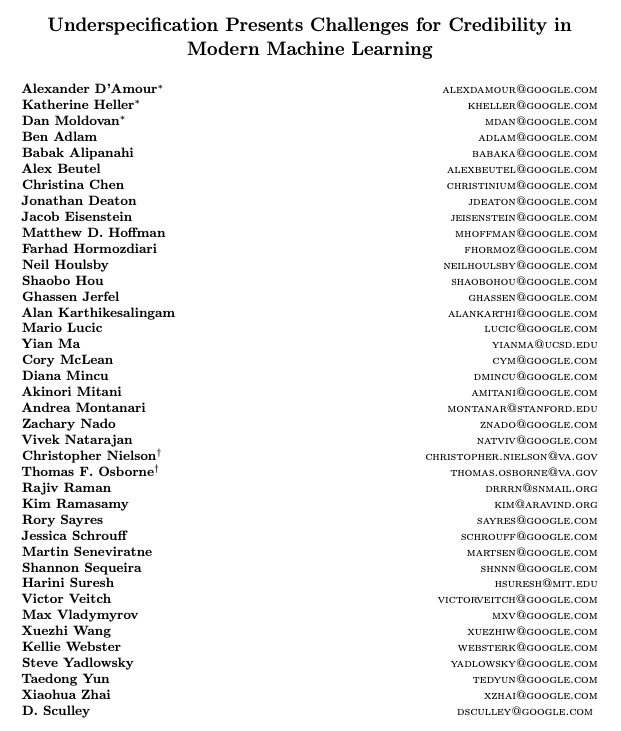

Image Classification: How well does an ImageNet-trained classifier with fixed iid performance perform on corrupted images (ImageNet-C)? Depends on the random seed. 8/14

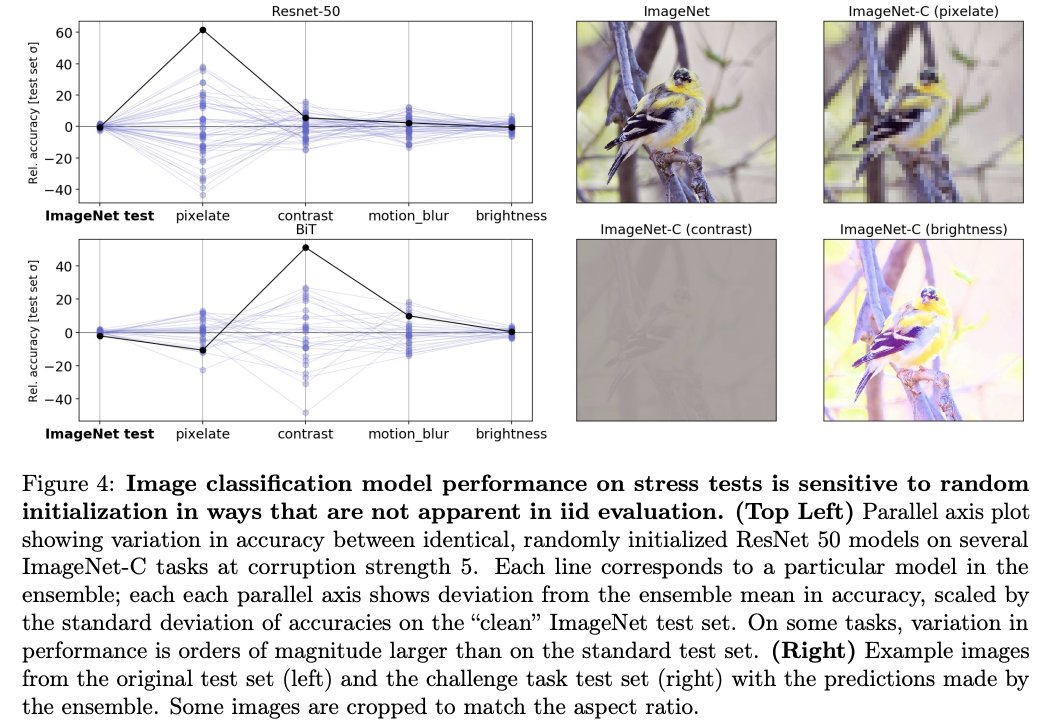

NLP: How much does a BERT-derived model with fixed validation performance depend on correlations between gender and profession? Depends on the random seeds. 9/14

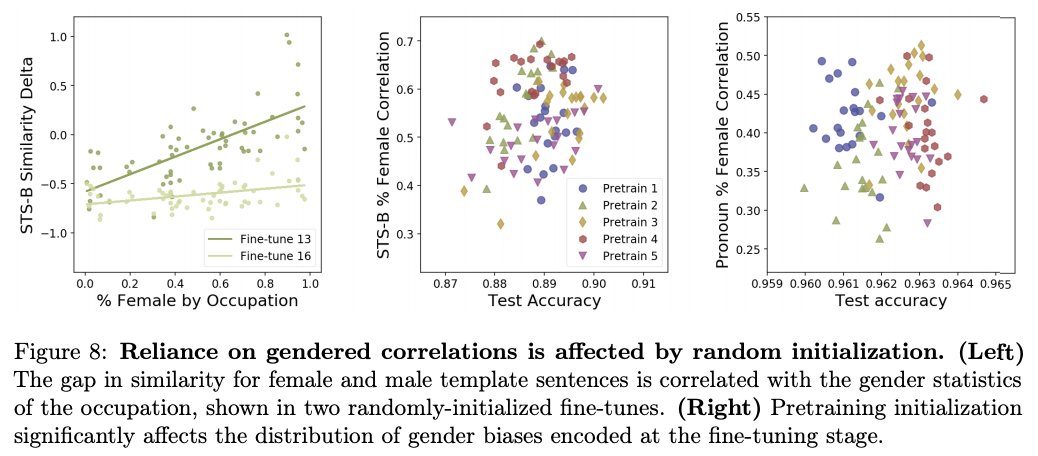

NLP: How much does a BERT-derived model with fixed validation performance depend on surface cues to solve natural language inference tasks? Depends on the random seeds. 10/14

Many more examples in the paper, including a number from medical applications that I won't present here to make sure they're read in full context.

Linear models in genomics, random feature models, and even an SIR model example to boot. 11/14

Implications: Choices we make for convenience probably have substantive implications. We should be stress testing any model meant for deployment. We need better ways to constrain models to satisfy real-world requirements. More! 12/14

This was a huge undertaking across a bunch of different teams at Google. There are few places where you can have such a variety of teams run experiments for a single effort.

Thanks to @kat_heller and @mdanatg for co-leading. 13/14
