I am thrilled to announce that "Trust Issues: Uncertainty Estimation Does Not Enable Reliable OOD Detection On Medical Tabular Data" w/ L. Meijerink and @CinaGiovanni got accepted to @NeurIPSConf's #ml4h workshop!

Short synopsis in thread below

(1)


(2) We test a range of uncertainty estimation techniques, including MC Dropout, Bayes-by-Backprop, deep ensembles and more, on a variety of scenarios in electronic health records (#ehr).

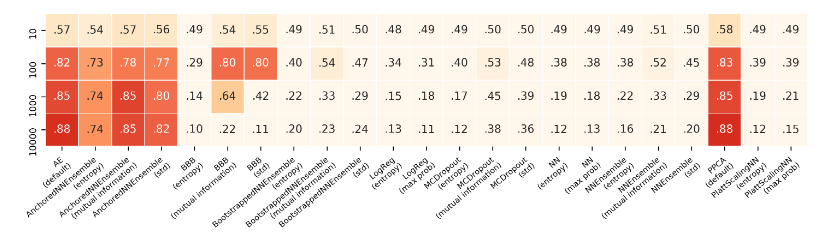
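
This shows roughly how these methods are usually turned into uncertainty scores. MC Dropout (several forward passes with dropout kept on) and deep ensembles (several independently trained networks) both produce a stack of predictive distributions per input. The NumPy sketch below summarises such a stack with three common metrics. It is a generic example, not the code used in the paper. The function name `uncertainty_scores` and the choice of metrics are assumptions.

```python
import numpy as np


def uncertainty_scores(probs: np.ndarray, eps: float = 1e-12) -> dict:
    """Summarise a stack of sampled class probabilities.

    probs has shape (n_samples, n_points, n_classes): one slice per
    MC Dropout forward pass or per deep-ensemble member (assumed layout).
    """
    mean_probs = probs.mean(axis=0)  # (n_points, n_classes)
    # Total uncertainty: entropy of the averaged predictive distribution.
    predictive_entropy = -np.sum(mean_probs * np.log(mean_probs + eps), axis=-1)
    # Aleatoric part: average entropy of the individual sampled distributions.
    expected_entropy = -np.sum(probs * np.log(probs + eps), axis=-1).mean(axis=0)
    # Epistemic part: mutual information, i.e. disagreement between samples.
    mutual_information = predictive_entropy - expected_entropy
    return {
        "predictive_entropy": predictive_entropy,
        "expected_entropy": expected_entropy,
        "mutual_information": mutual_information,
    }


# Two members that agree vs. two members that disagree (one data point each).
agree = np.array([[[0.9, 0.1]], [[0.9, 0.1]]])
disagree = np.array([[[0.9, 0.1]], [[0.1, 0.9]]])
print(uncertainty_scores(agree)["mutual_information"])
print(uncertainty_scores(disagree)["mutual_information"])
```

Mutual information is zero when all samples agree and grows as they disagree. This is why it is often read as the model-uncertainty part of the total.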

(3) First, we artificially corrupt data by scaling feature values by an increasing factor (y-axis). Except for some density estimation baselines, most models fail to flag the scaled data points as OOD.

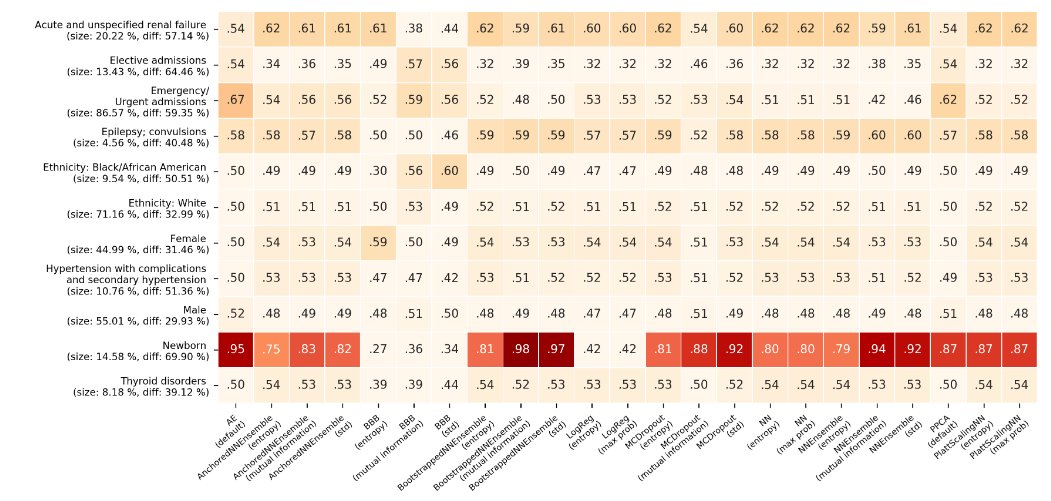
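
Here is a minimal sketch of this kind of corruption, assuming standardised numeric features held in a NumPy array. The thread does not say which features were scaled or by how much. The helper `scale_features`, the chosen column and the factors below are therefore hypothetical.

```python
import numpy as np


def scale_features(X: np.ndarray, feature_idx, factor: float) -> np.ndarray:
    """Return a copy of X where the selected feature column(s) are multiplied by factor."""
    X_corrupted = X.astype(float, copy=True)  # never modify the original test set
    X_corrupted[:, feature_idx] *= factor
    return X_corrupted


rng = np.random.default_rng(0)
X_test = rng.normal(size=(5, 3))  # hypothetical stand-in for standardised EHR features

# One corrupted copy of the test set per scaling factor (hypothetical values).
factors = [10, 100, 1000, 10000]
corrupted_sets = {f: scale_features(X_test, feature_idx=1, factor=f) for f in factors}
```

Each corrupted copy can then be scored with an uncertainty metric and compared against the clean test set.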

(4) Secondly, we define OOD patient groups based on diagnosis, admission type or demographics. Models are trained on the remaining data and then tested on whether they identify these unseen groups as OOD. Here, all models fail to do so, with the exception of newborns.
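
Below is one way to set up such a split, sketched with NumPy. The held-out group is removed from training entirely, and a separate in-distribution test split is kept for comparison. The helper `group_ood_split`, the 20% test fraction and the toy admission-type labels are hypothetical, not taken from the paper.

```python
import numpy as np


def group_ood_split(groups: np.ndarray, ood_group, test_frac: float = 0.2, seed: int = 0):
    """Split indices so that one patient group is held out entirely as OOD.

    Returns (train_idx, id_test_idx, ood_idx): the model never sees ood_group
    during training, and id_test_idx is a clean in-distribution test split.
    """
    rng = np.random.default_rng(seed)
    ood_idx = np.flatnonzero(groups == ood_group)
    remaining = rng.permutation(np.flatnonzero(groups != ood_group))
    n_test = int(round(test_frac * len(remaining)))
    return remaining[n_test:], remaining[:n_test], ood_idx


# Hypothetical admission-type labels for ten patients.
groups = np.array(["elective", "emergency", "newborn", "emergency", "urgent",
                   "newborn", "elective", "emergency", "urgent", "emergency"])
train_idx, id_test_idx, ood_idx = group_ood_split(groups, "newborn")
```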

(5) Lastly, we use two different #ehr data sets, train on one of them and treat the other as an OOD test set. Again, most tested methods fail to accurately identify the other data set as OOD. #mimic #eicu
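
Whether a method "identifies the other data as OOD" is commonly summarised with the AUROC. This compares uncertainty scores on the in-distribution test split with scores on the other data set. The sketch below computes it directly from pairwise comparisons. The function name `ood_auroc` and the example scores are made up for illustration. The thread does not state which metric the paper reports.

```python
import numpy as np


def ood_auroc(scores_id, scores_ood) -> float:
    """AUROC for separating OOD from in-distribution data by an uncertainty score.

    Higher scores should mean "more likely OOD". The result is the probability
    that a random OOD point scores higher than a random in-distribution point
    (ties count as 1/2), i.e. the normalised Mann-Whitney U statistic.
    """
    scores_id = np.asarray(scores_id, dtype=float)
    scores_ood = np.asarray(scores_ood, dtype=float)
    diff = scores_ood[:, None] - scores_id[None, :]  # all OOD/ID pairs
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


# Hypothetical uncertainty scores: test split of the training data set (ID)
# vs. the other data set (OOD).
scores_id = np.array([0.1, 0.2, 0.3, 0.4])
scores_ood = np.array([0.35, 0.5, 0.6])
print(ood_auroc(scores_id, scores_ood))  # 11 of 12 pairs ranked correctly
```

An AUROC of 0.5 means the scores cannot separate the two data sets at all. The pairwise version is quadratic in the number of points. For large test sets, a rank-based implementation such as scikit-learn's `roc_auc_score` is the more practical choice.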

(6) We hypothesize that these effects are due to open-ended "holes" of low uncertainty produced by all of the discussed methods, which appear even in regions of the feature space where no training data has been observed.
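
The toy illustration below (not from the paper) shows one simple way such low-uncertainty regions can arise. A logistic regression fitted on two 2-D blobs becomes more confident the further a point lies from its decision boundary. This includes points far outside anything it was trained on. The data, training settings and query points are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy 2-D training data: two Gaussian blobs centred at (-1, 0) and (1, 0).
X = np.vstack([rng.normal([-1.0, 0.0], 0.5, size=(100, 2)),
               rng.normal([1.0, 0.0], 0.5, size=(100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

# Plain logistic regression fitted by batch gradient descent.
w, b = np.zeros(2), 0.0
for _ in range(2000):
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    w -= 0.1 * X.T @ (p - y) / len(y)
    b -= 0.1 * np.mean(p - y)


def entropy(x: np.ndarray) -> float:
    """Binary predictive entropy (in nats) of the fitted model at point x."""
    p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
    p = np.clip(p, 1e-12, 1 - 1e-12)  # avoid log(0)
    return float(-(p * np.log(p) + (1 - p) * np.log(1 - p)))


boundary_entropy = entropy(np.array([0.0, 0.0]))  # between the blobs: uncertain
far_entropy = entropy(np.array([50.0, 0.0]))      # far outside the data: confident
print(boundary_entropy, far_entropy)
```

The region of near-zero entropy is unbounded along the direction of `w`. This loosely mirrors the open-ended "holes" described above.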

(7) Future work therefore lies in finding a theoretical justification for this behavior and in applying more powerful density estimation or hybrid models to this problem.
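
The thread does not name the density estimation baselines. As a hedged example of the simplest kind, the sketch below fits a single multivariate Gaussian to the training data. It uses the squared Mahalanobis distance as the OOD score, which is a monotone transform of the negative log-density. The class name `GaussianDensityOOD`, the ridge term and the synthetic data are assumptions.

```python
import numpy as np


class GaussianDensityOOD:
    """Fit one multivariate Gaussian to training data; score = squared Mahalanobis distance."""

    def fit(self, X: np.ndarray) -> "GaussianDensityOOD":
        self.mean_ = X.mean(axis=0)
        cov = np.cov(X, rowvar=False)
        # Small ridge keeps the covariance invertible for highly correlated features.
        self.precision_ = np.linalg.inv(cov + 1e-6 * np.eye(X.shape[1]))
        return self

    def score(self, X: np.ndarray) -> np.ndarray:
        """Higher score = lower density under the fitted Gaussian = more likely OOD."""
        centred = X - self.mean_
        return np.einsum("ij,jk,ik->i", centred, self.precision_, centred)


rng = np.random.default_rng(0)
X_train = rng.normal(size=(500, 4))  # hypothetical standardised features
X_test = rng.normal(size=(100, 4))
X_scaled = X_test.copy()
X_scaled[:, 0] *= 100  # same kind of corruption as in (3)

detector = GaussianDensityOOD().fit(X_train)
print(detector.score(X_test).mean(), detector.score(X_scaled).mean())
```

The score grows with distance from the training mean, so inputs scaled far away from the data receive high OOD scores. A single Gaussian is a weak density model for real EHR data. The more powerful density models mentioned above would take its place.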

(End of thread)
