This is such weird framing, as if AI is one coherent, knowable, predictable thing: “I’m happy to hear there’s such a programme and AI is a great way to do it,” he said. “Why not use our most advanced technologies to get at these important questions.” https://on.ft.com/3mM2ErM

Surely, “What is the most effective way to solve this problem? Will it be effective? Can we test and replicate it and have certainty? What unforeseen consequences will it have? Is it entrenching bias?” are more important to ask than “Are you using AI?”, “Cooooool!”

AI discourse is so weird. Imagine if there were newspaper articles that said: “We’re using maths to solve this problem!” “Cool! Maths is cool! I’m glad we’re using maths to solve this critical problem!”

See also, “We’re doing something we’ve never done before! Let’s use some untested technologies!”

Anyway, what is interesting about this - other than the lack of critical thinking of those being invited to comment - is that it will enable large numbers of people to report adverse reactions to a vaccine. Use of language, social confidence, doubt will all play a part.

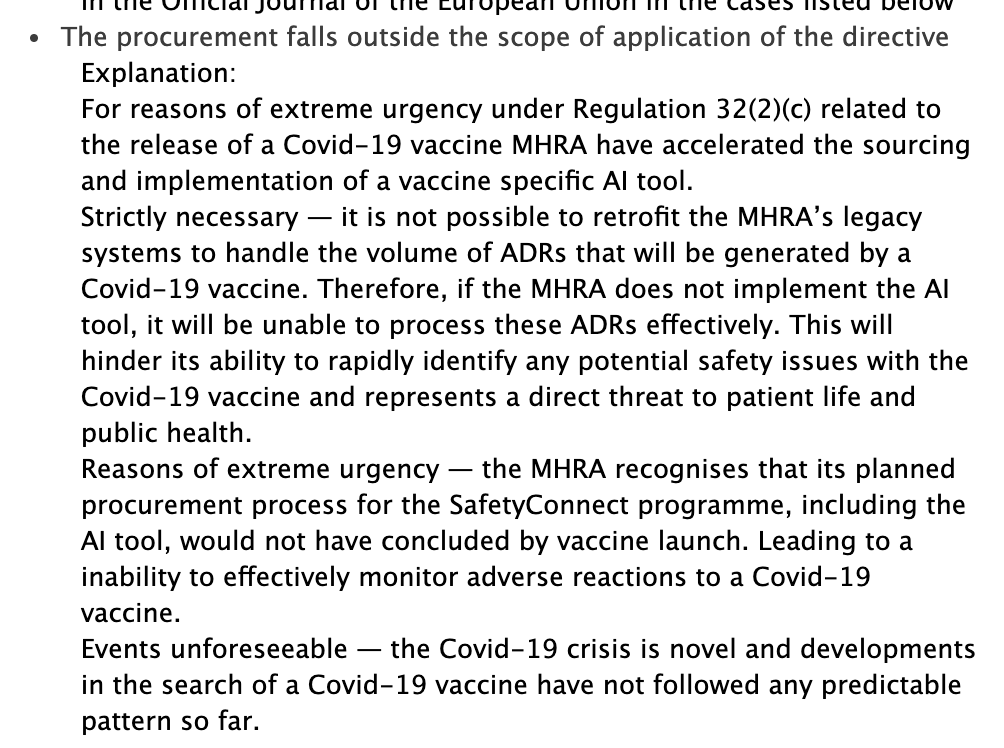

According to that article - and I’m getting a lot of mileage out of it but it’s a humdinger - the MHRA says, not using AI would “hinder [the trials] ability to rapidly identify any potential safety issues . . . and represents a direct threat to patient life and public health”.

Presumably it means: we need to automate aspects of this because we don’t have enough skilled humans to do the job in a timely and accurate manner. They are saying using AI will save lives - perhaps - but will it save all lives equally, and who will know if it doesn’t, and how?

The actual purpose of the project: “The UK drugs regulator is planning to use artificial intelligence to sift through the “high volume” of reports of adverse reactions to Covid-19 vaccines in the coming months, as it prepares for an inoculation programme of groundbreaking scale.”

It is absolute nonsense that fear and lack of public understanding are repeatedly framed as “holding back innovation” if the use of AI is framed this way. It could mean anything at all, from sorting empty form fields through to scoring the validity of responses.

Also, anyone who has poked around in NHS data will be

at this: “Kate Bingham ...said the use of AI was “just what the MHRA should be doing”, adding that the UK is “incredibly well set up to do this given we all have NHS records which are electronic and connected”.”

Anyway, sorry, I realise this is an unacceptable number of tweets about one thing at such an early hour, *but* the article does link to the contract award notice (I’m not sure what that actually means, but anyway) https://ted.europa.eu/udl?uri=TED:NOTICE:506291-2020:TEXT:EN:HTML&src=0

"the MHRA recognises that its planned procurement process for the SafetyConnect programme, including the AI tool, would not have concluded by vaccine launch. Leading to a inability to effectively monitor adverse reactions to a Covid-19 vaccine."

I would have liked the person running the vaccine prog to talk about the importance of safety and diligence under pressure in an under-resourced system responding at speed, not the fact that AI is super cool. Leaders don’t need to understand everything, but they must ask good Qs.

