Folks, stop refreshing the forecasts. The comfort you seek isn't there, and can't be. The excessive uncertainty this year, especially about turnout in a pandemic and which votes will count, makes these models structurally less useful than before. New piece: https://www.nytimes.com/2020/11/01/opinion/election-forecasts-modeling-flaws.html

Check out this thread for a sophisticated discussion of what it means to "predict" an essentially unrepeatable event, one that is rare to boot: it happens once every four years, each time under very different conditions. This time it's amid a pandemic. https://twitter.com/SimonDeDeo/status/1322770550352216064

Before anyone jumps on this: this is not a polemic against FiveThirtyEight's or anyone else's modeling choices. As I say in the piece, I was hopeful early on that modeling would cut down on misleading horse-race coverage. I even wrote a piece on that! But that's not what's happened.

For weather models, we have a detailed, fine-grained grasp of the underlying dynamics, a mountain of data, and chances to test our predictions every day. Presidential elections? Numbers fly around every four years, then lots of debate and no conclusion. Because it *cannot* conclude.

Electoral forecast presentation has gotten better since 2016, but public understanding is still not there. That probability number is not communicating this fact: as things stand, the models do not, and cannot, rule out either party winning. That's the key thing people need to know.

Never fails. Yeah, you got me. I don't understand probabilities. https://twitter.com/sfspaulding/status/1322979579204636677

As an addendum, for @insight, I wrote about why I changed my mind on this, and why I was wrong in 2012 when I defended modeling as Nate Silver was being trashed by pundits. The pundits were wrong for sure. But things did not turn out the way I had hoped. https://zeynep.substack.com/p/stop-refreshing-that-forecast

Example from today: stories about polls and predictions do great on the "most read" pages. I get it. A lot is at stake. But there is just no way for forecasts to deliver what we seek, and as 2016 showed, they can even do harm if we rely on them and assume "likely" means certain.

The number of comments like this, often from people who understand models and probability, really makes my case. If we shouldn't be surprised by *either* outcome, that just reinforces my point. And here's why people "treat probabilities as forecasts"! https://twitter.com/liammannix/status/1323025081363099648

People keep asking if I think the forecasts are right or wrong. But you cannot ask that question of them. They can't be right or wrong. They weren't wrong in 2016. They won't be wrong now. That's their nature. But the focus on prediction *can* affect the outcome. That's the danger.

People are in my inbox about this, whether they favor Biden or not. I never said that the models are wrong. It's that they CANNOT be right OR wrong. The model probabilities you are seeing are NOT making that kind of prediction, and, crucially, the unknown unknowns are not factored in.

If you're looking for a distraction: excellent academic article from @StatModeling, @JessicaHullman, @CBWlezien & @gelliottmorris on communicating about forecasts: what they are, and what they aren't. Again: the forecasts aren't wrong! Or right! https://twitter.com/JessicaHullman/status/1322981825040523271

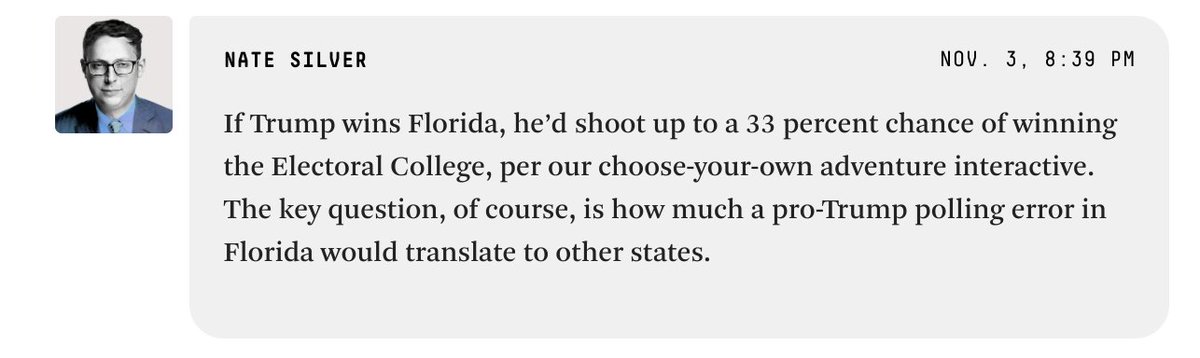

Without making claims about any other state or the outcome (I don't know!): polling has clearly missed Florida, at least. Polling in a year like this carried extra uncertainty. And it's also plausible that the forecasts affected or energized voters differently. https://twitter.com/samstein/status/1323786156551581697

This is how fragile those forecasts were. One state has a polling error, and the forecast now says Trump has a one-in-three chance of winning. If errors are broadly correlated (not just Miami Cubans), the odds go up more. Big numbers don't imply big certainty. https://fivethirtyeight.com/live-blog/2020-election-results-coverage/
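A rough way to see why correlation matters so much: this Python sketch simulates polling errors in three hypothetical states where the front-runner leads by 2.5, 3 and 3.5 points, with a 4-point error standard deviation in each. The normal-error model, the margins, the error size, and the function name `sweep_probability` are all assumptions for illustration, not FiveThirtyEight's or anyone else's actual model.

```python
import random


def sweep_probability(margins, error_sd, shared_fraction, trials=50_000, seed=0):
    """Estimate the chance the trailing candidate wins every listed state.

    Illustrative toy model only (hypothetical, not any real forecaster's method).
    margins: front-runner's polling lead in each state, in points.
    error_sd: standard deviation of each state's total polling error.
    shared_fraction: share of error variance common to all states
        (0.0 = independent errors, 1.0 = perfectly correlated errors).
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    # Split each state's error variance into a shared (national) part
    # and a state-specific part; the total variance stays error_sd ** 2.
    shared_sd = error_sd * shared_fraction ** 0.5
    local_sd = error_sd * (1.0 - shared_fraction) ** 0.5
    sweeps = 0
    for _ in range(trials):
        shared = rng.gauss(0.0, shared_sd)  # one draw hits every state at once
        # The trailing candidate wins a state when the error wipes out the lead.
        if all(m + shared + rng.gauss(0.0, local_sd) < 0 for m in margins):
            sweeps += 1
    return sweeps / trials


margins = [2.5, 3.0, 3.5]  # hypothetical leads, in percentage points
print("independent errors:", sweep_probability(margins, 4.0, 0.0))
print("correlated errors: ", sweep_probability(margins, 4.0, 1.0))
```

Under these assumptions, independent errors let the trailing candidate sweep all three states only about 1% of the time; with fully shared errors it happens roughly 19% of the time, the same as losing the safest of the three states alone. Real errors fall somewhere in between, which is exactly why one state's miss can move the whole forecast.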

Nothing. Before the election: organize, donate, vote. The forecasts are fragile for so many reasons; plus, this year has a pandemic, and now we likely have court battles. https://twitter.com/CorbinSupak/status/1323811695760912385

More model uncertainty! The NYT needle (another model!) may be overcorrecting for Trump because he did better than expected in FL: the model assumes the same in NC and GA, but if FL is only because of Miami Cubans, that won't be the case. Wait for the counts. ¯\\_(ツ)_/¯ https://twitter.com/Nate_Cohn/status/1323811540018077696

Correcting my own garbled tweet! The 80% chance of Trump winning both is model overshoot, say Nates Cohn and Silver. Errors are sometimes correlated & sometimes not. My error: it doesn't pertain to the presidential chances (which NYT says would be equal). https://twitter.com/NateSilver538/status/1323813664164585474

To people asking: I can't predict this. I don't know how anyone can. My prediction is that we will not have a definitive answer tonight (many key states will be counting into tomorrow, and then there may well be court cases).

Yep. The polls were systematically wrong *again*. The forecasts, which created a sense that a Biden win was certain because their fragility to even tiny shifts is not well understood, also likely affected the outcome: more R turnout, more ticket-splitting. https://twitter.com/Redistrict/status/1323976110510657539

"Ticket-splitting" might have come not just from voters who chose Biden at the top of the ticket: I know of people who could not vote for Trump but did not want a Democratic trifecta, so they voted for, and worked hard for, R senators. Also Maine: some Collins voters may have thought she'd in a Dem Senate/Prez setup.

Look, if I were betting, I'd bet Biden would win, probably comfortably. He might still win, but narrowly. But I was *uncertain*. I don't think we can model rare events well. We can't poll well anymore, let alone during a pandemic. But uncertainty isn't what forecasts communicate.

Yes. I know electoral forecasts are one thing among many, but they're part of a broader pattern where we focus too much on the wrong things. We just need to do better at acknowledging when we honestly don't know, and when predicting is beside the point. https://twitter.com/spicerjason/status/1324036088177004544

Updated my pre-election op-ed on the case for ignoring forecasts. I know, I know. But it matters for the future. We can't poll with enough certainty and precision; we can't build good models of events that happen only once every four years; and the focus on forecasts distorts the process. https://www.nytimes.com/2020/11/01/opinion/election-forecasts-modeling-flaws.html